AI Research

World AI race faces hurdle as effective altruism movement opposes Trump

FIRST ON FOX: President Donald Trump’s push to establish “America’s global AI dominance” could run into friction from an unlikely source: the “effective altruism” movement, a small but influential group that has a darker outlook on artificial intelligence.

Trump signed an executive order earlier this year titled “Removing Barriers to American Leadership in Artificial Intelligence.” This week he met with top technology industry leaders, including Mark Zuckerberg, Bill Gates and others, at the White House for meetings in which AI loomed large. However, not all of the industry’s leaders share the president’s vision for American AI dominance.

Jason Matheny, a former senior Biden official who currently serves as the CEO of the RAND Corporation, is a leader in the effective altruism movement, which, among other priorities, seeks to regulate the development of artificial intelligence with the goal of reducing its risks.

Effective altruism is a philanthropic social movement whose proponents say they aim to maximize the good they can do in the world by giving to what they calculate are the most effective charities and interventions. The movement includes powerful donors from many sectors, including technology, who pour funds into fighting what the group sees as existential threats, among them artificial intelligence.

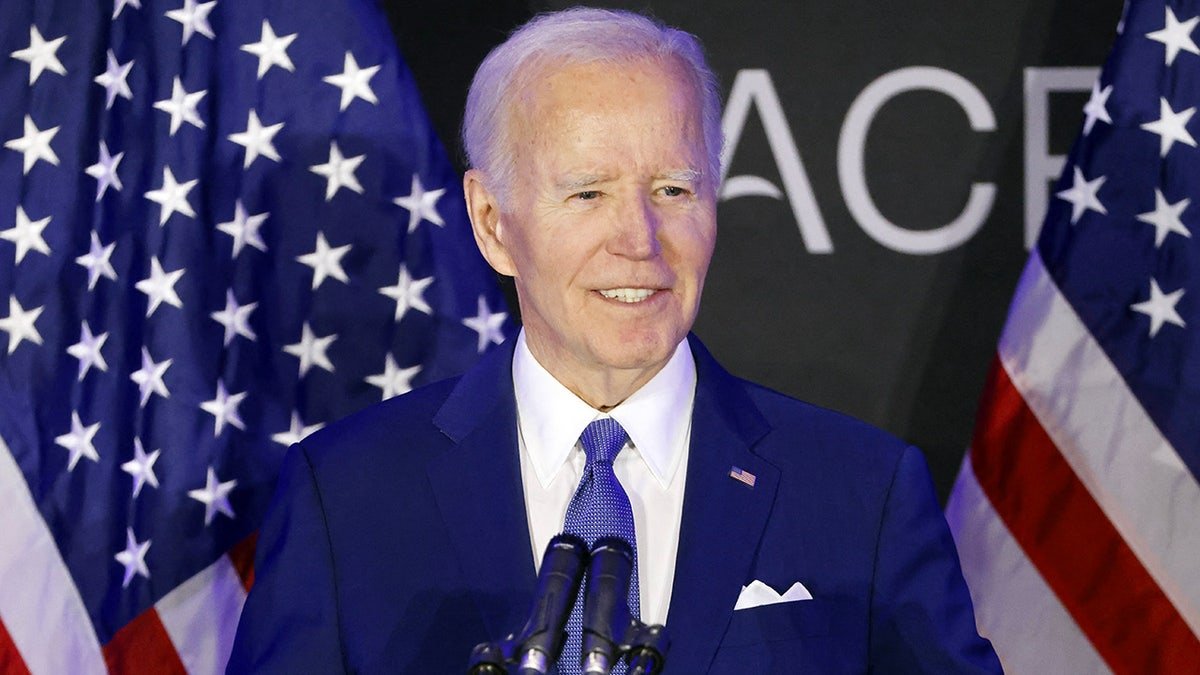

US President Donald Trump delivers remarks at the “Winning the AI Race” AI Summit at the Andrew W. Mellon Auditorium in Washington, DC, on July 23, 2025. (Getty)

Some in the movement have also pledged to give away a portion of their income, while others have argued about the morality of earning as much money as possible in order to give it away.

A former Defense Department official familiar with the industry’s leaders told Fox News Digital that since a 2017 speech at an effective altruism forum in which he laid out his vision, Matheny has “been very deliberate about inserting personnel who share his AI-doomerism worldview” into government and government contractor roles.

“Since then, he has made good on every single one of his calls to action to explicitly infiltrate think tanks, in-government decision makers, and trusted government contractors with this effective altruism, AI kind of doomsayer philosophy,” the official continued.

A RAND Corporation spokesperson pushed back against this label and said that Matheny “believes a wide range of views and backgrounds are essential to analyzing and informing sound public policy. His interest is in encouraging talented young people to embrace public service.”

The spokesperson added that viewing AI as an “existential threat” is “not the lens” through which the organization approaches AI, but said, “Our researchers are taking a broad look at the many ways AI is and will impact society – including both opportunities and threats.”

In his 2017 speech, Matheny discussed his vision of influencing the government from the inside and outside to advance effective altruistic goals.

“The work that I’ve done at IARPA (Intelligence Advanced Research Projects Activity) has convinced me that there’s a lot of low-hanging fruit within government positions that we should be picking as effective altruists. There are many different roles that effective altruists can have within government organizations,” Matheny told an effective altruism forum in 2017, before going on to explain how even “fairly junior positions” can “wield incredible influence.”

Jason Matheny is an ex-appointee from former President Joe Biden’s administration, where he served in multiple roles between 2021 and 2022. (Tannen Maury/AFP via Getty Images)

Matheny went on to explain the need for “influence” on the “outside” in the form of contractors working for government agencies specialized in fields like biology and chemistry along with experts at various think tanks.

“That’s another way you can have an influence on the government,” Matheny said.

Matheny advanced the philosophy’s ideals in the Biden White House in his roles as deputy assistant to the president for technology and national security, deputy director for national security in the Office of Science and Technology Policy and coordinator for technology and national security at the National Security Council.

According to reporting by Politico, RAND officials were involved in writing former President Joe Biden’s 2023 executive order “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” The order mirrored many effective altruist goals regarding AI, such as the idea that “harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks.”

However, a RAND spokesperson told Fox News Digital that Matheny had “no role” in crafting the Biden EO, but said RAND’s “researchers did provide technical expertise and analysis to inform the EO in response to requests from policymakers.”

The order read that “responsible AI use has the potential to help solve urgent challenges while making our world more prosperous, productive, innovative, and secure. At the same time, irresponsible use could exacerbate societal harms such as fraud, discrimination, bias, and disinformation; displace and disempower workers; stifle competition; and pose risks to national security.”

President Trump has made AI dominance a key part of his agenda. (Omar Marques/SOPA Images/LightRocket/CHARLY TRIBALLEAU/AFP)

The order’s solution was to increase regulation of AI development and add new government reporting requirements for companies developing the technology. Many in the industry saw this as government overreach that stifled innovation and hurt the U.S.’s ability to compete with countries like China.

The order has since been revoked by Trump’s AI order, signed in the first few days of his second administration. Even so, Matheny and RAND, the public policy research organization he leads, have continued to push their vision for AI regulation and to warn about its potential pitfalls.

RAND has posted on social media in recent months warning that AI will “fundamentally reshape the economics of cybersecurity” and that the “growing use of AI chatbots for mental health support means society is ‘deploying pseudo-therapists at an unprecedented scale.'”

Semafor reported earlier this year that the Trump administration was butting heads with Anthropic, a top artificial intelligence company with ties to the EA movement and the Biden administration, on AI policy.

“It’s hard to tell a clean story of every single actor involved, but at the heart, the Doomerism community that Jason’s really at the heart of, what they are really concerned with is they truly believe about a runaway super-intelligent model that takes over the world like a Terminator scenario,” the former DOD official told Fox News Digital, adding that effective altruists’ fear that AI is an “existential threat” has driven a push that is “restrictive” to the “growth of the technology.”

“With respect to the Trump administration’s AI policies, much RAND analysis is focused on key parts of the President’s AI Action Plan, including analysis we’ve done on AI evaluations, secure data centers, energy options for AI, cybersecurity and biosecurity,” the RAND spokesperson said.

“Mr. Matheny appreciates that the Trump Administration may have different views than the prior administration on AI policy,” the spokesperson continued. “He remains committed, along with RAND, to contributing expertise and analysis to helping the Trump Administration shape policies to advance the United States’ interests.”

New AI study aims to predict and prevent sinkholes in Tennessee’s vulnerable roadways

CHATTANOOGA, Tenn — A large sinkhole that appeared on Chattanooga’s Northshore after last month’s historic flooding is just the latest example of roadway problems that are causing concern for drivers.

But a new study looks to use artificial intelligence (AI) to predict where these sinkholes will appear before they do any damage.

“It’s pretty hard to go about a week without hearing somebody talking about something going wrong with the road.”

According to the American Geosciences Institute, sinkholes can have both natural and artificial causes.

However, they tend to occur where water can dissolve bedrock, which makes Tennessee one of the more sinkhole-prone states in the country.

Brett Malone, CEO of UTK’s research park, says:

“Geological instability, the erosions, we have a lot of that in East Tennessee, and so a lot of unsteady rock formations underground just create openings that then eventually sort of cave in.”

Sinkholes like the one on Heritage Landing Drive have become a serious headache for drivers in Tennessee.

Nearby residents say it has posed safety issues for their neighborhood.

Now, UTK says it is partnering with tech company TreisD to find a statewide solution.

The company’s AI technology could help predict where a sinkhole forms before it actually happens.

“You can speed up your research. So since we’ve been able to now use AI for 3D images, it means we get to our objective and our goals much faster.”

TreisD founder Jerry Nims says the company’s AI algorithm uses those 3D images to study sinkholes in the hope of learning ways to prevent them.

“If you can see what you’re working with, the experts, and they can gain more information, more knowledge, and it’ll help them in their decision making.”

We asked local residents, like Hudson Norton, how they would feel about a study like this.

“If it’s helping people and it can save people, then it sounds like a good use of AI, and responsible use of it, more importantly.”

Chattanooga officials say the sinkhole on Heritage Landing Drive could take up to 6 months to repair.

New Study Reveals Challenges in Integrating AI into NHS Healthcare

Implementing artificial intelligence (AI) within the National Health Service (NHS) has emerged as a daunting endeavor, revealing significant challenges rarely anticipated by policymakers and healthcare leaders. A recent peer-reviewed qualitative study conducted by researchers at University College London (UCL) sheds light on the complexities involved in the procurement and early deployment of AI technologies tailored for diagnosing chest conditions, particularly lung cancer. The study surfaces amidst a broader national momentum aimed at integrating digital technology within healthcare systems as outlined in the UK Government’s ambitious 10-year NHS plan, which identifies digital transformation as pivotal for enhancing service delivery and improving patient experiences.

As artificial intelligence gains traction in healthcare diagnostics, NHS England launched a substantial initiative in 2023 to introduce AI tools across 66 NHS hospital trusts, backed by £21 million in funding. The project aimed to establish twelve imaging diagnostic networks that could give more patients access to specialist opinions. The AI tools were expected to prioritize urgent cases for specialist review and to help healthcare professionals by flagging abnormalities in radiological scans, tasks that could ease the burden on overworked NHS staff.

However, the research shows that the rollout of AI systems did not proceed as quickly as NHS leadership had anticipated. Drawing on interviews with hospital staff and AI suppliers, the UCL team found that procurement took far longer than expected, running four to ten months beyond initial schedules. By June 2025, 18 months after the anticipated completion date, roughly a third of the participating hospital trusts had yet to bring these AI tools into clinical practice. The delay points to a critical gap between the technological promise of AI and the operational realities facing healthcare institutions.

Compounding these challenges, clinical staff already carrying high workloads found it hard to engage fully with the AI project. Many were skeptical of the technology, citing concerns about how it would fit into existing clinical workflows and whether new AI tools would be compatible with the aging and widely varying IT infrastructure across NHS hospitals. The researchers noted that many frontline workers struggled to see the full potential of AI, especially where procurement and implementation processes were overly complicated.

In addition to identifying these hurdles, the study underscored several factors that proved beneficial in the smooth embedding of AI tools. Enthusiastic and committed local hospital teams played a significant role in facilitating project management, and strong national leadership was critical in guiding the transition. Hospitals that employed dedicated project managers to oversee the implementation found their involvement invaluable in navigating bureaucratic obstacles, indicating a clear advantage to having directed oversight in challenging integrations.

Dr. Angus Ramsay, the study’s first author, said the investigation holds lessons for the UK’s push to digitize the NHS. The study calls for a recalibrated approach to AI implementation, one that takes account of existing pressures within the healthcare system. Ramsay noted that AI technologies, while potentially transformative, should not be expected to resolve deep-rooted problems in healthcare services as readily as policymakers might wish.

Throughout the evaluation, which ran from March to September of last year, the research team analyzed how different NHS trusts approached AI deployment and their varied areas of focus, such as X-ray and CT scanning applications. They observed both enthusiasm and reluctance among staff to adopt the new technology, with senior clinicians expressing reservations that accountability and decision-making could be handed over to AI systems without adequate human oversight. This skepticism highlighted an urgent need for comprehensive training and guidance, as existing onboarding processes often failed to address the many questions and concerns staff raised.

The analysis conducted by the UCL-led research team revealed that initial challenges, such as the overwhelming amount of technical information available, hampered effective procurement. Many involved in the selection process struggled to distill and comprehend essential elements contained within intricate AI proposals. This situation suggests the utility of establishing a national shortlist of approved AI suppliers to streamline procurement processes at local levels and alleviate the cognitive burdens faced by procurement teams.

In some cases, staff enthusiasm also served as a counterbalance to the initial skepticism. The collaborative nature of the imaging networks was particularly striking: team members freely exchanged knowledge and resources, building collective expertise as they worked through implementation. In many hospitals, staff committed to fostering collaboration across departments made a substantial difference, supporting mutual learning as AI technologies were integrated.

One of the study’s most pressing findings was that AI is unlikely to be a “silver bullet” for the many problems confronting the NHS. Differences in clinical requirements among the many organizations that make up the NHS create an inherently complicated landscape for introducing diagnostic tools. Professor Naomi Fulop, a senior author of the study, emphasized that this diversity of clinical needs makes it difficult to implement diagnostic systems that serve every organization well. Lessons from this research are likely to inform future efforts to make AI tools more accessible while keeping the NHS responsive to its staff and patients.

Moving forward, an essential next step will involve evaluating the use of AI tools post-implementation, aiming to understand their impact once they have been fully integrated into clinical operations. The researchers acknowledge that, while they successfully captured the procurement and initial deployment stages, further investigation is necessary to assess the experiences of patients and caregivers, thereby filling gaps in understanding around equity in healthcare delivery with AI involvement.

The study sheds light on the careful consideration needed to introduce AI effectively within healthcare systems. It also underscores the urgency of educational frameworks that equip staff not only with operational knowledge but with an understanding of the philosophical, ethical, and practical nuances of AI in medicine. That understanding will matter as healthcare practitioners prepare for a future increasingly defined by technological integration and automation.

Faculty members involved in this transformative study, spanning various academic and research backgrounds, are poised to lead this critical discourse, attempting to bridge the knowledge gap that currently exists between technological innovation and clinical practice. As AI continues its trajectory toward becoming an integral part of healthcare, this analysis serves as a clarion call for future studies that prioritize patient experience, clinical accountability, and healthcare equity in the age of artificial intelligence.

Subject of Research: AI tools for chest diagnostics in NHS services.

Article Title: Procurement and early deployment of artificial intelligence tools for chest diagnostics in NHS services in England: A rapid, mixed method evaluation.

News Publication Date: 11-Sep-2025.

Keywords

AI, NHS, healthcare, diagnostics, technology, implementation, policy, research, patient care, digital transformation.

Fight AI-powered cyber attacks with AI tools, intelligence leaders say

Cyber defenders need AI tools to fend off a new generation of AI-powered attacks, the head of the National Geospatial-Intelligence Agency said Wednesday.

“The concept of using AI to combat AI attack or something like that is very real to us. So this, again, is commanders’ business. You need to enable your [chief information security officer] with the tools that he or she needs in order to employ AI to properly handle AI-generated threats,” Vice Adm. Frank Whitworth said at the Billington Cybersecurity Summit Wednesday.

Artificial intelligence has reshaped cyber, making it easier for hackers to manipulate data and craft more convincing fraud campaigns, like phishing emails used in ransomware attacks.

Whitworth spoke a day after Sean Cairncross, the White House’s new national cyber director, called for a “whole-of-nation” approach to ward off foreign-based cyberattacks.

“Engagement and increased involvement with the private sector is necessary for our success,” Cairncross said Tuesday at the event. “I’m committed to marshalling a unified, whole-of-nation approach on this, working in lockstep with our allies who share our commitment to democratic values, privacy and liberty…Together, we’ll explore concepts of operation to enable our extremely capable private sector, from exposing malign actions to shifting adversaries’ risk calculus and bolstering resilience.”

The Pentagon has been incorporating AI, from administrative tasks to combat. The NGA has long used it to spot and predict threats; use of its signature Maven platform has doubled since January and quadrupled since March 2024.

But the agency is also using “good old-fashioned automation” to more quickly make the military’s maps.

“This year, we were able to produce 7,500 maps of the area involving Latin America and a little bit of Central America…that would have been 7.5 years of work, and we did it in 7.5 weeks,” Whitworth said. “Sometimes just good old-fashioned automation, better practices of using automation, it helps you achieve some of the speed, the velocity that we’re looking for.”

The military’s top officer also stressed the importance of using advanced tech to monitor and preempt modern threats.

“There’s always risk of unintended escalation, and that’s what’s so important about using advanced tech tools to understand the environment that we’re operating in and to help leaders see and sense the risk that we’re facing. And there’s really no shortage of those risks right now,” said Gen. Dan Caine, chairman of the Joint Chiefs of Staff, who has an extensive background in irregular warfare and special operations, which can lean heavily on cutting-edge technologies.

“The fight is now centered in many ways around our ability to harvest all of the available information, put it into an appropriate data set, stack stuff on top of it—APIs and others—and end up with a single pane of glass that allows commanders at every echelon…to see that, those data bits at the time and place that we need to to be able to make smart tactical, operational and strategic decisions that will allow us to win and dominate on the battlefields of the future. And so AI is a big part of that,” Caine said.

The Pentagon recently awarded $200 million in AI contracts while the Army doubled down on its partnership with Palantir with a decade-long contract potentially worth $10 billion. The Pentagon has also curbed development of its primary AI platform, Advana, and slashed staff in its chief data and AI office with plans of a reorganization that promises to “accelerate Department-wide AI transformation” and make the Defense Department “an AI-first enterprise.”