Education

Why Every School Needs an AI Policy

AI is transforming the way we work, but without a clear policy in place, even the smartest technology can lead to costly mistakes, ethical missteps and serious security risks.

CREDIT: This is an edited version of an article that originally appeared in All Business

Artificial Intelligence is no longer a futuristic concept. It’s here, and it’s everywhere. From streamlining operations to powering chatbots, AI is helping organisations work smarter, faster and more efficiently.

According to G-P’s AI at Work Report, a staggering 91% of executives are planning to scale up their AI initiatives. But while AI offers undeniable advantages, it also comes with significant risks that organisations cannot afford to ignore. As AI continues to evolve, it’s crucial to implement a well-structured AI policy to guide its use within your school.

Understanding the Real-World Challenges of AI

While AI offers exciting opportunities for streamlining admin, personalising learning and improving decision-making in schools, the reality of implementation is more complex. The upfront costs of adopting AI tools can be high. Many schools, especially those with legacy systems, find it difficult to integrate new technologies smoothly without creating further inefficiencies or administrative headaches.

There’s also a human impact to consider. As AI automates tasks once handled by staff, concerns about job displacement and deskilling begin to surface. In an environment built on relationships and pastoral care, it’s important to question how AI complements rather than replaces the human touch.

Data security is another significant concern. AI in schools often relies on sensitive pupil data to function effectively. If these systems are compromised, the consequences can be serious. From safeguarding breaches to the erosion of trust among parents and staff, the risks mean schools must be vigilant about privacy and protection.

And finally, there’s the environmental angle. AI requires substantial computing power and infrastructure, which comes with a carbon cost. As schools strive to meet sustainability targets and educate students on climate responsibility, it’s worth considering AI’s footprint and the long-term environmental impact of widespread adoption.

The Role of an AI Policy in Modern Schools

To navigate these issues responsibly, schools must adopt a comprehensive AI policy. This isn’t just a box-ticking exercise; it’s a roadmap for how your school will use AI ethically, securely and sustainably. A good AI policy doesn’t just address technology; it reflects your values, goals and responsibilities.

The first step in building your policy is to create a dedicated AI policy committee. This group should consist of senior leaders, board members, department heads and technical stakeholders. Their mission? To guide the safe and strategic use of AI across your school. The committee should be cross-functional so that it can represent all areas of the school and raise practical concerns about how AI may affect people, processes and performance.

Protecting Privacy: A Top Priority

One of the most important responsibilities when implementing AI is protecting personal and corporate data. Any AI system that collects, stores, or processes sensitive data must be governed by robust security measures. Your AI policy should establish strict rules for what data can be collected, how long it can be stored and who has access. Use end-to-end encryption and multi-factor authentication wherever possible. And always ask: is this data essential? If not, don’t collect it.

Ethics Matter: Keep AI Aligned With Your Values

When creating an AI policy, you must consider how your principles translate to digital behaviour. Unfortunately, AI models can unintentionally amplify bias, especially when trained on datasets that lack diversity or were built without appropriate oversight. Plagiarism, misattribution and theft of intellectual property are also common concerns. Ensure your policy includes regular audits and bias detection protocols. Consult established frameworks such as the EU AI Act or the OECD AI Principles to ensure you’re building in fairness, transparency and accountability from day one.

The Bottom Line: Use AI to Support, Not Replace, Your Strengths

AI is powerful. But like any tool, its value depends on how you use it. With a strong, ethical policy in place, you can harness the benefits of AI without compromising your people, principles, or privacy.

Seiji Isotani’s Mission to Humanize AI in Education

Newswise — As Penn GSE expands its leadership in AI and education, newly hired associate professor Seiji Isotani adds a vital perspective shaped by decades of work in Brazil, Japan, and the United States.

A pioneer in intelligent tutoring systems, Isotani develops AI tools that adapt to the needs of students and teachers—especially in under-resourced settings. But for him, technology is never the starting point.

In this Q&A, he reflects on the childhood experience that launched his career, the importance of human-centered design, and why responsible AI must begin with understanding the people it’s meant to serve.

Q: What originally drew you to the field of educational technology?

A: Working with educational technology holds special meaning for me, inspired by a personal experience. As a child, I struggled in school. I couldn’t read or work with numbers at age 7, and some even thought I had a learning disability. Fortunately, my mom, a talented public school math teacher, and my dad, a professor at the University of São Paulo, worked with me every night until I caught up. Eventually, we discovered that my difficulties were due to issues with vision, communication, and hearing.

Once those were addressed, everything changed. I went from falling behind to becoming one of the top students, especially in STEM subjects.

That transformation lit a fire in me. I wanted to help others experience that same change, and I started to help other students with their struggles during class. When I got my first computer at age 11, I was instantly in love. I taught myself to program and quickly realized that I could use this technology to help other students learn in ways that worked for them. That spark led me to educational technology, and it still fuels my work today, particularly in one of my main research areas: intelligent tutoring systems.

Q: Your work explores the use of AI to improve learning. What’s one way you think AI could genuinely help students or teachers?

A: One of the most exciting ways AI can make a difference is by acting as a true partner for teachers, helping them do what they already do well or even improve their practices, but with more insight and support. By deeply understanding teachers’ needs (as explored in my recent study) and developing AI-powered tools like those in the MathAIde project, we’ve shown that even in resource-constrained environments, teachers can receive timely, actionable feedback about their students’ learning. They can use AI to plan lessons more efficiently and get personalized suggestions for tailoring activities to each learner’s needs.

At the same time, students benefit from more engaging and personalized learning experiences, including gamified elements that adapt to their interests and help keep them motivated (as evidenced in another study of mine). It’s not about replacing teachers or increasing students’ cognitive offloading. It’s about giving both teachers and students “superpowers” so they can shine and show all their might.

Q: What drew you to join the faculty at Penn GSE?

A: Honestly, I couldn’t imagine a better place to do the work I care about. Penn GSE is a powerhouse when it comes to rethinking education through innovation. I’ve admired the school’s deep commitment to investing in the field of AI in Education to create real-world impact, especially in K–12 education.

The opportunity to contribute to and grow with Penn GSE, by helping build a strong program that not only trains the next generation of AI in education leaders but also shapes the global conversation on how AI can support more accessible and meaningful learning experiences, is incredibly exciting to me!

Penn is already leading the AI in education agenda across top universities in the U.S. and around the world, and the chance to be part of that movement is truly inspiring. After meeting the faculty and students, it became crystal clear to me: this is where I want to be to help change the world. I’m especially excited to collaborate with such a passionate and visionary community made up of people who, like me, are committed to making education more human, more engaging, and more inspiring through the thoughtful and responsible development and use of AI.

Q: You’ve worked in both Brazil and the U.S.—how have those experiences shaped your perspective on education and innovation?

A: Working in Brazil and the U.S. has certainly shaped my perspective, and adding to my journey, I’ve also had the opportunity to collaborate closely with researchers and schools in other countries, such as Japan, where I completed my doctoral studies. These experiences across very different educational systems and cultures have given me the flexibility to embrace multicultural environments, understand diverse classroom contexts, and navigate the complexities of working in both low- and high-income communities.

What I’ve learned is that challenges in education are not exclusive to any one region. Whether I am working in a rural school in the Amazon, an urban district in the U.S., or a high-tech classroom in Japan, I encounter students and teachers who struggle, whether due to lack of infrastructure, insufficient support, or systems that do not fully meet their needs. These shared struggles, though expressed differently across contexts, have helped me become more connected to what really matters: understanding people and their goals. This is why a human-centered approach is at the heart of my work. For me, it’s not just about gathering data or training AI models. It’s about what we can do with AI and data to help people learn better, teach better, and feel more empowered. Designing AI in education means listening deeply to communities, co-creating solutions with them, and ensuring that the tools we develop truly serve the people they are meant to support.

Q: What’s something surprising or lesser known about your research that you enjoy sharing with others?

A: One thing that often surprises people is that I tend to avoid starting my projects by advocating for the use of AI. Even though I’m deeply involved in AI in education, I actually try to avoid introducing AI at the beginning, especially when the goal is to improve public policy through technology.

In fact, when people ask me, “How can we use AI to improve education policy?”, my first response is usually a question: “Do you already have a shared vision for the kind of education you want in your country or community? Do you have an understanding of the needs of your teachers, principals, students, and families?”

If we can’t answer those questions clearly, it’s very hard to use AI effectively, because we don’t yet know why we need AI or how it should be developed or used to actually serve the people it’s intended to help.

Thus, whether I’m working with a ministry of education or a local school, I begin by engaging deeply with people on the ground. And sometimes, AI is not the silver bullet; in fact, it often isn’t. What tends to work best is not AI alone, but a thoughtful combination of AI with practices rooted in the learning sciences and well-established educational strategies (e.g., structured pedagogy). Nothing flashy, just what works.

Q: When you’re not thinking about research, how do you like to spend your time?

A: Outside of work, my favorite role is being a dad. Spending time with my 4-year-old son and 8-year-old daughter brings me so much joy. They constantly remind me that the true wonders of the world live in small discoveries, in the curiosity to learn about everything, and in the joy of sharing what you’ve learned with those you love.

I have also practiced Judo for about 35 years. In my early days, I trained professionally for competitions and was fortunate to win state and national championships a few times. Although I had to stop training during the pandemic and haven’t returned to a dojo since, I hope to resume my practice in Philadelphia. In the meantime, I still enjoy “training” and playing Judo with my kids at home.

Finally, I also love trying new foods, exploring different cultures, and occasionally watching anime, a fun reminder of my “otaku” days back when I lived in Japan.

Q: Are you more hopeful or cautious about the role of AI in education over the next decade—and what gives you that outlook?

A: You tell me. Just kidding! I’m definitely more hopeful, but in a grounded and thoughtful way. AI is already shaping our society, and it holds incredible potential to transform education for the better, especially when it’s designed responsibly with people and for people. I’ve seen what happens when we get it right: teachers feel empowered, students feel seen and supported, and learning becomes more meaningful, engaging, and joyful.

I have a strong sense that once we move past this initial wave of hype and the overemphasis on the technology itself, we will enter a new phase. That is when the real breakthroughs in AI for education will emerge, focused on truly serving educational needs and empowering communities.

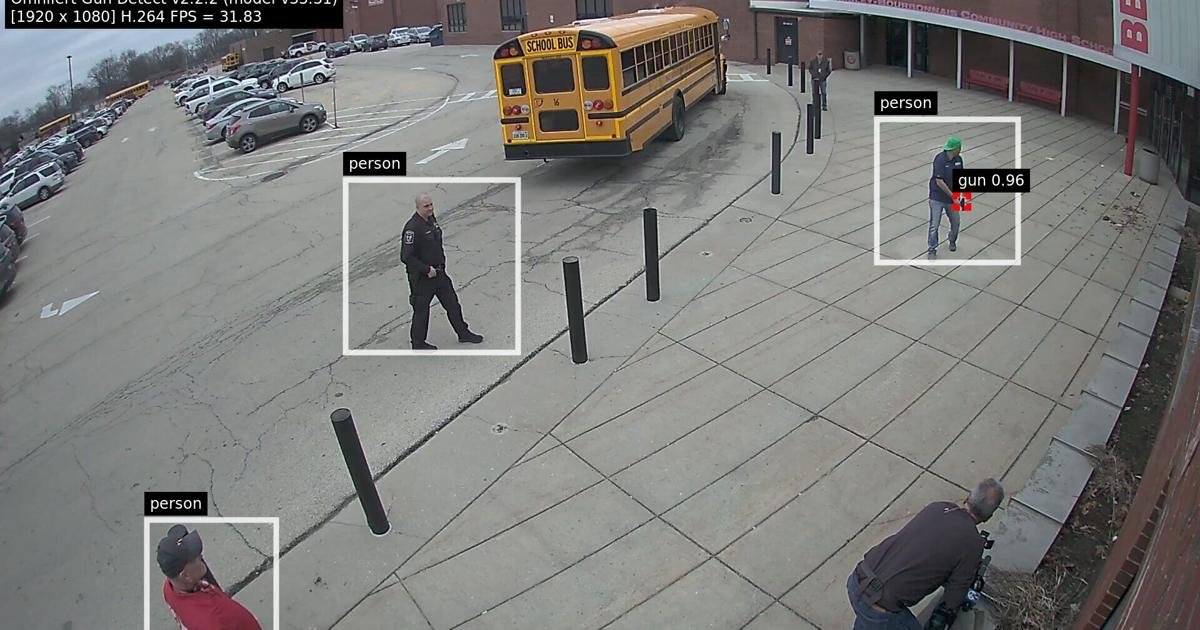

Roseburg Public Schools installs AI tech for added security layer – nrtoday.com

AI cheating in US schools prompts shift to in-class assessments, clearer policies

Artificial intelligence is reshaping education in the United States, forcing schools to rethink how students are assessed.

Traditional homework like take-home essays and book reports is increasingly being replaced by in-class writing and digital monitoring. The rise of AI has blurred the definition of honest work, leaving both teachers and students grappling with new challenges.

California English teacher Casey Cuny, a 2024 Teacher of the Year, said, “The cheating is off the charts. It’s the worst I’ve seen in my entire career.”

He added that teachers now assume any work done at home may involve AI. “We have to ask ourselves, what is cheating? Because I think the lines are getting blurred.”

SHIFT TO IN-CLASS ASSESSMENTS

Across schools, teachers are designing assignments that must be completed during lessons. Oregon teacher Kelly Gibson explained, “I used to give a writing prompt and say, ‘In two weeks I want a five-paragraph essay.’ These days, I can’t do that. That’s almost begging teenagers to cheat.”

Students themselves are unsure how far they can go. Some use AI for research or editing, but question whether summarising readings or drafting outlines counts as cheating.

College student Lily Brown admitted, “Sometimes I feel bad using ChatGPT to summarise reading, because I wonder, is this cheating?”

POLICY CONFUSION AND NEW GUIDELINES

Guidance on AI use varies widely, even within the same school. Some classrooms encourage AI-assisted study, while others enforce strict bans. Valencia 11th grader Jolie Lahey called it “confusing” and “outdated.”

Universities are also drafting clearer rules. At the University of California, Berkeley, faculty are urged to state expectations on AI in syllabi. Without clarity, administrators warn, students may use tools inappropriately.

At Carnegie Mellon University, rising cases of academic responsibility violations have prompted a rethink. Faculty have been told that outright bans “are not viable” unless assessment methods change.

Emily DeJeu, who teaches at Carnegie Mellon’s business school, stressed, “To expect an 18-year-old to exercise great discipline is unreasonable. That’s why it’s up to instructors to put up guardrails.”

The debate continues as schools balance innovation with integrity, shaping how the next generation learns in an AI-driven world.

(With inputs from AP)
