Books, Courses & Certifications

What I Needed as a Teacher Is the Compass I Use Now as a Coach

“I’ve never done this work alone,” I thought as I looked around the high school gymnasium at the other instructional coaches and school leaders. We had been given a critical, quick-write prompt to begin the professional learning session that asked: Who helped you grow the most in your profession? How did they help you grow? And how does that impact your work today?

As I leaned toward my Chromebook to respond, the thought sharpened. I’ve never done this work alone, and the people beside me have shaped every step I’ve taken.

I’ve had mentors and coaches who did more than hand me tips or point me toward resources. Some were my practicum and student teaching supervisors who trusted me to figure things out, but never let me feel like I had to figure them out alone.

I remember being told, “Your students don’t need you to be perfect; they need you to be present.” One reminded me often, “Take the risk. The worst thing that happens is you learn something new.” Their guidance gave me permission to show up as my whole self and take risks as I shaped my evolving teacher identity. As a high school English teacher, former instructional coach, and now as a district instructional support leader, I’ve come to understand that the mentoring I once relied on is the same coaching I try to give others.

Adjusting to a New Environment

When I stepped into the role of instructional coach last year, I brought the habits I’d relied on as a teacher: listening before advising, learning alongside others and remembering what it felt like to be supported well.

Unfortunately, that approach wasn’t instantly recognized in my new school setting.

No one told me I didn’t belong, but in those first weeks, I could feel the distance between myself and my new colleagues. Conversations quieted when I walked by. Some teachers kept their distance. The glances might have lasted just a little too long. One afternoon, a teacher finally asked, “So what exactly is it you’re here to do?”

The question stayed with me. I know that it wasn’t meant to be hostile. My experience stepping into instructional coaching was part of a larger story that was playing out in schools. Nearly 60 percent of public schools have at least one instructional coach, though in many places, the role is underfunded, temporary or narrowly defined. In Rhode Island where I’m from, that’s beginning to shift.

Last year, the state invested $5 million to expand coaching across districts, with the goal of making it a permanent, embedded part of school life. This year, the Rhode Island Department of Education followed with nearly $40 million more over five years, as part of the Comprehensive Literacy State Development grant, building partnerships between schools and teacher preparation programs to strengthen literacy instruction and improve student outcomes.

Still, in that moment, being asked what it is I’m here to do stung. I felt the heat of my own insecurities rising. Was I stepping on toes? Was I doing enough? More than once, I wondered if I was in over my head or if my colleagues realized I wasn’t the professional they expected. I’d been a teacher for nearly 20 years, yet in those early days of coaching, I felt like an imposter.

Part of the distance, I realized, was rooted in a common assumption: If I need a coach, that must mean I don’t know what I’m doing.

No one said it outright, but I recognized it in the reluctance to invite me into their classes, or when teachers would come to me for immediate solutions rather than a full coaching cycle. It’s a belief born from a profession that too often equates needing help with being less capable, when in truth, the opposite is almost always true.

Some also saw coaches as the ears and eyes of the central office. Others thought I was there to evaluate. I wasn’t an administrator, though I worked closely with school leaders. I was a union member, though my proximity to administrators made teachers cautious. I lived in the middle: in classrooms but not a teacher, in leadership meetings but not a decision-maker.

As a coach, you see everything from two vantage points, and you’re constantly translating. You hear the teacher’s frustrations and see the administrator’s challenges. You try to bridge the two without losing the trust of either. No one really trains you for that; I had to figure it out in real time.

Finding the Rhythm

In time, I began to ask myself a question that became my compass as an instructional coach: What would I need if I were being coached?

That question grounded me when the role felt murky. If I were a teacher looking for support, I wouldn’t need another checklist or a curriculum pacing reminder because that kind of guidance already came from administration. I would need a thought partner who offered reassurance and helped me untangle the knot of implementing high-quality instructional materials, district goals and the unique needs of my students.

As the year progressed, I slowly bridged the gap and found teachers willing to move through coaching cycles. We planned lessons together. Sometimes we co-taught and something didn’t land, so we regrouped and tried again. None of it fit neatly into a script. And none of it was about compliance.

The most meaningful moments as a coach rarely happened on a schedule. They often came in the hall or between classes when a teacher stopped me to say, “Can I show you something I’m trying? Can you stop by next period?”

One teacher I partnered with taught science electives, including a forensics course. Her students were deep into analyzing blood spatter patterns in mock crime scenes. I still remember the excitement on the day she invited me to visit. She handed me goggles and an apron, and I entered a room buzzing with collaboration. I was hooked.

The real magic happened after the lab, when we sat down with student work and asked, “What could this be next time?” She took risks, tried new tools, and centered student voice. When she doubted herself, I reminded her of what the students already knew: she was an incredible educator. Over the year, her teaching became even more responsive and inventive, building on what worked and letting go of what didn’t.

Over time, I began to see that the real power of coaching wasn’t in providing fixes but in creating the space for teachers to think aloud about their own questions and choose the next steps that felt right to them. I was never supposed to fix it all.

Enjoying the Journey Together

I believe that learning to teach is a career-long journey. It is never something you do alone; it is something you grow into alongside others, while still figuring out what kind of teacher you are and what type you want to be.

Coaching, like teaching, is rarely neat. Early on, I thought my role was to smooth things out or provide solutions. While I still catch myself slipping into that impulse, I’ve realized that what matters most is sitting alongside teachers and learning through the uncertainty, however uncomfortable. That is the improvisational, human work of coaching.

If I were back in the classroom tomorrow, I’d want a coach who saw my potential and pushed me, even on days I doubted myself. That’s the coach I try to be now: encouraging and willing to step in when the work gets messy.

How School-Family Partnerships Can Boost Early Literacy

When the National Assessment of Educational Progress, often called the Nation’s Report Card, was released last year, the results were sobering. Despite increased funding streams and growing momentum behind the Science of Reading, average fourth grade reading scores declined by another two points from 2022.

In a climate of growing accountability and public scrutiny, how can we do things differently — and more effectively — to ensure every child becomes a proficient reader?

The answer lies not only in what happens inside the classroom but in the connections forged between schools and families. Research shows that when families are equipped with the right tools and guidance, literacy development accelerates. For many schools, creating this home-to-school connection begins by rethinking how they communicate with and involve families from the start.

A School-Family Partnership in Practice

My own experience with my son William underscored just how impactful a strong school-family partnership can be.

When William turned four, he began asking, “When will I be able to read?” He had watched his older brother learn to read with relative ease, and, like many second children, William was eager to follow in his big brother’s footsteps. His pre-K teacher did an incredible job introducing foundational literacy skills, but for William, it wasn’t enough. He was ready to read, but we, his parents, weren’t sure how to support him.

During a parent-teacher conference, his teacher recommended a free, ad-free literacy app that she uses in her classroom. She assigned stories to read and phonics games to play that aligned with his progress at school. The characters in the app became his friends, and the activities became his favorite challenge. Before long, he was recognizing letters on street signs, rhyming in the car and asking to read his favorite stories over and over again.

William’s teacher used insights from his at-home learning to personalize his instruction in the classroom. For our family, this partnership made a real difference.

Where Literacy Begins: Bridging Home and School

Reading develops in stages, and the pre-K to kindergarten years are especially foundational. According to the National Reading Panel and the Institute of Education Sciences, five key pillars support literacy development:

- Phonemic awareness: recognizing and playing with individual sounds in spoken words

- Phonics: connecting those sounds to written letters

- Fluency: reading with ease, accuracy and expression

- Vocabulary: understanding the meaning of spoken and written words

- Comprehension: making sense of what is read

For schools, inviting families into this framework doesn’t mean making parents into teachers. It means providing simple ways for families to reinforce these pillars at home, often through everyday routines, such as reading together, playing language games or talking about daily activities.

Families are often eager to support their children’s reading, but many aren’t sure how. At the same time, educators often struggle to communicate academic goals to families in ways that are clear and approachable.

Three Ways to Strengthen School-Family Literacy Partnerships

Forging effective partnerships between schools and families can feel daunting, but small, intentional shifts can make a powerful impact. Here are three research-backed strategies that schools can use to bring families into the literacy-building process.

1. Communicate the “why” and the “how”

Families become vital partners when they understand not just what their children are learning, but why it matters. Use newsletters, family literacy nights or informal conversations to break down the five pillars in accessible terms. For example, explain that clapping out syllables at home supports phonemic awareness or that spotting road signs helps with letter recognition (phonics).

Even basic activities can reinforce classwork. Sample ideas for family newsletters:

- “This week we’re working on beginning sounds. Try playing a game where you name things in your house that start with the letter ‘B’.”

- “We’re focusing on listening to syllables in words. See if your child can clap out the beats in their name!”

Provide families with specific activities that match what is being taught at school. For example, William’s teacher used the Khan Academy Kids app to assign letter-matching games and read-aloud books that aligned with classroom learning. The connection made it easier for us to support him at home.

2. Establish everyday reading routines

Consistency builds confidence. Encourage families to create regular reading moments, such as a story before bed, a picture book over breakfast or a read-aloud during bath time. Reinforce that reading together in any language is beneficial. Oral storytelling, silly rhymes and even talking through the day’s events help develop vocabulary and comprehension.

Help parents understand that it’s okay to stop when it’s no longer fun. If a child isn’t interested, it’s better to pause and return later than to force the activity. The goal is for children to associate reading with enjoyment and a sense of connection.

3. Empower families with fun, flexible tools

Families are more likely to participate when activities are playful and accessible, not just another assignment. Suggest resources that fit different family preferences: printable activity sheets, suggested library books and no-cost, ad-free digital platforms, such as Khan Academy Kids. These give children structured ways to practice and offer families tools that are easy to use, even with limited time.

In our district, many families use technology to extend classroom skills at home. For William, a rhyming game on a literacy app made practicing phonological awareness fun and stress-free; he returned to it repeatedly, reinforcing new skills through play.

Literacy Grows Best in Partnership

School-family partnerships also offer educators valuable feedback. When families share observations about what excites or challenges their children at home, teachers gain a fuller picture of each student’s progress. Digital platforms that offer teacher-, school- and district-level reporting can support this feedback loop by providing teachers with real-time data on at-home practice. This two-way exchange strengthens instruction and empowers both families and educators.

While curriculum, assessment and skilled teaching are essential, literacy is most likely to flourish when nurtured by both schools and families. When educators invite families into the process — demystifying the core elements of literacy, sharing routines and providing flexible, accessible tools — they help create a culture where reading is valued everywhere.

Strong school-family partnerships don’t just address achievement gaps. They lay the groundwork for the joy, confidence and curiosity that help children become lifelong readers.

At Khan Academy Kids, we believe in the power of the school-family partnership. For free resources to help strengthen children’s literacy development, explore the Khan Academy Kids resource hub for schools.

TII Falcon-H1 models now available on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart

This post was co-authored with Jingwei Zuo from TII.

We are excited to announce the availability of the Technology Innovation Institute (TII)’s Falcon-H1 models on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart. With this launch, developers and data scientists can now use six instruction-tuned Falcon-H1 models (0.5B, 1.5B, 1.5B-Deep, 3B, 7B, and 34B) on AWS, and have access to a comprehensive suite of hybrid architecture models that combine traditional attention mechanisms with State Space Models (SSMs) to deliver exceptional performance with unprecedented efficiency.

In this post, we present an overview of Falcon-H1 capabilities and show how to get started with TII’s Falcon-H1 models on both Amazon Bedrock Marketplace and SageMaker JumpStart.

Overview of TII and AWS collaboration

TII is a leading research institute based in Abu Dhabi. As part of UAE’s Advanced Technology Research Council (ATRC), TII focuses on advanced technology research and development across AI, quantum computing, autonomous robotics, cryptography, and more. TII employs international teams of scientists, researchers, and engineers in an open and agile environment, aiming to drive technological innovation and position Abu Dhabi and the UAE as a global research and development hub in alignment with the UAE National Strategy for Artificial Intelligence 2031.

TII and Amazon Web Services (AWS) are collaborating to expand access to made-in-the-UAE AI models across the globe. By combining TII’s technical expertise in building large language models (LLMs) with AWS Cloud-based AI and machine learning (ML) services, professionals worldwide can now build and scale generative AI applications using the Falcon-H1 series of models.

About Falcon-H1 models

The Falcon-H1 architecture implements a parallel hybrid design that draws on both the Mamba and Transformer architectures. It combines the faster inference and lower memory footprint of SSMs like Mamba with the strength of the Transformer attention mechanism in understanding context and generalizing. The architecture scales across multiple configurations ranging from 0.5 billion to 34 billion parameters and provides native support for 18 languages. According to TII, the Falcon-H1 family demonstrates notable efficiency, with published metrics indicating that smaller model variants achieve performance parity with larger models. Some of the benefits of the Falcon-H1 series include:

- Performance – The hybrid attention-SSM model has optimized parameters with adjustable ratios between attention and SSM heads, leading to faster inference, lower memory usage, and strong generalization capabilities. According to TII benchmarks published in Falcon-H1’s technical blog post and technical report, Falcon-H1 models demonstrate superior performance across multiple scales against other leading Transformer models of similar or larger scales. For example, Falcon-H1-0.5B delivers performance similar to typical 7B models from 2024, and Falcon-H1-1.5B-Deep rivals many of the current leading 7B-10B models.

- Wide range of model sizes – The Falcon-H1 series includes six sizes: 0.5B, 1.5B, 1.5B-Deep, 3B, 7B, and 34B, with both base and instruction-tuned variants. The Instruct models are now available in Amazon Bedrock Marketplace and SageMaker JumpStart.

- Multilingual by design – The models support 18 languages natively (Arabic, Czech, German, English, Spanish, French, Hindi, Italian, Japanese, Korean, Dutch, Polish, Portuguese, Romanian, Russian, Swedish, Urdu, and Chinese) and can scale to over 100 languages according to TII, thanks to a multilingual tokenizer trained on diverse language datasets.

- Up to 256,000 context length – The Falcon-H1 series enables applications in long-document processing, multi-turn dialogue, and long-range reasoning, showing a distinct advantage over competitors in practical long-context applications like Retrieval Augmented Generation (RAG).

- Robust data and training strategy – Training of Falcon-H1 models employs an innovative approach that introduces complex data early on, contrary to traditional curriculum learning. It also implements strategic data reuse based on careful memorization window assessment. Additionally, the training process scales smoothly across model sizes through a customized Maximal Update Parametrization (µP) recipe, specifically adapted for this novel architecture.

- Balanced performance in science and knowledge-intensive domains – Through a carefully designed data mixture and regular evaluations during training, the model achieves strong general capabilities and broad world knowledge while minimizing unintended specialization or domain-specific biases.

In line with their mission to foster AI accessibility and collaboration, TII have released Falcon-H1 models under the Falcon LLM license. It offers the following benefits:

- Open source nature and accessibility

- Multi-language capabilities

- Cost-effectiveness compared to proprietary models

- Energy-efficiency

About Amazon Bedrock Marketplace and SageMaker JumpStart

Amazon Bedrock Marketplace offers access to over 100 popular, emerging, specialized, and domain-specific models, so you can find the best proprietary and publicly available models for your use case based on factors such as accuracy, flexibility, and cost. On Amazon Bedrock Marketplace you can discover models in a single place and access them through unified and secure Amazon Bedrock APIs. You can also select your desired number of instances and the instance type to meet the demands of your workload and optimize your costs.

SageMaker JumpStart helps you quickly get started with machine learning. It provides access to state-of-the-art model architectures, such as language models, computer vision models, and more, without having to build them from scratch. With SageMaker JumpStart you can deploy models in a secure environment by provisioning them on SageMaker inference instances and isolating them within your virtual private cloud (VPC). You can also use Amazon SageMaker AI to further customize and fine-tune the models and streamline the entire model deployment process.

Solution overview

This post demonstrates how to deploy a Falcon-H1 model using both Amazon Bedrock Marketplace and SageMaker JumpStart. Although we use Falcon-H1-0.5B as an example, you can apply these steps to other models in the Falcon-H1 series. For help determining which deployment option—Amazon Bedrock Marketplace or SageMaker JumpStart—best suits your specific requirements, see Amazon Bedrock or Amazon SageMaker AI?

Deploy Falcon-H1-0.5B-Instruct with Amazon Bedrock Marketplace

In this section, we show how to deploy the Falcon-H1-0.5B-Instruct model in Amazon Bedrock Marketplace.

Prerequisites

To try the Falcon-H1-0.5B-Instruct model in Amazon Bedrock Marketplace, you must have access to an AWS account that will contain your AWS resources. Prior to deploying Falcon-H1-0.5B-Instruct, verify that your AWS account has sufficient quota allocation for ml.g6.xlarge instances. The default quota for endpoints using several instance types and sizes is 0, so attempting to deploy the model without a higher quota will trigger a deployment failure.

To request a quota increase, open the AWS Service Quotas console and search for Amazon SageMaker. Locate ml.g6.xlarge for endpoint usage and choose Request quota increase, then specify your required limit value. After the request is approved, you can proceed with the deployment.
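The quota described above can also be checked from code before you attempt a deployment. The following is a minimal sketch, assuming boto3 is installed and credentials are configured. The helper name `find_endpoint_quota` is hypothetical, and the quota name it matches ("ml.g6.xlarge for endpoint usage") is assumed from the console label mentioned above.

```python
def find_endpoint_quota(quotas_client, instance_type="ml.g6.xlarge"):
    """Look up the endpoint-usage quota for one SageMaker instance type.

    `quotas_client` is expected to behave like boto3.client("service-quotas").
    Returns (quota_name, value), or None when no quota with that name exists.
    """
    # Assumption: the quota name follows the console label "<type> for endpoint usage".
    target = f"{instance_type} for endpoint usage"
    paginator = quotas_client.get_paginator("list_service_quotas")
    for page in paginator.paginate(ServiceCode="sagemaker"):
        for quota in page.get("Quotas", []):
            if quota.get("QuotaName") == target:
                return quota["QuotaName"], quota["Value"]
    return None
```

With real credentials, you would call `find_endpoint_quota(boto3.client("service-quotas"))`. A returned value of 0 means a quota increase is needed before deploying.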

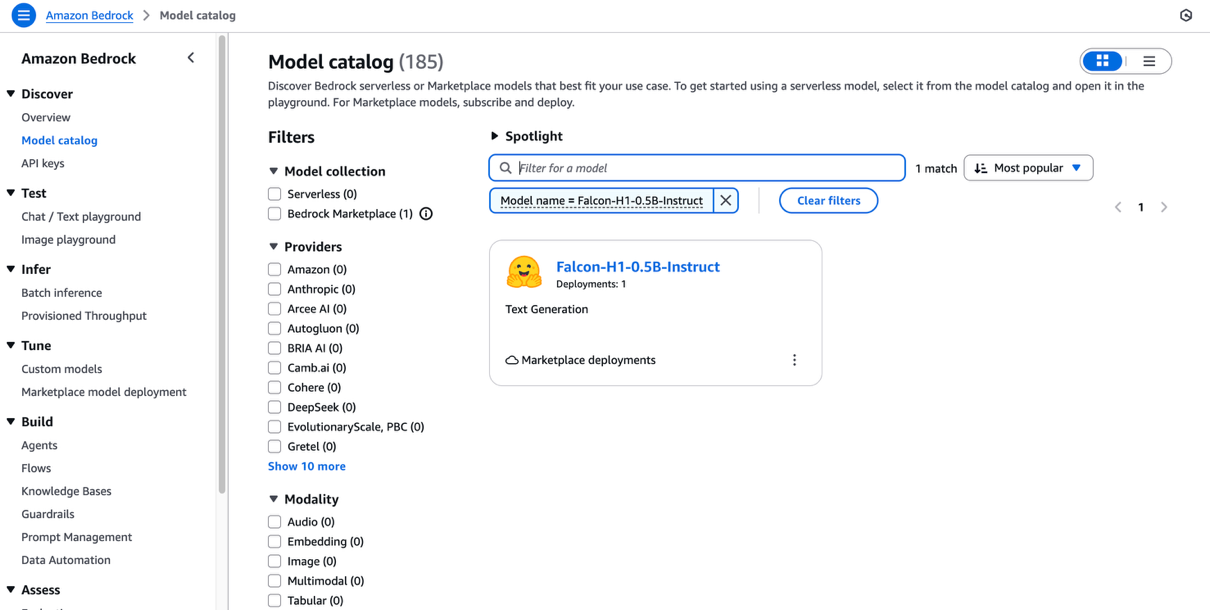

Deploy the model using the Amazon Bedrock Marketplace UI

To deploy the model using Amazon Bedrock Marketplace, complete the following steps:

- On the Amazon Bedrock console, under Discover in the navigation pane, choose Model catalog.

- Filter for Falcon-H1 as the model name and choose Falcon-H1-0.5B-Instruct.

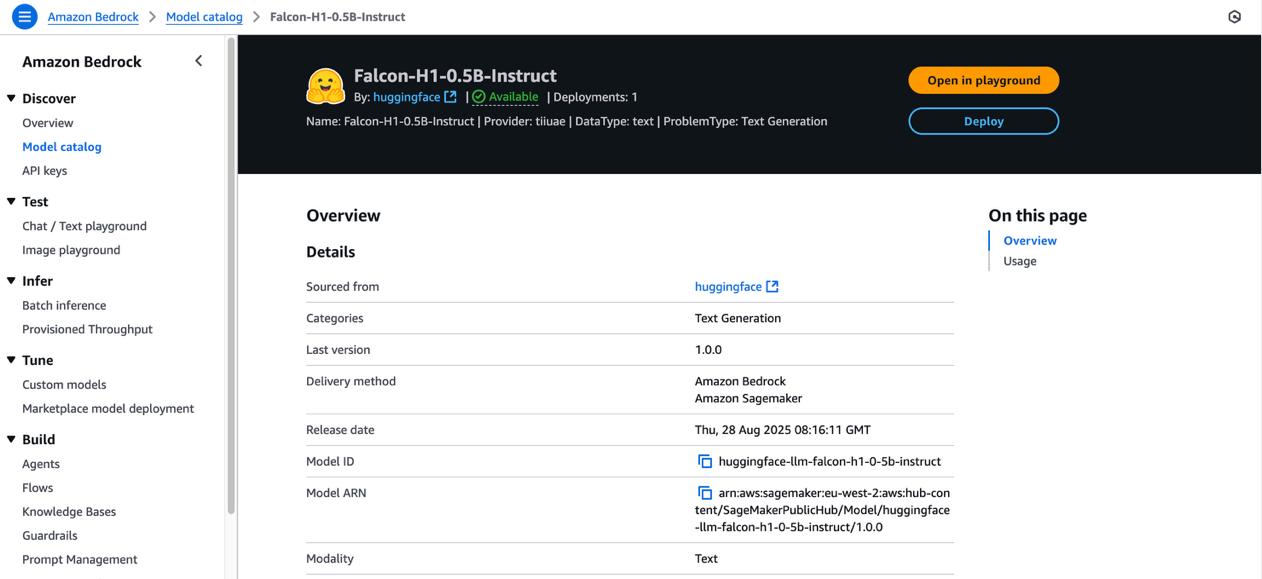

The model overview page includes information about the model’s license terms, features, setup instructions, and links to further resources.

- Review the model license terms, and if you agree with the terms, choose Deploy.

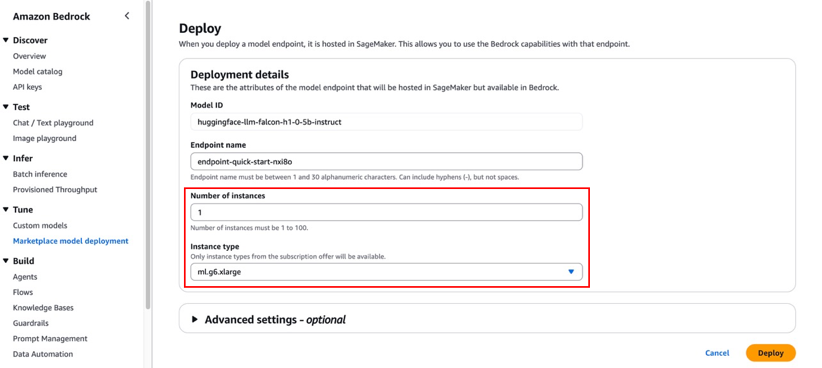

- For Endpoint name, enter an endpoint name or leave it as the default pre-populated name.

- To minimize costs while experimenting, set the Number of instances to 1.

- For Instance type, choose from the list of compatible instance types. Falcon-H1-0.5B-Instruct is an efficient model, so ml.g6.xlarge is sufficient for this exercise.

Although the default configurations are typically sufficient for basic needs, you can customize advanced settings like VPC, service access permissions, encryption keys, and resource tags. These advanced settings might require adjustment for production environments to maintain compliance with your organization’s security protocols.

- Choose Deploy.

- A prompt asks you to stay on the page while the AWS Identity and Access Management (IAM) role is being created. If your AWS account lacks sufficient quota for the selected instance type, you’ll receive an error message. In this case, refer to the preceding prerequisite section to increase your quota, then try the deployment again.

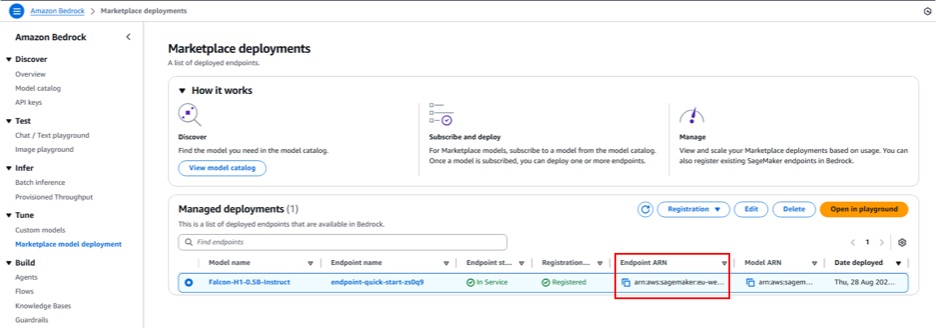

While deployment is in progress, you can choose Marketplace model deployments in the navigation pane to monitor progress in the Managed deployments section. When the deployment is complete, the endpoint status changes from Creating to In Service.
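If you prefer to monitor the deployment from code rather than the console, the status can be polled through the SageMaker API. The following is a minimal sketch, assuming the managed deployment is visible as a SageMaker endpoint in your account. The helper name `wait_until_in_service` and its polling defaults are hypothetical. Note that the API reports the status as `InService`, without the space shown in the console.

```python
import time


def wait_until_in_service(sm_client, endpoint_name, poll_seconds=30,
                          timeout_seconds=1800, sleep=time.sleep):
    """Poll an endpoint until it is in service; return the seconds spent waiting.

    `sm_client` is expected to behave like boto3.client("sagemaker").
    Raises RuntimeError if the endpoint fails, TimeoutError if it takes too long.
    """
    waited = 0
    while True:
        status = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointStatus"]
        if status == "InService":
            return waited
        if status == "Failed":
            raise RuntimeError(f"Endpoint {endpoint_name} failed to deploy")
        if waited >= timeout_seconds:
            raise TimeoutError(f"Endpoint {endpoint_name} still {status} after {waited}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

boto3 also ships a built-in waiter for this, `boto3.client("sagemaker").get_waiter("endpoint_in_service")`. The explicit loop is shown only to make the status transitions visible.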

Interact with the model in the Amazon Bedrock Marketplace playground

You can now test Falcon-H1 capabilities directly in the Amazon Bedrock playground by selecting the managed deployment and choosing Open in playground. From there, you can interact with Falcon-H1-0.5B-Instruct in the Amazon Bedrock Marketplace playground.

Invoke the model using code

In this section, we demonstrate how to invoke the model using the Amazon Bedrock Converse API.
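The original code listing is not reproduced here, so the following is a minimal sketch of a Converse API call with boto3, not the authors’ exact code. The helper name `ask_falcon`, the inference parameters, and the placeholder ARN are assumptions for illustration.

```python
# Placeholder only: substitute the ARN of your own managed deployment.
ENDPOINT_ARN = "arn:aws:sagemaker:<region>:<account-id>:endpoint/<endpoint-name>"


def ask_falcon(bedrock_runtime, endpoint_arn, prompt, max_tokens=256, temperature=0.7):
    """Send one user message through the Converse API and return the reply text.

    `bedrock_runtime` is expected to behave like boto3.client("bedrock-runtime").
    For a Bedrock Marketplace deployment, the endpoint ARN is passed as modelId.
    """
    response = bedrock_runtime.converse(
        modelId=endpoint_arn,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": temperature},
    )
    # The assistant reply is a list of content blocks; this sketch reads the first text block.
    return response["output"]["message"]["content"][0]["text"]
```

With real credentials, a call would look like `ask_falcon(boto3.client("bedrock-runtime"), ENDPOINT_ARN, "Summarize what a state space model is.")`.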

Replace the placeholder code with the endpoint’s Amazon Resource Name (ARN), which begins with arn:aws:sagemaker. You can find this ARN on the endpoint details page in the Managed deployments section.

To learn more about the detailed steps and example code for invoking the model using Amazon Bedrock APIs, refer to Submit prompts and generate response using the API.

Deploy Falcon-H1-0.5B-Instruct with SageMaker JumpStart

You can access FMs in SageMaker JumpStart through Amazon SageMaker Studio, the SageMaker SDK, and the AWS Management Console. In this walkthrough, we demonstrate how to deploy Falcon-H1-0.5B-Instruct using the SageMaker Python SDK. Refer to Deploy a model in Studio to learn how to deploy the model through SageMaker Studio.

Prerequisites

To deploy Falcon-H1-0.5B-Instruct with SageMaker JumpStart, you must have the following prerequisites:

- An AWS account that will contain your AWS resources.

- An IAM role to access SageMaker AI. To learn more about how IAM works with SageMaker AI, see Identity and Access Management for Amazon SageMaker AI.

- Access to SageMaker Studio with a JupyterLab space, or an interactive development environment (IDE) such as Visual Studio Code or PyCharm.

Deploy the model programmatically using the SageMaker Python SDK

Before deploying Falcon-H1-0.5B-Instruct using the SageMaker Python SDK, make sure you have installed the SDK and configured your AWS credentials and permissions.

The following code example demonstrates how to deploy the model:
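The original listing is not reproduced here. As a stand-in, the following is a minimal sketch using `JumpStartModel` from the SageMaker Python SDK. The helper name `deploy_falcon_h1` is hypothetical, and the model ID is deliberately left as a placeholder: look up the exact identifier for Falcon-H1-0.5B-Instruct in the SageMaker JumpStart catalog. The `ml.g6.xlarge` default follows the instance type named in the Amazon Bedrock Marketplace prerequisites and is an assumption for JumpStart.

```python
def deploy_falcon_h1(model_id, instance_type="ml.g6.xlarge", accept_eula=False, model_cls=None):
    """Deploy a JumpStart model and return the name of the created endpoint.

    `model_cls` exists only so the function can be exercised without AWS access;
    by default the real JumpStartModel class from the SageMaker SDK is used.
    """
    if model_cls is None:
        # Imported lazily so the sketch can be read and tested without the SDK installed.
        from sagemaker.jumpstart.model import JumpStartModel
        model_cls = JumpStartModel

    model = model_cls(model_id=model_id, instance_type=instance_type)
    # Some JumpStart models require accepting an end-user license agreement at deploy time.
    predictor = model.deploy(accept_eula=accept_eula)
    return predictor.endpoint_name
```

A call such as `deploy_falcon_h1("<falcon-h1-model-id>")` blocks until the endpoint is created and incurs charges for as long as the endpoint exists.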

When the previous code segment completes successfully, the Falcon-H1-0.5B-Instruct model deployment is complete and available on a SageMaker endpoint. Note the endpoint name shown in the output; you will replace the placeholder in the following code segment with this value.

The following code demonstrates how to prepare the input data, make the inference API call, and process the model’s response:
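The original listing is not reproduced here. The following is a minimal sketch of the three steps using the SageMaker runtime client in boto3. The helper names are hypothetical, and the request schema (chat-style `messages` with `max_tokens` and `temperature`) is an assumption about the serving container, so check the model’s example payload in JumpStart before relying on it.

```python
import json


def build_payload(prompt, max_tokens=256, temperature=0.7):
    """Prepare the request body. The schema here is assumed, not confirmed by the post."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def invoke_falcon(runtime_client, endpoint_name, prompt):
    """Call the endpoint and return the decoded JSON response.

    `runtime_client` is expected to behave like boto3.client("sagemaker-runtime").
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)),
    )
    # The response body is a stream of bytes containing JSON.
    return json.loads(response["Body"].read())
```

With real credentials, a call would look like `invoke_falcon(boto3.client("sagemaker-runtime"), "<endpoint-name>", "Hello")`. The shape of the returned JSON depends on the container and is not assumed here.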

Clean up

To avoid ongoing charges for AWS resources used while experimenting with Falcon-H1 models, make sure to delete all deployed endpoints and their associated resources when you’re finished. To do so, complete the following steps:

- Delete Amazon Bedrock Marketplace resources:

  - On the Amazon Bedrock console, choose Marketplace model deployments in the navigation pane.

  - Under Managed deployments, choose the Falcon-H1 model endpoint you deployed earlier.

  - Choose Delete and confirm the deletion if you no longer need to use this endpoint in Amazon Bedrock Marketplace.

- Delete SageMaker endpoints:

  - On the SageMaker AI console, in the navigation pane, choose Endpoints under Inference.

  - Select the endpoint associated with the Falcon-H1 models.

  - Choose Delete and confirm the deletion. This stops the endpoint and avoids further compute charges.

- Delete SageMaker models:

  - On the SageMaker AI console, choose Models under Inference.

  - Select the model associated with your endpoint and choose Delete.

Always verify that all endpoints are deleted after experimentation to optimize costs. Refer to the Amazon SageMaker documentation for additional guidance on managing resources.
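The SageMaker part of the cleanup can also be scripted. The following is a minimal sketch for an endpoint created through SageMaker JumpStart, assuming boto3 and permission to delete the resources. The helper name `delete_endpoint_resources` is hypothetical. Endpoints created through Amazon Bedrock Marketplace are better removed through the Amazon Bedrock console steps above.

```python
def delete_endpoint_resources(sm_client, endpoint_name):
    """Delete an endpoint, its endpoint configuration, and the models it references.

    `sm_client` is expected to behave like boto3.client("sagemaker").
    Returns the names of everything that was deleted.
    """
    # Resolve the configuration and model names before deleting the endpoint itself.
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [v["ModelName"] for v in config["ProductionVariants"] if "ModelName" in v]

    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm_client.delete_model(ModelName=name)

    return {"endpoint": endpoint_name, "endpoint_config": config_name, "models": model_names}
```

Deleting the endpoint is what stops compute charges. Removing the configuration and model afterward only keeps the account tidy.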

Conclusion

The availability of Falcon-H1 models in Amazon Bedrock Marketplace and SageMaker JumpStart helps developers, researchers, and businesses build cutting-edge generative AI applications with ease. Falcon-H1 models offer multilingual support (18 languages) across various model sizes (from 0.5B to 34B parameters) and support up to 256K context length, thanks to their efficient hybrid attention-SSM architecture.

By using the seamless discovery and deployment capabilities of Amazon Bedrock Marketplace and SageMaker JumpStart, you can accelerate your AI innovation while benefiting from the secure, scalable, and cost-effective AWS Cloud infrastructure.

We encourage you to explore the Falcon-H1 models in Amazon Bedrock Marketplace or SageMaker JumpStart. You can use these models in AWS Regions where Amazon Bedrock or SageMaker JumpStart and the required instance types are available.

For further learning, explore the AWS Machine Learning Blog, SageMaker JumpStart GitHub repository, and Amazon Bedrock User Guide. Start building your next generative AI application with Falcon-H1 models and unlock new possibilities with AWS!

Special thanks to everyone who contributed to the launch: Evan Kravitz, Varun Morishetty, and Yotam Moss.

About the authors

Mehran Nikoo leads the Go-to-Market strategy for Amazon Bedrock and agentic AI in EMEA at AWS, where he has been driving the development of AI systems and cloud-native solutions over the last four years. Prior to joining AWS, Mehran held leadership and technical positions at Trainline, McLaren, and Microsoft. He holds an MBA from Warwick Business School and an MRes in Computer Science from Birkbeck, University of London.

Mustapha Tawbi is a Senior Partner Solutions Architect at AWS, specializing in generative AI and ML, with 25 years of enterprise technology experience across AWS, IBM, Sopra Group, and Capgemini. He has a PhD in Computer Science from Sorbonne and a Master’s degree in Data Science from Heriot-Watt University Dubai. Mustapha leads generative AI technical collaborations with AWS partners throughout the MENAT region.

Jingwei Zuo is a Lead Researcher at the Technology Innovation Institute (TII) in the UAE, where he leads the Falcon Foundational Models team. He received his PhD in 2022 from University of Paris-Saclay, where he was awarded the Plateau de Saclay Doctoral Prize. He holds an MSc (2018) from the University of Paris-Saclay, an Engineer degree (2017) from Sorbonne Université, and a BSc from Huazhong University of Science & Technology.

John Liu is a Principal Product Manager for Amazon Bedrock at AWS. Previously, he served as the Head of Product for AWS Web3/Blockchain. Prior to joining AWS, John held various product leadership roles at public blockchain protocols and financial technology (fintech) companies for 14 years. He also has nine years of portfolio management experience at several hedge funds.

Hamza MIMI is a Solutions Architect for partners and strategic deals in the MENAT region at AWS, where he bridges cutting-edge technology with impactful business outcomes. With expertise in AI and a passion for sustainability, he helps organizations architect innovative solutions that drive both digital transformation and environmental responsibility, transforming complex challenges into opportunities for growth and positive change.

Oldcastle accelerates document processing with Amazon Bedrock

This post was written with Avdhesh Paliwal of Oldcastle APG.

Oldcastle APG, one of the largest global networks of manufacturers in the architectural products industry, was grappling with an inefficient and labor-intensive process for handling proof of delivery (POD) documents, known as ship tickets. The company was processing 100,000–300,000 ship tickets per month across more than 200 facilities. Their existing optical character recognition (OCR) system was unreliable, requiring constant maintenance and manual intervention. It could only accurately read 30–40% of the documents, leading to significant time and resource expenditure.

This post explores how Oldcastle partnered with AWS to transform their document processing workflow using Amazon Bedrock with Amazon Textract. We discuss how Oldcastle overcame the limitations of their previous OCR solution to automate the processing of hundreds of thousands of POD documents each month, dramatically improving accuracy while reducing manual effort. This solution demonstrates a practical, scalable approach that you can adapt to your own needs, whether you face similar document processing challenges or want to use generative AI to optimize a business process.

Challenges with document processing

The primary challenge for Oldcastle was to find a solution that could accomplish the following:

- Accurately process a high volume of ship tickets (PODs) with minimal human intervention

- Scale to handle 200,000–300,000 documents per month

- Handle inconsistent inputs like rotated pages and variable formatting

- Improve the accuracy of data extraction from the current 30–40% to a much higher rate

- Add new capabilities like signature validation on PODs

- Provide real-time visibility into outstanding PODs and deliveries

Additionally, Oldcastle needed a solution for processing supplier invoices and matching them against purchase orders, which presented similar challenges due to varying document formats.

The existing process required dispatchers at more than 200 facilities to spend 4–5 hours daily manually processing ship tickets. This consumed valuable human resources and led to delays in processing and potential errors in data entry. The IT team was burdened with constant maintenance and development efforts to keep the unreliable OCR system functioning.

Solution overview

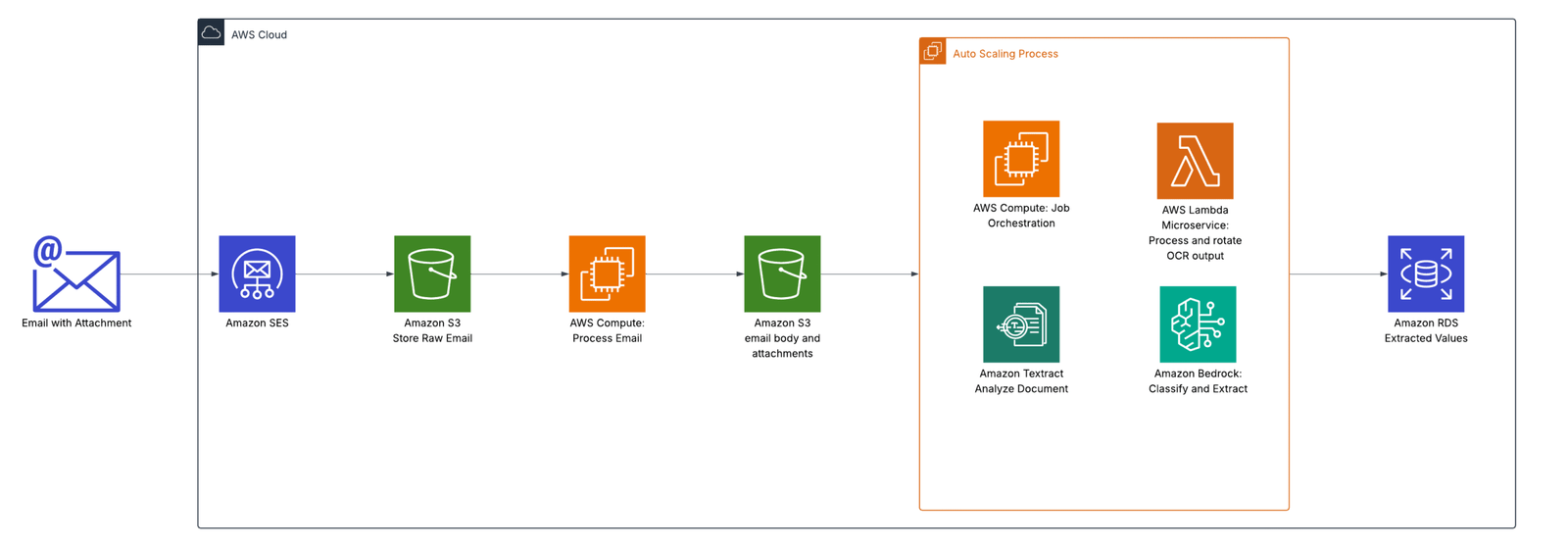

AWS Solutions Architects worked closely with Oldcastle engineers to build a solution addressing these challenges. The end-to-end workflow uses Amazon Simple Email Service (Amazon SES) to receive ship tickets, which are sent directly from drivers in the field. The system processes emails at scale using an event-based architecture centered on Amazon S3 Event Notifications. The workflow sends ship ticket documents to an automatic scaling compute job orchestrator. Documents are processed with the following steps:

- The system sends PDF files to Amazon Textract using the Start Document Analysis API with Layout and Signature features.

- Amazon Textract results are processed by an AWS Lambda microservice. This microservice resolves rotation issues with page text and generates a markdown representation of the text for each page.

- The markdown is passed to Amazon Bedrock, which efficiently extracts key values from the markdown text.

- The orchestrator saves the results to their Amazon Relational Database Service (Amazon RDS) for PostgreSQL database.
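The steps above can be sketched in Python as a set of small helpers. This is an illustration under stated assumptions, not Oldcastle's implementation: the field names in `FIELDS`, the 80% signature confidence threshold, and the prompt wording are all hypothetical, because the post does not publish them. The only AWS call shown is the boto3 Textract `start_document_analysis` operation, which needs AWS credentials to run.

```python
import json

# Hypothetical field names; the post does not list the fields that are extracted.
FIELDS = ("ticket_number", "delivery_date", "customer_name")


def build_textract_request(bucket: str, key: str) -> dict:
    """Parameters for Textract StartDocumentAnalysis with Layout and Signatures."""
    return {
        "DocumentLocation": {"S3Object": {"Bucket": bucket, "Name": key}},
        "FeatureTypes": ["LAYOUT", "SIGNATURES"],
    }


def has_signature(blocks: list, min_confidence: float = 80.0) -> bool:
    """True if any SIGNATURE block meets the (assumed) confidence threshold."""
    return any(
        b.get("BlockType") == "SIGNATURE"
        and b.get("Confidence", 0.0) >= min_confidence
        for b in blocks
    )


def build_extraction_prompt(page_markdown: str, fields=FIELDS) -> str:
    """Ask for only the listed fields as one JSON object to limit output tokens."""
    return (
        "Extract the following fields from the ship ticket below. "
        "Reply with a single JSON object containing only these keys, "
        "using null for any field that is missing: "
        + ", ".join(fields)
        + "\n\n<ticket>\n" + page_markdown + "\n</ticket>"
    )


def parse_extraction(model_text: str, fields=FIELDS) -> dict:
    """Parse the model reply and keep only the expected keys."""
    data = json.loads(model_text)
    return {name: data.get(name) for name in fields}


def start_analysis(bucket: str, key: str) -> str:
    """Submit the PDF to Textract and return the asynchronous job ID."""
    import boto3  # imported lazily so the helpers above run without AWS access

    client = boto3.client("textract")
    response = client.start_document_analysis(**build_textract_request(bucket, key))
    return response["JobId"]
```

Keeping the request and prompt builders as pure functions makes them easy to unit test without calling AWS. Dropping unexpected keys in `parse_extraction` gives the database writer a fixed schema to work with.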

The diagram at the top of this section illustrates the solution architecture.

In this architecture, Amazon Textract is an effective solution to handle large PDF files at scale. The output of Amazon Textract contains the necessary geometries used to calculate rotation and fix layout issues before generating markdown. Quality markdown layouts are critical for Amazon Bedrock in identifying the right key-value pairs from the content. We further optimized cost by extracting only the data needed to limit output tokens and by using Amazon Bedrock batch processing to get the lowest token cost. Amazon Bedrock was used for its cost-effectiveness and its ability to process shipping tickets in varying formats where the fields that need to be extracted are the same.
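One way to calculate rotation from Textract geometry is to look at the top edge of each text line's polygon. The sketch below is an assumption about how such a step could work, not the microservice described in this post. It assumes each polygon is a list of `{"X": ..., "Y": ...}` points in normalized page coordinates, ordered clockwise from the text's own top-left corner, and it only distinguishes quarter turns. The function names are hypothetical.

```python
import math
from collections import Counter


def estimate_rotation_degrees(polygon: list) -> float:
    """Angle of a block's top edge, taken from the first two polygon points.

    Assumes points run clockwise from the text's top-left corner. The
    coordinates are normalized, so the raw angle is only approximate on
    non-square pages; it is precise enough to pick a quarter turn.
    """
    (x0, y0), (x1, y1) = ((p["X"], p["Y"]) for p in polygon[:2])
    return math.degrees(math.atan2(y1 - y0, x1 - x0))


def snap_to_quarter_turn(angle: float) -> int:
    """Round an angle to the nearest of 0, 90, 180, or 270 degrees."""
    return int(round(angle / 90.0)) % 4 * 90


def page_rotation(line_polygons: list) -> int:
    """Majority vote of snapped angles across the lines on a page."""
    if not line_polygons:
        return 0  # nothing to measure, so treat the page as upright
    votes = Counter(
        snap_to_quarter_turn(estimate_rotation_degrees(p)) for p in line_polygons
    )
    return votes.most_common(1)[0][0]
```

Voting across all lines on a page keeps a single skewed or misdetected line from deciding the orientation. Once the page rotation is known, line coordinates can be rotated back to upright before the text is sorted into reading order for the markdown output.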

Results

The implementation using this architecture on AWS brought numerous benefits to Oldcastle:

- Business process improvement – The solution accomplished the following:

  - Alleviated the need for manual processing of ship tickets at each facility

  - Automated document processing with minimal human intervention

  - Improved accuracy and reliability of data extraction

  - Enhanced ability to validate signatures and reject incomplete documents

  - Provided real-time visibility into outstanding PODs and deliveries

- Productivity gains – Oldcastle saw the following benefits:

  - Significantly fewer human hours were spent on manual data entry and document processing

  - Staff had more time for more value-added activities

  - The IT team benefited from reduced development and maintenance efforts

- Scalability and performance – The team experienced the following performance gains:

  - They seamlessly scaled from processing a few thousand documents to 200,000–300,000 documents per month

  - The team observed no performance issues with increased volume

- User satisfaction – The solution improved user sentiment in several ways:

  - High user confidence in the new system due to its accuracy and reliability

  - Positive feedback from business users on the ease of use and effectiveness

- Cost-effective – With this approach, Oldcastle can process documents at less than $0.04 per page
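To put the per-page figure in context, the short calculation below derives a monthly cost ceiling from the numbers quoted in this post. It assumes one page per ship ticket, which the post does not state, so treat the output as a rough upper bound for single-page tickets rather than a reported result. The function name is hypothetical.

```python
def monthly_cost_ceiling(pages_per_month: int, cents_per_page: int = 4) -> float:
    """Upper bound in dollars, given a per-page cost below `cents_per_page`."""
    return pages_per_month * cents_per_page / 100


# Assumption: one page per ship ticket (not stated in the post).
low = monthly_cost_ceiling(200_000)
high = monthly_cost_ceiling(300_000)
print(f"Ceiling at 200,000 pages: ${low:,.0f}")
print(f"Ceiling at 300,000 pages: ${high:,.0f}")
```

Multi-page tickets would raise the page count and the ceiling proportionally.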

Conclusion

With the success of the AWS implementation, Oldcastle is exploring potential expansion to other use cases such as AP invoice processing, W9 form validation, and automated document approval workflows. This strategic move towards AI-powered document processing is positioning Oldcastle for improved efficiency and scalability in its operations.

Review your current manual document processing procedures and identify where intelligent document processing can help you automate these workflows for your business.

About the authors

Erik Cordsen is a Solutions Architect at AWS serving customers in Georgia. He is passionate about applying cloud technologies and ML to solve real life problems. When he is not designing cloud solutions, Erik enjoys travel, cooking, and cycling.

Sourabh Jain is a Senior Solutions Architect with over 8 years of experience developing cloud solutions that drive better business outcomes for organizations worldwide. He specializes in architecting and implementing robust cloud software solutions, with extensive experience working alongside global Fortune 500 teams across diverse time zones and cultures.

Avdhesh Paliwal is an accomplished Application Architect at Oldcastle APG with 29 years of extensive ERP experience. His expertise spans Manufacturing, Supply Chain, and Human Resources modules, with a proven track record of designing and implementing enterprise solutions that drive operational efficiency and business value.
