Books, Courses & Certifications

We Are Only Beginning to Understand How to Use AI – O’Reilly

I remember once flying to a meeting in another country and working with a group of people to annotate a proposed standard. The convener projected a Word document on the screen and people called out proposed changes, which were then debated in the room before being adopted or adapted, added or subtracted. I kid you not.

I don’t remember exactly when this was, but I know it was after the introduction of Google Docs in 2005, because I do remember being completely baffled and frustrated that this international standards organization was still stuck somewhere in the previous century.

You may not have experienced anything this extreme, but many people will remember the days of sending around Word files as attachments and then collating and comparing multiple divergent versions. And this behavior also persisted long after 2005. (Apparently, this is still the case in some contexts, such as in parts of the U.S. government.) If you aren’t old enough to have experienced that, consider yourself lucky.

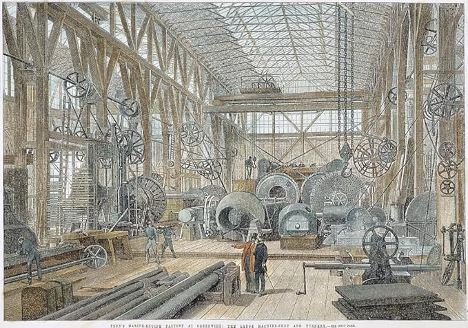

This is, in many ways, the point of Arvind Narayanan and Sayash Kapoor’s essay “AI as Normal Technology.” There is a long gap between the invention of a technology and a true understanding of how to apply it. One of the canonical examples came at the end of the Second Industrial Revolution. When first electrified, factories duplicated the design of factories powered by coal and steam, where immense central boilers and steam engines distributed mechanical power to various machines by complex arrangements of gears and pulleys. The steam engines were replaced by large electric motors, but the layout of the factory remained unchanged.

Only over time were factories reconfigured to take advantage of small electric motors that could be distributed throughout the factory and incorporated into individual specialized machines. As I discussed last week with Arvind Narayanan, there are four stages to every technology revolution: the invention of new technology; the diffusion of knowledge about it; the development of products based on it; and adaptation by consumers, businesses, and society as a whole. All this takes time. I love James Bessen’s framing of this process as “learning by doing.” It takes time to understand how best to apply a new technology, to search the possible for its possibleness. People try new things, show them to others, and build on them in a marvelous kind of leapfrogging of the imagination.

So it is no surprise that in 2005 files were still being sent around by email, and that one day a small group of inventors came up with a way to realize the true possibilities of the internet and built an environment where a file could be shared in real time by a set of collaborators, with all the mechanisms of version control present but hidden from view.

On next Tuesday’s episode of Live with Tim O’Reilly, I’ll be talking with that small group—Sam Schillace, Steve Newman, and Claudia Carpenter—whose company Writely was launched in beta 20 years ago this month. Writely was acquired by Google in March of 2006 and became the basis of Google Docs.

In that same year, Google also reinvented online maps, spreadsheets, and more. It was a year that some fundamental lessons of the internet—already widely available since the early 1990s—finally began to sink in.

Remembering this moment matters a lot, because we are at a similar point today, where we think we know what to do with AI but are still building the equivalent of factories with huge centralized engines rather than truly searching out the possibility of its deployed capabilities. Ethan Mollick recently wrote a wonderful essay about the opportunities (and failure modes) of this moment in “The Bitter Lesson Versus the Garbage Can.” Do we really begin to grasp what is possible with AI or just try to fit it into our old business processes? We have to wrestle with the angel of possibility and remake the familiar into something that at present we can only dimly imagine.

I’m really looking forward to talking with Sam, Steve, Claudia, and those of you who attend, to reflect not just on their achievement 20 years ago but also on what it can teach us about the current moment. I hope you can join us.

AI tools are quickly moving beyond chat UX to sophisticated agent interactions. Our upcoming AI Codecon event, Coding for the Agentic World, will highlight how developers are already using agents to build innovative and effective AI-powered experiences. We hope you’ll join us on September 9 to explore the tools, workflows, and architectures defining the next era of programming. It’s free to attend. Register now to save your seat.

Gies College of Business Wins the Coursera Trailblazer Award for Online Education: Voices of Learners at Gies

Gies College of Business at the University of Illinois has been honored with the Trailblazer Award at the September 2025 Coursera Connect conference, recognizing its bold leadership in reshaping online education. From launching the very first degree with Coursera to pioneering tools like Coursera Coach and AI avatars, Gies has consistently pushed the boundaries of what’s possible in accessible, high-quality business education.

Today, learners around the world can pursue three Gies Business master’s degrees online in conjunction with Coursera: the Master of Business Administration (iMBA), Master of Science in Management (iMSM), and Master of Science in Accountancy (iMSA). They can also access stackable open content in AI, reflecting the school’s commitment to staying innovative and meeting students wherever they are. Yet the truest reflection of this trailblazing spirit comes from its global learners, whose voices showcase the impact, community, and transformative nature that define Gies Business.

Flexible Learning for Diverse Lifestyles

Chelsea Doman, a single mother of four, found the Gies iMBA to be the perfect fit for her busy life. “When I saw Illinois had an online MBA with Coursera, it was like the heavens opened,” she says. The program’s structure allowed her to balance family, work, and studies without the need for a GMAT score. Chelsea emphasizes the value of learning for personal growth, stating, “I did it for the joy of learning, a longtime goal, a holistic business understanding.”

Similarly, Tom Fail, who pursued the iMBA while working in EdTech, appreciated the program’s blend of online flexibility and in-person networking opportunities. He notes, “With this program, you get to blend the benefits of networking and having a hands-on experience that you’d get from an in-person program, with the flexibility of an online program.”

Global Community and Real-World Application

Ishpinder Kailey, an Australia-based learner, leveraged the iMBA to transition from a background in chemistry to a leadership role in business strategy. “This degree gave me the confidence to lead my business from the front.” She also highlights the program’s global reach and collaborative environment: “The professors are exceptional, and the peer learning from a global cohort is a tremendous asset.”

Joshua David Tarfa, a Nigeria-based senior product manager who recently graduated from the 12-month MS in Management (iMSM) degree program, found immediate applicability of his coursework to his professional role. He shares, “From the very beginning, I could apply what I was learning. I remember taking a strategic management course and using concepts from it immediately at work.”

A Commitment to Lifelong Learning and Connection

For many learners, the Gies experience extends far beyond graduation. Dennis Harlow describes how the iMSA program connected him to a global network: “Even though it’s online, I felt like I was part of a community. The connections I made with peers and instructors have been amazing.” Similarly, Patrick Surrett emphasizes the flexibility and ongoing engagement of the program: “I believe in lifelong learning and this program made it possible. It truly is online by design.” Together, their experiences reflect Gies’ dedication to fostering lifelong learning and a supportive global community.

Online with Gies Business: A Degree That Works for You

Congratulations to Gies College of Business on earning the esteemed Trailblazer Award. This recognition reflects not only the school’s pioneering approach to online education but also the achievements and experiences of its learners. From balancing family and careers to pursuing personal growth and professional impact, Gies students around the world continue to thrive in a flexible, rigorous, and supportive environment. Their stories are a testament to the community, innovation, and opportunity that define what it means to learn with Gies.

Interested in an online degree with the University of Illinois Gies College of Business? Check out the programs here→

10 generative AI certifications to grow your skills

AWS Certified AI Practitioner

Amazon’s AWS Certified AI Practitioner certification validates your knowledge of AI, machine learning (ML), and generative AI concepts and use cases, as well as your ability to apply those in an enterprise setting. The exam covers the fundamentals of AI and ML, generative AI, applications of foundation models, and guidelines for responsible use of AI, as well as security, compliance, and governance for AI solutions. It’s targeted at business analysts, IT support professionals, marketing professionals, product and project managers, line-of-business or IT managers, and sales professionals. It’s an entry-level certification and there are no prerequisites to take the exam.

Cost: $100

Certified Generative AI Specialist (CGAI)

Offered through the Chartered Institute of Professional Certifications, the Certified Generative AI Specialist (CGAI) certification is designed to teach you the in-depth knowledge and skills required to be successful with generative AI. The course covers principles of generative AI, data acquisition and preprocessing, neural network architectures, natural language processing (NLP), image and video generation, audio synthesis, and creative AI applications. On completing the learning modules, you will need to pass a chartered exam to earn the CGAI designation.

Enhance video understanding with Amazon Bedrock Data Automation and open-set object detection

In real-world video and image analysis, businesses often face the challenge of detecting objects that weren’t part of a model’s original training set. This becomes especially difficult in dynamic environments where new, unknown, or user-defined objects frequently appear. For example, media publishers might want to track emerging brands or products in user-generated content; advertisers need to analyze product appearances in influencer videos despite visual variations; retail providers aim to support flexible, descriptive search; self-driving cars must identify unexpected road debris; and manufacturing systems need to catch novel or subtle defects without prior labeling.

In all these cases, traditional closed-set object detection (CSOD) models, which recognize only a fixed list of predefined categories, fail to deliver. They either misclassify unknown objects or ignore them entirely, limiting their usefulness for real-world applications.

Open-set object detection (OSOD) is an approach that enables models to detect both known and previously unseen objects, including those not encountered during training. It supports flexible input prompts, ranging from specific object names to open-ended descriptions, and can adapt to user-defined targets in real time without requiring retraining. By combining visual recognition with semantic understanding, often through vision-language models, OSOD lets users query the system broadly, even when the target is unfamiliar, ambiguous, or entirely new.

In this post, we explore how Amazon Bedrock Data Automation uses OSOD to enhance video understanding.

Amazon Bedrock Data Automation and video blueprints with OSOD

Amazon Bedrock Data Automation is a cloud-based service that extracts insights from unstructured content such as documents, images, video, and audio. For video content specifically, it supports chapter segmentation, frame-level text detection, chapter-level classification using Interactive Advertising Bureau (IAB) taxonomies, and frame-level OSOD. For more information about Amazon Bedrock Data Automation, see Automate video insights for contextual advertising using Amazon Bedrock Data Automation.

Amazon Bedrock Data Automation video blueprints support OSOD at the frame level. You can input a video along with a text prompt specifying the objects to detect. For each frame, the model outputs a dictionary containing bounding boxes in XYWH format (the x and y coordinates of the top-left corner, followed by the width and height of the box), along with corresponding labels and confidence scores. You can further customize the output based on your needs, for instance by keeping only high-confidence detections when precision is the priority.

The input text is highly flexible, so you can define dynamic fields in the Amazon Bedrock Data Automation video blueprints powered by OSOD.
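As a rough illustration of the post-processing described above, the following sketch filters one frame's detections by confidence and converts XYWH boxes to corner coordinates. The `Detection` class, its field names, the pixel units, and the 0.8 threshold are all assumptions made for this example; they are not the service's actual output schema, which should be checked against real output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected object in a frame (hypothetical structure for illustration)."""
    label: str
    confidence: float
    x: float  # left edge of the box (assumed to be in pixels)
    y: float  # top edge of the box
    w: float  # box width
    h: float  # box height

    def to_xyxy(self):
        """Convert XYWH to (left, top, right, bottom) corner coordinates."""
        return (self.x, self.y, self.x + self.w, self.y + self.h)


def filter_detections(detections, min_confidence=0.8):
    """Keep only detections at or above the confidence threshold."""
    return [d for d in detections if d.confidence >= min_confidence]


# Made-up detections for a single frame.
frame = [
    Detection("apple", 0.93, 100, 200, 300, 250),
    Detection("apple", 0.41, 600, 550, 100, 100),
    Detection("banana", 0.85, 500, 100, 200, 400),
]

kept = filter_detections(frame, min_confidence=0.8)
for d in kept:
    print(d.label, d.confidence, d.to_xyxy())
```

Raising the threshold trades recall for precision, so the right value depends on whether missed objects or false alarms are more costly in a given workflow.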

Example use cases

In this section, we explore some examples of different use cases for Amazon Bedrock Data Automation video blueprints using OSOD. The following table summarizes the functionality of this feature.

| Functionality | Sub-functionality | Examples |
|---|---|---|
| Multi-granular visual comprehension | Object detection from fine-grained object reference | "Detect the apple in the video." |
| | Object detection from cross-granularity object reference | "Detect all the fruit items in the image." |
| | Object detection from open questions | "Find and detect the most visually important elements in the image." |
| Visual hallucination detection | Identify and flag object mentionings in the input text that do not correspond to actual content in the given image. | "Detect if apples appear in the image." |

Ads analysis

Advertisers can use this feature to compare the effectiveness of ad placement strategies across different locations and run A/B tests to identify the most effective advertising approach. For example, the following image is the output in response to the prompt “Detect the locations of echo devices.”

Smart resizing

By detecting key elements in the video, you can choose appropriate resizing strategies for devices with different resolutions and aspect ratios, making sure important visual information is preserved. For example, the following image is the output in response to the prompt “Detect the key elements in the video.”
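One possible resizing strategy, sketched under stated assumptions: take the XYWH boxes of the detected key elements, compute their union, and center the largest crop of the target aspect ratio on it. The function names and the sample boxes are hypothetical, and this is only one heuristic among many. If the union is wider or taller than the crop, some elements will still be cut off.

```python
def union_box(boxes):
    """Smallest XYWH box that contains every XYWH box in the list."""
    left = min(x for x, y, w, h in boxes)
    top = min(y for x, y, w, h in boxes)
    right = max(x + w for x, y, w, h in boxes)
    bottom = max(y + h for x, y, w, h in boxes)
    return (left, top, right - left, bottom - top)


def crop_for_aspect(boxes, frame_w, frame_h, target_aspect):
    """Return an XYWH crop with width / height == target_aspect.

    The crop is the largest one of that shape that fits in the frame,
    centered on the detected elements and clamped to the frame edges.
    """
    if frame_w / frame_h > target_aspect:
        # Frame is wider than the target: keep full height, trim width.
        crop_h = frame_h
        crop_w = frame_h * target_aspect
    else:
        # Frame is narrower than the target: keep full width, trim height.
        crop_w = frame_w
        crop_h = frame_w / target_aspect

    ux, uy, uw, uh = union_box(boxes)
    center_x = ux + uw / 2
    center_y = uy + uh / 2

    # Center the crop on the key elements, then clamp it inside the frame.
    x = min(max(center_x - crop_w / 2, 0), frame_w - crop_w)
    y = min(max(center_y - crop_h / 2, 0), frame_h - crop_h)
    return (x, y, crop_w, crop_h)


# Made-up key-element boxes in a 1920x1080 frame, cropped to vertical 9:16.
key_boxes = [(1200, 300, 200, 400), (1300, 500, 100, 100)]
crop = crop_for_aspect(key_boxes, 1920, 1080, 9 / 16)
print(crop)
```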

Surveillance with intelligent monitoring

In home security systems, producers or users can take advantage of the model’s high-level understanding and localization capabilities to maintain safety, without the need to manually enumerate all possible scenarios. For example, the following image is the output in response to the prompt “Check dangerous elements in the video.”

Custom labels

You can define your own labels and search through videos to retrieve specific, desired results. For example, the following image is the output in response to the prompt “Detect the white car with red wheels in the video.”

Image and video editing

With flexible text-based object detection, you can accurately remove or replace objects in photo editing software, minimizing the need for imprecise, hand-drawn masks that often require multiple attempts to achieve the desired result. For example, the following image is the output in response to the prompt “Detect the people riding motorcycles in the video.”

Sample video blueprint input and output

The following example demonstrates how to define an Amazon Bedrock Data Automation video blueprint to detect visually prominent objects at the chapter level, with sample output including objects and their bounding boxes.

The following code is our example blueprint schema:

The following code is our example video custom output:

For the full example, refer to the following GitHub repo.

Conclusion

The OSOD capability within Amazon Bedrock Data Automation significantly enhances the ability to extract actionable insights from video content. By combining flexible text-driven queries with frame-level object localization, OSOD helps users across industries implement intelligent video analysis workflows, ranging from targeted ad evaluation and security monitoring to custom object tracking. Integrated into the broader suite of video analysis tools available in Amazon Bedrock Data Automation, OSOD not only streamlines content understanding but also helps reduce the need for manual intervention and rigid predefined schemas, making it a powerful asset for scalable, real-world applications.

To learn more about Amazon Bedrock Data Automation video and audio analysis, see New Amazon Bedrock Data Automation capabilities streamline video and audio analysis.

About the authors

Dongsheng An is an Applied Scientist at AWS AI, specializing in face recognition, open-set object detection, and vision-language models. He received his Ph.D. in Computer Science from Stony Brook University, focusing on optimal transport and generative modeling.

Lana Zhang is a Senior Solutions Architect in the AWS World Wide Specialist Organization AI Services team, specializing in AI and generative AI with a focus on use cases including content moderation and media analysis. She’s dedicated to promoting AWS AI and generative AI solutions, demonstrating how generative AI can transform classic use cases by adding business value. She assists customers in transforming their business solutions across diverse industries, including social media, gaming, ecommerce, media, advertising, and marketing.

Raj Jayaraman is a Senior Generative AI Solutions Architect at AWS, bringing over a decade of experience in helping customers extract valuable insights from data. Specializing in AWS AI and generative AI solutions, Raj’s expertise lies in transforming business solutions through the strategic application of AWS’s AI capabilities, ensuring customers can harness the full potential of generative AI in their unique contexts. With a strong background in guiding customers across industries in adopting AWS Analytics and Business Intelligence services, Raj now focuses on assisting organizations in their generative AI journey—from initial demonstrations to proof of concepts and ultimately to production implementations.
