Tools & Platforms

Vanderbilt Law Announces Inaugural AI Governance Symposium - Vanderbilt Law School

Vanderbilt Law School is pleased to announce the inaugural Vanderbilt AI Governance Symposium, to be held on Tuesday, October 21, 2025, on campus at Vanderbilt Law School.

Hosted by the Vanderbilt AI Law Lab (VAILL), this landmark event will bring together a stellar lineup of experts from industry, academia, government, and beyond to explore issues of AI accountability, transparency, and governance.

“Whether you work in the tech sector, academia, government, or legal practice, this is an essential event for anyone operating at the intersection of AI and society,” said Mark Williams, VAILL Co-Director and Professor of the Practice of Law.

Panels featuring policymakers, practitioners, and academics will discuss governance frameworks, AI’s environmental impacts, developments in AI policy, teaching AI subject matter, and more. The event concludes with a networking reception.

The symposium was organized by Williams; Sean Perryman, Adjunct Professor of Law; and Asad Ramzanali, Director of Artificial Intelligence & Technology Policy at the Vanderbilt Policy Accelerator.

There are multiple lodging options around Vanderbilt’s campus. Nearby accommodations include the Aertson Midtown, Graduate Hotel, Loews Vanderbilt, Marriott Vanderbilt, Hotel Fraye, Virgin Nashville, and Courtyard Vanderbilt/West End.

To register for the symposium, please visit the registration section of the event page.

For additional details, schedule, logistics, and updates, please refer to the main event page.


Industrial AI in manufacturing to hit USD 153.9 billion by 2030

The global industrial artificial intelligence (AI) market is projected to grow from USD 43.6 billion in 2024 to USD 153.9 billion by 2030, a compound annual growth rate (CAGR) of 23%, according to new analysis from IoT Analytics.
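The two endpoints are consistent with the stated growth rate. As a quick sanity check, here is a minimal Python sketch of the standard CAGR formula, (end / start) ** (1 / years) - 1, applied to the 2024 and 2030 values. The `cagr` helper is a hypothetical name introduced for illustration, not something taken from the IoT Analytics report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Return the compound annual growth rate between two values.

    CAGR = (end_value / start_value) ** (1 / years) - 1
    """
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints from the projection, in USD billions; 2024 -> 2030 spans six years.
rate = cagr(43.6, 153.9, 2030 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 23.4%"

# Compound the rounded 23% rate forward from the 2024 base for comparison.
projected_2030 = 43.6 * (1 + 0.23) ** 6
print(f"2030 value at a flat 23%: {projected_2030:.1f}")
```

The implied rate is about 23.4%, which rounds to the published 23%. Compounding the rounded rate forward lands near USD 151 billion rather than exactly 153.9 billion; the gap comes only from rounding the published rate, and both figures fit the "surpass $150 billion by 2030" framing quoted below.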

Findings from the Industrial AI Market Report 2025–2030 show that although spending on industrial AI currently accounts for just 0.1% of manufacturing sector revenue, there has been a notable shift in strategic focus toward areas including industrial data management, quality inspection, edge AI, industrial copilots, employee training and upskilling, and initial trials of agentic AI.

Strategic shift

Analysis by IoT Analytics indicates that AI in manufacturing is now moving beyond pilot phases and isolated projects. Adoption is becoming a CEO-level priority, with a greater share of resources allocated to modernising data architectures and scaling use cases already proven in areas such as quality inspection.

Knud Lasse Lueth, CEO at IoT Analytics, comments that “Industrial AI reached $43.6 billion in 2024 and is set to surpass $150 billion by 2030. While spending remains below 10% of manufacturing R&D or IT budgets, it is increasingly driven by CEO-level strategies. The focus has shifted to modernizing data architectures and scaling proven use cases such as quality inspection. Following the wave of industrial copilots launched in 2024 and 2025, edge AI and agentic AI are now emerging as the next areas of attention going into 2026.”

IoT Analytics’ report outlines that manufacturers are adjusting their approaches after early AI trials in previous years often produced disappointment due to unclear business cases or insufficient value creation. The report highlights a sea change as end-user awareness and AI education improve across the sector.

Technological drivers

According to the research, key technological drivers now include high-performance data architectures, the expansion of edge AI solutions, and the deployment of AI “copilots” to support operational decisions and workflows on the manufacturing floor. Manufacturers are also focusing on workforce training to ensure employees can adapt to and manage new AI-enabled solutions.

Fernando Brügge, Senior Analyst at IoT Analytics, adds that “Industrial AI is finally taking off. Today, we see AI becoming a central part of manufacturers’ strategies. Back in 2021, this was far from the case: many companies struggled to identify a business case or derive real value from early deployments, and a good number were left disappointed when promised outcomes did not materialize. Now, we are in a very different position: the infrastructure is in place, end-user awareness and education have improved, and the technology is beginning to deliver tangible benefits. These factors are driving manufacturers to develop their own industrial AI strategies. In the years ahead, industrial AI will not just support operations but increasingly shape how machines are designed, how supply chains are managed, and how factories compete.”

The IoT Analytics report details how the quality inspection segment is already seeing AI-driven enhancements, with computer vision and machine learning being used for defect detection, predictive maintenance, and optimising production processes. The emergence of edge AI – AI processing located on or near the physical equipment – is also highlighted as a next step for manufacturers seeking to reduce data latency and increase operational autonomy.

Next areas of attention

Expert commentary in the report signals that following the adoption of industrial copilots in 2024 and 2025, attention is now shifting to the potential of agentic AI. This approach enables autonomous agents, supported by advanced machine learning, to perform decision-making tasks and optimise industrial processes without direct human intervention.

The report emphasises the relatively modest proportion of current manufacturing budgets dedicated to AI, but points to evidence of increasing investment driven by strategic objectives at the top levels of industrial organisations.

IoT Analytics’ findings also suggest that the maturation of AI use in manufacturing is a result of both infrastructure readiness and improved education, making it possible for manufacturing firms to extract measurable benefits from their AI deployments.

The report and the accompanying public analysis provide further detail on ten key insights into AI's transformation of the manufacturing industry and are available from IoT Analytics.


New venture competition brings AI startup craze to Waco

By Josh Siatkowski | Staff Writer

There are 498 private AI companies valued at over $1 billion. A new initiative in the Hankamer School of Business has many hoping number 499 comes from Baylor.

“Think you’ve got the next big idea in AI?” asks the flyer for Baylor’s inaugural AI Venture Challenge, a Shark Tank-style startup competition in which Baylor students can win up to $3,000 in funding for their AI business plans.

The challenge came from the desk of Business School Dean Dr. David Szymanski, who, in his first year at Baylor, has pushed for the adoption of AI across all business disciplines. Working alongside the entrepreneurship department, Szymanski wanted to lead an initiative that combines the school's top-10 entrepreneurship program with the popular technology.

“We have a top entrepreneurship and innovation program,” Szymanski said. “And so what you really want to do is ask the question, ‘What would a leader in this field do?’… It’s about being out in front, seeing things differently and seeing opportunities in the marketplace that other people don’t.”

For Szymanski, the answer is AI. From an institutional perspective, staying ahead of the workplace skills of the future is essential, Szymanski said. And on the student side, it’s a way to build AI proficiency and a chance to get a business off the ground.

Students with an idea must submit their business plans through a survey by Sept. 30. According to Dr. Lee Grumbles, assistant clinical professor in the entrepreneurship and corporate innovation department, the only requirements are that each team consists of one to three students, that all students are undergraduates and that the business is AI-based.

Following the initial questionnaire, the top 10 teams will advance to the final round on Oct. 21, which will involve a presentation before a panel of two entrepreneurship professors and one industry professional. About 25 teams have already expressed interest, but Grumbles said he’d like to see 75 or 100 teams apply and turn the challenge into a recurring event.

Students outside the business school are welcome, even encouraged, to apply, and Grumbles will meet with computer science students in their classes throughout the week. There's also no limit on how developed a business plan can be: the submission can be a day-old idea or a more developed, already existing business.

“The term startup is fairly broad,” Grumbles said. “Companies that have been in business for two years are still considered new ventures. They can be brand new startup ideas that are still in the vetting stage, or ones that already have LLCs formed.”

Though the challenge itself is unique to Baylor, the push to adopt AI in entrepreneurship is well underway. There are already more than 10,000 AI startups worldwide, and the funding keeps coming. Of these, 1,300 have already crossed the $100 million valuation threshold, and new self-made billionaires are popping up like never before.

The funding for Baylor’s challenge might not make any billionaires, but winners will split a prize of $5,000, which Grumbles said is for funding each team’s venture. First prize will be awarded $3,000, while second and third will win $1,500 and $500, respectively. However, the flyer states that winnings can be paid in the form of a scholarship.

As AI adoption accelerates, Szymanski hopes competitions and other AI initiatives become not just a new experience for Baylor students but the norm.

“I think what we’ll see in another three to six months is that we’re probably not going to have these separate conversations about AI,” Szymanski said. “It’s going to be a part of what we are and part of what we do.”


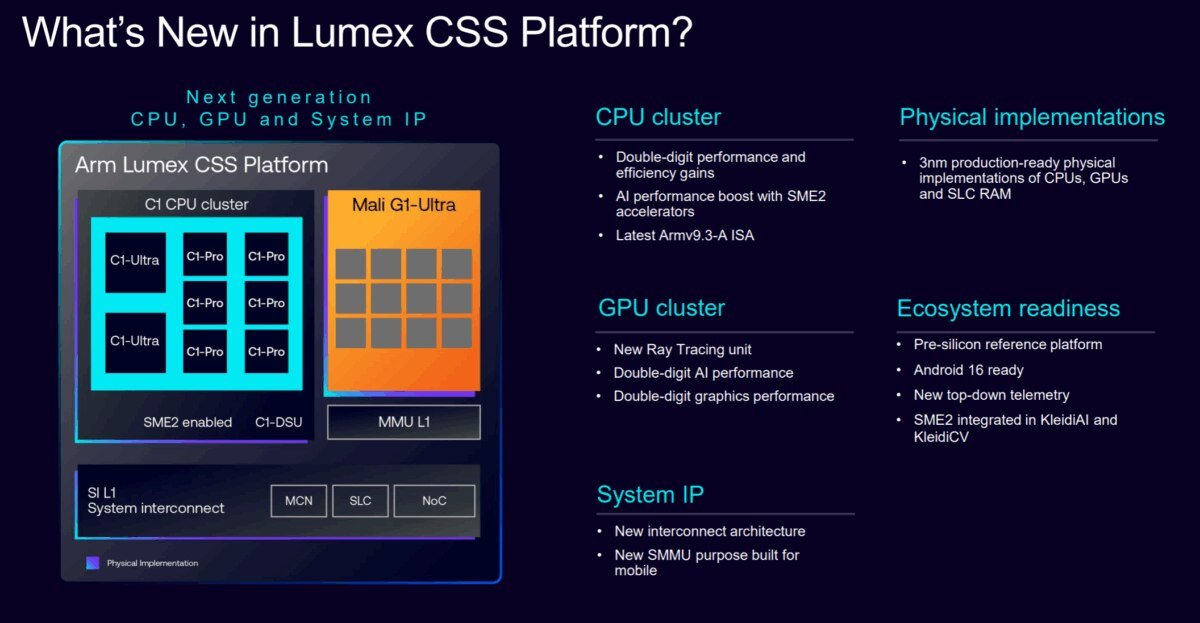

Accelerating Development Cycles and Scalable, High-Performance On-Device AI with New Arm Lumex CSS Platform

New platform tightly integrates hardware and software to speed time-to-market and meet the growing demands of on-device AI experiences across the ecosystem.

Mobile devices are evolving into AI-powered tools that adapt, anticipate, and enhance how we interact with the world. However, as on-device AI becomes more sophisticated, the pressure on mobile silicon is intensifying.

Accelerated product cycles – where each new generation of flagship mobile devices arrives faster than the last – mean silicon providers and OEMs need to deliver innovation on tighter timescales with less margin for error. Advanced packaging techniques that sustain AI performance can be challenging to achieve in area- and thermally constrained mobile form factors. Finally, the move to smaller process nodes, such as 3nm, introduces steep design complexity.

This is exactly why Arm introduced integrated platforms that combine Arm CPU and GPU IP with physical implementations and ready-to-deploy software stacks to speed time-to-market and deliver best-in-class performance on the latest process nodes. The next evolution is Arm Lumex: our new purpose-built compute subsystem (CSS) platform to meet the growing demands of on-device AI experiences on flagship mobile and PC devices.

Re-Designed for the AI-first Era

Lumex brings the latest co-designed, co-optimized Arm compute IP and its advanced features together in a modular, highly configurable platform:

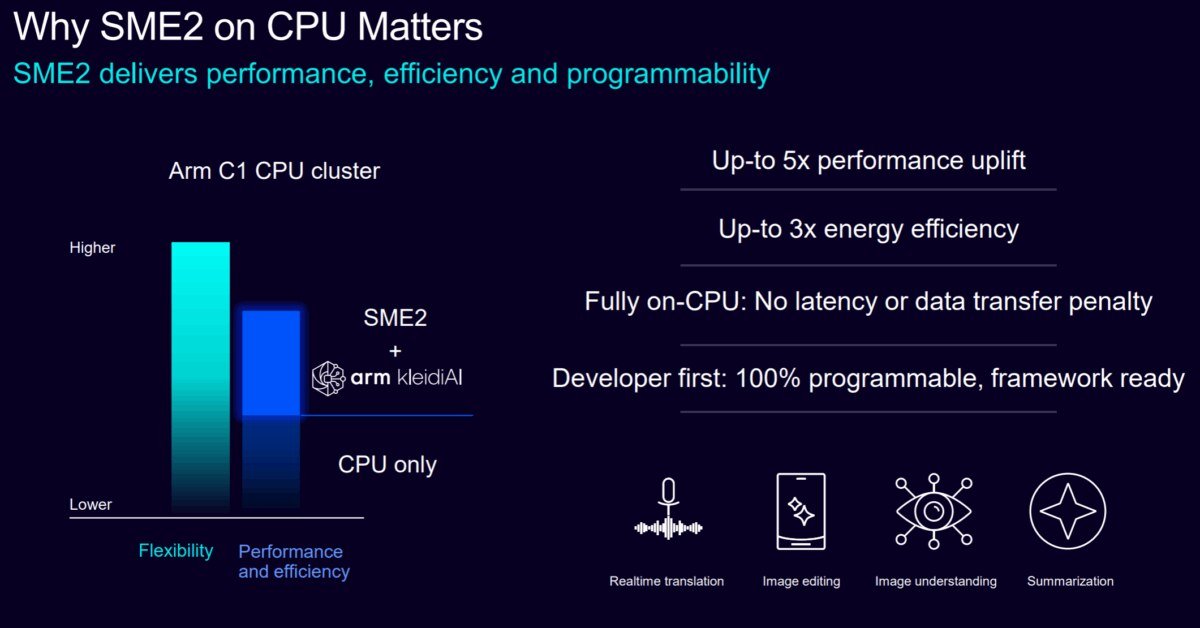

Accelerated Real-World AI Performance Across CPU and GPU Technologies

On the CPU side, the SME2-enabled Armv9.3 C1 CPU cluster, combined with Arm KleidiAI's native support for leading frameworks and runtime libraries, delivers a significant speed-up across a range of AI workloads, including classic machine learning (ML) inference, speech, and generative AI, compared to the previous-generation CPU cluster under the same conditions. This comes alongside a 5x AI performance uplift and a 3x improvement in efficiency. These SME2-enabled improvements mean smoother AI-based interactions and longer battery life on users' favorite consumer devices.

Moreover, thanks to micro-architectural improvements and tighter integration across cores, the C1 CPU cluster sets a new bar for performance and efficiency, including:

- An average 30 percent performance uplift across six industry-leading performance benchmarks compared to the previous-generation CPU cluster under the same conditions;

- An average 15 percent speed-up across leading applications, including gaming and video streaming, compared to the previous-generation CPU cluster under the same conditions;

- An average 12 percent reduction in power across daily mobile workloads, like video playback, social media, and web browsing, compared to the previous-generation CPU cluster under the same conditions; and,

- Double-digit IPC (Instructions per Cycle) performance gains through the Arm C1-Ultra CPU compared to the previous-generation Arm Cortex-X925 CPU.

Mali G1-Ultra pushes AI performance and efficiency even further, with 20 percent faster inference across AI and ML networks compared to the previous-generation Arm Immortalis-G925 GPU.

For gaming, the Mali G1-Ultra delivers a 2x uplift in ray tracing performance for high-end, desktop-class visuals on mobile thanks to a new Arm Ray Tracing Unit v2 (RTUv2). This is alongside 20 percent higher graphics performance across key industry benchmarks and gaming applications, including Arena Breakout, Fortnite, Genshin Impact, and Honkai Star Rail.

The Scalable System Backbone of Lumex

However, to support AI-first experiences, the mobile system-on-chip (SoC) must evolve across the entire interconnect and memory architecture, and not just the compute IP.

This is why we’re introducing a new scalable System Interconnect, optimized to meet the bandwidth and latency demands of AI and other compute-heavy workloads. This helps ensure leading performance on Lumex without compromising system responsiveness. The new SI L1 System Interconnect features the industry’s most advanced and area-efficient system-level cache (SLC), with a 71 percent leakage reduction compared to a standard compiled RAM to minimize idle power consumption.

For our partners, the System Interconnect delivers a highly flexible, scalable solution that can be optimized for different power, performance, and area (PPA) needs across a wide range of mobile and consumer devices. The SI L1 System Interconnect targets flagship mobile devices, with a fully integrated optional SLC and support for the Arm Memory Tagging Extension (MTE) to deliver best-in-class security. Meanwhile, the Arm NoC S3 Network-on-Chip Interconnect (NoC S3) is targeted at cost-sensitive and non-coherent mobile systems.

Alongside the new Interconnect, we are also introducing the next-gen Arm MMU L1 System Memory Management Unit, which provides secure, cost-efficient virtualization that scales across a broad range of mobile and consumer devices.

Unlocking Industry-Leading PPA with Physical Implementations

Lumex provides production-ready CPU and GPU implementations that are optimized for 3nm and available on multiple foundries, allowing our silicon and OEM partners to:

- Use the implementations as flexible building blocks, so they can focus on differentiation at the CPU and GPU cluster level;

- Achieve compelling frequency and PPA; and,

- Help ensure first-time silicon success when transitioning to the latest 3nm process node.

To unlock the full potential of Lumex, developers need early access to its capabilities ahead of actual device availability. That’s why we’re introducing a broad range of new software and tools, so developers can prototype and build their AI workloads and use the full AI capabilities of the Lumex CSS platform now. These include:

- A complete Android 16-ready software stack, from trusted firmware to the application layer;

- Full, freely available SME2-enabled KleidiAI libraries; and

- New top-down telemetry to analyze app performance, identify bottlenecks, and optimize algorithms.

Following a highly successful first year, KleidiAI is now integrated into all major AI frameworks and already shipping across a broad range of applications, devices, and system services, including Android. This work means that everything is ready, so when Lumex-based devices hit the market in the coming months, applications will immediately experience performance and efficiency uplifts for their AI workloads.

On the graphics side, with RenderDoc support coming in future Android releases and unified observability tools like Vulkan counters, Streamline, and Perfetto available through Lumex, developers can analyze workloads in real time, fine-tune for latency, and balance battery and visual quality with precision.

Laying the Foundation for the Next Generation of Mobile Intelligence

Mobile computing is entering a new era that is defined by how intelligence is built, scaled, and delivered. As AI becomes foundational to every experience, platforms must predict, adapt, scale, and accelerate what comes next.

Lumex is designed with that future in mind, with benefits across the entire ecosystem. From OEMs building and scaling innovative devices to developers creating next-gen apps, Lumex makes it easier for our ecosystem to deliver differentiated AI-first platforms and experiences faster at scale, with more intelligent performance.
