Tools & Platforms

US Defense Department accelerates AI adoption with contracts to several genAI vendors – Computerworld

The Chief Digital and Artificial Intelligence Office (CDAO) is also providing access to all four companies’ genAI models for general-purpose use across multiple defense organizations, including the Office of the Secretary of Defense, and will provide wider access via embedded AI models within Department of Defense (DoD) enterprise data and AI platforms. It is also partnering with the General Services Administration (GSA) to bring AI technologies to the US government as a whole, while aiming to control the costs of AI production and required computing resources through combined buying power.

“The adoption of AI is transforming the Department’s ability to support our warfighters and maintain strategic advantage over our adversaries,” Chief Digital and AI Officer Doug Matty said in a statement. “Leveraging commercially available solutions into an integrated capabilities approach will accelerate the use of advanced AI as part of our Joint mission essential tasks in our warfighting domain as well as intelligence, business, and enterprise information systems.”

In a separate announcement, xAI today unveiled Grok for Government, a suite of products for US federal, state, local, and national security customers. In addition to what’s in the company’s commercial offerings, it includes custom models, additional support, custom AI-powered applications, and soon, models in classified and other restricted environments.

Is The Tech Market Going Nuts? – JOSH BERSIN

Today we learned that OpenAI just signed a five-year deal with Oracle to spend $300 billion on data centers (five times Oracle’s current annual revenue), which, spread over five years, more or less doubles Oracle’s annualized revenue in one transaction. Oracle’s stock jumped about 35%.

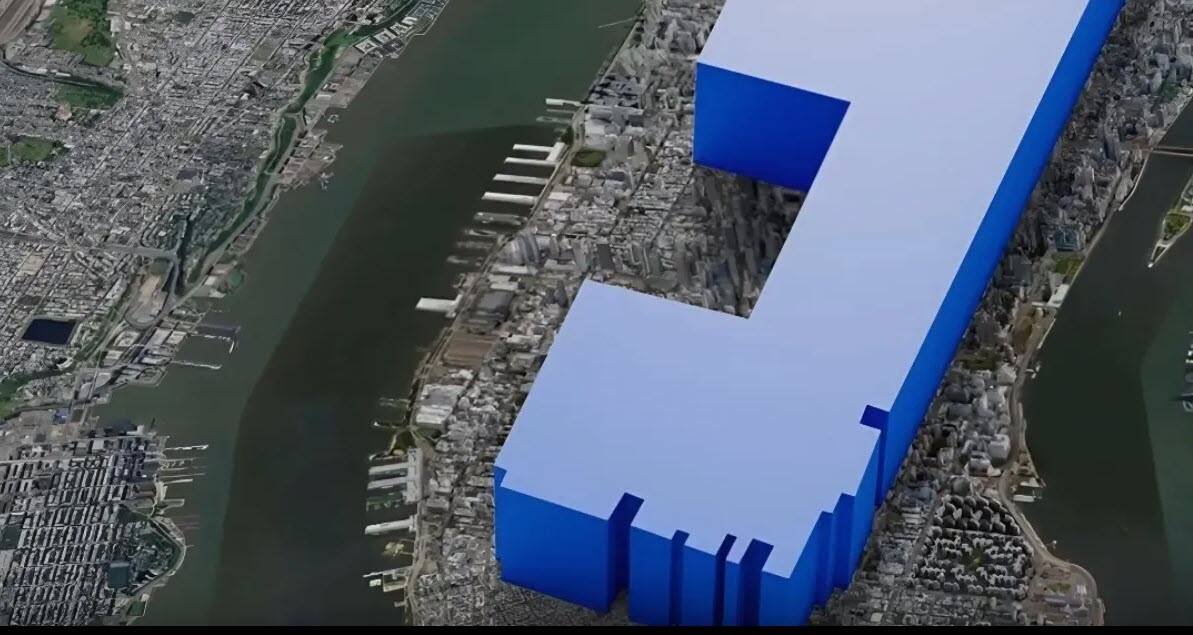

This $300 billion deal can now be added to the more than $450 billion cited by Meta, Amazon, Microsoft, and Google, for a total of roughly $750 billion allocated this year to AI infrastructure alone. If you add the cost of engineering talent and the energy capacity that must be built, it’s probably more like $800 billion. (Meta’s new Louisiana data center is the size of Manhattan.)

If you consider that US GDP is around $30.3 trillion (expected to grow at 1.7% per year), this means that roughly 2.5% to 3% of our entire US GDP has been allocated to AI infrastructure this year.
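The share-of-GDP estimate is simple division. Here is a minimal Python sketch using only the dollar figures quoted in this post; the function and variable names are illustrative, not from any real tool.

```python
# Rough share-of-GDP arithmetic using the figures quoted in the post.
US_GDP = 30.3e12  # approximate US GDP in dollars, as cited above


def share_of_gdp(spend: float, gdp: float = US_GDP) -> float:
    """Return a spending figure as a percentage of GDP."""
    return 100.0 * spend / gdp


oracle_deal = 300e9        # OpenAI-Oracle commitment (a five-year deal)
hyperscaler_capex = 450e9  # Meta, Amazon, Microsoft, Google (lower bound)
infra_total = oracle_deal + hyperscaler_capex  # about $750 billion
with_talent_energy = 800e9  # the post's rough all-in estimate

for label, spend in [("infrastructure only", infra_total),
                     ("with talent and energy", with_talent_energy)]:
    print(f"{label}: {share_of_gdp(spend):.2f}% of GDP")
```

This prints about 2.48% and 2.64%. Note that the Oracle commitment spans five years, so counting all of it against a single year makes the estimate generous.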

I’m not sure we’ve ever committed so much capital to one activity in one year since the Apollo space program in 1964. Even the Manhattan Project, which developed the atomic bomb to end World War II, was only about 0.4% of GDP.

I’m not saying this is too much money, since these are private companies committing funds from their shareholders. But the bet these companies are making is that somehow, over the coming decades, some multiple of 2.5% of GDP is going to come back as revenue to providers in the AI business.

This coming week is the big HR Technology Conference in Las Vegas, and I’m going to be keynoting on Thursday to give you an overview of the space. It’s quite astounding how quickly virtually every company I talk with has gone from “What is AI?” to “Let’s implement AI as fast as we can.”

Here are just a few of the developments we’ll discuss next week:

- Workday announced the acquisition of Paradox, cementing its move into high-volume recruiting. This complements the company’s acquisition of HiredScore and further deepens Workday’s AI talent bench. At the same time, the class-action lawsuit (Mobley v. Workday) is moving ahead, threatening the company with a potentially major settlement over alleged AI-driven age discrimination in hiring.

- ServiceNow, the most “AI-centric” of the big vendors, announced its Zurich release, which includes new developer tools for IT departments (and users), more data management and security features, and Agentic Playbooks to help companies understand how to build human-to-AI workflows.

- WorkHuman, the billion-dollar employee recognition company, introduced its Human Intelligence system, which gives companies highly reliable inferences about skills and capabilities across the workforce, based on peer-to-peer recognition and feedback.

- Eightfold introduced its end-to-end toolset for high-volume recruiting, including an AI interviewer (effectively unlimited scale for high-volume job openings), Candidate Concierge (candidate chat), and new workflows for its talent intelligence and applicant tracking system.

- SAP SuccessFactors announced the acquisition of SmartRecruiters, and you can expect to see more about SAP and our Galileo at SAP Connect in October.

- UKG has announced and is now delivering a wide array of AI agents for its massive customer base of front-line worker companies. These agents help with shift scheduling, real-time pay, payroll reconciliation, shift sharing, and all sorts of important but complex scheduling issues faced by the 70% of workers who work in retail, hospitality, healthcare, transportation, entertainment, and other front-line positions.

- OpenAI announced its Jobs platform and strategy to develop and certify AI skills.

- Dozens of other companies have been announcing new agentic features, including Microsoft, Arist, Cornerstone, ADP, Lightcast, Draup, SHL, Techwolf, Visier, Beamery, Gloat, Reejig, Deel, HiBob, and many others.

- We will be announcing a major new set of features in Galileo, and also highlighting that we have more than 600 companies now using our intelligent Agent for HR.

Where is all this going?

As you’ll hear next week, agentic AI in HR is here now, which means our HR organizations are going to get smarter, more integrated, and probably smaller. The traditional ratio of 100 employees per HR professional could rise to 150 or 200 over the next few years, and that means HR professionals will become Superworkers, with even more opportunity to add value.
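To make the ratio shift concrete, here is a small Python sketch for a hypothetical 10,000-employee company; the company size and the `hr_headcount` helper are illustrative assumptions, not data from the post.

```python
import math


def hr_headcount(employees: int, employees_per_hr: int) -> int:
    """HR staff needed at a given employee-to-HR ratio, rounded up."""
    return math.ceil(employees / employees_per_hr)


company_size = 10_000  # hypothetical company, for illustration only
for ratio in (100, 150, 200):
    print(f"{ratio}:1 -> {hr_headcount(company_size, ratio)} HR staff")
```

Moving from 100:1 to 200:1 halves the HR team in this example, from 100 people to 50.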

The big HCM vendors (SAP, Workday, Oracle, ADP, Dayforce, UKG) are all investing heavily in AI now, and they’ve hired and developed strong internal AI teams. Each is focused on different areas, so most of you will start to see many AI options from your core providers, particularly in complex areas like talent acquisition, L&D, and employee experience.

There are several things I hope to share next week. First, I’ll try to give you an overall sense of how to manage this proliferation of new tools, and share what we’ve learned about the AI transformation process and governance. Second, I’ll share what we’ve been learning about job redesign and task analysis, an important enterprise discipline in large AI transformations. And third, I’ll show you more about the culture and leadership changes that will help our companies transform more effectively.

I’d say there’s a lot of fast-moving activity taking place (witness Salesforce’s layoff of 4,000 people last week), but for many of you this is a new career in rethinking jobs, organization structure, and skills development as we all move to become what we call Superworker companies.

There will be many announcements and I’ll cover as many as I can, but just strap yourself in. When the technology industry invests almost 3% of US GDP in AI, we are all going to be flooded with tools, systems, and new toys to play with.

‘Kicking our butts’: Rapid pace of AI development sparks an urgent push to build better infrastructure

Artificial intelligence innovation is moving at warp speed, but major tech industry players are sounding alarm bells that infrastructure is failing to keep pace with advancements in the field.

“AI is kicking our butts and teaching us that we know nothing” about infrastructure, Yee Jiun Song (pictured), vice president of engineering at Meta Platforms Inc., said Tuesday at the AI Infra Summit in Santa Clara, California.

Zeroing in on the fundamental disconnect, Dion Harris, senior director of AI and HPC Infrastructure Solutions at Nvidia Corp., also noted at the conference that though new AI models are being introduced every week, the time frame for building out the infrastructure to support AI is currently measured in years.

“We have to get everyone else to be prepared for where we’re going,” Harris told the gathering. “The biggest challenge is making sure that everyone is ready to come with us. There is this misalignment of time scales. That in and of itself is a challenge.”

Nvidia previews faster inferencing processor

For its part, Nvidia Tuesday previewed an upcoming chip, the Rubin CPX, that is designed to provide 8 exaflops of computing capacity for AI inferencing. According to the chipmaker, the Rubin CPX will be able to optimize certain mechanisms for large language models three times faster than its current-generation silicon. It’s part of Nvidia’s philosophy that an investment of several million dollars in infrastructure can generate tens of millions in token revenue.

“The performance of the platform is the revenue of an AI factory,” Ian Buck, vice president of hyperscale and high-performance computing at Nvidia, said during a keynote appearance. “This is how we feel about inference.”

More than 3,000 attendees participated in the AI Infra Summit in Silicon Valley this week.

Though Nvidia’s latest chip will help boost computing capacity for AI inferencing and specific LLM tasks, the scale of AI adoption is forcing model providers to invest hundreds of billions of dollars to build out new data center clusters. One of the more notable examples of this is the Prometheus supercluster under development by Meta. Scheduled to come online in 2026, the Ohio-based facility will be one of the first gigawatt data center clusters in the AI era.

“Meta is now one of only a few companies that are racing to build data centers at this scale,” Song said. “There never has been a more exciting time to be working in infrastructure.”

Prometheus is just a warm-up for future data center clusters in the planning stage. Meta has also announced Hyperion, a second data center cluster that is expected to require up to 5 gigawatts of power. Although Meta has not announced a date for Hyperion’s completion, one industry leader is already questioning whether clusters of this size will meet the global demand for AI processing.

“I don’t think that’s enough,” said Richard Ho, head of hardware at OpenAI. “It doesn’t appear clear to us that there is an end to the scaling model. It just appears to keep going. We’re trying to ring the bell and say, ‘It’s time to build.’”

AI agents drive need for the right stack

Increasing adoption of agents for enterprise tasks is one factor behind the urgency in building the infrastructure to support AI deployment. Large tech players such as Amazon Web Services Inc. are making major investments in agentic AI, fueling rapid advancement of what the technology can ultimately do.

Though one of the key use cases is currently “agent-assisted” application development, the technology is expected to progress rapidly toward “agent-driven” solutions, which will place further demands on infrastructure, according to Barry Cooks, vice president of compute abstractions at AWS.

“The expectation here is this will just continue to expand,” Cooks said during an appearance at the conference. “We’re in the midst of a huge change in the technical landscape in how we do our day-to-day work. It’s super-important that you have the right stack.”

Having the right stack will require new approaches to how systems are architected, a challenge that is being addressed in areas such as memory. For AI processors to function effectively, they need rapid access to data held in working memory such as dynamic random access memory (DRAM). If DRAM access is slow, memory becomes a bottleneck.

Software-defined memory provider Kove Inc. has been working on this issue by essentially virtualizing server memory into a large pool to reduce data latency. On Tuesday, Kove announced benchmark results for Redis and Valkey, in-memory data stores often used in AI inference pipelines, which it said showed workloads five times larger running faster than on local DRAM.

“The big challenge that we have is traditional DRAM,” Kove CEO John Overton said during his keynote presentation. “GPUs are scaling, CPUs are scaling… memory has not. As long as we think about memory as stuck in the box, we’ll remain stuck in the box.”

Another big challenge lies in processors that keep getting bigger, ganging up hundreds or thousands of compute cores on a single piece of silicon. That creates another bottleneck: communication among all those cores.

“The next 1,000x leap in computing will be completely about interconnect,” said Nick Harris, founder and CEO of Lightmatter Inc., which has raised $850 million for its silicon photonics technology. “Chips are getting bigger. I/O at the ‘shoreline’ is not enough. It’s time for more horsepower. Not faster horses.”

Meantime, AI itself is becoming critical all the way down to the design of chips, too. “About half the chips built today are using AI; in three years, it will be 90%,” noted Charles Alpert, an AI fellow at chip design software firm Cadence Design Systems Inc., which for years has steadily been incorporating more AI into its tools. “The need to make designers more productive has never been higher.”

Leveraging open-source solutions

Companies are also increasingly turning to the open-source community for help in building out the infrastructure to support AI. Initiatives such as the Open Compute Project have fostered an ecosystem focused on redesigning hardware technology to support demands on compute infrastructure. Last year, Nvidia contributed portions of its Blackwell computing platform design to OCP.

Meta joined a number of high-profile firms in 2023 to found the Ultra Ethernet Consortium, a group dedicated to building an Ethernet-based communication stack architecture for high-performance networking. The group has characterized its mission as promoting open, interoperable standards to prevent vendor lock-in and released its first specification in June.

“What we need here are open standards, open weight models and open-source software,” said Meta’s Song. “I believe open standards are going to be critical in allowing us to manage complexity.”

Whether the buildout of gigawatt data centers, streamlined memory performance and open-source collaboration will enable the tech industry to close the gap between AI innovation and the infrastructure to support it remains to be seen. What is undeniable is that hardware engineering is drawing renewed attention, another element in the wave of transformation brought on by the rise of AI.

“I’ve never seen hardware and infrastructure move more quickly,” said Song. “AI has made hardware engineering sexy again. Now hardware engineers get to have fun too.”

With reporting from Robert Hof

Photos: Mark Albertson/SiliconANGLE

Reimagining Education In The Age Of AI

At the Executive Technology Board, we’ve been reflecting on how education must evolve in a world shaped by AI. Much of what worked in the past is no longer sufficient: higher education was designed for a time when information was scarce, when expertise was locked away in libraries, and when tools for discovery and application were limited. Today, knowledge is instantly accessible, and AI is not just a new tool; it is reshaping the very definition of work.

The pace of change is extraordinary. Traditional curricula are becoming obsolete faster than ever before. Skills that once served as a career foundation are now outdated within years, sometimes months. And AI is not only transforming existing roles but also creating entirely new categories of work while rendering others obsolete. The question is no longer whether education needs to change, but how quickly it can.

Last week in London, we had the privilege of hosting the Dean and an Associate Dean of computer sciences at Northeastern University. The conversation was inspiring: NU’s work offers a glimpse of how education can be reimagined for this new era. Their perspective underscored a vital truth: preparing students for the world ahead requires moving beyond incremental updates to curricula and toward a fundamental rethinking of what education itself should be.

From that discussion and broader reflections at our global technology think tank, three imperatives stand out:

1. From centers of learning to facilitators of learning

Universities can no longer view themselves simply as repositories of knowledge. Instead, they must evolve into facilitators of continuous learning. This means building systems and cultures that are agile — able to incorporate new knowledge, tools, and practices as quickly as industries themselves are evolving. Students won’t succeed because they memorized a body of facts; they’ll succeed because they developed the capacity to adapt, unlearn, and relearn.

2. AI as a multidisciplinary foundation

One of the most exciting promises of AI lies at the intersections: healthcare and AI, design and AI, sustainability and AI, law and AI. The future of innovation will come less from siloed expertise and more from multidisciplinary collaboration. Universities must therefore embed AI literacy across disciplines — not just computer science programs but also the social sciences, arts, and professional schools. Students of law, medicine, business, and even the humanities need a working fluency in AI, because it will define their fields as much as it will define technology itself.

3. Integrating real-world experience into the curriculum

Learning cannot remain confined to the classroom. The most effective education models are those that blend theory with real-world practice. Northeastern’s co-op program is a leading example: students alternate between classroom study and full-time industry roles, graduating not only with degrees but also with significant hands-on experience. This kind of integration is no longer optional — it’s essential in an era where employers expect graduates to contribute immediately, and where technologies shift too quickly for classroom learning alone to keep pace.

Building an ecosystem for lifelong learning

Perhaps the biggest shift we need to embrace is that education no longer ends at graduation. In the age of AI, every professional will need to continuously refresh their skills, adapt to new tools, and reinvent themselves over the course of their career. Universities, industry, and policymakers must collaborate to create a true ecosystem for lifelong learning. Micro-credentials, modular certifications, and continuous access to new learning pathways will be the new norm.

This is not just a challenge for academia. It is a collective responsibility. Businesses must invest in workforce development. Policymakers must create frameworks that support reskilling at scale. Universities must reinvent their models. And learners themselves must take ownership of continuous growth. The future of education will not be about teaching students what to learn, but preparing them how to learn—continuously, adaptively, and across disciplines.
