Imagine a student is working on an assignment and gets stuck. Their lecturer or tutor is not available. Or maybe they are worried about looking silly if they ask for help. So they turn to ChatGPT for feedback instead.

Within moments they will have an answer, and they can prompt for further clarification if they need it.

They are not alone. Our research shows nearly half of surveyed Australian university students use generative artificial intelligence (AI) for feedback.

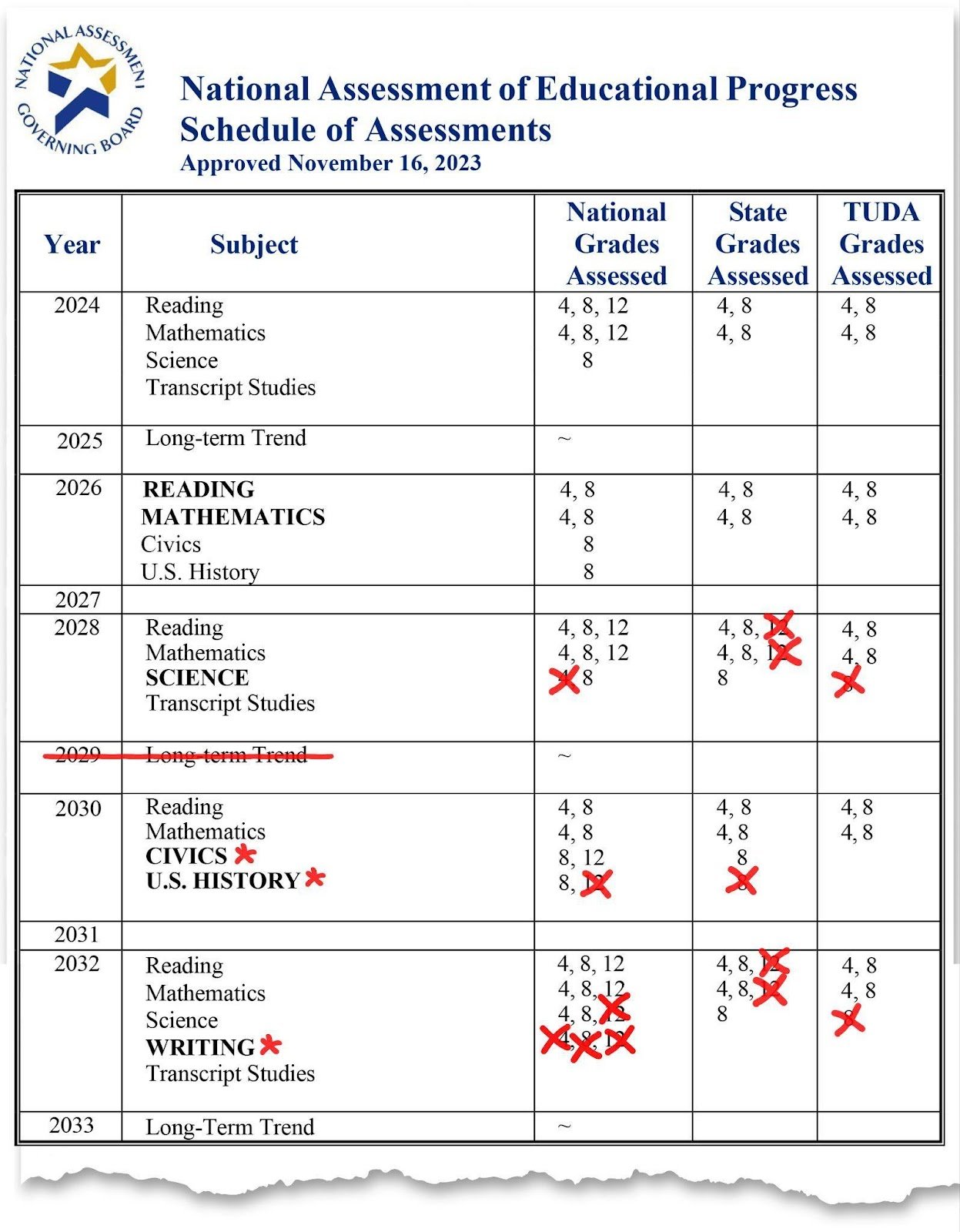

Our study

Between August and October 2024, my colleagues and I surveyed 6,960 students across four major Australian universities.

The participants studied a wide range of subjects including sciences, engineering and mathematics, health, humanities, business and law.

More than half (57%) were women, and 72% were aged between 18 and 24 years. Nearly 90% were full-time students, 58% were domestic students, 61% were undergraduates and 92% were attending on-campus activities as part of their studies.

Each of the universities invited its enrolled students to complete an online survey.

We wanted to understand how students use AI for learning, particularly whether they had used AI for feedback, and how they perceived the helpfulness and trustworthiness of feedback from both AI and teachers.

Students think AI feedback is helpful but not trustworthy

We found almost half of those surveyed (49%) were using AI for feedback to help them improve their university work. For example, this could involve typing questions into popular tools, such as ChatGPT. It could also involve getting suggestions for improving a piece of work, details of the strengths and weaknesses of the work, suggested text edits, and additional ideas.

These students told us they found both AI feedback and teacher feedback helpful: 84% rated AI feedback as helpful, while 82% said the same about their lecturers.

But there was a big gap when it came to trust. Some 90% of students considered their teacher’s feedback trustworthy, compared to just 60% for AI feedback.

As one student said:

[AI] offers immediate access to information, explanations, and creative ideas, which can be helpful for quick problem-solving and exploring new concepts.

Another student said teacher feedback was “more challenging but rewarding”. That was because

[AI] appears to confirm some thoughts I have, which makes me sceptical of how helpful it is.

AI provides volume, teachers have expertise

Our thematic analysis of students’ open-ended responses suggests AI and teachers serve different purposes.

Students reported they found AI less reliable and less specific. They also noted AI did not understand the assignment context as well as their teachers did.

However, AI was easier to access – students could ask for feedback multiple times without feeling like a burden.

The vulnerability factor

Research tells us students can feel vulnerable when seeking feedback from teachers. They may worry about being judged, feeling embarrassed, or damaging their academic relationships if their work is not of a high enough standard.

AI seems to remove this worry. One student described how “[AI] feedback feels safer and less judgmental”. Another student explained:

[AI] allows me to ask stupid questions that I’m too ashamed to ask my teacher.

But many students do not know AI can help

Half of the participants (50.3%) did not use AI for feedback purposes – 28% of this group simply did not know it was possible.

Other reasons included not trusting AI (28%) and having personal values that opposed the use of this kind of technology (23%).

This could create an equity issue. Students who are aware of AI’s capabilities have 24/7 access to potentially useful feedback support, while others have none.

What this means for unis

As student participants said, AI can be useful in providing quick, accessible feedback for initial drafts.

Teachers excel at providing expert, contextualised guidance that develops deeper understanding. The difference is a bit like getting medical advice from a qualified doctor versus looking up symptoms on Google. Both might be helpful, but in different circumstances, and you know which one you would trust more with something serious.

For universities trying to find a way to incorporate AI in their teaching and learning systems, one challenge will be creating opportunities and structures that enable educators to focus on their strengths. AI can complement them by presenting helpful, digestible information about student work that is almost always accessible and free of personal judgement.

This suggests the future is not about choosing between AI and humans. It is about understanding how they can work together to support student learning more effectively.

This article draws upon research conducted by Michael Henderson from Monash University, Margaret Bearman and Jennifer Chung from Deakin University, Tim Fawns from Monash University, Simon Buckingham Shum from the University of Technology Sydney, Kelly E. Matthews from The University of Queensland and Jimena de Mello Heredia from Monash University.