AI Insights

The Cruz AI Policy Framework & SANDBOX Act: Pro-Innovation Policies to Ensure American AI Leadership

Sen. Ted Cruz (R-TX), chairman of the Senate Commerce Committee, has introduced an important new artificial intelligence (AI) policy framework to guide congressional policymaking. He has also floated a new bill that proposes “regulatory sandboxes” as a method of encouraging creative policy change in areas where new AI innovations might be constrained by existing regulations or bureaucratic processes.

His proposed “Legislative Framework for American Leadership in Artificial Intelligence” includes five pillars for congressional action:

- Unleash American innovation and long-term growth

- Protect free speech in the age of AI

- Prevent a patchwork of burdensome AI regulation

- Stop nefarious uses of AI against Americans

- Defend human value and dignity

Sen. Cruz said “the United States should adopt a light-touch regulatory approach” based on these principles to speed up development of American AI systems and ensure that China does not achieve its goal of becoming the global leader in AI development. This framework is not a formal bill but is meant to encourage forthcoming AI policy action by Congress.

The first part of the Cruz AI plan to be rolled out formally is the “Strengthening Artificial intelligence Normalization and Diffusion By Oversight and eXperimentation,” or “SANDBOX Act.” If passed, this would give the Office of Science and Technology Policy (OSTP) in the White House the ability to work with AI deployers and developers to modify or waive regulations that could impede their work.

Under the bill, OSTP would coordinate with federal agencies to evaluate requests for two-year waivers under the sandbox programs, with extensions possible upon request. The bill also requires OSTP to report to Congress on which waivers were granted and to evaluate their effectiveness. Sen. Cruz hopes the process will help “better inform future policy decisions and the regulatory structure applicable to AI” and “encourage American ingenuity, improve transparency in lawmaking, and ultimately lead to safe, long-term AI usage domestically.”

Sen. Cruz introduced his new AI policy framework and new sandbox bill at a Wednesday hearing in the Senate Commerce Committee where OSTP director Michael Kratsios testified and discussed the importance of the Trump administration’s new “AI Action Plan,” a major policy strategy document the White House released in July. The Cruz bill complements the Trump administration’s vision for a “try-first” approach to AI policy that allows American AI innovation and investment to flourish and meet the challenge that China poses to American leadership and values on this front.

Overcoming Ossified “Set-It-And-Forget-It” Regulation

Sen. Cruz’s bill is important because it addresses a somewhat forgotten element of AI policy. The AI policy debate thus far has primarily focused on new types of regulation for AI systems and applications. There are, however, many laws and regulations already on the books that cover—or could come to cover—algorithmic applications. But are those rules right for the AI age? Are they fit for purpose, or will they just hold back important innovations without having much corresponding benefit?

Once implemented, regulations often prove hard to update or eliminate, even as new technological and marketplace realities emerge. This leads to what the World Economic Forum famously referred to as a “regulate-and-forget” approach to technological governance, or what others have labeled a “build-and-freeze model” of policymaking.

For rules to be effective, however, they need to keep pace with changing circumstances. Unfortunately, older rules are rarely revisited or revised, even after new social, economic, and technological realities render them obsolete or ineffective. A 2017 Deloitte survey of the U.S. Code of Federal Regulations found that 68 percent of federal regulations had never been updated and that 17 percent had been updated only once. The result is chronic regulatory accumulation, which has serious costs for the economy and consumers. When red tape grows without constraint and becomes untethered from modern marketplace realities, it can discourage innovation and investment, stifle entrepreneurship and competition, raise costs to consumers, limit worker opportunities, and undermine long-term economic growth. Meanwhile, the growing complexity of some government systems results in “regulatory overload,” which can undercut the well-intentioned safety goals behind many rules.

Before he served as Colorado Attorney General, Phil Weiser wrote that “[a] core failing of today’s administrative state. . . is the lack of imagination as to how agencies should operate.” He called for more “entrepreneurial administration” to “develop experimental regulatory strategies” that could also ensure that “legislative bodies have the opportunity to evaluate regulatory innovations in practice before deciding whether to embrace, revise, reject, or merely tolerate them.”

Sen. Cruz’s AI sandbox bill is a good example of that sort of entrepreneurial administration and “experimental regulatory strategies.” Just as trial-and-error improves markets, it can improve public policy. Policies for complex and fast-moving technologies must not be set in stone if America hopes to remain on the cutting edge of new sectors. Many new AI applications in healthcare, transportation, and financial services could offer the public important new life-enriching services. But those innovations could also be immediately confronted by archaic rules that block those benefits by standing in the way of marketplace experimentation. Sen. Cruz’s bill can help address this problem.

The Cruz AI Policy Vision Takes Shape

With the release of his new AI legislative framework and the SANDBOX Act, Sen. Cruz’s vision for AI policy is now clearer. Sen. Cruz has previously identified the importance of America leading the world on AI innovation and the particular need to counter China’s efforts to become a global leader on this front. In a March speech at the Special Competitive Studies Project’s “Compute Summit,” he argued, “if we don’t innovate, we’ll lose. And if China dominates AI for the world, that is a profoundly bad outcome for America and the rest of the world.”

“It is far better for America to lead and innovate” to stay ahead of China, Sen. Cruz said. To do so, America should follow the same smart policy model it adopted for the Internet, which helped the nation trounce Europe, once a major competitor on the digital front. The European Union (E.U.) adopted a “heavy-handed prior approval regulatory approach” that decimated innovation across the European continent, Cruz noted, while America benefited from tech- and energy-led revolutions, both spurred by smarter policy choices. Thus, “putting government in charge of innovation in AI would almost ensure America loses the race to AI,” which would be “catastrophic.”

In that March address, Sen. Cruz also correctly explained how “we have tools in place to go after misuse [and] criminal conduct” with the many existing consumer protection regulations already on the books. He also argued that any new AI-related regulations should be narrowly tailored, as opposed to a “broad-brush regulatory approach that would empower government to silence and stifle innovation.” Consistent with his call for tailored AI policies, Sen. Cruz co-sponsored the “TAKE IT DOWN Act,” a bill targeting the nonconsensual online publication of intimate visual depictions of individuals, whether authentic or computer-generated. The bill passed through Congress with widespread support and was signed into law by President Trump on May 19.

A National Framework & Preemption Are Essential

Finally, Sen. Cruz has also acknowledged the importance of a national policy framework to address the rapid proliferation of AI legislative proposals happening across the nation. Over 1,000 AI-related bills were introduced in the first half of 2025, and fully one-quarter of them were floated in four major progressive states—California, New York, Colorado, and Illinois—where lawmakers are looking to aggressively regulate AI systems, but in many different ways.

Earlier this summer, Sen. Cruz made an effort to push through his own version of the House-passed 10-year moratorium on state AI-specific regulatory enactments. “A single state should not have the power to set AI rules for the entire country,” Cruz said when announcing his version of the moratorium proposal. “Instead, the U.S. should take steps to prevent an unworkable patchwork of disparate and conflicting state AI laws and to encourage states to adopt commonsense tech-neutral policies.” This is in line with President Trump’s call for a national AI policy framework. During a speech announcing the AI Action Plan in July, Trump told the audience, “We need one commonsense federal standard that supersedes all states.”

When the moratorium proposals came under fire from some of his Senate colleagues, Cruz worked with Sen. Marsha Blackburn (R-Tenn.) to create a compromise that would have shortened the AI moratorium length to five years and provided further clarification on what was exempt from the proposal. Unfortunately, that compromise fell apart quickly after Sen. Blackburn walked away from the deal and the original moratorium proposal was ultimately defeated on the Senate floor.

Nonetheless, Cruz has continued to emphasize the importance of a “light-touch” national framework for AI policy, and the third pillar of his new legislative framework stresses the need to “clarify federal standards to prevent burdensome state AI regulations.” At Wednesday’s hearing where Sen. Cruz launched his framework, OSTP head Kratsios also reiterated that “a patchwork of state regulations is anti-innovation” and that it would particularly hurt smaller firms that cannot handle the compliance costs associated with hundreds of confusing, contradictory rules.

Conclusion: A Blueprint for Winning the AI Future

Taken together, Sen. Cruz’s new AI policy framework and the Trump administration’s recent AI Action Plan point the way forward for Congress. It is essential that federal lawmakers take steps promptly to formulate a national policy framework instead of letting European Union lawmakers or a handful of activist-minded states dictate AI policy for America. The principles and strategies laid out in the Cruz framework and Trump AI Action Plan offer lawmakers a constructive blueprint to help ensure America wins the AI future.


To ChatGPT or not to ChatGPT: Professors grapple with AI in the classroom

As shopping period settles, students may notice a new addition to many syllabi: an artificial intelligence policy. As one of his first initiatives as associate provost for artificial intelligence, Michael Littman PhD’96 encouraged professors to implement guidelines for the use of AI.

In an Aug. 20 letter to faculty, Littman also recommended that professors “discuss (their) expectations in class” and “think about (their) stance around the use of AI.” But professors on campus have applied this advice in different ways, reflecting the range of attitudes toward AI.

In her nonfiction classes, Associate Teaching Professor of English Kate Schapira MFA’06 prohibits AI usage entirely.

“I teach nonfiction because evidence … clarity and specificity are important to me,” she said. AI threatens these principles at a time “when they are especially culturally devalued” nationally.

She added that an overreliance on AI goes beyond the classroom. “It can get someone fired. It can screw up someone’s medication dosage. It can cause someone to believe that they have justification to harm themselves or another person,” she said.

Nancy Khalek, an associate professor of religious studies and history, said she is intentionally designing assignments that are not suitable for AI usage. Instead, she wants students “to engage in reflective assignments, for which things like ChatGPT and the like are not particularly useful or appropriate.”

Khalek said she considers herself an “AI skeptic” — while she acknowledged the tool’s potential, she expressed opposition to “the anti-human aspects of some of these technologies.”

But AI policies vary within and across departments.

Professors “are really struggling with how to create good AI policies, knowing that AI is here to stay, but also valuing some of the intermediate steps that it takes for a student to gain knowledge,” said Aisling Dugan PhD’07, associate teaching professor of biology.

In her class, BIOL 0530: “Principles of Immunology,” Dugan said she allows students to choose to use artificial intelligence for some assignments, but that she requires students to critique their own AI-generated work.

She said this reflection “is a skill that I think we’ll be using more and more of.”

Dugan added that she thinks AI can serve as a “study buddy” for students. She has been working with her teaching assistants to develop an AI chatbot for her classes, which she hopes will eventually answer student questions and supplement the study videos made by her TAs.

Despite this, Dugan still shared concerns over AI in classrooms. “It kind of misses the mark sometimes,” she said, “so it’s not as good as talking to a scientist.”

For some assignments, like primary literature readings, she has a firm no-AI policy, noting that comprehending primary literature is “a major pedagogical tool in upper-level biology courses.”

“There’s just some things that you have to do yourself,” Dugan said. “It (would be) like trying to learn how to ride a bike from AI.”

Assistant Professor of the Practice of Computer Science Eric Ewing PhD’24 is also trying to strike a balance between how AI can support and inhibit student learning.

This semester, his courses, CSCI 0410: “Foundations of AI and Machine Learning” and CSCI 1470: “Deep Learning,” heavily focus on artificial intelligence. He said assignments are no longer “measuring the same things,” since “we know students are using AI.”

While he does not allow students to use AI on homework, his classes offer projects that allow them “full rein” use of AI. This way, he said, “students are hopefully still getting exposure to these tools, but also meeting our learning objectives.”


Ewing added that the skills required of graduates are shifting: the growing presence of AI in the professional world requires a different toolkit.

He believes students in upper-level computer science classes should be allowed to use AI in their coding assignments. “If you don’t use AI at the moment, you’re behind everybody else who’s using it,” he said.

Ewing said he identifies AI policy violations through code similarity. Last semester, he found that 25 students had similarly structured code; 22 of those 25 ultimately admitted to using AI.

Littman also provided guidance to professors on how to identify the dishonest use of AI, noting various detection tools.

“I personally don’t trust any of these tools,” Littman said. In his introductory letter, he also advised faculty not to be “overly reliant on automated detection tools.”

Although she does not use detection tools, Schapira provides specific reasons in her syllabi to not use AI in order to convince students to comply with her policy.

“If you’re in this class because you want to get better at writing, whatever ‘better’ means to you, those tools won’t help you learn that,” her syllabus reads. “It wastes water and energy, pollutes heavily, is vulnerable to inaccuracies and amplifies bias.”

Beyond these environmental costs, Dugan also raised concerns about the ethical implications of AI technology.

Khalek also expressed her concerns “about the increasingly documented mental health effects of tools like ChatGPT and other LLM-based apps.” In her course, she discussed with students how engaging with AI can “resonate emotionally and linguistically, and thus impact our sense of self in a profound way.”

Students in Schapira’s class can also present “collective demands” if they find the structure of her course overwhelming. “The solution to the problem of too much to do is not to use an AI tool. That means you’re doing nothing. It’s to change your conditions and situations with the people around you,” she said.

“There are ways to not need (AI),” Schapira continued. “Because of the flaws that (it has) and because of the damage (it) can do, I think finding those ways is worth it.”


This Artificial Intelligence (AI) Stock Could Outperform Nvidia by 2030

When investors think about artificial intelligence (AI) and the chips powering this technology, one company tends to dominate the conversation: Nvidia (NASDAQ: NVDA). It has become an undisputed barometer for AI adoption, riding the wave with its industry-leading GPUs and the sticky ecosystem of its CUDA software, which keeps developers in its orbit. Since the launch of ChatGPT about three years ago, Nvidia stock has surged nearly tenfold.

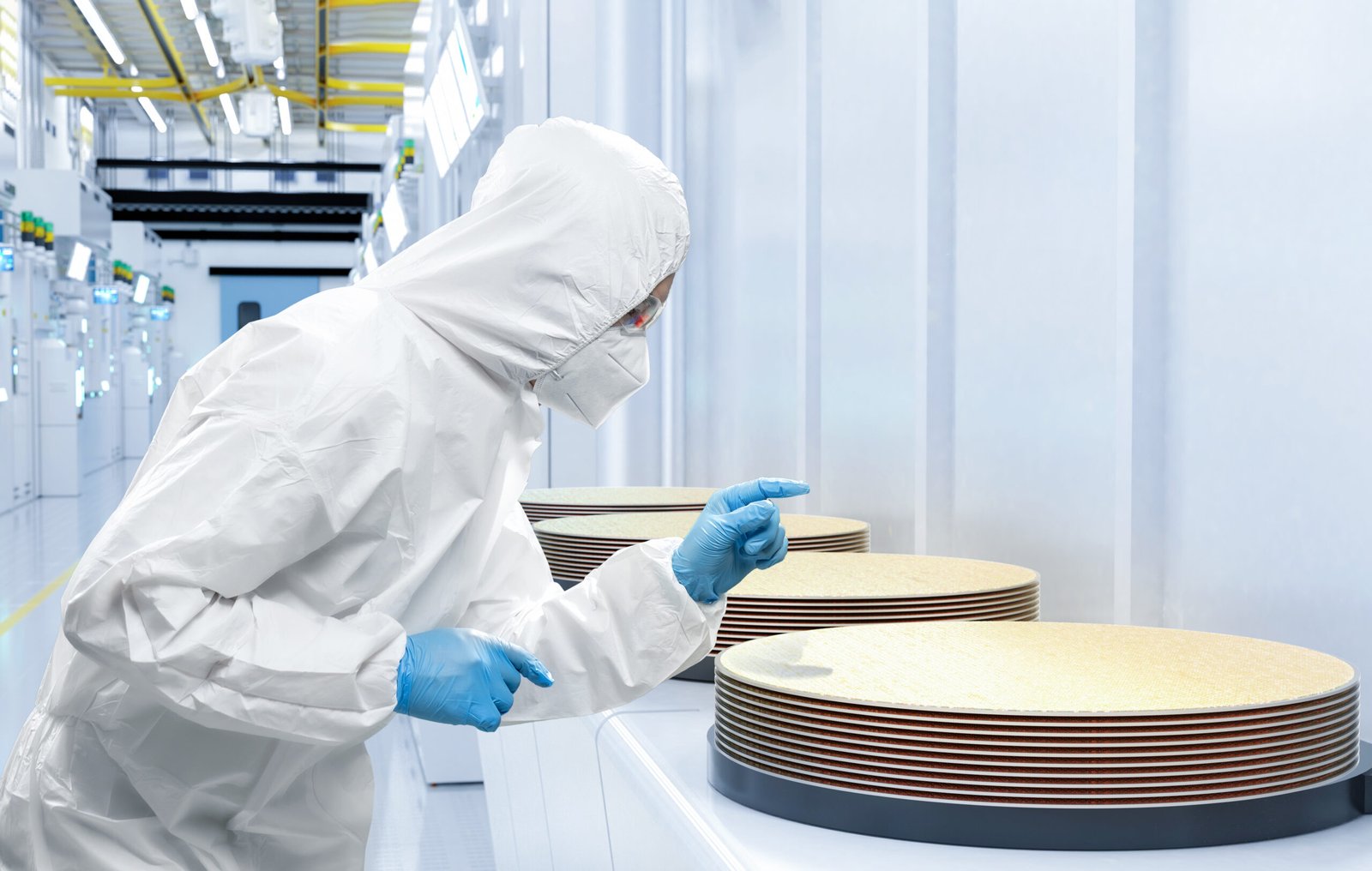

Here’s the twist: While Nvidia commands the spotlight today, it may be Taiwan Semiconductor Manufacturing (NYSE: TSM) that holds the real keys to growth as we look toward the next decade. Below, I’ll unpack why Taiwan Semi — or TSMC, as it’s often called — isn’t just riding the AI wave, but rather is building the foundation that brings the industry to life.

What makes Taiwan Semi so critical is its role as the backbone of the semiconductor ecosystem. Its foundry operations serve as the lifeblood of the industry, transforming complex chip designs into the physical processors that power myriad generative AI applications.

Source: Fool.com


Albania puts AI-created ‘minister’ in charge of public procurement

A digital assistant that helps people navigate government services online has become the first “virtually created” AI cabinet minister and put in charge of public procurement in an attempt to cut down on corruption, the Albanian prime minister has said.

Diella, which means “sun” in Albanian, has been advising users on the state’s e-Albania portal since January, helping them through voice commands with the full range of bureaucratic tasks they need to perform in order to access about 95% of citizen services digitally.

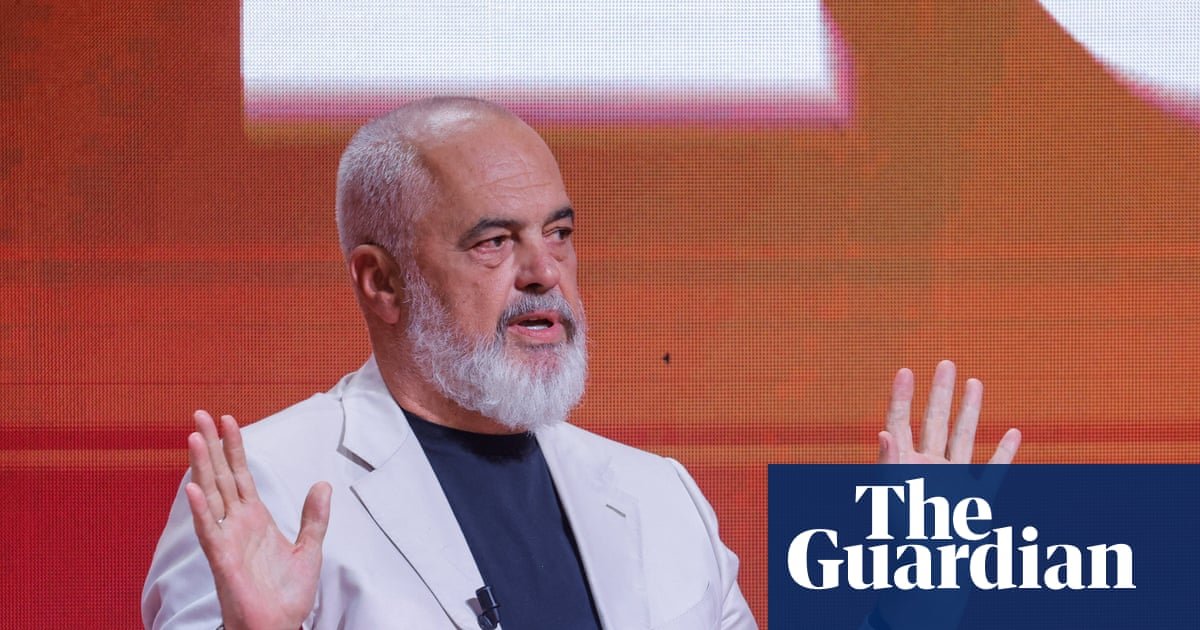

“Diella, the first cabinet member who is not physically present, but has been virtually created by AI”, would help make Albania “a country where public tenders are 100% free of corruption”, Edi Rama said on Thursday.

Announcing the makeup of his fourth consecutive government at the ruling Socialist party conference in Tirana, Rama said Diella, who on the e-Albania portal is dressed in traditional Albanian costume, would become “the servant of public procurement”.

Responsibility for deciding the winners of public tenders would be removed from government ministries in a “step-by-step” process and handled by artificial intelligence to ensure “all public spending in the tender process is 100% clear”, he said.

Diella would examine every tender in which the government contracts private companies and objectively assess the merits of each, said Rama, who was re-elected in May and has previously said he sees AI as a potentially effective anti-corruption tool that would eliminate bribes, threats and conflicts of interest.

Public tenders have long been a source of corruption scandals in Albania, which experts say is a hub for international gangs seeking to launder money from trafficking drugs and weapons and where graft has extended into the upper reaches of government.

Albanian media praised the move as “a major transformation in the way the Albanian government conceives and exercises administrative power, introducing technology not only as a tool, but also as an active participant in governance”.


Not everyone was convinced, however. “In Albania, even Diella will be corrupted,” commented one Facebook user.
