Ethics & Policy

The AI Workforce: What LinkedIn data reveals about ‘AI Talent’ trends in OECD.AI’s live data

LinkedIn’s Data for Impact programme’s collaboration with the OECD.AI Policy Observatory brings together the latest LinkedIn data on AI workforce trends to provide policymakers with information to understand the AI ecosystem better and make evidence-based decisions.

LinkedIn’s more than one billion members make up its Economic Graph, a granular representation of the global economy updated in real time. While administrative systems can lag in updating their taxonomies of AI occupations and skills, LinkedIn’s data captures the latest AI trends in the labour market.

LinkedIn’s latest methodology considers a member as AI engineering talent if they have explicitly added at least two AI Engineering Skills (see list below) – such as model training, AI agents, or large language models – to their profile and/or they are or have been employed in an AI job like an AI engineer. By capturing occupational and skills components, this refined definition represents the wider AI workforce more clearly and incorporates ongoing efforts to upskill and utilise AI across multiple sectors.
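The definition above amounts to an either/or rule: a skills criterion or an occupation criterion. The minimal Python sketch below illustrates it under stated assumptions.

- The skill and job-title sets are hypothetical placeholders. LinkedIn's actual AI Engineering Skills taxonomy is not reproduced here.
- `Member`, `is_ai_engineering_talent` and `talent_concentration` are illustrative names, not a LinkedIn API.

The concentration function shows how a headline figure such as "7 out of every 1,000 members" is a simple share of classified members.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical placeholder taxonomies (assumption): NOT LinkedIn's real lists.
AI_ENGINEERING_SKILLS = {"model training", "ai agents", "large language models",
                         "langchain", "openai api"}
AI_JOB_TITLES = {"ai engineer", "machine learning engineer"}


@dataclass
class Member:
    skills: set[str] = field(default_factory=set)
    job_titles: list[str] = field(default_factory=list)  # current and past roles


def is_ai_engineering_talent(member: Member, min_skills: int = 2) -> bool:
    """True if the member lists >= min_skills AI Engineering Skills
    OR holds/held an AI job (the 'and/or' in the definition)."""
    skill_hits = len({s.lower() for s in member.skills} & AI_ENGINEERING_SKILLS)
    has_ai_job = any(t.lower() in AI_JOB_TITLES for t in member.job_titles)
    return skill_hits >= min_skills or has_ai_job


def talent_concentration(members: list[Member]) -> float:
    """Share of members classified as AI talent (0.007 = 7 per 1,000)."""
    if not members:
        return 0.0
    return sum(is_ai_engineering_talent(m) for m in members) / len(members)


print(is_ai_engineering_talent(Member(skills={"AI Agents", "Model Training"})))  # True
print(is_ai_engineering_talent(Member(skills={"AI Agents"})))                    # False
print(is_ai_engineering_talent(Member(job_titles=["AI Engineer"])))              # True
```

Because the two criteria are combined with a logical OR, a member who has never listed any AI skills still counts if their job history includes an AI role. This is how the definition captures both the occupational and the skills components.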

7 out of every 1,000 LinkedIn members globally have AI talent

The AI workforce is growing at an unprecedented pace in response to the rapidly accelerating adoption of AI among employers. As of 2024, 7 out of every 1,000 LinkedIn members globally are considered AI engineering talent, representing a 130% increase since 2016. The highest concentrations of AI engineering talent are found in Israel (1.98%), Singapore (1.64%), and Luxembourg (1.45%). Furthermore, AI Literacy Skills (see list below), such as ChatGPT and Microsoft Copilot, grew by 600% in the last year.

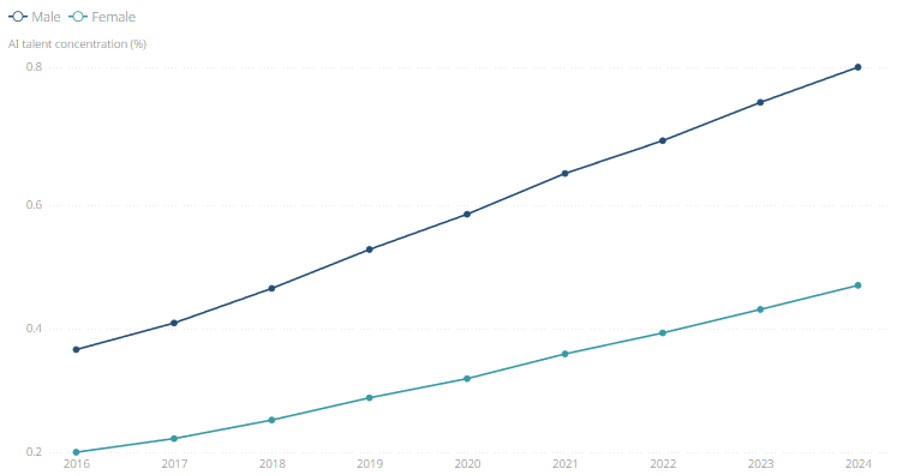

Figure: Global AI engineering talent concentration of LinkedIn members by gender

AI adoption is not just in the Tech sector

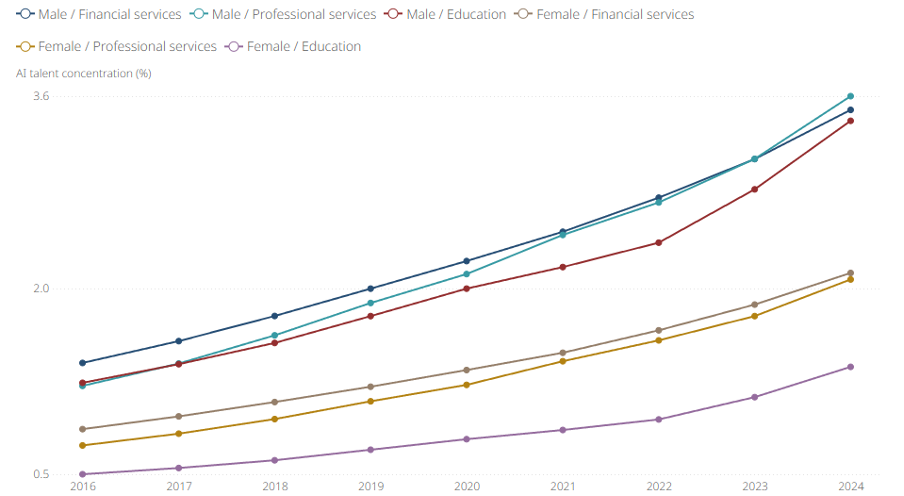

Increasing concentrations of AI talent across multiple industries demonstrate the widespread adoption of AI, not only in technology but also in professional services, financial services, and education. As AI talent develops across sectors, we also observe a persistent gender gap, although some industries seem better positioned to close it.

LinkedIn’s latest data on the AI gender gap reveals that in 2024, the share of men with AI Engineering Skills is 74% higher than the share of women with the same skills. This gap varies by industry; most industries analysed have a smaller gender gap than the 74% average. One notable exception is education, where the AI gender gap is more than double the average, even though women are better represented in that industry. We also observe a gender gap in AI Literacy Skills, with the share of men possessing these skills being 138% greater than that of women. LinkedIn’s research on gender gaps within STEM further examines potential drivers of these disparities and how they have changed over time globally.

Figure: Global AI engineering talent concentration of LinkedIn members by industry and gender
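The gender-gap figures are relative comparisons of two shares, not percentage-point differences. A 74% gap means the share of men holding the skills is 1.74 times the share of women. The sketch below makes this arithmetic explicit. The shares used are illustrative values chosen to reproduce a 74% gap; they are not LinkedIn's published figures. `relative_gap_pct` is a hypothetical helper name.

```python
def relative_gap_pct(share_men: float, share_women: float) -> float:
    """Percentage by which the men's share exceeds the women's share.

    This is a relative gap: (share_men / share_women - 1) * 100,
    not the percentage-point difference between the two shares.
    """
    if share_women <= 0:
        raise ValueError("share_women must be positive")
    return (share_men / share_women - 1.0) * 100.0


# Illustrative shares only (assumption, not LinkedIn data).
share_women = 0.005   # 0.50% of women hold the skills
share_men = 0.0087    # 0.87% of men hold the skills

print(f"relative gap: {relative_gap_pct(share_men, share_women):.0f}%")   # 74%
print(f"absolute gap: {(share_men - share_women) * 100:.2f} pp")          # 0.37 pp
```

Under this reading, an industry whose gap is "more than double the average" has a ratio of men's to women's share above 2.48, since its gap exceeds 148%. The 138% gap in AI Literacy Skills corresponds to a ratio of 2.38.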

Skill sets are adapting

As workers collectively seek to leverage this emerging technology, we observe skill sets evolving across the workforce. Understanding which skills are critical for leveraging AI, where those skills currently reside, and how to distribute them more evenly across the workforce may soon underpin countries’ economic growth and resilience.

The fastest-growing AI skills added by LinkedIn members in 2024 were Custom GPTs, AI Productivity, and AI Agents, reflecting AI’s broader shift in focus to GenAI and its use as a productivity tool. We also see strategic AI skills growing, such as AI Strategy (#7) and Responsible AI (#8), suggesting the evolution of a more mature AI ecosystem and a focus on policymaking.

Table: The fastest growing AI Skills (as defined by LinkedIn) added by LinkedIn members in OECD countries in 2024

| Rank | AI Engineering Skills | AI Literacy Skills |
|---|---|---|
| 1 | Custom GPTs | Generative AI Tools |
| 2 | AI Productivity | Anthropic Claude |
| 3 | AI Agents | Microsoft Copilot Studio |
| 4 | Azure AI Studio | Microsoft Copilot |
| 5 | Amazon Bedrock | AI Prompting |
| 6 | OpenAI API | LLaMA |
| 7 | AI Strategy | Google Gemini |
| 8 | Responsible AI | Generative AI Studio |
| 9 | LangChain | GitHub Copilot |
| 10 | Computational Intelligence | AI Builder |

See data about the fastest-growing AI Literacy Skills and AI Engineering Skills


Data that pinpoints AI skill gaps

The latest 2024 LinkedIn data from OECD.AI offers a glimpse into the evolving landscape of AI skills and occupations, as well as the demographic dynamics of this workforce. LinkedIn’s methodology and definition of AI talent help pinpoint skills gaps and align workforce development with industry demands. Employers, workers, and policymakers can utilise this data and these methodologies to keep pace with the ever-changing nature of AI-related jobs and skills.

The post The AI Workforce: What LinkedIn data reveals about ‘AI Talent’ trends in OECD.AI’s live data appeared first on OECD.AI.

Elon Musk predicts big on AI: ‘AI could be smarter than the sum of all humans soon…’

Elon Musk has issued a striking prediction about the future of artificial intelligence, suggesting that AI could surpass any single human in intelligence as soon as next year (2026) and potentially become smarter than all humans combined by 2030. He made the comment during a recent appearance on the All-In podcast, emphasising both his optimism about AI’s capabilities and his longstanding concern over its rapid development. While experts continue to debate the exact timelines for achieving human-level AI, Musk’s projections underscore the accelerating pace of AI advancement. His remarks also highlight the urgent need for global discussions on the societal, technological, and ethical implications of increasingly powerful AI systems.

Possible reasons behind Elon Musk’s prediction

Although Musk did not specify all motivations, several factors could explain why he made this statement:

- Technological trajectory: Rapid advancements in AI, especially in agentic AI, physical AI, and sovereign AI (highlighted in Deloitte’s 2025 trends report), indicate a fast-moving technological frontier.

- xAI positioning: As CEO of xAI, Musk may be aiming to draw attention to AI’s capabilities and the company’s role in accelerating scientific discovery.

- Historical pattern: Musk has a history of bold predictions, such as suggesting in 2020 that AI might overtake humans by 2025, reflecting his tendency to stimulate public discourse and action.

- Speculative assessment: Musk’s comments may reflect his personal belief in the exponential growth of AI, fueled by increasing computational power, data availability, and breakthroughs in machine learning.

Expert context: human-level AI

While Musk’s timeline is aggressive, it aligns loosely with broader expert speculation on human-level machine intelligence (HLMI). A 2017 MIT study estimated a 50% chance of HLMI within 45 years and a 10% chance within 9 years, suggesting that Musk’s forecast is on the optimistic, yet not implausible, side of expert predictions.

Additional surveys support this spectrum of expert opinion. The 2016 Survey of AI Experts by Müller and Bostrom, which included 550 AI researchers, similarly found a 50% probability of HLMI within 45 years and a 10% probability within 9 years. The AI Impacts surveys (2016, 2022, 2023) indicate growing optimism, with the 2023 survey of 2,778 AI researchers estimating a 50% chance of HLMI by 2047, reflecting technological breakthroughs such as large language models. Taken together, these findings place Musk’s timeline at the aggressive end of, but still within, the broader range of expert projections.

Potential dangers and opposition views

Musk’s prediction has sparked debate among experts and the public:

- Job losses: AI capable of outperforming humans in most tasks could disrupt labor markets, potentially replacing millions of jobs in sectors from manufacturing to knowledge work.

- Concentration of power: Superintelligent AI could concentrate power among a few corporations or governments, raising fears about inequality and control.

- Safety concerns: Critics warn of unintended consequences if AI surpasses human intelligence without robust safety measures, including errors in decision-making or misuse in military or financial systems.

- Ethical dilemmas: Questions about accountability, transparency, and moral responsibility for AI decisions remain unresolved, fueling ongoing debates about regulation and ethical frameworks.

Societal and generational implications

The rise of superintelligent AI may profoundly affect society:

- Education and skill shifts: New generations may need entirely different skill sets, emphasizing creativity, critical thinking, and AI oversight rather than routine work.

- Economic transformation: Industries could see unprecedented efficiency gains, but also significant displacement, requiring proactive retraining programs and social policies.

- Human identity and purpose: As AI surpasses human capabilities in more domains, society may face philosophical questions about work, creativity, and the role of humans in a highly automated world.

The AI ethics debate

Musk’s comments feed into the broader discussion on AI ethics, highlighting the need for responsible AI deployment. Experts stress balancing innovation with safeguards, including:

- Ethical AI design and transparency

- Policy and regulatory frameworks for high-risk AI systems

- Global cooperation to prevent misuse or unintended consequences

- Public awareness and discourse on AI’s societal impacts

Fairfield University awarded three-year grant to lead AI ethics in education collaborative research project — EdTech Innovation Hub

The project aims to “serve the national interest by enhancing AI education through the integration of ethical considerations in AI curricula, fostering design and development of responsible and secure AI systems”, according to the project summary approved by the National Science Foundation.

Aiming to improve the effectiveness of AI ethics education for computer science students, the research will develop an “innovative pedagogical strategy”.

Funding has also been awarded to partnering institutions, bringing the total support to nearly $400,000.

Sidike Paheding, PhD, Chair and Associate Professor of Computer Science at Fairfield University’s School of Engineering and Computing, is the principal investigator of the project.

“Throughout this project, we aim to advance AI education and promote responsible AI development and use. By enhancing AI education, we seek to foster safer, more secure, and trustworthy AI technologies,” Dr. Paheding comments.

“Among its many challenges, AI poses significant ethical issues that need to be addressed in computer science courses. This project will give faculty practical, hands-on teaching tools to explore these issues with their students.”

Sovereign Australia AI believes LLMs can be ethical

Welcome back to Neural Notes, a weekly column where I look at how AI is affecting Australia. In this edition: a challenger appears for sovereign Australian AI, and it actually wants to pay creatives.

Sovereign Australia AI is a newly launched Sydney venture aiming to build foundational large language models that are entirely Australian. Founded by Simon Kriss and Dr Troy Neilson, the company’s models are said to be trained on Australian data, run on local infrastructure, and governed by domestic privacy standards.

Setting itself apart from offshore giants like OpenAI and Meta, the company plans to build the system for under $100 million, utilising 256 Nvidia Blackwell B200 GPUs. This is the largest domestic AI hardware deployment to date.

The startup also says it has no interest in going up against the likes of OpenAI and Perplexity.

“We are not trying to directly compete with ChatGPT or other global models, because we don’t need to. Instead, we are creating our own foundational models that will serve as viable alternatives that better capture the Australian voice,” Neilson said to SmartCompany.

“There are many public and private organisations in Australia that will greatly benefit from a truly sovereign AI solution, but do not want to sacrifice real-world performance. Our Ginan and Australis models will fill that gap.”

Its other point of differentiation is its plan for ethically sourced datasets. Sovereign Australia AI says it has earmarked over $10 million for licensing and compensating copyright holders whose work contributes to training, though it admits it will probably need more.

“We want to set that benchmark high. Users should be able to understand what datasets the AI they use is trained on. They should be able to be aware of how the data has been curated,” Kriss told SmartCompany.

“They should expect that copyright holders whose data was used to make the model more capable are compensated. That’s what we will bring to the table.”

The price of ethics

As we have seen in recent weeks, copyright has been at the forefront of the generative AI conversation, with both tech founders and lobbyists arguing for relaxed rules and ‘fair use’ exemptions in the name of innovation.

Kriss sees an urgent need to value Australian creativity not just as a resource but as an ethical benchmark.

AI development, he says, must avoid the “Wild West, lawless and lacking empathy” mentality that defined its early years and pursue a path that actively engages and protects local content makers.

There’s also a shift away from Silicon Valley’s ‘move fast and litigate later’ philosophy.

Neilson told SmartCompany he has watched international platforms defer creator payment until legal action forced their hand, pointing out the “asking for forgiveness instead of seeking permission” playbook is now coming with a hefty price tag.

Moving forward together with content creators, he suggests, is not only right for Australia but essential if the sector is to build lasting trust and capability.

But compensation sits awkwardly with technical realities. The company is openly exploring whether a public library-like model, using meta tagging and attribution to route payments, could meaningfully support creators.

Kriss frames this not only as a technical necessity but as a principle: paying for content actually consumed is the backbone of sustainable AI training. The team acknowledges “synthesis is a tough nut to crack,” but for Neilson, that’s a discussion the sector needs rather than something to defer to lawsuits and policy cycles.
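As an illustration only, consider a library-like scheme that tags each training item with its rights holder. Such a scheme could split a royalty pool in proportion to attributed usage and skip holders who have opted out. The sketch below is hypothetical. Sovereign Australia AI has not published a payment formula, and `route_payments`, the usage log format and the pool size are all assumptions made for illustration. The sketch works in integer cents and uses the largest-remainder method, so the payouts always sum exactly to the pool whenever at least one holder remains.

```python
from __future__ import annotations

from collections import Counter


def route_payments(pool_cents: int, usage_log: list[str],
                   opted_out: set[str] | None = None) -> dict[str, int]:
    """Hypothetical pro-rata split of a royalty pool by attributed usage.

    usage_log holds one rights-holder tag per attributed use (assumption).
    Holders in opted_out are excluded. Returns payouts in integer cents.
    """
    opted_out = opted_out or set()
    counts = Counter(owner for owner in usage_log if owner not in opted_out)
    total = sum(counts.values())
    if total == 0:
        return {}  # nothing attributable: pool left unallocated

    # Floor of each holder's exact pro-rata share.
    shares = {owner: pool_cents * n // total for owner, n in counts.items()}
    leftover = pool_cents - sum(shares.values())

    # Largest-remainder method: hand leftover cents to the holders whose
    # exact shares had the largest fractional parts.
    by_fraction = sorted(counts, key=lambda o: (pool_cents * counts[o]) % total,
                         reverse=True)
    for owner in by_fraction[:leftover]:
        shares[owner] += 1
    return shares


print(route_payments(1000, ["a", "a", "b"]))         # {'a': 667, 'b': 333}
print(route_payments(1000, ["a", "a", "b"], {"b"}))  # {'a': 1000}
```

This sketch also shows why the founders call synthesis "a tough nut to crack". The sketch assumes each use can be cleanly attributed to one holder, which is exactly what blended model outputs make difficult.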

As AI industry figures urge Australia to model its approach on the US’ “fair use,” creators and advocates warn this risks legitimising mass scraping and leaving local culture unpaid and unprotected.

This legal ambiguity is rapidly becoming a global standard. Anthropic’s proposed US$1.5 billion book piracy settlement, a case promising US$3,000 per affected title, is now on hold as US courts question both the payout and the precedent.

Judges caution that dismissing other direct copyright claims does not resolve the lawfulness of training AI on copyrighted material, leaving creators and platforms worldwide in limbo.

And in recent weeks, another US judge dismissed a copyright infringement lawsuit brought against Meta by 13 authors, including comedian Sarah Silverman. It was the second claim of this nature to be dismissed by the court in San Francisco at the time.

When funding and talent collide with ambition

The presence of multiple teams working in this area, such as Maincode’s Matilda model, suggests that Australia’s sovereign AI movement is well underway.

Neilson welcomes this competition and doesn’t see fragmentation as a potential risk.

“We applaud anyone in the space working on sovereign AI. We’re proud to be part of the initial ground swell standing up AI capability in Australia, and we look forward to the amazing things that the Maincode team will build,” Neilson said.

“The worst parties are the ones where you’re in the room by yourself.”

Behind the scenes, the budget calculations remain complicated. Sovereign Australia AI’s planned $100 million investment sits well below what analysts believe is required for competitive, world-class infrastructure. Industry bodies have called for $2–4 billion to ensure genuine sovereign capability.

While Neilson maintains that local talent and expertise are up to the challenge, persistent skills gaps and global talent poaching mean only coordinated investment can bridge the distance from prototype to deployment.

Transparency and Australian investment

According to Sovereign Australia AI, transparency is a platform feature.

“We can’t control all commercial conversations, but I think it would benefit everyone if these deals were disclosed,” Kriss said.

“Somewhat selfishly, it would benefit us, as users would understand how much of what we charge is going back to creators to make them whole.”

Neilson also welcomes the idea of independent audits.

“How we use that data may be commercial in confidence, but the raw data, absolutely.”

“This is critical for several reasons. Firstly, it helps eliminate the black box nature of LLMs, where a lack of understanding of the underlying data impedes understanding of outputs.

“Secondly, we want to provide the owners of the data the opportunity to opt out of our models if they choose. We need to tag all data to empower this process and we need to have that process audited so every Australian can be proud of the work we do.”

The team also says its Australian and ethical focus is fundamental.

“We’re sovereign down to our corporate structure, our employees, our supply chain, and where we house our hardware. We cannot and will not change our tune just because someone is willing to write a larger cheque,” Kriss said.

He also said the startup would refuse foreign investment “without giving it a second thought”.

“For us, this is not about finding any money … it is all about finding the RIGHT money. If an investor said they would not support paying creatives, we would walk away from the deal. We need to do this right for Australia.”

Finally, the founders challenge conventional notions of what sovereignty and security mean for digital Australia.

Kriss argued that genuine sovereignty can’t be reduced to protection against threats or state interests alone.

“Security goes beyond guns, bombs and intelligence. It goes to a musician being secure in being able to pay their bills, to a reporter being confident that their work isn’t being unfairly ripped off. To divorce being fair from sovereignty is downright un-Australian.”

Can principle meet practicality in Australia’s sovereign AI experiment?

The aims of Sovereign Australia AI are an ambitious counterpoint to the global status quo. Whether these promises will prove practical or affordable for all Australian creators is still an open question.

And this will be all the more difficult to achieve without targeting a global market. Sovereign Australia AI has remained firm about building local for local.

The founders have indicated no plans to chase global scale or go head-to-head with US tech giants.

“No, Australia is our market, and we need this to maintain our voice on the world stage,” Neilson said.

“Yet we hope that other sovereign nations around the globe see the amazing work that we’re doing and seek us out. We have the capability and smarts to help other nations.

“This would enable us to scale commercially beyond Australia, without jeopardising our sovereignty.”

As for paying creators, the startup is still considering different options.

It says that a sustainable model may be easier to structure with large organisations, which, as Neilson puts it, “have well-developed systems in place for content licensing and attribution”.

But for individual artists and writers, he acknowledges, the solution could “look to systems like those used by YouTube” to engage at scale.

“We are not saying we have all the answers, but we are open to working it out for the benefit of all Australians.”
