Ethics & Policy

The AI Ethics Brief #162: Beyond the Prompt

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. Stay informed on the evolving world of AI ethics with key research, insightful reporting, and thoughtful commentary. Learn more at montrealethics.ai/about.

Follow MAIEI on Bluesky and LinkedIn.

Thank you to everyone who joined us on April 10, 2025, whether in person in Montreal or online from around the world, to honour the life and legacy of Abhishek Gupta, founder of the Montreal AI Ethics Institute.

We laughed, we cried, and we shared stories. It was an evening of deep reflection and celebration, one that reminded us not only of Abhishek’s profound impact on the field of AI ethics but also of the global community he helped build with care, conviction, and joy.

A special thank you to Planned for their generous support and hospitality. And to each of you who attended, spoke, or held space with us, your presence meant more than words can express.

- From Prompts to Policy: Tariffs and the Risks of Vibe Governing with AI

- New York State Judge Not Amused with AI Avatar

- AI First, People Second? Shopify’s Hiring Memo Sparks Debate

- AI Policy Corner: The Colorado State Deepfakes Act

- How the U.S. Public and AI Experts View Artificial Intelligence – Pew Research Center

- Amazon’s Privacy Ultimatum Starts Today: Let Echo Devices Process Your Data or Stop Using Alexa – CNET

- Cyberattacks by AI agents are coming – MIT Technology Review

- Introducing Claude for Education – Anthropic

- Meta’s AI research lab is ‘dying a slow death,’ some insiders say. Meta prefers to call it ‘a new beginning’ – Fortune

As AI systems become more capable and more advanced, recent developments in the AI space have prompted us to consider AI’s role in our lives. AI avatars are now appearing in courtrooms, Shopify’s CEO has made new hires contingent on showing that AI cannot do the job, and a recent study found generative AI therapy ranked on par with human interventions amongst a small population of users. New and evolving AI tools continue to blur the line between machine and human roles, including in areas as personal as romance.

At MAIEI, we focus on building civic competency around AI, helping people engage with and understand AI systems more thoughtfully. One helpful lens we often use: Does it add value to what I’m doing? AI can be helpful for simulating difficult conversations, whether it’s a physician practicing hard patient conversations with AI avatars or someone rehearsing an anxiety-inducing conversation they will have with a colleague.

However, over-reliance on AI comes with tradeoffs, and there are instances where it does not add value. Excessive use of generative AI can harm critical thinking skills and lead to blind trust in AI systems. Delegating legal representation to an AI, for example, may also undermine the credibility of your case.

And so, where do we draw the line?

Asking, “Does this add value?” is a good starting point. From there, we begin to establish what we’re comfortable delegating to AI and what we must keep human.

Please share your thoughts with the MAIEI community.

What Happened: The White House’s new tariff proposal under the Trump Administration, released on April 2, seems to have been influenced, directly or indirectly, by large language models (LLMs). Multiple AI systems (ChatGPT, Claude, Gemini, Grok) produced nearly identical responses when prompted with how the U.S. could “easily” calculate tariffs.

As economic journalist James Surowiecki points out:

Just figured out where these fake tariff rates come from. They didn’t actually calculate tariff rates + non-tariff barriers, as they say they did. Instead, for every country, they just took our trade deficit with that country and divided it by the country’s exports to us.

So we have a $17.9 billion trade deficit with Indonesia. Its exports to us are $28 billion. $17.9/$28 = 64%, which Trump claims is the tariff rate Indonesia charges us. What extraordinary nonsense this is.
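The arithmetic in the quote above can be reproduced in a few lines. This is only a minimal sketch of the ratio as Surowiecki describes it, not an official methodology; the function name `implied_tariff_rate` is our own, and the only inputs are the Indonesia figures he cites.

```python
def implied_tariff_rate(trade_deficit: float, exports_to_us: float) -> float:
    """Return the trade deficit divided by the country's exports to the U.S.

    This is the ratio described in the quote, not a measured tariff rate.
    Both arguments must be in the same unit (here, billions of US dollars).
    """
    if exports_to_us <= 0:
        raise ValueError("exports_to_us must be positive")
    return trade_deficit / exports_to_us


# Indonesia figures from the quote: $17.9B deficit, $28B exports to the U.S.
rate = implied_tariff_rate(17.9, 28.0)
print(f"{rate:.0%}")  # prints 64%
```

The calculation uses no information about actual tariffs or non-tariff barriers, which is the core of the criticism.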

📌 MAIEI’s Take and Why It Matters:

This moment reveals a larger shift: the quiet mainstreaming of LLMs into high-stakes geopolitical decision-making. When every major frontier model converges on the same overly simplistic method for tariff calculation, and when that formula shapes real-world policy, it’s clear that technical accuracy alone isn’t enough. We need civic competence, especially within institutions responsible for high-impact decisions.

The issue isn’t just the formula’s limitations (economists and critics alike have widely panned it). It’s the framing of LLMs as know-it-all answer machines, offering complex policy advice stripped of context, nuance, or accountability.

Good questions matter. But so does knowing how to ask them.

As @krishnanrohit aptly put it:

“This is now an AI safety issue.”

And also: “This is Vibe Governing.”

Without proper guardrails, LLMs in governance risk turning vibes into verdicts. The models may sound confident, but their judgment is only as strong as the prompt behind it. And in this case, the stakes aren’t abstract: they are measured in trillions of dollars in global trade and the livelihoods of millions hanging in the balance.

What Happened: Earlier this month, Jerome Dewald attempted to represent himself in front of an appellate panel of New York State judges with an AI-generated avatar. He had struggled with his words in prior legal settings and hoped an AI avatar would be more eloquent. Thus, in court, he began playing a video of an AI-generated man delivering his arguments until he was promptly stopped by a judge, Justice Sallie Manzanet-Daniels of the Appellate Division’s First Judicial Department. She felt misled. While Mr. Dewald had obtained approval to utilize an accompanying video presentation, he had not disclosed his use of an AI avatar. He has since expressed deep regret and written the judges an apology letter.

📌 MAIEI’s Take and Why It Matters:

While Mr. Dewald’s intent may have been genuine, this incident raises broader concerns about human accountability in high-stakes settings. Legal proceedings demand transparency. Those impacted by court decisions have the right to hear directly from the people involved and not AI-generated personas, no matter how polished or perfect.

This also isn’t an isolated case: AI has similarly been used to replace human voices in other significant contexts. In 2023, three Vanderbilt University administrators were heavily criticized for using ChatGPT to generate a message to students addressing the Michigan State University shooting that killed three students and injured five more people. At a moment when students needed empathy and sincerity from real people, generative AI stepped in instead. The motivation was likely understandable, a desire to “say the right thing” in a difficult moment, but these are precisely the circumstances where a human voice matters most.

Meanwhile, in March 2025, Arizona’s Supreme Court launched AI avatars, “Daniel” and “Victoria,” to explain rulings and improve public access to the judicial system. While the goal is admirable, building trust through transparency, there’s a risk that these tools further detach the public from the human decision-makers behind life-altering judgments.

As Chief Justice Ann Timmer of the Arizona Supreme Court puts it:

“We are, at the end of the day, public servants—and the public deserves to hear from us.”

We agree. In moments that call for clarity, compassion, or accountability, AI should support human voices, not replace them.

What Happened: In a recent internal memo, Shopify CEO Tobi Lütke told employees that before requesting additional headcount, they must first demonstrate that AI can’t do the job. The memo, later shared publicly as “it was in the process of being leaked and (presumably) shown in bad faith,” reflects a broader shift in company culture: “Reflexive AI usage” is now a baseline expectation at Shopify. AI proficiency will also factor into performance reviews. Lütke framed the directive as a response to rapid advances in generative AI and a desire to increase productivity without growing the team.

The memo has sparked strong reactions, both supportive and skeptical, including from Wharton professor Ethan Mollick, who noted that while the policy is bold, it leaves critical questions unanswered:

1) What is management’s vision of what the future of work looks like at Shopify? What do people do all day a few years from now?

2) What is the plan for turning self-directed learning into organizational innovation?

3) How are organizational incentives being aligned so that people want to share what they learn rather than hiding it?

4) How do employees get better at using AI?

📌 MAIEI’s Take and Why It Matters:

Shopify’s memo is a clear signal: AI is no longer optional—it’s foundational. But while the mandate sets a high bar for efficiency, it also raises important questions about how we balance automation with learning, collaboration, and organizational health.

Requiring teams to “prove a human is necessary” flips the burden of justification and could accelerate innovation. But without a clear framework for AI upskilling and institutional support for experimentation, there’s a risk that employees fall into compliance mode rather than true capability-building.

The bigger concern is cultural: When AI is treated as a baseline expectation without addressing who gets to learn, experiment, and fail safely, it can deepen divides rather than close them. A future of work built around AI should be inclusive, not performative.

The memo may be the start of something transformative, but only if paired with a vision for what work looks like with AI, not just because of it.

Did we miss anything? Let us know in the comments below.

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack for the price of a coffee or making a one-time or recurring donation at montrealethics.ai/donate

Your support sustains our mission of Democratizing AI Ethics Literacy, honours Abhishek Gupta’s legacy, and ensures we can continue serving our community.

For corporate partnerships or larger donations, please contact us at support@montrealethics.ai

The Insights & Perspectives summarized below capture the tension between innovation and accountability at three critical fault lines: electoral integrity, public trust, and personal agency.

From Colorado’s effort to regulate deepfakes to Pew’s findings on public skepticism toward AI and Amazon’s quiet redefinition of privacy defaults, each piece demonstrates that the stakes of AI deployment are not abstract—they are legal, social, and deeply personal.

What emerges is a shared pattern: technology is evolving faster than the guardrails meant to protect those most impacted. Regulation struggles to keep pace. Consent is reinterpreted without notice. And the voices of the public, especially those outside tech and policy circles, are still too often marginalized. The work ahead is not just technical; it is civic, participatory, and moral.

AI Policy Corner: The Colorado State Deepfakes Act

By Ogadinma Enwereazu. This article is part of our AI Policy Corner series, a collaboration between the Montreal AI Ethics Institute (MAIEI) and the Governance and Responsible AI Lab (GRAIL) at Purdue University. The series provides concise insights into critical AI policy developments from the local to international levels, helping our readers stay informed about the evolving landscape of AI governance.

In 2024, Colorado enacted a law requiring disclosure of AI-generated deepfakes in political campaigns, part of a growing wave of state-level efforts to regulate synthetic and manipulated media. While over 30 states have introduced similar laws, Colorado’s relatively modest penalties highlight the uneven and still-evolving landscape of AI and election regulation.

To dive deeper, read the full article here.

How the U.S. Public and AI Experts View Artificial Intelligence – Pew Research Center

A recent Pew Research Center study (April 3, 2025) reveals a significant perception gap between AI experts and the American public on AI’s role and risks. While experts advocate for responsible innovation, nuanced regulation, and system transparency, the general public expresses deeper concerns about job displacement, algorithmic bias, and inadequate oversight. This division isn’t merely about technical literacy; it reflects fundamentally different priorities, with experts focusing on interdisciplinary governance while the public emphasizes fairness, harm prevention, and democratic protections. The research suggests public skepticism stems not from ignorance but from legitimate discomfort with distant, technocratic decision-making and uncertainty about who truly benefits from AI advancements. As AI becomes further embedded in healthcare, education, and employment, failing to bridge this trust divide threatens to undermine public confidence and worsen structural inequalities. The findings challenge the narrative that innovation is inherently beneficial, calling for more than technical safeguards. Participatory governance, diverse perspectives, and genuine commitment to amplifying the voices of those most affected by technological transformation are essential to reconciling these divergent viewpoints.

To dive deeper, read the full article here.

Amazon’s Privacy Ultimatum Starts Today: Let Echo Devices Process Your Data or Stop Using Alexa – CNET

In a significant policy shift implemented on March 28, 2025, Amazon made cloud-based voice processing mandatory for all Echo devices with its “Alexa Plus” generative AI upgrade, eliminating users’ ability to prevent voice recordings from being transmitted to Amazon’s servers. While the company asserts that all data is encrypted and promptly deleted after processing, this change fundamentally alters the consent paradigm in ambient AI by converting what was previously a user-controlled privacy setting into a non-negotiable condition of service. The update exemplifies a concerning industry pattern in which advanced features increasingly require centralized data collection while opt-out opportunities diminish, forcing privacy-conscious consumers to either accept deeper integration within Amazon’s data ecosystem or abandon their devices entirely. This development raises critical ethical questions about whether meaningful consent can persist when software updates override previously established user choices. As competitors like Apple embrace privacy-preserving technologies, Amazon’s approach highlights a broader realignment in the tech industry where convenience supersedes control and personalization eclipses permission, ultimately posing growing challenges to users’ agency over technologies that increasingly permeate their everyday lives.

To dive deeper, read the full article here.

Cyberattacks by AI agents are coming – MIT Technology Review

Summary: AI agents are becoming increasingly capable of executing complex tasks, from scheduling meetings to autonomously hacking systems. While cybercriminals are not yet deploying these agents at scale, research shows they’re capable of conducting sophisticated cyberattacks, including data theft and system infiltration. Palisade Research has created a honeypot system to lure and detect these AI-driven agents. Experts warn that agent-led cyberattacks may become more common soon, as they are faster, cheaper, and more adaptable than human hackers or traditional bots. New benchmarks show AI agents can exploit real-world vulnerabilities even with limited prior information.

Why It Matters: The rise of AI agents marks a turning point in cybersecurity, where autonomous systems could soon outpace human-led attacks in both scale and sophistication. While today’s threats remain largely experimental, the infrastructure for widespread AI-driven attacks is already being tested and refined. Palisade’s proactive detection work highlights the importance of early interventions, yet the unpredictability of AI development suggests we may face a sudden surge in malicious use. To stay ahead, the cybersecurity industry must treat AI agents not just as tools but as potential adversaries requiring entirely new defence mechanisms.

To dive deeper, read the full article here.

Introducing Claude for Education – Anthropic

Summary: Anthropic has released Claude for Education, a specialized version of its language model designed for educational institutions and students. This version helps learners by guiding them through questions rather than providing direct answers, creating personalized study guides, and offering feedback on assignments before deadlines.

Why It Matters: When LLMs first entered classrooms, they were often met with fear and panic, leading to outright bans, like New York City’s in 2023, and a scramble to detect AI-generated content to combat a possible rise in cheating, efforts which have now mostly been discontinued. But the conversation is shifting. Instead of blocking AI, institutions are now exploring how to integrate it meaningfully, as seen with Claude for Education and OpenAI’s AI Academy.

Still, some educators argue we’re focusing on the wrong problems. As McGill professor Renee Sieber writes, generative AI in education is overwhelmingly framed around students and learning outcomes, while the real opportunity may lie in reducing administrative burdens that often distract from teaching. If AI can automate the bureaucratic demands placed on faculty, it could free up space for deeper, more human-centred education.

To dive deeper, read the full article here.

Meta’s AI research lab is ‘dying a slow death,’ some insiders say. Meta prefers to call it ‘a new beginning’ – Fortune

Summary: Meta’s long-standing AI research lab, FAIR (Fundamental AI Research), is reportedly undergoing a significant internal transformation. Current and former employees say the lab is “dying a slow death,” citing the departure of key scientists (including McGill professor and Montreal-based Meta head of AI research Joelle Pineau), a decline in internal influence, and fewer ties to Meta’s product teams. The company, however, frames the shift as a “new beginning,” emphasizing a more applied focus for its AI work, with FAIR scientists now working more closely with product development teams.

Why It Matters: FAIR once stood at the forefront of open-ended, “blue sky” AI research, producing influential work on self-supervised learning, large-scale vision systems, and fundamental model design. Its apparent pivot reflects broader industry pressure to commercialize research faster and deliver immediate ROI.

This mirrors a trend across Big Tech: the centre of gravity for AI research is shifting from foundational science to applied deployment. While this may increase short-term impact, some fear it could narrow the scope of inquiry and reduce long-term innovation. It also raises important questions about the future of public-interest AI research and the incentives shaping what gets studied and what gets shelved.

To dive deeper, read the full article here.

We’d love to hear from you, our readers, about any recent research papers, articles, or newsworthy developments that have captured your attention. Please share your suggestions to help shape future discussions!

5 interesting stats to start your week

Third of UK marketers have ‘dramatically’ changed AI approach since AI Act

More than a third (37%) of UK marketers say they have ‘dramatically’ changed their approach to AI since the introduction of the European Union’s AI Act a year ago, according to research by SAP Emarsys.

Additionally, nearly half (44%) of UK marketers say their approach to AI is more ethical than it was this time last year, while 46% report a better understanding of AI ethics, and 48% claim full compliance with the AI Act, which is designed to ensure safe and transparent AI.

The act sets out a phased approach to regulating the technology, classifying models into risk categories and setting up legal, technological, and governance frameworks that will come into force over the next two years.

However, some marketers are sceptical about the legislation, with 28% raising concerns that the AI Act will lead to the end of innovation in marketing.

Source: SAP Emarsys

Shoppers more likely to trust user reviews than influencers

Nearly two-thirds (65%) of UK consumers say they have made a purchase based on online reviews or comments from fellow shoppers, as opposed to 58% who say they have made a purchase thanks to a social media endorsement.

Sports and leisure equipment (63%), decorative homewares (58%), luxury goods (56%), and cultural events (55%) are identified as product categories where consumers are most likely to find peer-to-peer information valuable.

Accurate product information was found to be a key factor in whether a review was positive or negative. Two-thirds (66%) of UK shoppers say that discrepancies between the product they receive and its description are a key reason for leaving negative reviews, whereas 40% of respondents say they have returned an item in the past year because the product details were inaccurate or misleading.

According to research by Akeeno, purchases driven by influencer activity have also declined since 2023, with 50% reporting having made a purchase based on influencer content in 2025 compared to 54% two years ago.

Source: Akeeno

77% of B2B marketing leaders say buyers still rely on their networks

When vetting what brands to work with, 77% of B2B marketing leaders say potential buyers still look at the company’s wider network as well as its own channels.

Given the amount of content professionals are faced with, they are more likely to rely on other professionals they already know and trust, according to research from LinkedIn.

More than two-fifths (43%) of B2B marketers globally say their network is still their primary source for advice at work, ahead of family and friends, search engines, and AI tools.

Additionally, younger professionals surveyed say they are still somewhat sceptical of AI, with three-quarters (75%) of 18- to 24-year-olds saying that even as AI becomes more advanced, there’s still no substitute for the intuition and insights they get from trusted colleagues.

Since professionals are more likely to trust content and advice from peers, marketers are now investing more in creators, employees, and subject matter experts to build trust. As a result, 80% of marketers say trusted creators are now essential to earning credibility with younger buyers.

Source: LinkedIn

Business confidence up 11 points but leaders remain concerned about economy

Business leader confidence has increased slightly from last month, having risen from -72 in July to -61 in August.

The IoD Directors’ Economic Confidence Index, which measures business leader optimism in prospects for the UK economy, is now back to where it was immediately after last year’s Budget.

This improvement comes from several factors, including the rise in investment intentions (up from -27 in July to -8 in August), the rise in headcount expectations from -23 to -4 over the same period, and the increase in revenue expectations from -8 to 12.

Additionally, business leaders’ confidence in their own organisations is also up, standing at 1 in August compared to -9 in July.

Several factors were identified as being of concern for business leaders; these include UK economic conditions at 76%, up from 67% in May, and both employment taxes (remaining at 59%) and business taxes (up to 47%, from 45%) continuing to be of significant concern.

Source: The Institute of Directors

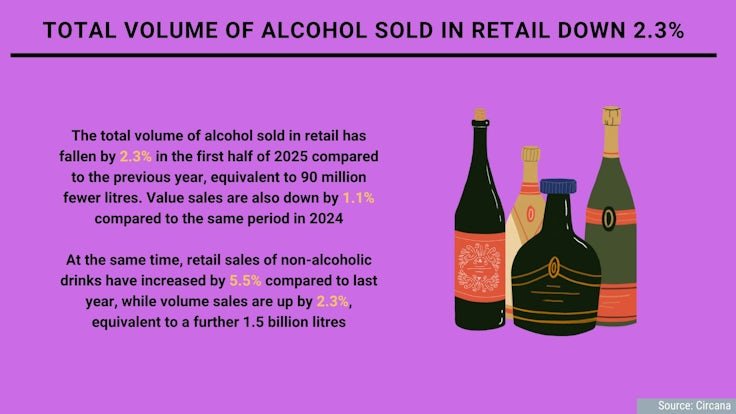

Total volume of alcohol sold in retail down 2.3%

The total volume of alcohol sold in retail has fallen by 2.3% in the first half of 2025 compared to the previous year, equivalent to 90 million fewer litres. Value sales are also down by 1.1% compared to the same period in 2024.

At the same time, retail sales of non-alcoholic drinks have increased by 5.5% compared to last year, while volume sales are up by 2.3%, equivalent to a further 1.5 billion litres.

As the demand for non-alcoholic beverages grows, people increasingly expect these options to be available in their local bars and restaurants, with 55% of Brits and Europeans now expecting bars to always serve non-alcoholic beer.

As well as this, there are shifts happening within the alcoholic beverages category with value sales of no and low-alcohol spirits rising by 16.1%, and sales of ready-to-drink spirits growing by 11.6% compared to last year.

Source: Circana

AI ethics under scrutiny, young people most exposed

New reports into the rise of artificial intelligence (AI) showed incidents linked to ethical breaches have more than doubled in just two years.

At the same time, entry-level job opportunities have been shrinking, partly due to the spread of this automation.

AI is moving from the margins to the mainstream at extraordinary speed and both workplaces and universities are struggling to keep up.

Tools such as ChatGPT, Gemini and Claude are now being used to draft emails, analyse data, write code, mark essays and even decide who gets a job interview.

Alongside this rapid rollout, a March report from McKinsey, one by the OECD in July and an earlier Rand report warned of a sharp increase in ethical controversies — from cheating scandals in exams to biased recruitment systems and cybersecurity threats — leaving regulators and institutions scrambling to respond.

The McKinsey survey said almost eight in 10 organisations now used AI in at least one business function, up from half in 2022.

While adoption promises faster workflows and lower costs, many companies deploy AI without clear policies. Universities face similar struggles, with students increasingly relying on AI for assignments and exams while academic rules remain inconsistent, it said.

The OECD’s AI Incidents and Hazards Monitor reported that ethical and operational issues involving AI have more than doubled since 2022.

Common concerns included accountability (who is responsible when AI errs), transparency (whether users understand AI decisions), and fairness (whether AI discriminates against certain groups).

Many models operated as “black boxes”, producing results without explanation, making errors hard to detect and correct, it said.

In workplaces, AI is used to screen CVs, rank applicants, and monitor performance. Yet studies show AI trained on historical data can replicate biases, unintentionally favouring certain groups.

Rand reported that AI was also used to manipulate information, influence decisions in sensitive sectors, and conduct cyberattacks.

Meanwhile, 41 per cent of professionals report that AI-driven change is harming their mental health, with younger workers feeling most anxious about job security.

LinkedIn data showed that entry-level roles in the US have fallen by more than 35 per cent since 2023, while 63 per cent of executives expected AI to replace tasks currently done by junior staff.

Aneesh Raman, LinkedIn’s chief economic opportunity officer, described this as “a perfect storm” for new graduates: hiring freezes, economic uncertainty and AI disruption, as the BBC reported on August 26.

LinkedIn forecasts that 70 per cent of jobs will look very different by 2030.

Recent Stanford research confirmed that employment among early-career workers in AI-exposed roles has dropped 13 per cent since generative AI became widespread, while employment among more experienced workers and in less AI-exposed roles remained stable.

Companies are adjusting through layoffs rather than pay cuts, squeezing younger workers out, it found.

In Belgium, AI ethics and fairness debates have intensified following a scandal in Flanders’ medical entrance exams.

Investigators caught three candidates using ChatGPT during the test.

Separately, 19 students filed appeals, suspecting others may have used AI unfairly after unusually high pass rates: Some 2,608 of 5,544 participants passed but only 1,741 could enter medical school. The success rate jumped to 47 per cent from 18.9 per cent in 2024, raising concerns about fairness and potential AI misuse.

Flemish education minister Zuhal Demir condemned the incidents, saying students who used AI had “cheated themselves, the university and society”.

Exam commission chair Professor Jan Eggermont noted that the higher pass rate might also reflect easier questions, which were deliberately simplified after the previous year’s exam proved excessively difficult, as well as the record number of participants, rather than AI-assisted cheating alone.

French-speaking universities, in the other part of the country, were not affected by this scandal, as they still conduct medical entrance exams entirely on paper, something Demir said she was considering going back to.

Governing AI with inclusion: An Egyptian model for the Global South

When artificial intelligence tools began spreading beyond technical circles and into the hands of everyday users, I saw a real opportunity to understand this profound transformation and harness AI’s potential to benefit Egypt as a state and its citizens. I also had questions: Is AI truly a national priority for Egypt? Do we need a legal framework to regulate it? Does it provide adequate protection for citizens? And is it safe enough for vulnerable groups like women and children?

These questions were not rhetorical. They were the drivers behind my decision to work on a legislative proposal for AI governance. My goal was to craft a national framework rooted in inclusion, dialogue, and development, one that does not simply follow global trends but actively shapes them to serve our society’s interests. The journey Egypt undertook can offer inspiration for other countries navigating the path toward fair and inclusive digital policies.

Egypt’s AI Development Journey

Over the past five years, Egypt has accelerated its commitment to AI as a pillar of its Egypt Vision 2030 for sustainable development. In May 2021, the government launched its first National AI Strategy, focusing on capacity building, integrating AI in the public sector, and fostering international collaboration. A National AI Council was established under the Ministry of Communications and Information Technology (MCIT) to oversee implementation. In January 2025, President Abdel Fattah El-Sisi unveiled the second National AI Strategy (2025–2030), which is built around six pillars: governance, technology, data, infrastructure, ecosystem development, and capacity building.

Since then, the MCIT has launched several initiatives, including training 100,000 young people through the “Our Future is Digital” programme, partnering with UNESCO to assess AI readiness, and integrating AI into health, education, and infrastructure projects. Today, Egypt hosts AI research centres, university departments, and partnerships with global tech companies—positioning itself as a regional innovation hub.

AI-led education reform

AI is not reserved for startups and hospitals. In May 2025, President El-Sisi instructed the government to consider introducing AI as a compulsory subject in pre-university education. In April 2025, I formally submitted a parliamentary request and another to the Deputy Prime Minister, suggesting that the government include AI education as part of a broader vision to prepare future generations, as outlined in Egypt’s initial AI strategy. The political leadership’s support for this proposal highlighted the value of synergy between decision-makers and civil society. The Ministries of Education and Communications are now exploring how to integrate AI concepts, ethics, and basic programming into school curricula.

From dialogue to legislation: My journey in AI policymaking

As Deputy Chair of the Foreign Affairs Committee in Parliament, I believe AI policymaking should not be confined to closed-door discussions. It must include all voices. In shaping Egypt’s AI policy, we brought together:

- The private sector, from startups to multinationals – to contribute its views on regulation, data protection, and innovation.

- Civil society – to emphasise ethical AI, algorithmic justice, and protection of vulnerable groups.

- International organisations, such as the OECD, UNDP, and UNESCO – to share global best practices and experiences.

- Academic institutions – I co-hosted policy dialogues with the American University in Cairo and the American Chamber of Commerce (AmCham) to discuss governance standards and capacity development.

From recommendations to action: The government listening session

To transform dialogue into real policy, I formally requested the MCIT to host a listening session focused solely on the private sector. Over 70 companies and experts attended, sharing their recommendations directly with government officials.

This marked a key turning point, transitioning the initiative from a parliamentary effort into a participatory, cross-sectoral collaboration.

Drafting the law: Objectives, transparency, and risk-based classification

Based on these consultations, participants developed a legislative proposal grounded in transparency, fairness, and inclusivity. The proposed law includes the following core objectives:

- Support education and scientific research in the field of artificial intelligence

- Provide specific protection for individuals and groups most vulnerable to the potential risks of AI technologies

- Govern AI systems in alignment with Egypt’s international commitments and national legal framework

- Enhance Egypt’s position as a regional and international hub for AI innovation, in partnership with development institutions

- Support and encourage private sector investment in the field of AI, especially for startups and small enterprises

- Promote Egypt’s transition to a digital economy powered by advanced technologies and AI

To operationalise these objectives, the bill includes:

- Clear definitions of AI systems

- Data protection measures aligned with Egypt’s 2020 Personal Data Protection Law

- Mandatory algorithmic fairness, transparency, and auditability

- Incentives for innovation, such as AI incubators and R&D centres

- Establishment of ethics committees and training programmes for public sector staff

The draft law also introduces a risk-based classification framework, in line with global best practices, that categorises AI systems into three tiers:

1. Prohibited AI systems – These are banned outright due to unacceptable risks, including harm to safety, rights, or public order.

2. High-risk AI systems – These require prior approval, detailed documentation, transparency, and ongoing regulatory oversight. Common examples include AI used in healthcare, law enforcement, critical infrastructure, and education.

3. Limited-risk AI systems – These are permitted with minimal safeguards, such as user transparency, labelling of AI-generated content, and optional user consent. Examples include recommendation engines and chatbots.

This classification system ensures proportionality in regulation, protecting the public interest without stifling innovation.

Global recognition: The IPU applauds Egypt’s model

The Inter-Parliamentary Union (IPU), representing over 179 national parliaments, praised Egypt’s AI bill as a model for inclusive AI governance. It highlighted that involving all stakeholders builds public trust in digital policy and reinforces the legitimacy of technology laws.

Key lessons learned

- Inclusion builds trust – Multistakeholder participation leads to more practical and sustainable policies.

- Political will matters – President El-Sisi’s support elevated AI from a tech topic to a national priority.

- Laws evolve through experience – Our draft legislation is designed to be updated as the field develops.

- Education is the ultimate infrastructure – Bridging the future digital divide begins in the classroom.

- Ethics come first – From the outset, we established values that focus on fairness, transparency, and non-discrimination.

Challenges ahead

As the draft bill progresses into final legislation and implementation, several challenges lie ahead:

- Training regulators on AI fundamentals

- Equipping public institutions to adopt ethical AI

- Reducing the urban-rural digital divide

- Ensuring national sovereignty over data

- Enhancing Egypt’s global role as a policymaker—not just a policy recipient

Ensuring representation in AI policy

As a female legislator leading this effort, it was important for me to prioritise the representation of women, youth, and marginalised groups in technology policymaking. If AI is built on biased data, it reproduces those biases. That’s why the policymaking table must be round, diverse, and representative.

A vision for the region

I look forward to seeing Egypt:

- Advance regional AI policy partnerships across the Middle East and Africa

- Embed AI ethics in all levels of education

- Invest in AI for the public good

Because AI should serve people—not control them.

Better laws for a better future

This journey taught me that governing AI requires courage to legislate before all the answers are known—and humility to listen to every voice. Egypt’s experience isn’t just about technology; it’s about building trust and shared ownership. And perhaps that’s the most important infrastructure of all.

The post Governing AI with inclusion: An Egyptian model for the Global South appeared first on OECD.AI.
