Tools & Platforms

Tech stocks head south as investors see that growth in AI is not limitless

- Tech stocks declined in August as investors questioned the limits to growth of AI companies. Nvidia, Marvell Technology, and Super Micro Computer Inc all underperformed the broader market in August. This uncertainty may impact the S&P 500, which is dominated by the “Magnificent 7” tech giants.

The Nasdaq 100 closed down 1.22% on Friday and while U.S. markets are closed today for the Labor Day holiday, futures contracts for the index are not: They’re trading flat this morning, implying that investors are not expecting much from tech stocks once the opening bell rings in New York on Tuesday.

The Nasdaq 100 closed down for the month of August (-0.16%) even though the broader S&P 500 was up 3.56%.

Tech stocks were dogged all month by discussion about whether AI was in a bubble. And a study by MIT suggested that 95% of companies have yet to see a return on their investment in AI.

As Jim Reid and his team of analysts at Deutsche Bank said this morning: “Nvidia (-3.32% on Friday) was a major driver of this softness, losing ground after Marvell Technology’s outlook raised doubts over demand for data-centre equipment and as China’s Alibaba unveiled a new AI Chip. Last Wednesday Nvidia’s results had delivered a modest quarterly beat but saw slowing revenue growth for the data centre division, in part due to a pause in sales of AI chips to China.”

Marvell Technology is based in Santa Clara, California, and makes semiconductor chips. It has a partnership with Nvidia. On its fiscal Q2 2026 earnings call on August 28, CEO Matt Murphy said, “We expect overall data center revenue in the third quarter to be flat sequentially.” Flat is not up, and that sent Marvell’s stock down 19% the next day. (In May, Marvell cancelled its investor day presentations, citing macroeconomic uncertainty.)

That disappointment came after Nvidia’s earnings call the day before. The company reported robust data center revenue growth but it was nonetheless below analyst expectations.

And then there is Super Micro Computer Inc, a server maker buoyed by the AI boom. In early August, it reduced its revenue outlook for the year to $33 billion. Back in February, it had estimated $40 billion. On top of that, on August 28 the company said in its annual report, “We have identified material weaknesses in our internal control over financial reporting, which could, if not remediated, adversely affect our ability to report our financial condition and results of operations in a timely and accurate manner.” Its stock fell 5.5% after that and was down 27% for the month.

Shakiness in AI stocks could have consequences for the broader market. The “Magnificent 7” tech companies (Apple, Amazon, Alphabet, Meta, Microsoft, Nvidia, and Tesla), which have all placed large bets on AI, are currently worth 34% of the entire market cap of the S&P 500.

Here’s a snapshot of the markets globally this morning:

- S&P 500 futures were up 0.1% this morning. U.S. markets are closed for Labor Day.

- STOXX Europe 600 was up 0.19% in early trading.

- The U.K.’s FTSE 100 was up 0.08% in early trading.

- Japan’s Nikkei 225 was down 1.24%.

- China’s CSI 300 was up 0.6%.

- The South Korea KOSPI was down 1.35%.

- India’s Nifty 50 was up 0.81% before the end of the session.

- Bitcoin fell to $109.3K.

AI-powered cameras aim to make Gulf Shores’ roads safer

GULF SHORES, Ala. (WALA) – The City of Gulf Shores is using the power of Artificial Intelligence to track events and make the city safer. It’s the only city in the state and one of just 40 in the country to use this particular AI system to monitor intersection activity.

“So, it tells me that this is a near-miss where a car cut another car off,” said Gulf Shores City Engineer Jenny Wolfschlag as she referenced her computer monitor. “The first car was going southeast, and the second car was going westbound. It left a dot where the accident would have happened if they would have been traveling at a little bit different speed and actually hit each other.”

Wolfschlag has a new, high-tech tool to help her department analyze problems with traffic patterns and make decisions on the best safety-related changes to make. Gulf Shores is the first city in Alabama to put the DERQ-AI platform to work on the street and Wolfschlag said it’s already proving its worth.

“The data that we’re getting out of that system is fantastic,” Wolfschlag said. “One thing that it does that’s really interesting, it produces hot spot maps, so we can pull up a heat map and see in particular, where pedestrians are crossing illegally.”

The integrated camera system does far more than monitor traffic flow in real time. It is also tied into the city’s Centracs software platform, allowing on-the-fly adjustments to signal timing based on the amount and direction of traffic. It can even track pedestrian traffic, something Wolfschlag sees as a valuable feature.

“For example, if you have a group of one or two people crossing the street, it will set a set time. It might give them twenty seconds to cross the street, but if it recognizes a person with a mobility aid, it can automatically adjust that and maybe give them twenty-five seconds to cross the street,” Wolfschlag explained.

Those driving around or walking to the beach would never know Artificial Intelligence was at work making the trip quicker and safer. Those I pointed it out to were impressed and happy to learn of the city’s investment into this technology.

“AI is changing so we might as well adapt to certain situations, but I think it will be good,” Austin Nguyen of Fairhope said.

“I think it’s a great idea,” agreed Mo Rhazi of Baton Rouge. “I think it’s always great to collect data and improve the safety and make sure that you can avoid. Always, a proactive solution is always better than reactive.”

Right now, the only intersection covered by this new AI technology is at Hwy. 59 and E/W Beach Blvd., but that will soon change. The goal is to have an AI device at every intersection between there and Fort Morgan Road.

“Since this really integrates that pedestrian with the vehicle movement, we thought it would be a good place to start. We’re also going to put it in the light at our new high school that is getting ready to open in August of next year,” added Wolfschlag.

It costs the city around $40,000 per intersection to install the DERQ-AI platform. The hope is that they’ll be able to transition at least one intersection per year until the goal is reached.

Copyright 2025 WALA. All rights reserved.

AI edge cloud service provisioning for knowledge management smart applications

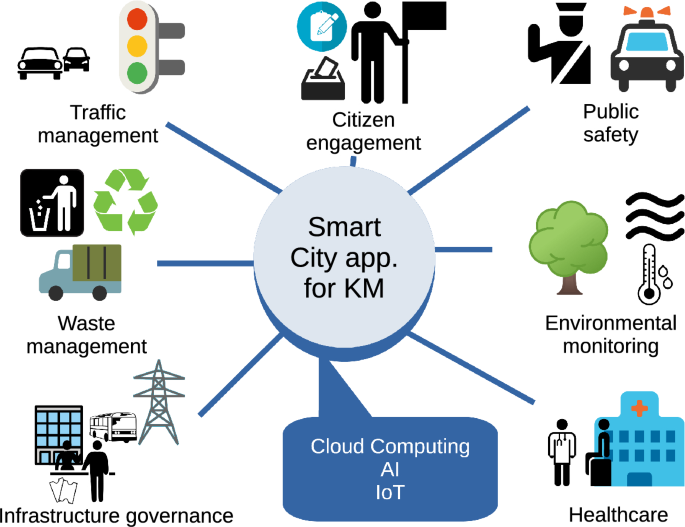

Smart cities enhance the quality of life of their residents and promote transparent management of public resources, including financial assets, natural resources, and infrastructure. Successful smart city initiatives rely on three core components in knowledge management (KM): technology, people, and institutions. Together, these elements support urban infrastructure and governance, improving public services and citizen engagement. Technology-driven services leverage data to gain insights, crowdsource ideas, and deliver enhanced public services. Additionally, smart applications empower citizens to participate in shaping public policy, enriching both decision-making processes and the value of business operations.

KM in a smart city forms a cyber-physical social system that encourages collaboration among organizations and stakeholders, creating a sophisticated technological network within the urban ecosystem. Key components of this network are AI, IoT and Big Data, which play critical roles in knowledge co-creation, restructuring KM processes, and facilitating the exchange of human and organizational knowledge. Stakeholders contribute essential data, and trust is a crucial factor in fostering agreements between them and the municipality to effectively deploy smart technologies and successfully implement smart initiatives6,29.

For transformative innovations that reshape how organizations and companies deliver benefits within the ecosystem, a supportive framework must emerge. This includes investments in new systems and skill development by firms, as well as customers adapting to the new approach and learning to use it to generate value. Over time, customers and citizens themselves become more adept at using information to manage their lives as workers, consumers, and travellers.

Modern platforms depend on the ability of businesses and individuals to create, access, and analyse vast amounts of data across various devices. Digital technologies such as social networks and mobile applications are driving the expansion of platforms into smart applications. These platforms utilize Big Data to collect, store, and manage extensive data sets30. A major challenge for the integration of smart applications into knowledge management processes is the workforce skill gap. To avoid the cost of acquiring new talent with the right expertise, Kolding et al.31 conclude that organizations should plan training programs in advance to update the skills of their employee base in order to meet long-term development goals and other enterprise-wide priorities.

Figure 1 shows examples of smart city applications that involve knowledge management processes. The following sections will explore the technologies that establish platforms for knowledge management in smart cities.

Cloud computing

Cloud computing is a computing paradigm where resources, be they applications and software, data, frameworks, servers or hardware, are hosted on remote servers and accessed over the internet. This makes those resources available from anywhere with an internet connection. “Weak” computing devices send their computations to cloud servers, a practice known as computation offloading32.

The cloud brings numerous key benefits. It enables ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction33. It is a rapidly evolving field that has gained significant traction in recent years. It encompasses a range of technologies and market players, and is expected to continue growing in the future34.

Organizations gain a set of advantages by using the cloud, including cost savings, scalability, reliability and flexibility7. It also allows organizations to focus on their core competencies instead of managing IT infrastructure. Additionally, cloud computing can help organizations improve their agility, since they no longer need to wait for hardware to be provisioned and deployed. Furthermore, cloud computing enables organizations to access powerful applications and services that they may not have been able to afford on their own8.

Cloud resources range from data and software to concrete hardware resources. The following list provides an overview of the typical applications of cloud computing.

- Web-based applications: Cloud computing provides a platform for hosting web applications, allowing businesses to deploy and scale their applications without the need for significant upfront investment in hardware infrastructure.

- Data storage and backup: Cloud storage services offer scalable and reliable data storage solutions, allowing businesses to store and back up their data securely in the cloud.

- Software as a service (SaaS): Cloud-based SaaS applications enable users to access software applications over the internet, eliminating the need for local installation and maintenance.

- Infrastructure as a service (IaaS): IaaS providers offer virtualized computing resources, enabling businesses to build and manage their IT infrastructure in the cloud.

- Platform as a service (PaaS): PaaS offerings provide a platform for developers to build, deploy, and manage applications without the complexity of managing underlying infrastructure.

- Big data analytics: Cloud computing platforms provide the scalability and computing power required for processing and analyzing large volumes of data, making it easier for organizations to derive insights from their data.

- Internet of things: Cloud platforms offer services for managing and processing data generated by IoT devices, enabling real-time analytics and decision-making.

- Artificial intelligence and machine learning: Cloud-based AI and machine learning services provide access to powerful algorithms and computing resources for training and deploying machine learning models.

- Content delivery networks (CDNs): Cloud-based CDNs distribute content such as web pages, images, and videos to users worldwide, reducing latency and improving performance, which in turn translates to a better user experience.

- Development and testing environments: Cloud platforms offer on-demand access to development and testing environments, allowing developers to quickly provision resources and collaborate on projects.

- Disaster recovery and business continuity: Cloud-based disaster recovery services provide organizations with the ability to replicate and recover their IT infrastructure and data in the event of a disaster.

For smart city applications, offloading makes it possible to deploy complex applications on low-power mobile devices. An intrinsic problem for an offloading application is resource allocation: smart city applications need to allocate remote computing and networking resources to satisfy their requirements35,36,37. One of the main challenges of the offloading model is complying with the Service Level Agreement (SLA) between the cloud provider and the client. Generally, cloud platforms optimize resource scheduling and latency. These techniques strive to meet task deadlines despite urgency and resource budget limits. The extra resources needed during peak demand, known as marginal resources, are a challenge due to the variability of demand38,39. There are several strategies for this problem, such as decision support models using the top-k nearest neighbour algorithm38 or minority game theory40. Other authors incorporate deep learning into their resource allocation schemes35,41.
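The offloading trade-off described above can be illustrated with a minimal sketch. It is not one of the cited allocation schemes: it assumes a simple latency model in which a task is offloaded when uploading its input and running it remotely is faster than running it locally, and it then checks the result against a deadline. The names `Task`, `local_time`, `offload_time` and `decide`, and all the numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs (assumed known)
    input_bits: float  # data to upload if the task is offloaded


def local_time(task: Task, device_hz: float) -> float:
    """Seconds to run the task on the device itself."""
    return task.cycles / device_hz


def offload_time(task: Task, uplink_bps: float, server_hz: float, rtt_s: float) -> float:
    """Seconds to upload the input, run remotely, and receive the reply."""
    return task.input_bits / uplink_bps + task.cycles / server_hz + rtt_s


def decide(task: Task, device_hz: float, uplink_bps: float,
           server_hz: float, rtt_s: float, deadline_s: float) -> dict:
    """Pick the faster option and report whether it meets the deadline."""
    t_local = local_time(task, device_hz)
    t_remote = offload_time(task, uplink_bps, server_hz, rtt_s)
    return {
        "offload": t_remote < t_local,
        "local_s": t_local,
        "remote_s": t_remote,
        "meets_deadline": min(t_local, t_remote) <= deadline_s,
    }


# Hypothetical workload: 2 G cycles, 1 MB of input, a 1 GHz device,
# a 20 Mbit/s uplink, a 10 GHz-equivalent server and a 50 ms round trip.
example = decide(Task(cycles=2e9, input_bits=8e6), device_hz=1e9,
                 uplink_bps=20e6, server_hz=10e9, rtt_s=0.05, deadline_s=1.0)
print(example)
```

A real allocator would also account for energy use, queueing at the server and the SLA terms mentioned above; the sketch only shows why a slow uplink or a long round trip can make local execution the better choice.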

Cloud computing supports knowledge management processes. It lowers the barrier to entry by eliminating the need to invest in IT infrastructure to implement knowledge management systems. Instead of investing in expensive infrastructure and hardware, organizations can leverage cloud resources on a pay-as-you-go basis, reducing upfront costs and operational expenses. It offers an alternative to the classical approach by providing the mechanisms to control, virtualize and externalize the infrastructure42. This is especially important for SMEs, where the lack of adequate technical capabilities can hinder the implementation of knowledge management strategies43. A framework for Knowledge Management as a Service (KMaaS) allows users to access services at any time, from anywhere, and from any device, based on the user subscription for a specific domain. Knowledge management processes are affected by this technology, especially knowledge sharing, knowledge creation, and knowledge transfer44. The implementation of cloud computing technologies also affects overall organizational agility. Cloud computing gives organizations the capacity to deploy mass computing technology quickly and respond rapidly to changes in the market. As a result, the performance of an organization is positively affected by cloud computing43. Practical applications of cloud computing in knowledge management include45:

- Knowledge storage and sharing: Cloud platforms facilitate centralized storage, allowing SMEs to store, retrieve, and share knowledge in real time.

- Collaboration and communication: Cloud-based tools (e.g., Google Drive, Microsoft Teams) enhance teamwork, remote work, and knowledge exchange.

- Data security and backup: Cloud computing provides secure environments with automated backups, minimizing data loss risks.

- Scalability and cost-efficiency: SMEs can scale their KM systems without significant infrastructure investments.

- AI and analytics integration: Cloud computing enables AI-driven knowledge discovery, pattern recognition, and decision-making.

- Knowledge process automation: Automating workflows and document management improves efficiency in KM processes.

Edge computing

Edge computing has emerged as a transformative paradigm in distributed computing, bringing computational and storage resources closer to the point of origin or consumption of data. This paradigm shift addresses the critical limitations of traditional cloud computing by reducing latency, saving bandwidth, and enabling real-time data processing46. Unlike centralized cloud architectures, edge computing strategically places resources at the network edge, facilitating ultra-responsive systems and location-aware applications47.

The importance of edge computing is further underscored by its potential to support latency-sensitive applications in areas such as IoT, intelligent manufacturing, and autonomous systems. By processing data locally, edge nodes alleviate network congestion and enhance system reliability48. Moreover, edge computing is uniquely positioned to complement existing cloud infrastructure by acting as a bridge, ensuring seamless data transfer and computational efficiency49.

This paradigm also introduces new opportunities and challenges in distributed system design, including efficient resource allocation, robust system architectures, and scalable management solutions. Edge computing’s adaptability to evolving computational demands and its proximity to users make it a cornerstone of next-generation digital ecosystems50. By leveraging its unique capabilities, edge computing not only enhances existing technologies but also paves the way for innovative applications across diverse domains.

Edge computing plays a pivotal role in enhancing knowledge management processes by addressing the critical challenges of data accessibility, processing efficiency, and timely decision-making. Its ability to integrate seamlessly with existing technologies and facilitate localized data processing has made it invaluable for knowledge-intensive operations.

One notable application of edge computing in knowledge management is in fostering collaboration across industrial systems. For instance, multi-access edge computing (MEC) frameworks enable the creation of knowledge-sharing environments within smart manufacturing contexts, such as intelligent machine tool swarms in Industry 4.0. This integration supports real-time data exchange and decision-making, critical for efficient knowledge dissemination51.

Furthermore, edge computing contributes significantly to green supply chain management by facilitating the sharing of critical knowledge across enterprises. It enhances transparency and reduces resource consumption by integrating blockchain technologies to secure data transactions52. In open manufacturing ecosystems, edge computing has been employed to establish cross-enterprise knowledge exchange frameworks. By integrating blockchain and edge technologies, these frameworks ensure secure and efficient management of trade secrets and regional constraints53. From an enterprise innovation perspective, edge computing-based knowledge bases allow for enhanced data processing and storage at the edge, aligning with organizational goals of speed and reliability54. Edge computing also demonstrates its versatility in master data management by processing data at the source. This capability minimizes latency and ensures real-time updates for critical knowledge databases, particularly in dynamic business environments55. Finally, edge computing’s integration into virtualized communication systems has paved the way for knowledge-centric architectures. Such systems optimize data collection and sharing, ensuring that actionable knowledge reaches stakeholders effectively56.

There are several examples of the adoption of edge computing in KM processes. For example, Coppino57 studied Industry 4.0 adoption for knowledge management among Italian SMEs. The research highlights that edge computing, when integrated with knowledge management, enables SMEs to scale knowledge-sharing processes while improving real-time decision-making. However, SMEs struggle with insufficient IT infrastructure and a lack of expertise, which limits overall adoption. For that reason, the author recommends developing frameworks to reduce technological investments. Stadnika et al.58 surveyed representatives of companies from mainly European countries. Their results show that enterprises use edge data processing to increase data security and reduce latency.

Serverless computing

Serverless computing is a paradigm within cloud computing where developers can focus solely on writing and deploying applications without concerning themselves with the underlying infrastructure. In a serverless architecture, the cloud provider dynamically manages the allocation and provisioning of servers, allowing developers to create event-based applications without having to explicitly manage servers or scaling concerns59,60.

Instead of traditional server-based models where developers need to provision, scale, and manage servers to run applications, serverless computing abstracts away the infrastructure layer entirely. Developers simply upload their application, define the events that trigger its execution (such as HTTP requests, database changes, file uploads, etc.), and the cloud provider handles the rest, automatically scaling resources up or down as needed61,62.
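The event-driven model described above can be sketched in a few lines. This is a generic illustration, not the API of any particular provider: the `on` decorator, the `dispatch` function, the event field names and the `file_uploaded` event type are all hypothetical stand-ins for what a platform would supply. The handler is stateless: everything it needs arrives in the event.

```python
import json
from typing import Any, Callable, Dict

Event = Dict[str, Any]
Handler = Callable[[Event], Dict[str, Any]]

# Hypothetical registry mapping event types to handlers; on a real
# platform this binding is declared in the provider's configuration.
_ROUTES: Dict[str, Handler] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function as the handler for one event type."""
    def register(fn: Handler) -> Handler:
        _ROUTES[event_type] = fn
        return fn
    return register


@on("file_uploaded")
def index_document(event: Event) -> Dict[str, Any]:
    """Stateless handler: derives its result only from the event payload."""
    words = len(event["body"].split())
    return {"status": 200, "body": json.dumps({"name": event["name"], "words": words})}


def dispatch(event: Event) -> Dict[str, Any]:
    """Stand-in for the platform: route an incoming event to its handler."""
    handler = _ROUTES.get(event["type"])
    if handler is None:
        return {"status": 404, "body": json.dumps({"error": "no handler"})}
    return handler(event)


response = dispatch({"type": "file_uploaded", "name": "report.txt",
                     "body": "smart city sensor data"})
print(response)
```

Because the handler keeps no state between calls, the platform is free to run zero, one or many copies of it, which is what makes automatic scaling possible.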

This model offers several advantages:

- Scalability: Serverless platforms automatically scale resources based on demand. Applications can handle sudden spikes in demand without manual intervention.

- Cost-effectiveness: With serverless computing, you only pay for the actual compute resources consumed during the execution. There are no charges for idle time, which can lead to cost savings, especially for applications with sporadic or unpredictable workloads.

- Simplified operations: Since there’s no need to manage servers, infrastructure provisioning, or scaling, developers can focus more on developing applications and less on system configuration. This can accelerate development cycles and reduce operational overhead.

- High availability: Serverless platforms typically offer built-in high availability and fault tolerance features. Cloud providers manage the underlying infrastructure redundancies and ensure that applications remain available even in the event of failures.

- Faster time to market: By abstracting away infrastructure concerns and simplifying operations, serverless computing allows developers to deploy applications more quickly, enabling faster iteration and innovation.

Serverless computing is being explored as a solution for smart society applications, due to its ability to automatically execute lightweight functions in response to events. It offers benefits such as lower development and management barriers for service integration and roll-out. Typically, IoT applications use a computing outsourcing architecture with three major components for the processing of knowledge: sensor nodes, networked devices and actuators. At the data-gathering point, sensors collect data from specific locations or sites and submit it to the cloud service. The sensor data is then analysed on cloud servers, where it is processed to extract useful knowledge. Applications expose connection points, in the form of web applications, through which clients access that knowledge63,64.

Using the traditional cloud approach, services must always be active to listen for requests from clients or cloud users. The implementation of always-on microservices is not a viable solution when considering the green aspect of systems. Holding servers for longer periods also increases the cost of the cloud services, as cloud computing is a pay-as-you-go computing model. With the serverless model, when an event is triggered by an application request, the computing resources needed to execute the function are provisioned, and they are released once they are no longer needed (scale to zero). With this model, applications, processes and platforms benefit from a reduced cost of operation, as only useful computing time is paid for65. In fact, case studies show that entities that adopt the serverless paradigm achieve a lower cost of operation and faster response times66.
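The cost argument can be made concrete with a small worked comparison. The sketch below assumes a deliberately simplified billing model (a flat hourly rate for an always-on server, a flat per-second rate for function execution) and uses made-up prices; real provider pricing has more components, such as per-request fees and memory tiers. All function names and figures are hypothetical.

```python
def always_on_cost(hours: float, hourly_rate: float) -> float:
    """Cost of keeping a server running, busy or idle."""
    return hours * hourly_rate


def pay_per_use_cost(invocations: int, seconds_per_invocation: float,
                     rate_per_second: float) -> float:
    """Cost when only execution time is billed (scale to zero when idle)."""
    return invocations * seconds_per_invocation * rate_per_second


def break_even_invocations(hours: float, hourly_rate: float,
                           seconds_per_invocation: float,
                           rate_per_second: float) -> float:
    """Invocation count at which both models cost the same."""
    per_call = seconds_per_invocation * rate_per_second
    return always_on_cost(hours, hourly_rate) / per_call


# Hypothetical month: 720 hours, 0.05 per server-hour, 0.00002 per
# function-second, 0.2 s per invocation, 100,000 invocations.
server = always_on_cost(720, 0.05)
functions = pay_per_use_cost(100_000, 0.2, 0.00002)
threshold = break_even_invocations(720, 0.05, 0.2, 0.00002)
print(f"always-on: {server:.2f}, pay-per-use: {functions:.2f}, "
      f"break-even at about {threshold:,.0f} invocations")
```

Under these assumed prices the sporadic workload is far cheaper on the pay-per-use model, while a workload well above the break-even point would favour the always-on server.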

Serverless edge computing

With the increasing number of IoT devices, the load on cloud servers continues to grow, making it essential to minimize data transfers and computations sent to the cloud11. Edge computing addresses this need by relocating computations closer to where data is gathered, utilizing IoT devices or local edge servers to perform processing tasks67. This proximity improves latency, bandwidth efficiency, trust, and system survivability.

Serverless edge computing enables running code at edge locations without managing servers. It introduces a pay-per-use, event-driven model with “scale-to-zero” capability and automatic scaling at the edge. Applications in this model are structured as independent, stateless functions that can run in parallel67. Edge networks typically consist of a diverse range of devices, and a serverless framework allows applications to be developed independently of the specific infrastructure68. With the infrastructure fully managed by the provider, serverless edge applications are simpler to develop than traditional ones, making them ideal for latency-sensitive use cases.

Numerous studies have examined the challenges and opportunities within this model, proposing various approaches to leverage its strengths. Reduced latency and cost-effective computation are especially valuable in real-time data analytics69. Organizations benefit from the scalability of the serverless paradigm, which supports a flexible, expansive data product portfolio70. In this context, serverless edge computing enables more affordable data processing and improves user experience by reducing latency in data access and knowledge delivery interfaces.

Today, there are several serverless edge providers offering their services71. We studied ten different providers (Akamai Edge, Cloudflare Workers, IBM Edge Functions, AWS, EDJX, Fastly, Azure IoT Edge, Google Distributed Cloud, Stackpath and Vercel Serverless Functions) to understand how they offer their services. These providers can be divided into two main categories. The first category improves traditional CDN functionality by leveraging serverless functions to modify HTTP requests before they are sent to the user. The second group offers serverless edge computing frameworks that integrate edge infrastructure with their cloud platforms, enabling clients to build and incorporate their own private edge infrastructure into the public cloud. Additionally, we identified one provider, EDJX, that employs a unique Peer-to-Peer (P2P) technology to execute its serverless edge functions.

The technologies used by these providers are similar to their traditional serverless cloud counterparts. Applications are typically implemented in high-level programming languages such as JavaScript, Python, and Java. Development is usually streamlined through the provider’s framework, which supplies all the tools needed.

AI in KM processes

Knowledge management processes benefit significantly from AI through enhanced data processing, analysis, automation, and decision-making capabilities. AI brings sophisticated tools to KM that help organizations capture, organize, share, and apply knowledge more effectively. The following key points were extracted from the literature review:

-

Knowledge discovery and extraction. AI tools, particularly natural language processing (NLP) and machine learning, can extract insights from vast amounts of unstructured data (e.g., documents, emails, reports, and social media). NLP enables AI to parse and understand text, identifying valuable patterns, relationships, and topics within data sources. For instance, AI can automatically categorize and tag documents, identify key insights, and detect trends that are relevant to the organization. In scientific research or industry, AI-driven tools can mine research papers, patents, and technical documents to highlight emerging technologies and innovations72,73.

-

Organizing and structuring knowledge. AI-driven categorization, clustering, and tagging help organize knowledge into structured formats for easier retrieval. Using machine learning algorithms, AI can automatically classify information based on its content and relevance, enabling employees to find information more quickly. Semantic analysis, powered by AI, also groups related documents and concepts, creating a connected network of information that mirrors human knowledge organization74.

-

Knowledge sharing and recommendation systems. AI-powered recommendation systems suggest relevant knowledge resources to users based on their roles, recent activities, or queries. It is most common used to recommend content in digital platforms such as YouTube, Netflix, or Amazon. By analysing usage patterns and preferences, AI-driven KM systems can suggest documents, experts, or solutions that match immediate needs. This tailored approach to knowledge sharing ensures that clients and users receive the most relevant information without needing to sift through large repositories75.

-

Automating knowledge capture. AI technologies, such as robotic process automation (RPA) and machine learning, facilitate automatic knowledge capture by monitoring and recording daily activities and processes within an organization. For example, AI-powered chatbots can log interactions with customers or employees, storing valuable insights from these interactions in a knowledge base. AI also captures information from emails, meetings, or customer support calls, automatically adding relevant details to knowledge repositories76.

-

Enhancing knowledge retrieval with search and NLP. AI improves knowledge retrieval by enabling more sophisticated search mechanisms. With NLP, AI systems understand user queries in natural language, refining search results based on the intent behind queries rather than just matching keywords. Advanced AI-driven search engines in KM systems also employ semantic search to understand contextual relationships between terms, improving the accuracy and relevance of results77,78.

-

Contextualizing and personalizing knowledge. AI can personalize the KM experience by tailoring knowledge delivery based on an employee’s role, department, or project involvement. Using machine learning, KM platforms analyse patterns in user behaviour to predict and deliver information that aligns with individual needs. This contextualization makes knowledge sharing more effective, ensuring that the right knowledge reaches the right person at the right time79.

-

Augmenting decision-making and expertise. AI supports decision-making by providing analytical insights drawn from historical data, documents, and external sources. In KM, AI-driven predictive analytics and machine learning models assess past data to offer insights, identify risks, and make informed predictions. Expert systems can also use AI to simulate the decision-making processes of human experts, providing guidance on complex tasks80.

-

Developing virtual assistants for knowledge management. AI-based virtual assistants, like chatbots, facilitate KM by answering employee questions, providing document links, or assisting with common tasks. NLP-powered chatbots in KM systems help employees access the knowledge they need by interacting through natural language queries. These assistants can handle frequently asked questions, provide guided instructions, and retrieve information, making KM accessible and interactive, which ends up increasing overall productivity81.

-

Supporting knowledge creation through insights and innovation. AI-driven analytics can highlight trends, patterns, and gaps in an organization’s knowledge, encouraging innovation and knowledge creation. By identifying emerging trends, AI helps organizations remain competitive and proactive in knowledge development. AI tools can also support research and development by generating insights from internal and external data, suggesting new ideas or directions for innovation82.
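The retrieval and personalization points above can be made concrete with a small sketch. It is an illustration only, under stated assumptions: it ranks documents by TF-IDF cosine similarity, which is a lexical stand-in for the embedding-based semantic search described above, and it adds a fixed bonus when a document's owning role matches the user's role. The function names, the `role_boost` parameter, and the sample documents are all hypothetical and do not come from any cited system.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into words, dropping edge punctuation."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return [w for w in words if w]


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF, so a term that occurs in every document keeps a non-zero weight.
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in doc_freq.items()}
    vectors = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, docs, roles, user_role, role_boost=0.2):
    """Rank matching documents; roles[i] is the (hypothetical) owning role of docs[i]."""
    vectors, idf = tfidf_vectors(docs)
    q = {t: tf * idf.get(t, 0.0) for t, tf in Counter(tokenize(query)).items()}
    scored = []
    for i, vec in enumerate(vectors):
        score = cosine(q, vec)
        if score > 0 and roles[i] == user_role:
            score += role_boost  # personalization: favour documents for the user's role
        scored.append((score, i))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for score, i in scored if score > 0]


docs = [
    "Traffic sensor maintenance guide",
    "Parking permit policy for residents",
    "Traffic signal timing policy",
]
roles = ["engineer", "clerk", "clerk"]
print(search("traffic policy", docs, roles, "clerk"))
print(search("traffic policy", docs, roles, "engineer"))
```

The same query yields a different ordering for the two roles, which is the personalization effect in miniature. A production KM system would replace the TF-IDF vectors with learned embeddings and learn the role weighting from usage data rather than fixing it by hand.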

Applications and platforms for knowledge management in the smart city

Research on service provisioning models for KM in smart cities reveals a variety of approaches, emphasizing the integration of technological and organizational strategies to optimize services.

Several studies highlight the critical role of structured frameworks and platforms to facilitate efficient service delivery. For instance, Prasetyo et al.83 propose a service platform that aligns with smart city architecture, promoting digital service introduction in dynamic urban environments. Similarly, Yoon et al.84 describe HERMES, a platform that uses GS1 standards to streamline service sharing and discovery, enabling citizens to efficiently engage with services in a geographically and linguistically optimized manner.

Smart city service models also emphasize interoperability and tailored service offerings for diverse urban needs. The study by Kim et al.85 discusses adapting service provisioning models according to urban types, underscoring the need for knowledge services tailored to the unique characteristics of each city. Additionally, Weber & Zarko86 argue for regulatory frameworks to support interoperability, ensuring that services can be consistently deployed across various smart city contexts.

Technological advancements, including AI, IoT and big data, are also foundational to KM service provisioning in smart cities. Sadhukhan87 develops a framework that integrates IoT for data collection and processing, addressing the challenges posed by heterogeneous technologies in smart city infrastructures. In the same vein, 5G technology is viewed as a transformative tool for enhancing service efficiency, especially in traffic management, healthcare, and public safety domains88.

The convergence of these platforms and frameworks signals a broader move towards knowledge-driven, citizen-centric service models. Efforts like the model of Caputo et al.89 illustrate the importance of stakeholder engagement in building sustainable, effective service chains within smart cities. Ultimately, these models aim to transform urban living by making smart city services accessible, efficient, and responsive to the needs of the citizens.

Findings

Knowledge management has driven organizations and companies to invest heavily in harnessing, storing and sharing information in knowledge networks. In the smart city context, this has led to a proliferation of innovative platforms and applications based on technologies such as AI, big data and IoT. Processing the vast amounts of data involved requires a supporting computing architecture. Cloud computing is well suited to providing the necessary resources, owing to its flexibility and pay-per-use model.

Serverless edge computing has gained significant attention recently for its potential to reduce costs and simplify development. Numerous technologies have emerged to enhance performance and minimize latency, reflecting strong industry interest. This trend is evident in the growing number of providers now offering serverless edge services. For knowledge management, it enables faster data processing, reduced latency, improved scalability, and enhanced reliability. The serverless edge paradigm also reduces the costs of developing and maintaining new knowledge management systems, which is especially beneficial for SMEs.

KM in smart cities requires structured frameworks and platforms to enable efficient service delivery. Frameworks aligned with smart city architecture support the smooth integration of digital services, while platforms that prioritize interoperability and adaptability ensure that services meet diverse urban needs. Foundational technologies such as AI, IoT, and big data play a critical role in facilitating KM, as they streamline data collection, processing, and sharing. These technologies, combined with 5G connectivity, support essential services like traffic management, healthcare, and public safety. The convergence of these tools indicates a shift toward knowledge-driven, citizen-centric models that prioritize accessibility and responsiveness, with stakeholder engagement emerging as a vital component for building effective, sustainable smart city services.

In particular, AI significantly enhances KM by improving data processing, analysis, automation, and decision-making. Key aspects include knowledge discovery, where AI tools extract insights from unstructured data, and organization, where AI-driven categorization and clustering make knowledge more accessible. AI also aids in knowledge sharing by recommending relevant resources to users based on their roles and activities. Automation supports the capture of knowledge from daily activities, while enhanced retrieval techniques allow AI systems to understand and respond accurately to user queries. Personalization and contextualization further tailor the KM experience, ensuring that knowledge reaches the right individuals. Additionally, AI augments decision-making by providing predictive insights and supports innovation by identifying trends, gaps, and opportunities for knowledge creation.

Providers have leveraged existing infrastructure to deliver their services, with most vendors basing their offerings on established CDNs. These CDNs provide a global network of strategically located computing nodes, enabling reduced latency compared to traditional cloud servers. Many vendors also offer frameworks that facilitate easy integration of clients’ own edge infrastructure. However, despite their improved latency, CDN servers remain centralized in specific geographic locations90.

Related work

The integration of artificial intelligence and edge cloud computing has enabled advances in various applications. Duan et al.91 provide a comprehensive survey on distributed AI that uses edge cloud computing, highlighting its use in various AIoT applications and enabling technologies. Similarly, Walia et al.92 focus on resource management challenges and opportunities in Distributed IoT (DIoT) applications.

The synergy of cloud and edge computing to optimize service provisioning is well-documented. Wu et al.93 discuss cloud-edge orchestration for IoT applications, emphasizing real-time AI-powered data processing. Hossain et al.94 highlight the integration of AI with edge computing for real-time decision-making in smart cities, focusing on intelligent traffic systems and other data-driven applications. Kumar et al.95 present a deadline-aware, cost-effective and energy-efficient resource allocation approach for mobile edge computing, which outperforms existing methods in reducing processing time, cost, and energy consumption.

The taxonomy and systematic reviews by Gill et al.96 provide a detailed overview of AI on the edge, emphasizing applications, challenges, and future directions. Their research underscores the potential of AI-driven edge-cloud frameworks for improved scalability and resource management.

The synergy between edge and cloud computing has been applied to improve predictive maintenance systems. For example, Sathupadi et al.97 highlight how real-time analysis of sensor networks can enhance outcomes through AI integration. Additionally, Campolo et al.98 explore how distributing AI across edge nodes can effectively support intelligent IoT applications.

Iftikhar et al.99 contribute to the discussion on AI-based systems in fog and edge computing, presenting a taxonomy for task offloading, resource provisioning, and application placement. Gu et al.100 propose a collaborative computing architecture for smart grids, blending cloud-edge-terminal layers for enhanced network efficiency. Jazayeri et al.101 propose a latency-aware and energy-efficient computation offloading approach for mobile fog computing using a Hidden Markov Model-based Auto-scaling Offloading (HMAO) method, which optimally distributes computation tasks between mobile devices, fog nodes, and the cloud to balance execution time and energy consumption.
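The latency and energy trade-off behind such offloading approaches can be illustrated with a toy decision rule. This is a minimal sketch under simplifying assumptions, not the HMAO method or any other cited algorithm: each tier has a fixed compute rate, bandwidth and round-trip time, the device spends energy only while it computes locally or transmits, and the cost is a hand-picked weighted sum. All names, fields and numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    compute_rate: float  # CPU cycles per second available to the task
    bandwidth: float     # bytes per second from device to tier (math.inf if local)
    round_trip: float    # fixed network round-trip time in seconds
    device_power: float  # watts drawn on the device while computing (local) or sending (remote)


def estimate(tier, cycles, input_bytes):
    """Return (latency in seconds, device energy in joules) for running on a tier."""
    transfer = input_bytes / tier.bandwidth  # evaluates to 0.0 for the local tier
    compute = cycles / tier.compute_rate
    latency = tier.round_trip + transfer + compute
    # The device is busy computing when local, and only transmitting when remote.
    busy = compute if math.isinf(tier.bandwidth) else transfer
    return latency, tier.device_power * busy


def choose_tier(tiers, cycles, input_bytes, deadline, latency_weight=0.5):
    """Pick the cheapest tier that meets the deadline, or None if none does."""
    best = None
    for tier in tiers:
        latency, energy = estimate(tier, cycles, input_bytes)
        if latency > deadline:
            continue  # this tier cannot finish in time
        # Illustrative cost that mixes seconds and joules with an arbitrary weight.
        cost = latency_weight * latency + (1 - latency_weight) * energy
        if best is None or cost < best[0]:
            best = (cost, tier.name)
    return None if best is None else best[1]


tiers = [
    Tier("device", compute_rate=1e9, bandwidth=math.inf, round_trip=0.0, device_power=2.0),
    Tier("edge", compute_rate=1e10, bandwidth=1e7, round_trip=0.01, device_power=1.0),
    Tier("cloud", compute_rate=1e11, bandwidth=2e6, round_trip=0.08, device_power=1.0),
]
print(choose_tier(tiers, cycles=2e9, input_bytes=1e6, deadline=1.0))
print(choose_tier(tiers, cycles=1e6, input_bytes=5e6, deadline=1.0))
```

With these made-up numbers, the compute-heavy task is sent to the edge tier while the small task with a large input stays on the device, since uploading its data would cost more than computing it locally. Published approaches replace the fixed parameters with measured or predicted system state.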

Lastly, Ji et al.102 delve into AI-powered mobile edge computing for vehicle systems, emphasizing distributed architecture and IT-cloud provisions. These studies collectively underscore the transformative impact of AI-integrated edge-cloud frameworks across domains, particularly in knowledge management and smart applications. The novelty of our work lies in the conceptualization of a general model architecture for these use cases, where resources can be more efficiently used across applications.

Table 2 provides a summary of studies on AI-based edge-cloud architectures. While this topic has already been discussed extensively, the aim of this paper is to integrate this architecture with AI-based knowledge management applications.


Tech Founder Paid Anguilla $700,000 to Use the .ai Domain

Anguilla hit the jackpot with its national website domain.

Although US residents are likely used to visiting sites with .com, .org, and .edu, it’s common for other countries to use domains that reflect their names, like .uk in the United Kingdom or .cn in China.

Anguilla, a British territory, also uses a country domain, and until the last few years, its .ai domain wasn’t remarkable.

Industry insiders have been keeping an eye on how AI’s growth could impact Anguilla since 2023, as Business Insider previously reported. Now, as AI transforms the internet and our world, .ai is a moneymaker Anguilla never saw coming, as eager AI founders pay the country to register .ai domains.

For instance, tech founder Dharmesh Shah paid Anguilla $700,000 to secure the domain you.ai, as the BBC first reported.


“I usually don’t invest in .ai domains — I lean towards .com,” Shah, the cofounder and CTO of HubSpot, told Business Insider. “But, you.ai was a great name and I had an idea for a project for it.”

The BBC reported that Shah said he hoped you.ai could serve as the domain for a product that would “allow people to create digital versions of themselves that could do specific tasks on their behalf.”

Shah told Business Insider he doesn’t usually set out to buy domains to sell them, though in 2024, he posted on X that he bought chat.com for over $15 million and later sold it to OpenAI, the maker of ChatGPT, for an undisclosed price. He owns a few other .ai domains as well, including simple.ai, easy.ai, and chess.ai.

According to Domain Name Stat, the number of .ai domains jumped from just 40,000 at the beginning of 2020 to 859,000 as of August 2025. Additionally, in a 2024 report, the International Monetary Fund said that Anguilla made $32 million through .ai domain registrations in 2023 alone.

Anguilla has been working with the US-based firm Identity Digital to manage the influx of registrations since 2024.

Although he’s an early investor in .ai domains, Shah said he doesn’t think they’re going to be lucrative long-term.

“Long-term, I think the interest is likely going to taper off,” he said. “I think .com domains will end up holding their value better.”

Business Insider reached out to Anguilla and Identity Digital for comment.
