Books, Courses & Certifications

Taming Chaos with Antifragile GenAI Architecture – O’Reilly

What if uncertainty wasn’t something to simply endure but something to actively exploit? The convergence of Nassim Taleb’s antifragility principles with generative AI capabilities is creating a new paradigm for organizational design, one where volatility becomes fuel for competitive advantage rather than a threat to be managed.

The Antifragility Imperative

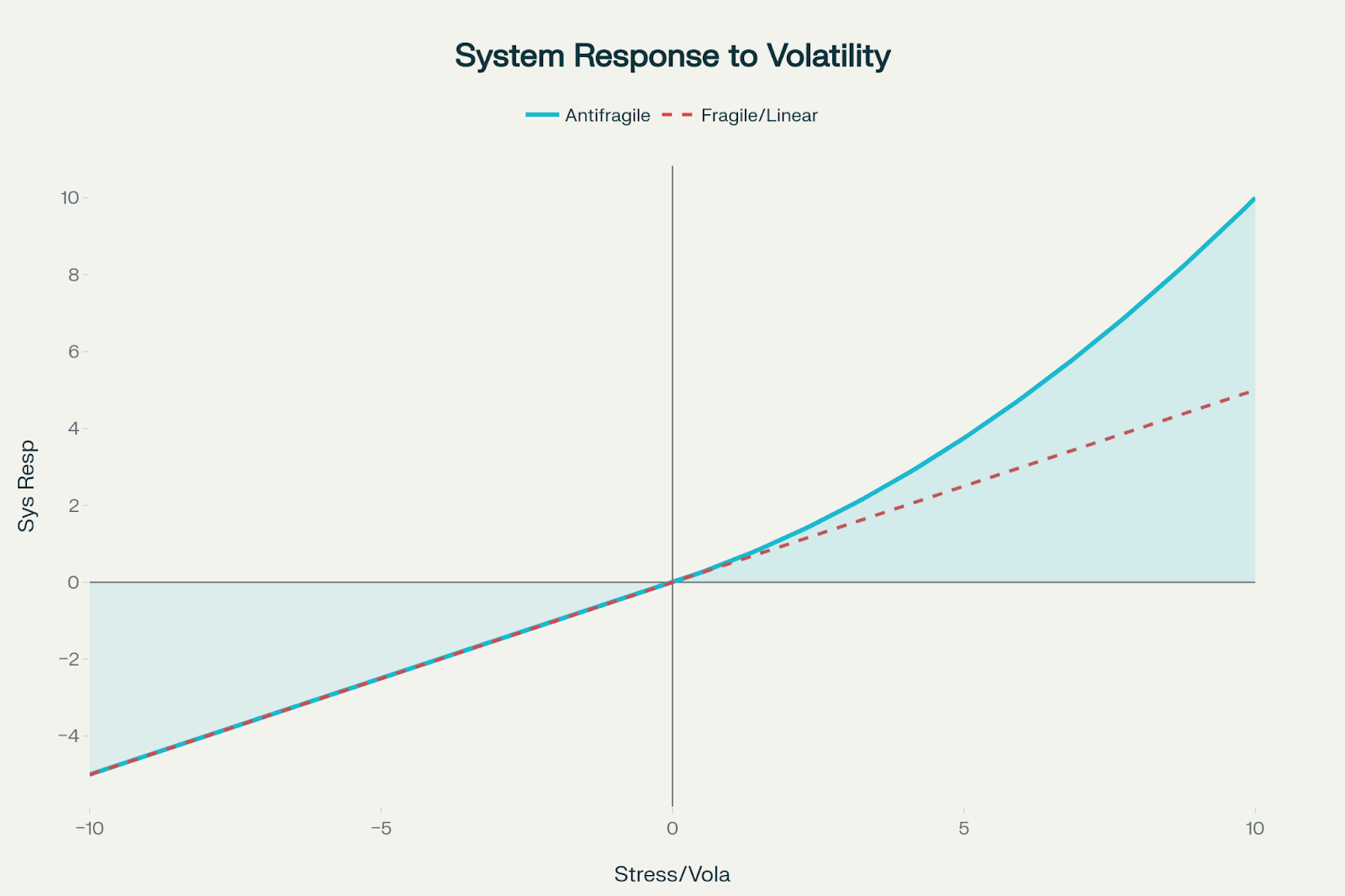

Antifragility transcends resilience. While resilient systems bounce back from stress and robust systems resist change, antifragile systems actively improve when exposed to volatility, randomness, and disorder. This isn’t just theoretical—it’s a mathematical property where systems exhibit positive convexity, gaining more from favorable variations than they lose from unfavorable ones.

To visualize the concept of positive convexity in antifragile systems, consider a graph where the x-axis represents stress or volatility and the y-axis represents the system’s response. In such systems, the curve is upward bending (convex), demonstrating that the system gains more from positive shocks than it loses from negative ones—by an accelerating margin.

The convex (upward-curving) line shows that small positive shocks yield increasingly larger gains, while equivalent negative shocks cause comparatively smaller losses.

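For comparison, a straight line represents a linear system: its response is proportional, with gains and losses of equal magnitude on either side. A fragile system is the mirror image of an antifragile one, with a concave curve that loses more from negative shocks than it gains from positive ones.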
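The convexity claim can be checked numerically. The sketch below assumes an illustrative quadratic response function (chosen for the example, not taken from any real system) and compares the gain from a positive shock with the loss from an equal negative shock around the same baseline.

```python
def convex_response(stress: float) -> float:
    """Illustrative convex response: grows quadratically with stress (an assumption)."""
    return stress + 0.5 * stress ** 2


def linear_response(stress: float) -> float:
    """Proportional response used for comparison."""
    return stress


def gain_and_loss(response, baseline: float, shock: float) -> tuple[float, float]:
    """Return (gain from +shock, loss from -shock) around a baseline stress level."""
    gain = response(baseline + shock) - response(baseline)
    loss = response(baseline) - response(baseline - shock)
    return gain, loss


convex_gain, convex_loss = gain_and_loss(convex_response, baseline=1.0, shock=0.5)
linear_gain, linear_loss = gain_and_loss(linear_response, baseline=1.0, shock=0.5)
```

With these assumed functions, the convex system gains 1.125 and loses 0.875 for the same shock size, while the linear system gains and loses 0.5 in both directions.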

The concept emerged from Taleb’s observation that certain systems don’t just survive Black Swan events—they thrive because of them. Consider how Amazon’s supply chain AI during the 2020 pandemic demonstrated true antifragility. When lockdowns disrupted normal shipping patterns and consumer behavior shifted dramatically, Amazon’s demand forecasting systems didn’t just adapt; they used the chaos as training data. Every stockout, every demand spike for unexpected products like webcams and exercise equipment, every supply chain disruption became input for improving future predictions. The AI learned to identify early signals of changing consumer behavior and supply constraints, making the system more robust for future disruptions.

For technology organizations, this presents a fundamental question: How do we design systems that don’t just survive unexpected events but benefit from them? The answer lies in implementing specific generative AI architectures that can learn continuously from disorder.

Generative AI: Building Antifragile Capabilities

Certain generative AI implementations can exhibit antifragile characteristics when designed with continuous learning architectures. Unlike static models deployed once and forgotten, these systems incorporate feedback loops that allow real-time adaptation without full model retraining—a critical distinction given the resource-intensive nature of training large models.

Netflix’s recommendation system demonstrates this principle. Rather than retraining its entire foundation model, the company continuously updates personalization layers based on user interactions. When users reject recommendations or abandon content midstream, this negative feedback becomes valuable training data that refines future suggestions. The system doesn’t just learn what users like. It becomes expert at recognizing what they’ll hate, leading to higher overall satisfaction through accumulated negative knowledge.

The key insight is that these AI systems don’t just adapt to new conditions; they actively extract information from disorder. When market conditions shift, customer behavior changes, or systems encounter edge cases, properly designed generative AI can identify patterns in the chaos that human analysts might miss. They transform noise into signal, volatility into opportunity.

Error as Information: Learning from Failure

Traditional systems treat errors as failures to be minimized. Antifragile systems treat errors as information sources to be exploited. This shift becomes powerful when combined with generative AI’s ability to learn from mistakes and generate improved responses.

IBM Watson for Oncology’s failure has been attributed to synthetic data problems, but it highlights a critical distinction: Synthetic data isn’t inherently problematic—it’s essential in healthcare where patient privacy restrictions limit access to real data. The issue was that Watson was trained exclusively on synthetic, hypothetical cases created by Memorial Sloan Kettering physicians rather than being validated against diverse real-world outcomes. This created a dangerous feedback loop where the AI learned physician preferences rather than evidence-based medicine.

When deployed, Watson recommended potentially fatal treatments—such as prescribing bevacizumab to a 65-year-old lung cancer patient with severe bleeding, despite the drug’s known risk of causing “severe or fatal hemorrhage.” A truly antifragile system would have incorporated mechanisms to detect when its training data diverged from reality—for instance, by tracking recommendation acceptance rates and patient outcomes to identify systematic biases.
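One way to picture the acceptance-rate tracking mentioned above is a rolling monitor that flags a sustained drop. This is a minimal sketch under stated assumptions: the class name `AcceptanceMonitor`, the window size, and the 0.6 threshold are all hypothetical, and a real clinical system would also need outcome data and far more careful statistics.

```python
from collections import deque


class AcceptanceMonitor:
    """Tracks a rolling acceptance rate and flags sustained drops (hypothetical helper)."""

    def __init__(self, window: int = 50, min_rate: float = 0.6) -> None:
        self.outcomes = deque(maxlen=window)  # True = recommendation was accepted
        self.min_rate = min_rate

    def record(self, accepted: bool) -> None:
        self.outcomes.append(accepted)

    def acceptance_rate(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence yet, so do not raise an alarm
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Flag only when the window is full and the rate is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.acceptance_rate() < self.min_rate


monitor = AcceptanceMonitor(window=10, min_rate=0.6)
for accepted in [True] * 4 + [False] * 6:
    monitor.record(accepted)
```

Here four of the last ten recommendations were accepted, so `monitor.needs_review()` returns `True` and the divergence can be escalated instead of silently reinforcing the model.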

This challenge extends beyond healthcare. Consider AI diagnostic systems deployed across different hospitals. A model trained on high-end equipment at a research hospital performs poorly when deployed to field hospitals with older, poorly calibrated CT scanners. An antifragile AI system would treat these equipment variations not as problems to solve but as valuable training data. Each “failed” diagnosis on older equipment becomes information that improves the system’s robustness across diverse deployment environments.

Netflix: Mastering Organizational Antifragility

Netflix’s approach to chaos engineering exemplifies organizational antifragility in practice. The company’s famous “Chaos Monkey” randomly terminates services in production to ensure the system can handle failures gracefully. But more relevant to generative AI is its content recommendation system’s sophisticated approach to handling failures and edge cases.

When Netflix’s AI began recommending mature content to family accounts, the team did not simply add filters. It created systematic “chaos scenarios,” deliberately feeding the system contradictory user behavior data to stress-test its decision-making capabilities. The team simulated situations where family members had vastly different viewing preferences on the same account or where content metadata was incomplete or incorrect.

The recovery protocols the team developed go beyond simple content filtering. Netflix created hierarchical safety nets: real-time content categorization, user context analysis, and human oversight triggers. Each “failure” in content recommendation becomes data that strengthens the entire system. The AI learns what content to recommend but also when to seek additional context, when to err on the side of caution, and how to gracefully handle ambiguous situations.

This demonstrates a key antifragile principle: The system doesn’t just prevent similar failures—it becomes more intelligent about handling edge cases it has never encountered before. Netflix’s recommendation accuracy improved precisely because the system learned to navigate the complexities of shared accounts, diverse family preferences, and content boundary cases.

Technical Architecture: The LOXM Case Study

JPMorgan’s LOXM (Learning Optimization eXecution Model) represents the most sophisticated example of antifragile AI in production. Developed by the global equities electronic trading team under Daniel Ciment, LOXM went live in 2017 after training on billions of historical transactions. While this predates the current era of transformer-based generative AI, LOXM was built using deep learning techniques that share fundamental principles with today’s generative models: the ability to learn complex patterns from data and adapt to new situations through continuous feedback.

Multi-agent architecture: LOXM uses a reinforcement learning system where specialized agents handle different aspects of trade execution.

- Market microstructure analysis agents learn optimal timing patterns.

- Liquidity assessment agents predict order book dynamics in real time.

- Impact modeling agents minimize market disruption during large trades.

- Risk management agents enforce position limits while maximizing execution quality.

Antifragile performance under stress: While traditional trading algorithms struggled with unprecedented conditions during the market volatility of March 2020, LOXM’s agents used the chaos as learning opportunities. Each failed trade execution, each unexpected market movement, each liquidity crisis became training data that improved future performance.

The measurable results were striking. LOXM improved execution quality by 50% during the most volatile trading days, exactly when traditional systems typically degrade. This isn’t just resilience; it is empirical evidence of positive convexity, where the system gains more from stressful conditions than it loses.

Technical innovation: LOXM prevents catastrophic forgetting through “experience replay” buffers that maintain diverse trading scenarios. When new market conditions arise, the system can reference similar historical patterns while adapting to novel situations. The feedback loop architecture uses streaming data pipelines to capture trade outcomes, model predictions, and market conditions in real time, updating model weights through online learning algorithms within milliseconds of trade completion.
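The experience replay idea can be sketched in a few lines. This is a generic illustration, not LOXM's implementation, which is not public: the `ReplayBuffer` class, its capacity, and the toy "regime" records are assumptions made for the example.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity buffer that keeps recent experiences and samples them uniformly."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.buffer = deque(maxlen=capacity)  # oldest entries are evicted first
        self.rng = random.Random(seed)

    def add(self, experience: dict) -> None:
        self.buffer.append(experience)

    def sample(self, batch_size: int) -> list:
        """Draw a batch without replacement, mixing older and newer scenarios."""
        size = min(batch_size, len(self.buffer))
        return self.rng.sample(list(self.buffer), size)

    def __len__(self) -> int:
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3)
for day in range(5):
    buffer.add({"day": day, "regime": "volatile" if day % 2 else "calm"})
batch = buffer.sample(batch_size=2)
```

Training on batches drawn from the buffer, rather than only on the latest observation, is what lets an online learner revisit earlier conditions. A simple first-in, first-out buffer like this one still forgets scenarios older than its capacity, so keeping rare regimes around would need a larger buffer or stratified retention.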

The Information Hiding Principle

David Parnas’s information hiding principle directly enables antifragility by ensuring that system components can adapt independently without cascading failures. In his 1972 paper, Parnas emphasized hiding “design decisions likely to change”—exactly what antifragile systems need.

When LOXM encounters market disruption, its modular design allows individual components to adapt their internal algorithms without affecting other modules. The “secret” of each module—its specific implementation—can evolve based on local feedback while maintaining stable interfaces with other components.

This architectural pattern prevents what Taleb calls “tight coupling”—where stress in one component propagates throughout the system. Instead, stress becomes localized learning opportunities that strengthen individual modules without destabilizing the whole system.
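A small sketch shows how a stable interface lets one module change its internals without touching its callers. All names here (`LiquidityEstimator`, `AdaptiveEstimator`, `plan_slices`) and the formulas are hypothetical and chosen only to illustrate the principle.

```python
from typing import Protocol


class LiquidityEstimator(Protocol):
    """Stable interface: callers depend only on this signature."""

    def estimate(self, order_size: float) -> float: ...


class StaticEstimator:
    """First implementation: a fixed liquidity score."""

    def estimate(self, order_size: float) -> float:
        return 1.0


class AdaptiveEstimator:
    """Replacement whose internal state evolves from local feedback."""

    def __init__(self) -> None:
        self._penalty = 0.0  # hidden detail, never exposed to callers

    def observe_slippage(self, slippage: float) -> None:
        # Exponential moving average of observed slippage.
        self._penalty = 0.9 * self._penalty + 0.1 * slippage

    def estimate(self, order_size: float) -> float:
        return 1.0 / (1.0 + self._penalty * order_size)


def plan_slices(estimator: LiquidityEstimator, order_size: float) -> int:
    """Caller code: unchanged regardless of which estimator is plugged in."""
    liquidity = estimator.estimate(order_size)
    return max(1, round(order_size / (100.0 * liquidity)))
```

Swapping `StaticEstimator` for `AdaptiveEstimator` changes how many slices `plan_slices` returns after slippage is observed, but `plan_slices` itself never needs to be edited. The "secret" stays inside the module.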

Via Negativa in Practice

Nassim Taleb’s concept of “via negativa”—defining systems by what they’re not rather than what they are—translates directly to building antifragile AI systems.

When Airbnb’s search algorithm was producing poor results, instead of adding more ranking factors (the typical approach), the company applied via negativa: It systematically removed listings that consistently received poor ratings, hosts who didn’t respond promptly, and properties with misleading photos. By eliminating negative elements, the remaining search results naturally improved.

Netflix’s recommendation system similarly applies via negativa by maintaining “negative preference profiles”—systematically identifying and avoiding content patterns that lead to user dissatisfaction. Rather than just learning what users like, the system becomes expert at recognizing what they’ll hate, leading to higher overall satisfaction through subtraction rather than addition.

In technical terms, via negativa means starting with maximum system flexibility and systematically removing constraints that don’t add value—allowing the system to adapt to unforeseen circumstances rather than being locked into rigid predetermined behaviors.

Implementing Continuous Feedback Loops

The feedback loop architecture requires three components: error detection, learning integration, and system adaptation. In LOXM’s implementation, market execution data flows back into the model within milliseconds of trade completion. The system uses streaming data pipelines to capture trade outcomes, model predictions, and market conditions in real time. Machine learning models continuously compare predicted execution quality to actual execution quality, updating model weights through online learning algorithms. This creates a continuous feedback loop where each trade makes the next trade execution more intelligent.

When a trade execution deviates from expected performance—whether due to market volatility, liquidity constraints, or timing issues—this immediately becomes training data. The system doesn’t wait for batch processing or scheduled retraining; it adapts in real time while maintaining stable performance for ongoing operations.
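The compare-then-update loop described above can be illustrated with the simplest possible online learner. This is a sketch under stated assumptions: a one-feature linear model trained by stochastic gradient descent on synthetic data, standing in for whatever models and features a production system would use.

```python
class OnlineLinearModel:
    """Single-feature linear predictor updated one observation at a time (SGD)."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.weight * x + self.bias

    def update(self, x: float, actual: float) -> float:
        """Compare the prediction with the observed outcome and adjust weights immediately."""
        error = self.predict(x) - actual
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error
        return error


model = OnlineLinearModel()
# Synthetic stream following y = 2x + 1, repeated to simulate continuous feedback.
stream = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(10)] * 200
first_error = abs(model.update(*stream[0]))
for x, y in stream[1:]:
    model.update(x, y)
final_error = abs(model.predict(0.5) - 2.0)
```

Each observation changes the weights as soon as it arrives, with no batch job in between. On this noiseless stream the prediction error shrinks from 1.0 on the first observation to well under 0.01 by the end.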

Organizational Learning Loop

Antifragile organizations must cultivate specific learning behaviors beyond just technical implementations. This requires moving beyond traditional risk management approaches toward Taleb’s “via negativa.”

The learning loop involves three phases: stress identification, system adaptation, and capability improvement. Teams regularly expose systems to controlled stress, observe how they respond, and then use generative AI to identify improvement opportunities. Each iteration strengthens the system’s ability to handle future challenges.

Netflix institutionalized this through monthly “chaos drills” where teams deliberately introduce failures—API timeouts, database connection losses, content metadata corruption—and observe how their AI systems respond. Each drill generates postmortems focused not on blame but on extracting learning from the failure scenarios.

Measurement and Validation

Antifragile systems require new metrics beyond traditional availability and performance measures. Key metrics include:

- Adaptation speed: Time from anomaly detection to corrective action

- Information extraction rate: Number of meaningful model updates per disruption event

- Asymmetric performance factor: Ratio of system gains from positive shocks to losses from negative ones

LOXM tracks these metrics alongside financial outcomes, demonstrating quantifiable improvement in antifragile capabilities over time. During high-volatility periods, the system’s asymmetric performance factor consistently exceeds 2.0—meaning it gains twice as much from favorable market movements as it loses from adverse ones.
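To make two of these metrics concrete, the sketch below computes them from hypothetical inputs. The function names and the sample outcomes are assumptions for illustration. The article defines the asymmetric performance factor only as a ratio of gains to losses, so summing per-event outcomes is one possible reading.

```python
def asymmetric_performance_factor(outcomes: list[float]) -> float:
    """Ratio of total gains to total losses (losses taken as absolute values)."""
    gains = sum(value for value in outcomes if value > 0)
    losses = sum(-value for value in outcomes if value < 0)
    if losses == 0:
        return float("inf") if gains > 0 else 0.0
    return gains / losses


def adaptation_speed(detected_at: float, corrected_at: float) -> float:
    """Seconds between anomaly detection and corrective action."""
    return corrected_at - detected_at


# Hypothetical per-event outcomes (positive = gain, negative = loss), arbitrary units.
shock_outcomes = [4.0, -1.5, 3.0, -2.0, 1.0]
factor = asymmetric_performance_factor(shock_outcomes)
```

For these sample values the gains total 8.0 and the losses total 3.5, so the factor is about 2.29, above the 2.0 level cited in the text.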

The Competitive Advantage

The goal isn’t just surviving disruption—it’s creating competitive advantage through chaos. When competitors struggle with market volatility, antifragile organizations extract value from the same conditions. They don’t just adapt to change; they actively seek out uncertainty as fuel for growth.

Netflix’s ability to recommend content accurately during the pandemic, when viewing patterns shifted dramatically, gave it a significant advantage over competitors whose recommendation systems struggled with the new normal. Similarly, LOXM’s superior performance during market stress periods has made it JPMorgan’s primary execution algorithm for institutional clients.

This creates sustainable competitive advantage because antifragile capabilities compound over time. Each disruption makes the system stronger, more adaptive, and better positioned for future challenges.

Beyond Resilience: The Antifragile Future

We’re witnessing the emergence of a new organizational paradigm. The convergence of antifragility principles with generative AI capabilities represents more than incremental improvement—it’s a fundamental shift in how organizations can thrive in uncertain environments.

The path forward requires commitment to experimentation, tolerance for controlled failure, and systematic investment in adaptive capabilities. Organizations must evolve from asking “How do we prevent disruption?” to “How do we benefit from disruption?”

The question isn’t whether your organization will face uncertainty and disruption—it’s whether you’ll be positioned to extract competitive advantage from chaos when it arrives. The integration of antifragility principles with generative AI provides the roadmap for that transformation, demonstrated by organizations like Netflix and JPMorgan that have already turned volatility into their greatest strategic asset.


Automate advanced agentic RAG pipeline with Amazon SageMaker AI

Retrieval Augmented Generation (RAG) is a fundamental approach for building advanced generative AI applications that connect large language models (LLMs) to enterprise knowledge. However, crafting a reliable RAG pipeline is rarely a one-shot process. Teams often need to test dozens of configurations (varying chunking strategies, embedding models, retrieval techniques, and prompt designs) before arriving at a solution that works for their use case. Furthermore, managing a high-performing RAG pipeline involves complex deployment, and teams often manage their pipelines manually, which leads to inconsistent results, time-consuming troubleshooting, and difficulty in reproducing successful configurations. Teams struggle with scattered documentation of parameter choices, limited visibility into component performance, and the inability to systematically compare different approaches. Additionally, the lack of automation creates bottlenecks in scaling RAG solutions, increases operational overhead, and makes it challenging to maintain quality across multiple deployments and environments from development to production.

In this post, we walk through how to streamline your RAG development lifecycle from experimentation to automation and operationalize your RAG solution for production deployments with Amazon SageMaker AI, so your team can experiment efficiently, collaborate effectively, and drive continuous improvement. By combining experimentation and automation with SageMaker AI, you can verify that the entire pipeline is versioned, tested, and promoted as a cohesive unit. This approach provides comprehensive guidance for traceability, reproducibility, and risk mitigation as the RAG system advances from development to production, supporting continuous improvement and reliable operation in real-world scenarios.

Solution overview

By streamlining both experimentation and operational workflows, teams can use SageMaker AI to rapidly prototype, deploy, and monitor RAG applications at scale. Its integration with SageMaker managed MLflow provides a unified platform for tracking experiments, logging configurations, and comparing results, supporting reproducibility and robust governance throughout the pipeline lifecycle. Automation also minimizes manual intervention, reduces errors, and streamlines the process of promoting the finalized RAG pipeline from the experimentation phase directly into production. With this approach, every stage from data ingestion to output generation operates efficiently and securely, while making it straightforward to transition validated solutions from development to production deployment.

For automation, Amazon SageMaker Pipelines orchestrates end-to-end RAG workflows, from data preparation and vector embedding generation to model inference and evaluation, all with repeatable and version-controlled code. Integrating continuous integration and delivery (CI/CD) practices further enhances reproducibility and governance, enabling automated promotion of validated RAG pipelines from development to staging or production environments. Promoting an entire RAG pipeline (not just an individual subsystem of the RAG solution like a chunking layer or orchestration layer) to higher environments is essential because data, configurations, and infrastructure can vary significantly across staging and production. In production, you often work with live, sensitive, or much larger datasets, and the way data is chunked, embedded, retrieved, and generated can impact system performance and output quality in ways that are not always apparent in lower environments. Each stage of the pipeline (chunking, embedding, retrieval, and generation) must be thoroughly evaluated with production-like data for accuracy, relevance, and robustness. Metrics at every stage (such as chunk quality, retrieval relevance, answer correctness, and LLM evaluation scores) must be monitored and validated before the pipeline is trusted to serve real users.
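The idea of validating stage metrics before promotion can be sketched as a simple gate. This is an illustration only: the function `failed_gates`, the metric names, and the threshold values are hypothetical, and in practice such a check would run as a step in the CI/CD workflow against metrics read from the tracking server.

```python
def failed_gates(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the metric names that are missing or below their minimum threshold."""
    return [
        name
        for name, minimum in thresholds.items()
        if metrics.get(name, float("-inf")) < minimum
    ]


# Hypothetical thresholds; real values depend on the use case and evaluation set.
thresholds = {"retrieval_relevance": 0.80, "answer_correctness": 0.75, "faithfulness": 0.85}
staging_metrics = {"retrieval_relevance": 0.86, "answer_correctness": 0.71}

blocked_by = failed_gates(staging_metrics, thresholds)
can_promote = not blocked_by
```

In this example promotion is blocked by `answer_correctness` (below its threshold) and `faithfulness` (never reported). Treating a missing metric as a failure keeps an incomplete evaluation from passing the gate by accident.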

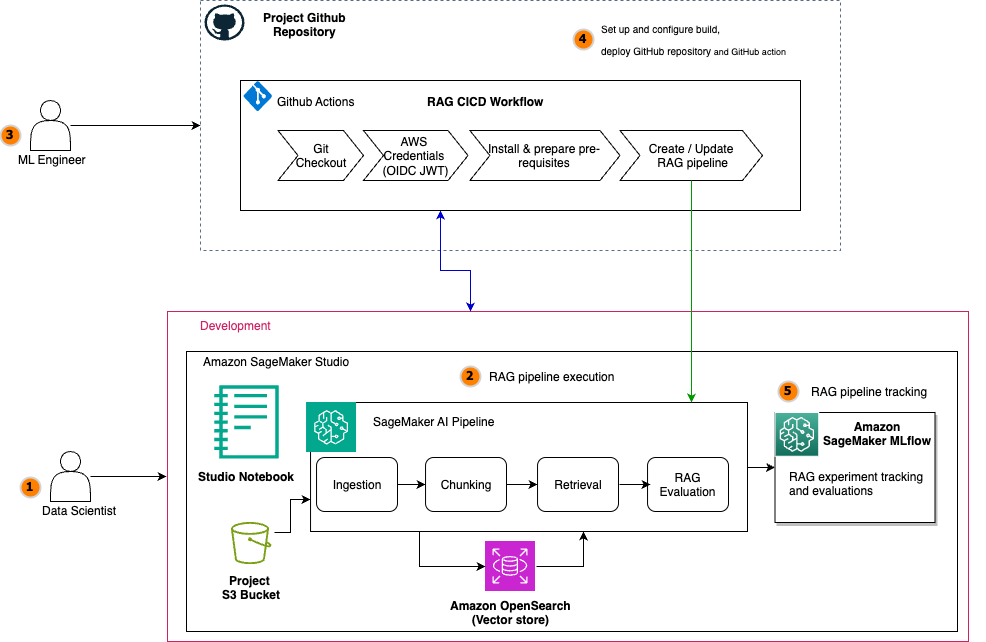

The following diagram illustrates the architecture of a scalable RAG pipeline built on SageMaker AI, with MLflow experiment tracking integrated at every stage and the RAG pipeline automated using SageMaker Pipelines. SageMaker managed MLflow provides a unified platform for centralized RAG experiment tracking across all pipeline stages. Every MLflow execution run, whether for RAG chunking, ingestion, retrieval, or evaluation, sends execution logs, parameters, metrics, and artifacts to SageMaker managed MLflow. The architecture uses SageMaker Pipelines to orchestrate the entire RAG workflow through versioned, repeatable automation. These RAG pipelines manage dependencies between critical stages, from data ingestion and chunking to embedding generation, retrieval, and final text generation, supporting consistent execution across environments. Integrated with CI/CD practices, SageMaker Pipelines enables seamless promotion of validated RAG configurations from development to staging and production environments while maintaining infrastructure as code (IaC) traceability.

For the operational workflow, the solution follows a structured lifecycle: During experimentation, data scientists iterate on pipeline components within Amazon SageMaker Studio notebooks while SageMaker managed MLflow captures parameters, metrics, and artifacts at every stage. Validated workflows are then codified into SageMaker Pipelines and versioned in Git. The automated promotion phase uses CI/CD to trigger pipeline execution in target environments, rigorously validating stage-specific metrics (chunk quality, retrieval relevance, answer correctness) against production data before deployment. The other core components include:

- Amazon SageMaker JumpStart for accessing the latest LLM models and hosting them on SageMaker endpoints for model inference, with the embedding model huggingface-textembedding-all-MiniLM-L6-v2 and the text generation model deepseek-llm-r1-distill-qwen-7b.

- Amazon OpenSearch Service as a vector database to store document embeddings, with the OpenSearch index configured for k-nearest neighbors (k-NN) search.

- The Amazon Bedrock model anthropic.claude-3-haiku-20240307-v1:0 as an LLM-as-a-judge component for all the MLflow LLM evaluation metrics.

- A SageMaker Studio notebook for a development environment to experiment and automate the RAG pipelines with SageMaker managed MLflow and SageMaker Pipelines.

You can implement this agentic RAG solution code from the GitHub repository. In the following sections, we use snippets from this code in the repository to illustrate RAG pipeline experiment evolution and automation.

Prerequisites

You must have the following prerequisites:

- An AWS account with billing enabled.

- A SageMaker AI domain. For more information, see Use quick setup for Amazon SageMaker AI.

- Access to a running SageMaker managed MLflow tracking server in SageMaker Studio. For more information, see the instructions for setting up a new MLflow tracking server.

- Access to SageMaker JumpStart to host LLM embedding and text generation models.

- Access to the Amazon Bedrock foundation models (FMs) for RAG evaluation tasks. For more details, see Subscribe to a model.

SageMaker MLflow RAG experiment

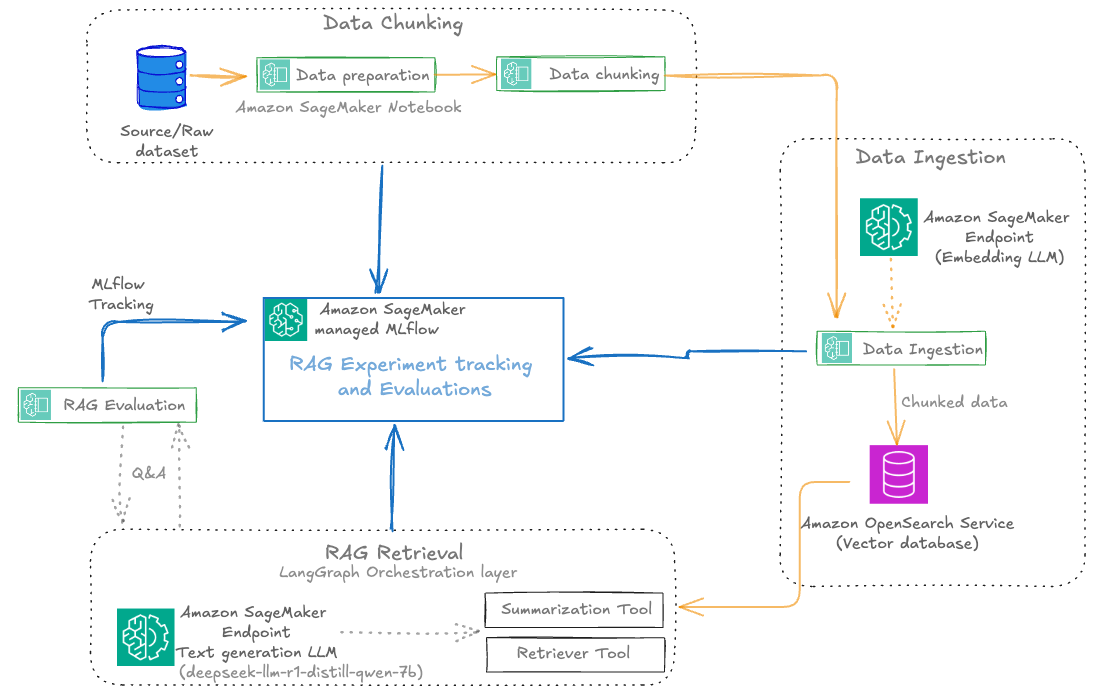

SageMaker managed MLflow provides a powerful framework for organizing RAG experiments, so teams can manage complex, multi-stage processes with clarity and precision. The following diagram illustrates the RAG experiment stages with SageMaker managed MLflow experiment tracking at every stage. This centralized tracking offers the following benefits:

- Reproducibility: Every experiment is fully documented, so teams can replay and compare runs at any time

- Collaboration: Shared experiment tracking fosters knowledge sharing and accelerates troubleshooting

- Actionable insights: Visual dashboards and comparative analytics help teams identify the impact of pipeline changes and drive continuous improvement

The following diagram illustrates the solution workflow.

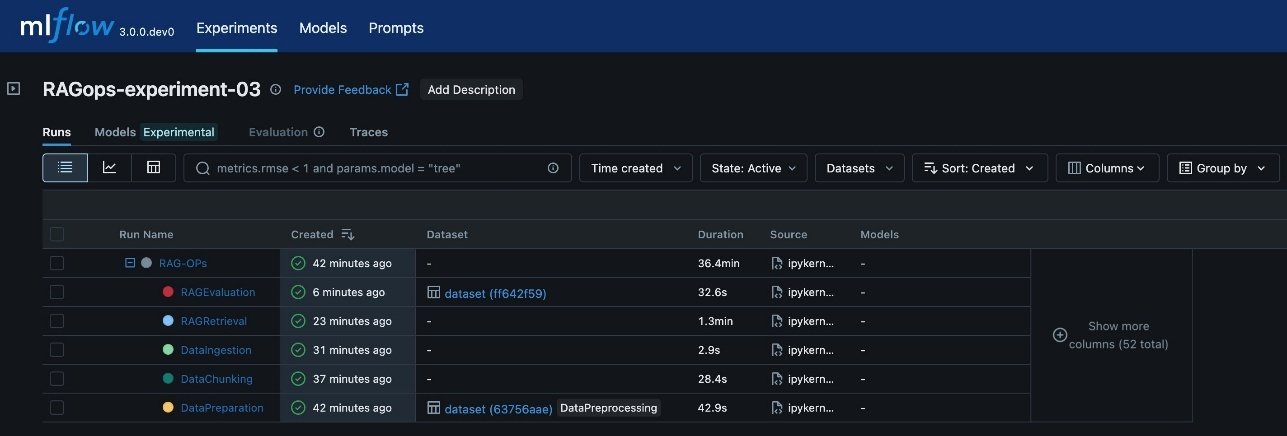

Each RAG experiment in MLflow is structured as a top-level run under a specific experiment name. Within this top-level run, nested runs are created for each major pipeline stage, such as data preparation, data chunking, data ingestion, RAG retrieval, and RAG evaluation. This hierarchical approach allows for granular tracking of parameters, metrics, and artifacts at every step, while maintaining a clear lineage from raw data to final evaluation results.

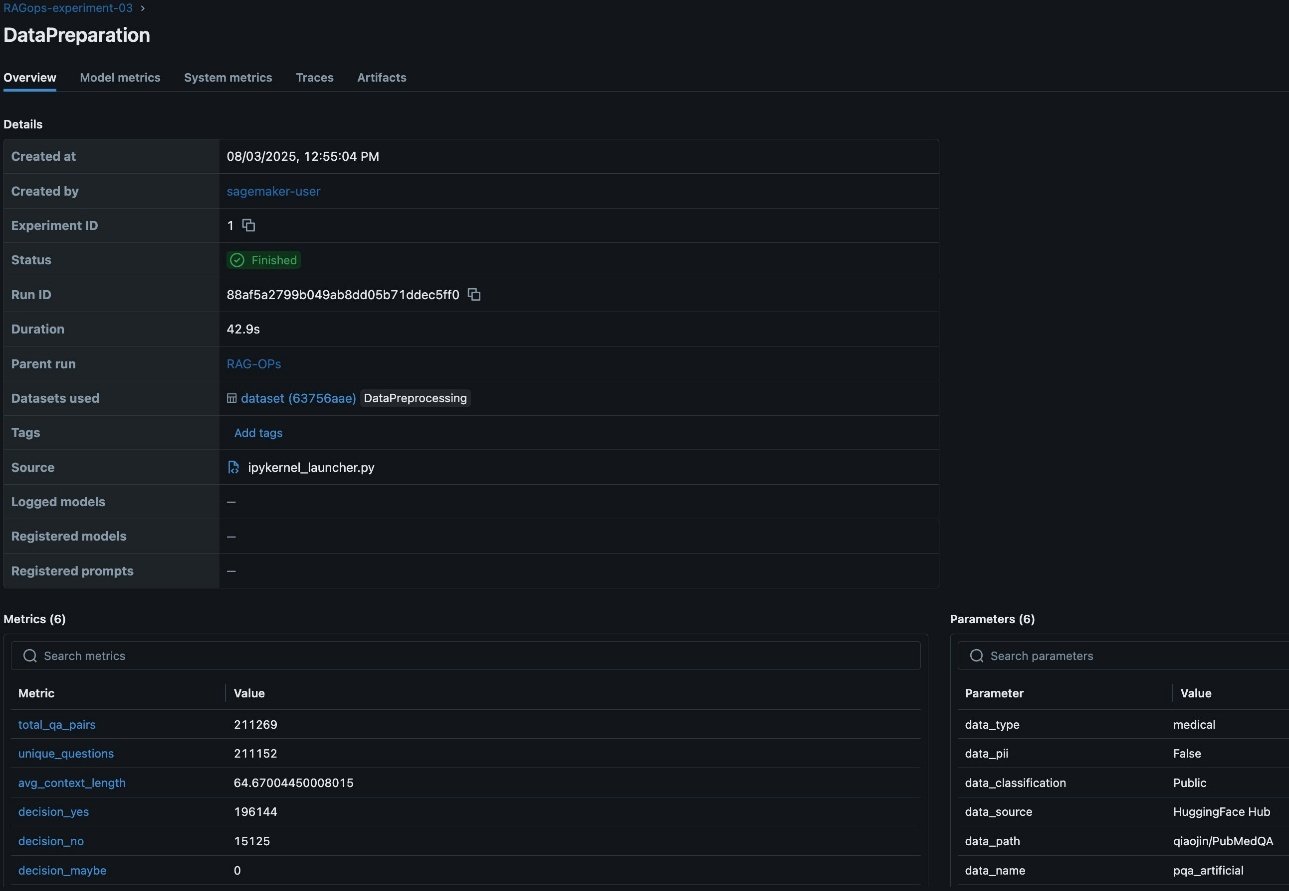

The following screenshot shows an example of the experiment details in MLflow.

The various RAG pipeline steps defined are:

- Data preparation: Logs dataset version, preprocessing steps, and initial statistics

- Data chunking: Records chunking strategy, chunk size, overlap, and resulting chunk counts

- Data ingestion: Tracks embedding model, vector database details, and document ingestion metrics

- RAG retrieval: Captures retrieval model, context size, and retrieval performance metrics

- RAG evaluation: Logs evaluation metrics (such as answer similarity, correctness, and relevance) and sample results

This visualization provides a clear, end-to-end view of the RAG pipeline’s execution, so you can trace the impact of changes at any stage and achieve full reproducibility. The architecture supports scaling to multiple experiments, each representing a distinct configuration or hypothesis (for example, different chunking strategies, embedding models, or retrieval parameters). MLflow’s experiment UI visualizes these experiments side by side, enabling comparison and analysis across runs. This structure is especially valuable in enterprise settings, where dozens or even hundreds of experiments might be conducted to optimize RAG performance.

We use MLflow experimentation throughout the RAG pipeline to log metrics and parameters, and the different experiment runs are initialized as shown in the following code snippet:

RAG pipeline experimentation

The key components of the RAG workflow are ingestion, chunking, retrieval, and evaluation, which we explain in this section.

Data ingestion and preparation

In the RAG workflow, rigorous data preparation is foundational to downstream performance and reliability. Tracking detailed metrics on data quality, such as the total number of question-answer pairs, the count of unique questions, average context length, and initial evaluation predictions, provides essential visibility into the dataset’s structure and suitability for RAG tasks. These metrics help validate the dataset is comprehensive, diverse, and contextually rich, which directly impacts the relevance and accuracy of the RAG system’s responses. Additionally, logging critical RAG parameters like the data source, detected personally identifiable information (PII) types, and data lineage information is vital for maintaining compliance, reproducibility, and trust in enterprise environments. Capturing this metadata in SageMaker managed MLflow supports robust experiment tracking, auditability, efficient comparison, and root cause analysis across multiple data preparation runs, as visualized in the MLflow dashboard. This disciplined approach to data preparation lays the groundwork for effective experimentation, governance, and continuous improvement throughout the RAG pipeline. The following screenshot shows an example of the experiment run details in MLflow.

Data chunking

After data preparation, the next step is to split documents into manageable chunks for efficient embedding and retrieval. This process is pivotal, because the quality and granularity of chunks directly affect the relevance and completeness of answers returned by the RAG system. The RAG workflow in this post supports experimentation and RAG pipeline automation with both fixed-size and recursive chunking strategies for comparison and validation. However, this RAG solution can be expanded to many other chunking techniques.

- FixedSizeChunker divides text into uniform chunks with configurable overlap

- RecursiveChunker splits text along logical boundaries such as paragraphs or sentences
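The two strategies can be sketched in plain Python as follows. The class names match those used in this post, but the bodies are simplified assumptions written for illustration; the repository's implementations may differ (for example, in how oversized sentences or trailing fragments are handled).

```python
import re


class FixedSizeChunker:
    """Splits text into fixed-length character windows with overlap."""

    def __init__(self, chunk_size: int = 500, chunk_overlap: int = 50) -> None:
        if not 0 <= chunk_overlap < chunk_size:
            raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
        self.chunk_size = chunk_size
        self.step = chunk_size - chunk_overlap

    def chunk(self, text: str) -> list[str]:
        return [text[i:i + self.chunk_size] for i in range(0, len(text), self.step)]


class RecursiveChunker:
    """Splits on paragraphs first, then on sentences for paragraphs that are too long."""

    def __init__(self, chunk_size: int = 500) -> None:
        self.chunk_size = chunk_size

    def chunk(self, text: str) -> list[str]:
        chunks = []
        for paragraph in filter(None, (p.strip() for p in text.split("\n\n"))):
            if len(paragraph) <= self.chunk_size:
                chunks.append(paragraph)
            else:
                # Fall back to sentence boundaries; a sentence longer than
                # chunk_size is kept whole in this simplified version.
                chunks.extend(s for s in re.split(r"(?<=[.!?])\s+", paragraph) if s)
        return chunks
```

For example, `FixedSizeChunker(chunk_size=4, chunk_overlap=2).chunk("abcdefgh")` yields overlapping windows that start every two characters, while `RecursiveChunker` keeps short paragraphs intact and only breaks long ones at sentence ends.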

Tracking detailed chunking metrics such as total_source_contexts_entries, total_contexts_chunked, and total_unique_chunks_final is crucial for understanding how much of the source data is represented, how effectively it is segmented, and whether the chunking approach is yielding the desired coverage and uniqueness. These metrics help diagnose issues like excessive duplication or under-segmentation, which can impact retrieval accuracy and model performance.
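As a rough illustration of how these three counts relate, the sketch below computes them for a simple non-overlapping split. The metric names come from this post, but their exact definitions here are assumptions: entries counts all source contexts, chunked counts contexts that produced at least one chunk, and unique counts distinct chunk strings.

```python
def chunking_metrics(source_contexts: list[str], chunk_size: int) -> dict[str, int]:
    """Compute coverage and uniqueness counts for a simple fixed-size split."""
    all_chunks = []
    contexts_chunked = 0
    for context in source_contexts:
        pieces = [context[i:i + chunk_size] for i in range(0, len(context), chunk_size)]
        if pieces:
            contexts_chunked += 1
            all_chunks.extend(pieces)
    return {
        "total_source_contexts_entries": len(source_contexts),
        "total_contexts_chunked": contexts_chunked,
        "total_unique_chunks_final": len(set(all_chunks)),
    }


metrics = chunking_metrics(["aaaa", "aaaa", "", "bbbbcc"], chunk_size=4)
```

A gap between entries and chunked contexts points to empty or skipped inputs, and a unique count well below the raw chunk count signals duplication in the source data.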

Additionally, logging parameters such as chunking_strategy_type (for example, FixedSizeChunker), chunking_strategy_chunk_size (for example, 500 characters), and chunking_strategy_chunk_overlap provides transparency and reproducibility for each experiment. Capturing these details in SageMaker managed MLflow helps teams systematically compare the impact of different chunking configurations, optimize for efficiency and contextual relevance, and maintain a clear audit trail of how chunking decisions evolve over time. The MLflow dashboard makes it straightforward to visualize and analyze these parameters and metrics, supporting data-driven refinement of the chunking stage within the RAG pipeline. The following screenshot shows an example of the experiment run details in MLflow.

After the documents are chunked, the next step is to convert these chunks into vector embeddings using a SageMaker embedding endpoint, after which the embeddings are ingested into a vector database such as OpenSearch Service for fast semantic search. This ingestion phase is crucial because the quality, completeness, and traceability of what enters the vector store directly determine the effectiveness and reliability of downstream retrieval and generation stages.

Tracking ingestion metrics such as the number of documents and chunks ingested provides visibility into pipeline throughput and helps identify bottlenecks or data loss early in the process. Logging detailed parameters, including the embedding model ID, endpoint used, and vector database index, is essential for reproducibility and auditability. This metadata helps teams trace exactly which model and infrastructure were used for each ingestion run, supporting root cause analysis and compliance, especially when working with evolving datasets or sensitive information.

Retrieval and generation

For a given query, we generate an embedding and retrieve the top-k relevant chunks from OpenSearch Service. For answer generation, we use a SageMaker LLM endpoint. The retrieved context and the query are combined into a prompt, and the LLM generates an answer. Finally, we orchestrate retrieval and generation using LangGraph, enabling stateful workflows and advanced tracing:
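Independently of the LangGraph orchestration, the retrieve-then-prompt step described above can be sketched in plain Python. This is an illustration under stated assumptions: toy two-dimensional vectors stand in for embedding endpoint output, an in-memory list stands in for the OpenSearch k-NN index, and the helper names and prompt wording are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_top_k(
    query_vec: list[float],
    indexed_chunks: list[tuple[str, list[float]]],
    k: int = 2,
) -> list[str]:
    """Return the k chunk texts whose embeddings are closest to the query."""
    ranked = sorted(
        indexed_chunks,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Combine retrieved context and the question into one prompt string."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {question}"


# Toy 2-D embeddings standing in for real embedding-endpoint output.
index = [
    ("Chunk about pricing", [1.0, 0.0]),
    ("Chunk about security", [0.0, 1.0]),
    ("Chunk about billing", [0.9, 0.1]),
]
top = retrieve_top_k([1.0, 0.05], index, k=2)
prompt = build_prompt("How is usage billed?", top)
```

The resulting `prompt` string is what would be sent to the text generation endpoint. The values of `k`, the similarity measure, and the prompt template are exactly the kinds of parameters worth logging for each run.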

With the generative AI agent defined using the LangGraph framework, the agentic layers are evaluated for each iteration of RAG development, verifying the efficacy of the RAG solution for agentic applications. Each retrieval and generation run is logged to SageMaker managed MLflow, capturing the prompt, generated response, and key metrics and parameters such as retrieval performance, top-k values, and the specific model endpoints used. Tracking these details in MLflow is essential for evaluating the effectiveness of the retrieval stage, making sure the returned documents are relevant and that the generated answers are accurate and complete. It is equally important to track the performance of the vector database during retrieval, including metrics like query latency, throughput, and scalability. Monitoring these system-level metrics alongside retrieval relevance and accuracy makes sure the RAG pipeline delivers correct and relevant answers and meets production requirements for responsiveness and scalability. The following screenshot shows an example of the LangGraph RAG retrieval tracing in MLflow.

RAG Evaluation

Evaluation is conducted on a curated test set, and results are logged to MLflow for quick comparison and analysis. This helps teams identify the best-performing configurations and iterate toward production-grade solutions. With MLflow, you can evaluate the RAG solution with heuristic metrics, content similarity metrics, and LLM-as-a-judge metrics. In this post, we evaluate the RAG pipeline using advanced LLM-as-a-judge MLflow metrics (answer similarity, correctness, relevance, faithfulness):

The following screenshot shows the run details of a RAG evaluation stage experiment in MLflow.

You can use MLflow to log all metrics and parameters, enabling quick comparison of different experiment runs. See the following code for reference:
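A minimal sketch of this logging step might look like the following. The configuration keys and metric names are illustrative assumptions, and the tracking server is assumed to be configured elsewhere; only `mlflow.start_run`, `mlflow.log_params`, and `mlflow.log_metrics` are MLflow calls.

```python
from typing import Dict, Tuple


def build_run_record(
    config: Dict[str, object], scores: Dict[str, float]
) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Normalize a RAG configuration and its scores for logging.

    Parameters are stored as strings and metrics as floats so that runs
    with different configurations stay comparable side by side.
    """
    params = {key: str(value) for key, value in config.items()}
    metrics = {name: float(value) for name, value in scores.items()}
    return params, metrics


def log_rag_run(run_name: str, config: Dict[str, object], scores: Dict[str, float]) -> None:
    """Log one experiment run; assumes the MLflow tracking URI is already set."""
    import mlflow  # imported lazily so build_run_record works without MLflow installed

    params, metrics = build_run_record(config, scores)
    with mlflow.start_run(run_name=run_name):
        mlflow.log_params(params)
        mlflow.log_metrics(metrics)
```

For example, `log_rag_run("recursive-chunker", {"chunker": "RecursiveChunker", "top_k": 5}, {"answer_similarity": 4})` would record one run whose parameters and metrics can then be compared against other runs.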

By using MLflow’s evaluation capabilities (such as mlflow.evaluate()), teams can systematically assess retrieval quality, identify potential gaps or misalignments in chunking or embedding strategies, and compare the performance of different retrieval and generation configurations. MLflow’s flexibility allows for seamless integration with external evaluation libraries such as RAGAS for comprehensive RAG pipeline assessment. RAGAS is an open source library that provides tools specifically for evaluating LLM applications and generative AI agents. RAGAS includes the method ragas.evaluate() to run evaluations for LLM agents with a choice of LLMs (evaluators) for scoring, and an extensive list of default metrics. To incorporate RAGAS metrics into your MLflow experiments, refer to the following GitHub repository.

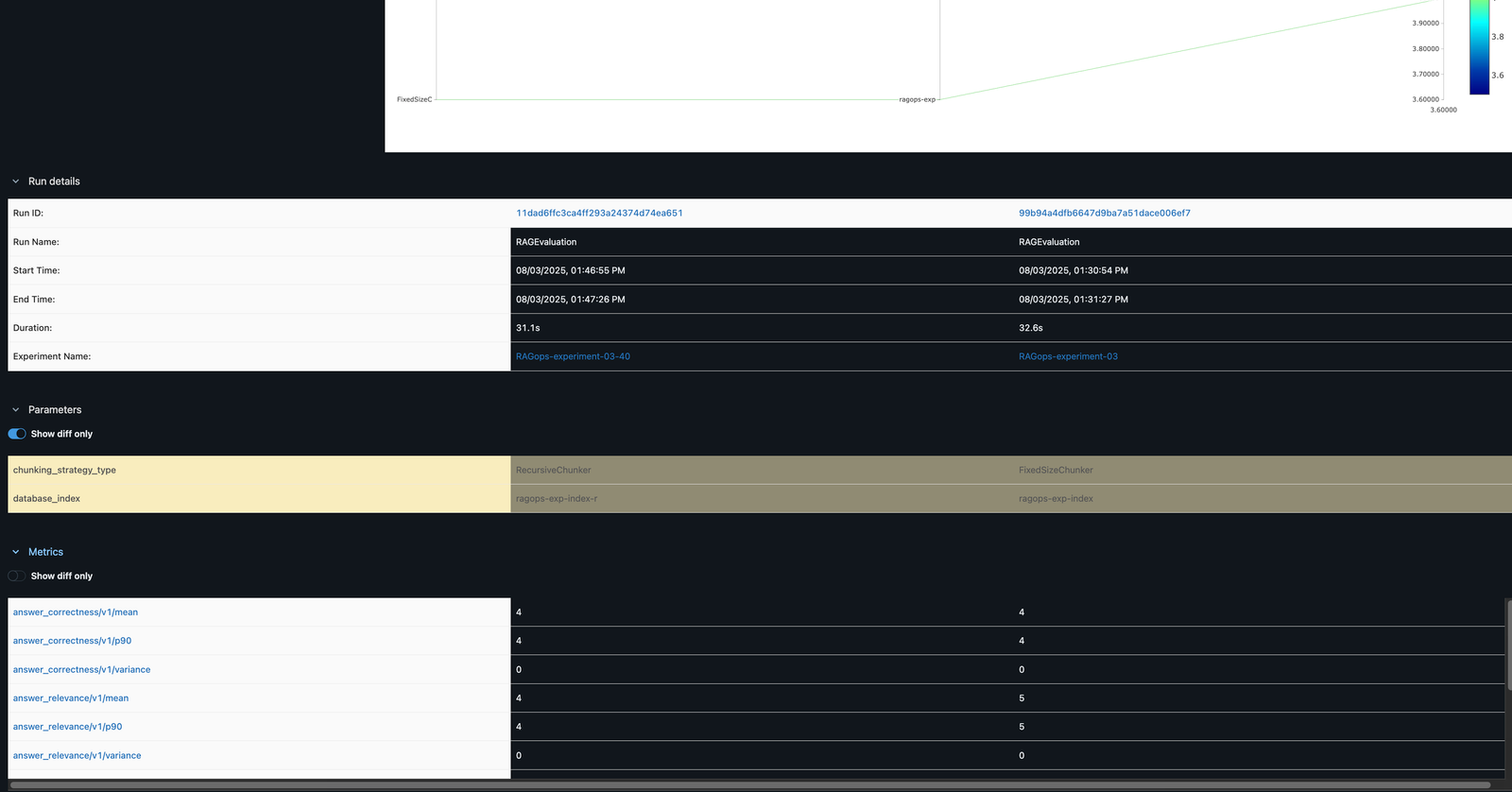

Comparing experiments

In the MLflow UI, you can compare runs side by side. For example, comparing FixedSizeChunker and RecursiveChunker as shown in the following screenshot reveals differences in metrics such as answer_similarity (a difference of 1 point), providing actionable insights for pipeline optimization.

Automation with Amazon SageMaker pipelines

After systematically experimenting with and optimizing each component of the RAG workflow through SageMaker managed MLflow, the next step is transforming these validated configurations into production-ready automated pipelines. Although MLflow experiments help identify the optimal combination of chunking strategies, embedding models, and retrieval parameters, manually reproducing these configurations across environments can be error-prone and inefficient.

To produce the automated RAG pipeline, we use SageMaker Pipelines, which helps teams codify their experimentally validated RAG workflows into automated, repeatable pipelines that maintain consistency from development through production. By converting the successful MLflow experiments into pipeline definitions, teams can make sure the exact same chunking, embedding, retrieval, and evaluation steps that performed well in testing are reliably reproduced in production environments.

SageMaker Pipelines offers serverless workflow orchestration for converting experimental notebook code into a production-grade pipeline, versioning and tracking pipeline configurations alongside MLflow experiments, and automating the end-to-end RAG workflow. The automated SageMaker pipeline-based RAG workflow offers dependency management, comprehensive custom testing and validation before production deployment, and CI/CD integration for automated pipeline promotion.

With SageMaker Pipelines, you can automate your entire RAG workflow, from data preparation to evaluation, as reusable, parameterized pipeline definitions. This provides the following benefits:

- Reproducibility – Pipeline definitions capture all dependencies, configurations, and execution logic in version-controlled code

- Parameterization – Key RAG parameters (chunk sizes, model endpoints, retrieval settings) can be quickly modified between runs

- Monitoring – Pipeline executions provide detailed logs and metrics for each step

- Governance – Built-in lineage tracking supports full auditability of data and model artifacts

- Customization – Serverless workflow orchestration is customizable to your unique enterprise landscape, with scalable infrastructure and flexibility with instances optimized for CPU, GPU, or memory-intensive tasks, memory configuration, and concurrency optimization

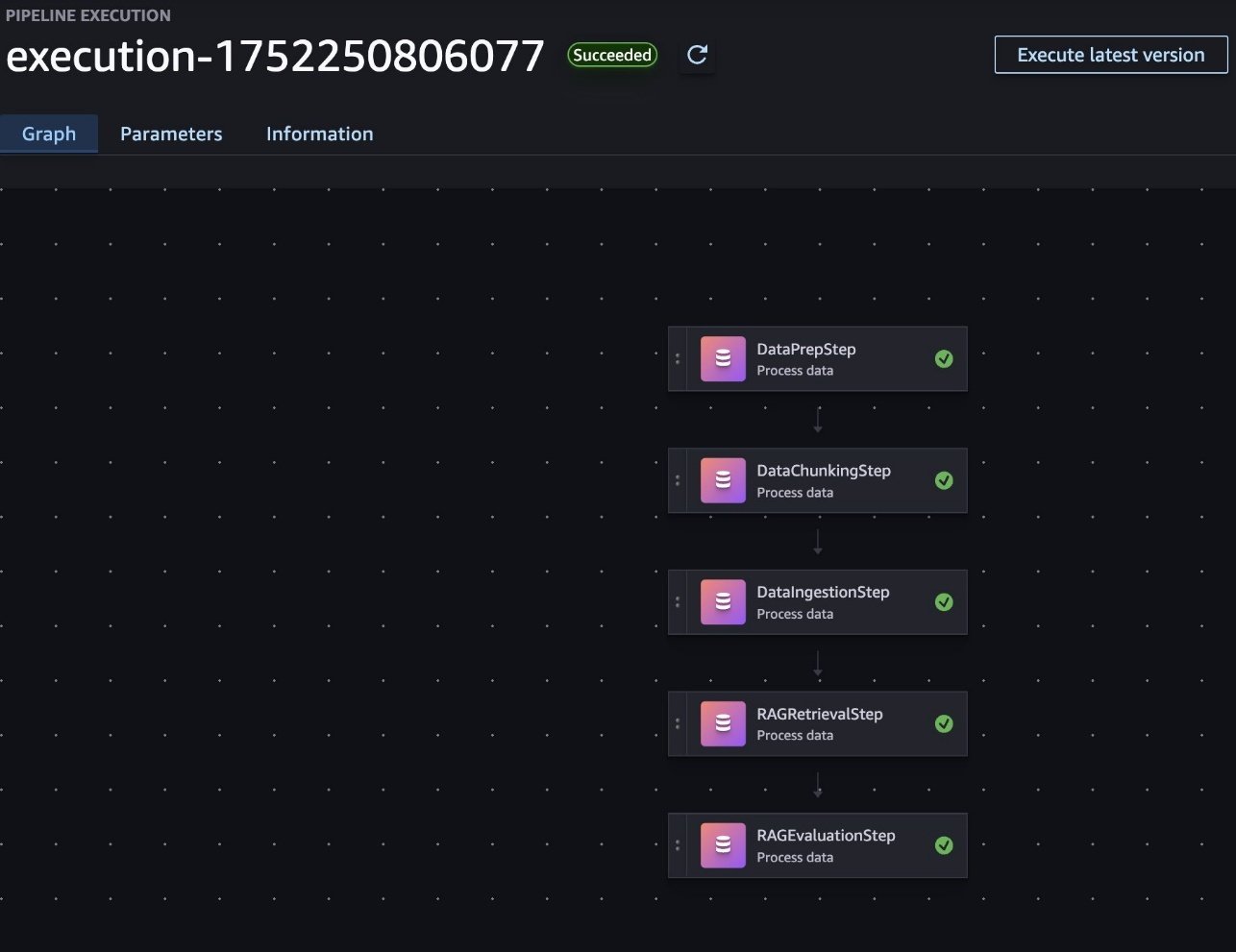

To implement a RAG workflow in SageMaker pipelines, each major component of the RAG process (data preparation, chunking, ingestion, retrieval and generation, and evaluation) is included in a SageMaker processing job. These jobs are then orchestrated as steps within a pipeline, with data flowing between them, as shown in the following screenshot. This structure allows for modular development, quick debugging, and the ability to reuse components across different pipeline configurations.

The key RAG configurations are exposed as pipeline parameters, enabling flexible experimentation with minimal code changes. For example, the following code snippets showcase the modifiable parameters for RAG configurations, which can be used as pipeline configurations:
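One way such parameters could be declared is sketched below. The parameter names and default values are hypothetical; the `ParameterInteger`, `ParameterFloat`, and `ParameterString` classes come from the SageMaker Python SDK and are imported lazily so the plain-Python part runs without it.

```python
from typing import Dict, List, Union

ParamValue = Union[int, float, str]

# Hypothetical defaults; the names and values are illustrative only.
RAG_DEFAULTS: Dict[str, ParamValue] = {
    "ChunkingStrategy": "RecursiveChunker",
    "ChunkSize": 500,
    "ChunkOverlap": 50,
    "TopK": 5,
    "Temperature": 0.1,
}


def parameter_kind(value: ParamValue) -> str:
    """Map a Python default value to a pipeline parameter type name."""
    if isinstance(value, bool):
        # bool is a subclass of int, so reject it explicitly in this sketch.
        raise TypeError("boolean parameters are not handled in this sketch")
    if isinstance(value, int):
        return "Integer"
    if isinstance(value, float):
        return "Float"
    if isinstance(value, str):
        return "String"
    raise TypeError(f"unsupported parameter type: {type(value).__name__}")


def to_pipeline_parameters(defaults: Dict[str, ParamValue]) -> List[object]:
    """Build SageMaker pipeline parameter objects from plain defaults."""
    from sagemaker.workflow.parameters import (
        ParameterFloat,
        ParameterInteger,
        ParameterString,
    )

    classes = {
        "Integer": ParameterInteger,
        "Float": ParameterFloat,
        "String": ParameterString,
    }
    return [
        classes[parameter_kind(value)](name=name, default_value=value)
        for name, value in defaults.items()
    ]
```

The resulting list can be passed as the `parameters` argument when the pipeline is defined, so each execution can override a value without a code change.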

In this post, we provide two agentic RAG pipeline automation approaches to building the SageMaker pipeline, each with its own benefits: single-step SageMaker pipelines and multi-step pipelines.

The single-step pipeline approach is designed for simplicity, running the entire RAG workflow as one unified process. This setup is ideal for straightforward or less complex use cases, because it minimizes pipeline management overhead. With fewer steps, the pipeline can start quickly, benefitting from reduced execution times and streamlined development. This makes it a practical option when rapid iteration and ease of use are the primary concerns.

The multi-step pipeline approach is preferred for enterprise scenarios where flexibility and modularity are essential. By breaking down the RAG process into distinct, manageable stages, organizations gain the ability to customize, swap, or extend individual components as needs evolve. This design enables plug-and-play adaptability, making it straightforward to reuse or reconfigure pipeline steps for various workflows. Additionally, the multi-step format allows for granular monitoring and troubleshooting at each stage, providing detailed insights into performance and facilitating robust enterprise management. For enterprises seeking maximum flexibility and the ability to tailor automation to unique requirements, the multi-step pipeline approach is the superior choice.

CI/CD for an agentic RAG pipeline

Now we integrate the SageMaker RAG pipeline with CI/CD. CI/CD is important for making a RAG solution enterprise-ready because it provides faster, more reliable, and scalable delivery of AI-powered workflows. Specifically for enterprises, CI/CD pipelines automate the integration, testing, deployment, and monitoring of changes in the RAG system, which brings several key benefits, such as faster and more reliable updates, version control and traceability, consistency across environments, modularity and flexibility for customization, enhanced collaboration and monitoring, risk mitigation, and cost savings. This aligns with general CI/CD benefits in software and AI systems, emphasizing automation, quality assurance, collaboration, and continuous feedback essential to enterprise AI readiness.

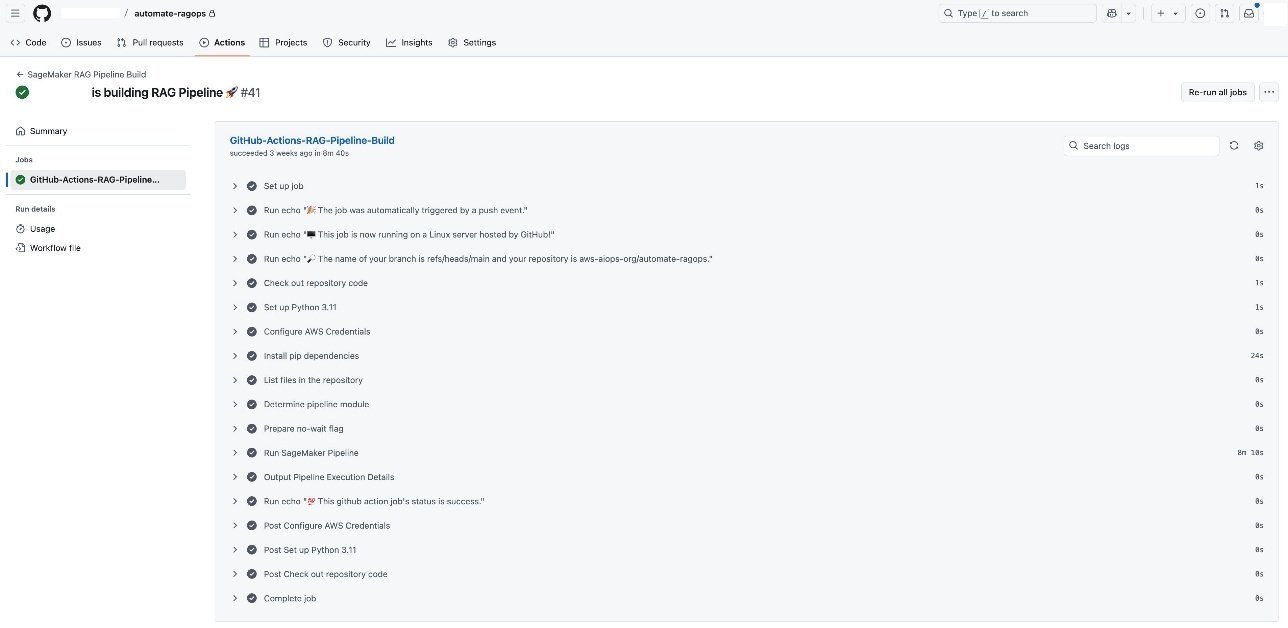

When your SageMaker RAG pipeline definition is in place, you can implement robust CI/CD practices by integrating your development workflow and toolsets already enabled at your enterprise. This setup makes it possible to automate code promotion, pipeline deployment, and model experimentation through simple Git triggers, so changes are versioned, tested, and systematically promoted across environments. For demonstration, in this post, we show the CI/CD integration using GitHub Actions as the CI/CD orchestrator. Each code change, such as refining chunking strategies or updating pipeline steps, triggers an end-to-end automation workflow, as shown in the following screenshot. You can use the same CI/CD pattern with your choice of CI/CD tool instead of GitHub Actions, if needed.

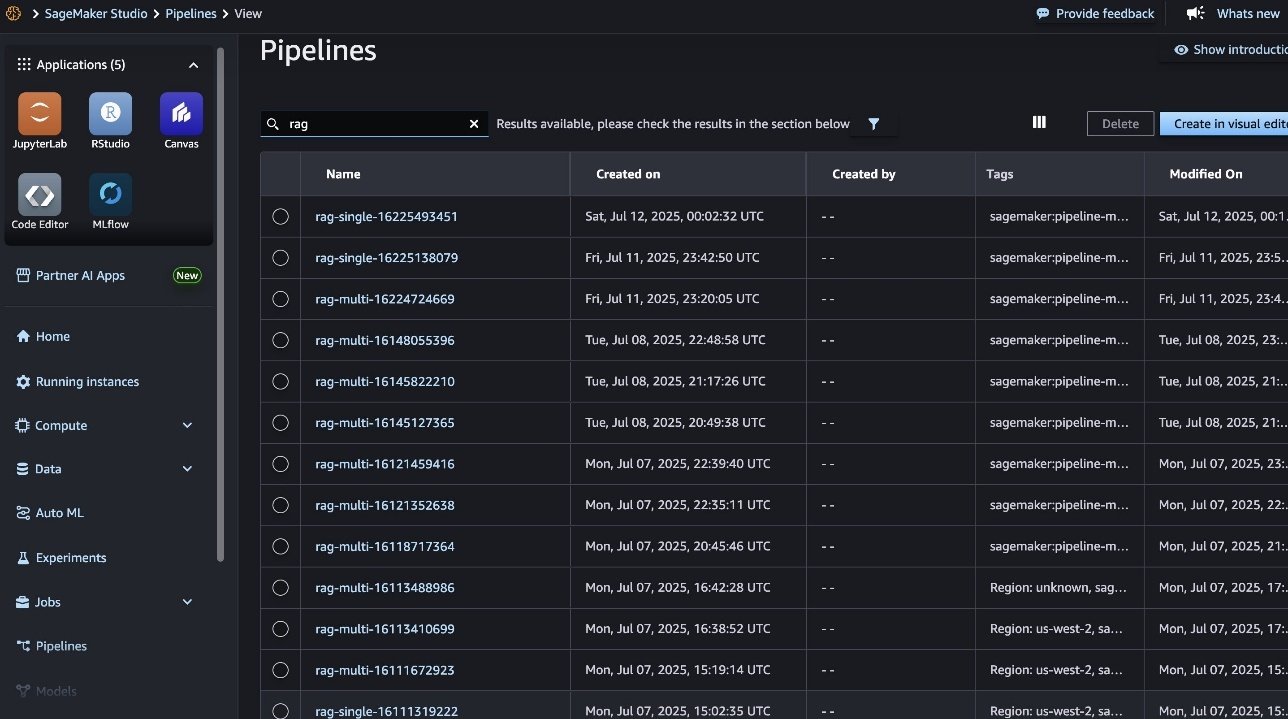

Each GitHub Actions CI/CD execution automatically triggers the SageMaker pipeline (shown in the following screenshot), allowing for seamless scaling of serverless compute infrastructure.

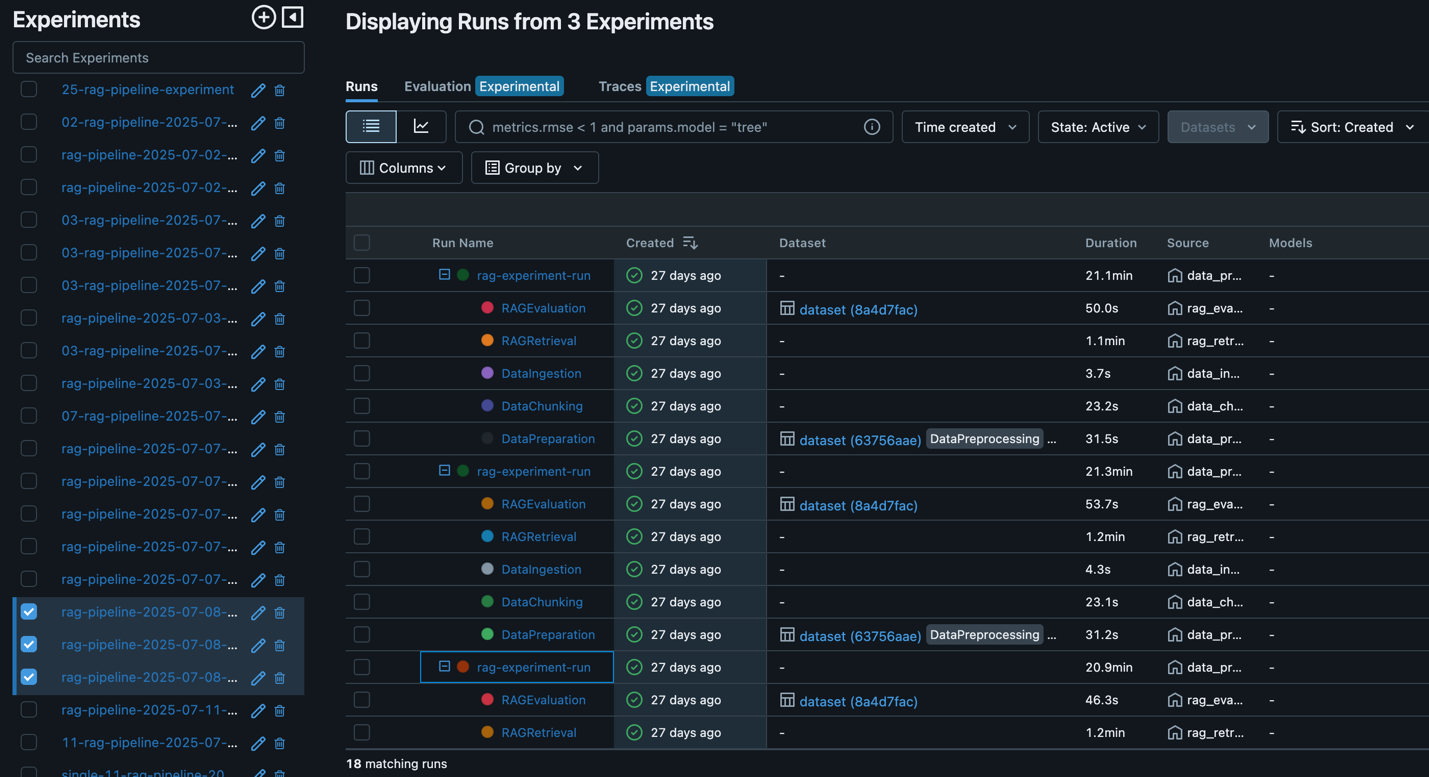
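As a sketch of what a CI job step might run to start the pipeline, the following uses the Boto3 SageMaker client's `start_pipeline_execution` call. The pipeline name, parameter names, and display name are hypothetical, and credentials and Region are assumed to come from the CI environment.

```python
from typing import Dict, List


def to_pipeline_parameter_list(overrides: Dict[str, object]) -> List[Dict[str, str]]:
    """Convert overrides into the Name/Value list the API expects."""
    return [{"Name": name, "Value": str(value)} for name, value in overrides.items()]


def start_rag_pipeline(
    pipeline_name: str, overrides: Dict[str, object], display_name: str
) -> str:
    """Start one pipeline execution and return its ARN."""
    import boto3  # imported lazily; only needed when actually calling AWS

    client = boto3.client("sagemaker")
    response = client.start_pipeline_execution(
        PipelineName=pipeline_name,
        PipelineExecutionDisplayName=display_name,
        PipelineParameters=to_pipeline_parameter_list(overrides),
    )
    return response["PipelineExecutionArn"]
```

Using a commit identifier in the display name is one way to tie an execution back to the change that triggered it.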

Throughout this cycle, SageMaker managed MLflow records every executed pipeline (shown in the following screenshot), so you can seamlessly review results, compare performance across different pipeline runs, and manage the RAG lifecycle.

After an optimal RAG pipeline configuration is determined, the new desired configuration (Git version tracking captured in MLflow as shown in the following screenshot) can be promoted to higher stages or environments directly through an automated workflow, minimizing manual intervention and reducing risk.

Clean up

To avoid unnecessary costs, delete resources such as the SageMaker managed MLflow tracking server, SageMaker pipelines, and SageMaker endpoints when your RAG experimentation is complete. You can visit the SageMaker Studio console to delete resources that are no longer needed, or call the appropriate AWS API actions.

Conclusion

By integrating SageMaker AI, SageMaker managed MLflow, and Amazon OpenSearch Service, you can build, evaluate, and deploy RAG pipelines at scale. This approach provides the following benefits:

- Automated and reproducible workflows with SageMaker Pipelines and MLflow, minimizing manual steps and reducing the risk of human error

- Advanced experiment tracking and comparison for different chunking strategies, embedding models, and LLMs, so every configuration is logged, analyzed, and reproducible

- Actionable insights from both traditional and LLM-based evaluation metrics, helping teams make data-driven improvements at every stage

- Seamless deployment to production environments, with automated promotion of validated pipelines and robust governance throughout the workflow

Automating your RAG pipeline with SageMaker Pipelines brings additional benefits: it enables consistent, version-controlled deployments across environments, supports collaboration through modular, parameterized workflows, and supports full traceability and auditability of data, models, and results. With built-in CI/CD capabilities, you can confidently promote your entire RAG solution from experimentation to production, knowing that each stage meets quality and compliance standards.

Now it’s your turn to operationalize RAG workflows and accelerate your AI initiatives. Explore SageMaker Pipelines and managed MLflow using the solution from the GitHub repository to unlock scalable, automated, and enterprise-grade RAG solutions.

About the authors

Sandeep Raveesh is a GenAI Specialist Solutions Architect at AWS. He works with customers through their AIOps journey across model training, generative AI applications like agents, and scaling generative AI use cases. He also focuses on Go-To-Market strategies, helping AWS build and align products to solve industry challenges in the generative AI space. You can find Sandeep on LinkedIn.

Blake Shin is an Associate Specialist Solutions Architect at AWS who enjoys learning about and working with new AI/ML technologies. In his free time, Blake enjoys exploring the city and playing music.

Unlock model insights with log probability support for Amazon Bedrock Custom Model Import

You can use Amazon Bedrock Custom Model Import to seamlessly integrate your customized models—such as Llama, Mistral, and Qwen—that you have fine-tuned elsewhere into Amazon Bedrock. The experience is completely serverless, minimizing infrastructure management while providing your imported models with the same unified API access as native Amazon Bedrock models. Your custom models benefit from automatic scaling, enterprise-grade security, and native integration with Amazon Bedrock features such as Amazon Bedrock Guardrails and Amazon Bedrock Knowledge Bases.

Understanding how confident a model is in its predictions is essential for building reliable AI applications, particularly when working with specialized custom models that might encounter domain-specific queries.

With log probability support now added to Custom Model Import, you can access information about your models’ confidence in their predictions at the token level. This enhancement provides greater visibility into model behavior and enables new capabilities for model evaluation, confidence scoring, and advanced filtering techniques.

In this post, we explore how log probabilities work with imported models in Amazon Bedrock. You will learn what log probabilities are, how to enable them in your API calls, and how to interpret the returned data. We also highlight practical applications—from detecting potential hallucinations to optimizing RAG systems and evaluating fine-tuned models—that demonstrate how these insights can improve your AI applications, helping you build more trustworthy solutions with your custom models.

Understanding log probabilities

In language models, a log probability represents the logarithm of the probability that the model assigns to a token in a sequence. These values indicate how confident the model is about each token it generates or processes. Log probabilities are expressed as negative numbers, with values closer to zero indicating higher confidence. For example, a log probability of -0.1 corresponds to approximately 90% confidence, while a value of -3.0 corresponds to about 5% confidence. By examining these values, you can identify when a model is highly certain versus when it’s making less confident predictions. Log probabilities provide a quantitative measure of how likely the model considered each generated token, offering valuable insight into the confidence of its output. By analyzing them, you can:

- Gauge confidence across a response: Assess how confident the model was in different sections of its output, helping you identify where it was certain versus uncertain.

- Score and compare outputs: Compare overall sequence likelihood (by adding or averaging log probabilities) to rank or filter multiple model outputs.

- Detect potential hallucinations: Identify sudden drops in token-level confidence, which can flag segments that might require verification or review.

- Reduce RAG costs with early pruning: Run short, low-cost draft generations based on retrieved contexts, compute log probabilities for those drafts, and discard low-scoring candidates early, avoiding unnecessary full-length generations or expensive reranking while keeping only the most promising contexts in the pipeline.

- Build confidence-aware applications: Adapt system behavior based on certainty levels. For example, trigger clarifying prompts, provide fallback responses, or flag responses for human review.

Overall, log probabilities are a powerful tool for interpreting and debugging model responses with measurable certainty—particularly valuable for applications where understanding why a model responded in a certain way can be as important as the response itself.

Prerequisites

To use log probability support with custom model import in Amazon Bedrock, you need:

- An active AWS account with access to Amazon Bedrock

- A custom model created in Amazon Bedrock using the Custom Model Import feature after July 31, 2025, when the log probabilities support was released

- Appropriate AWS Identity and Access Management (IAM) permissions to invoke models through the Amazon Bedrock Runtime

Introducing log probabilities support in Amazon Bedrock

With this release, Amazon Bedrock now allows models imported using the Custom Model Import feature to return token-level log probabilities as part of the inference response.

When invoking a model through the Amazon Bedrock InvokeModel API, you can access token log probabilities by setting "return_logprobs": true in the JSON request body. With this flag enabled, the model’s response will include additional fields providing log probabilities for both the prompt tokens and the generated tokens, so that customers can analyze the model’s confidence in its predictions. These log probabilities let you quantitatively assess how confident your custom models are when processing inputs and generating responses. The granular metrics allow for better evaluation of response quality, troubleshooting of unexpected outputs, and optimization of prompts or model configurations.

Let’s walk through an example of invoking a custom model on Amazon Bedrock with log probabilities enabled and examine the output format. Suppose you have already imported a custom model (for instance, a fine-tuned Llama 3.2 1B model) into Amazon Bedrock and have its model Amazon Resource Name (ARN). You can invoke this model using the Amazon Bedrock Runtime SDK (Boto3 for Python in this example) as shown in the following example:
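The following is a minimal sketch of such a call, built from the parameters described in this section. The "return_logprobs" flag is the one named above; the other payload key names ("prompt", "max_gen_len", "temperature", "stop") are assumptions that depend on the imported model's request format, and the model ARN must be supplied by the caller.

```python
import json
from typing import Any, Dict


def build_request_body(prompt: str) -> str:
    """Build the JSON request body with log probabilities enabled."""
    payload = {
        "prompt": prompt,
        "max_gen_len": 50,        # assumed key name for the generation length limit
        "temperature": 0.5,       # moderate randomness
        "stop": [".", "\n"],      # stop at a period or a newline
        "return_logprobs": True,  # request token-level log probabilities
    }
    return json.dumps(payload)


def invoke_with_logprobs(model_arn: str, prompt: str) -> Dict[str, Any]:
    """Invoke an imported model and return the parsed JSON response."""
    import boto3  # imported lazily; only needed when actually calling AWS

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=model_arn,
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())
```

Calling `invoke_with_logprobs(model_arn, "The quick brown fox jumps")` with your own imported model ARN would return the parsed response as a Python dictionary.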

In the preceding code, we send a prompt ("The quick brown fox jumps") to our custom imported model. We configure standard inference parameters: a maximum generation length of 50 tokens, a temperature of 0.5 for moderate randomness, and a stop condition (either a period or a newline). The "return_logprobs": True parameter tells Amazon Bedrock to return log probabilities in the response.

The InvokeModel API returns a JSON response containing three main components: the standard generated text output, metadata about the generation process, and now log probabilities for both prompt and generated tokens. These values reveal the model’s internal confidence for each token prediction, so you can understand not just what text was produced, but how certain the model was at each step of the process. The following is an example response from the "quick brown fox jumps" prompt, showing log probabilities (appearing as negative numbers):

The raw API response provides token IDs paired with their log probabilities. To make this data interpretable, we need to first decode the token IDs using the appropriate tokenizer (in this case, the Llama 3.2 1B tokenizer), which maps each ID back to its actual text token. Then we convert log probabilities to probabilities by applying the exponential function, translating these values into more intuitive probabilities between 0 and 1. We have implemented these transformations using custom code (not shown here) to produce a human-readable format where each token appears alongside its probability, making the model’s confidence in its predictions immediately clear.
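A transformation along these lines could be sketched as follows. This is not the custom code referred to above: the `id_to_token` dictionary is a hypothetical stand-in for a real tokenizer's decode step, and the entry layout (a list whose first element may be None, followed by dictionaries mapping token IDs to log probabilities) follows the description in this post.

```python
import math
from typing import Dict, List, Mapping, Optional, Sequence, Union

TokenId = Union[int, str]
LogprobEntry = Optional[Mapping[TokenId, float]]


def to_readable(
    entries: Sequence[LogprobEntry], id_to_token: Mapping[int, str]
) -> List[Optional[Dict[str, float]]]:
    """Replace token IDs with text and log probabilities with probabilities."""
    readable: List[Optional[Dict[str, float]]] = []
    for entry in entries:
        if entry is None:
            # The first prompt position has no preceding context to predict from.
            readable.append(None)
            continue
        readable.append(
            {
                # JSON object keys arrive as strings, so normalize to int first.
                id_to_token.get(int(token_id), f"<{token_id}>"): math.exp(logprob)
                for token_id, logprob in entry.items()
            }
        )
    return readable
```

Unknown token IDs are kept as a bracketed placeholder rather than dropped, so a vocabulary mismatch stays visible in the output.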

Let’s break down what this tells us about the model’s internal processing:

- generation: This is the actual text generated by the model (in our example, it’s a continuation of the prompt that we sent to the model). This is the same field you would get normally from any model invocation.

- prompt_token_count and generation_token_count: These indicate the number of tokens in the input prompt and in the output, respectively. In our example, the prompt was tokenized into six tokens, and the model generated five tokens in its completion.

- stop_reason: The reason the generation stopped ("stop" means the model naturally stopped at a stop sequence or end-of-text, "length" means it hit the max token limit, and so on). In our case it shows "stop", indicating the model stopped on its own or because of the stop condition we provided.

- prompt_logprobs: This array provides log probabilities for each token in the prompt. As the model processes your input, it continuously predicts what should come next based on what it has seen so far. These values measure which tokens in your prompt were expected or surprising to the model.

  - The first entry is None because the very first token has no preceding context. The model cannot predict anything without prior information. Each subsequent entry contains token IDs mapped to their log probabilities. We have converted these IDs to readable text and transformed the log probabilities into percentages for easier understanding.

  - You can observe the model’s increasing confidence as it processes familiar sequences. For example, after seeing The quick brown, the model predicted fox with 95.1% confidence. After seeing the full context up to fox, it predicted jumps with 81.1% confidence.

  - Many positions show multiple tokens with their probabilities, revealing alternatives the model considered. For instance, at the second position, the model evaluated both The (2.7%) and Question (30.6%), which means the model considered both tokens viable at that position. This added visibility helps you understand where the model weighted alternatives and can reveal when it was more uncertain or had difficulty choosing from multiple options.

  - Notably low probabilities appear for some tokens (quick received just 0.01%), indicating the model found these words unexpected in their context.

  - The overall pattern tells a clear story: individual words initially received low probabilities, but as the complete quick brown fox jumps phrase emerged, the model’s confidence increased dramatically, showing it recognized this as a familiar expression.

  - When multiple tokens in your prompt consistently receive low probabilities, your phrasing might be unusual for the model. This uncertainty can affect the quality of completions. Using these insights, you can reformulate prompts to better align with patterns the model encountered in its training data.

- logprobs: This array contains log probabilities for each token in the model’s generated output. The format is similar: a dictionary mapping token IDs to their corresponding log probabilities.

  - After decoding these values, we can see that the tokens over, the, lazy, and dog all have high probabilities. This demonstrates the model recognized it was completing the well-known phrase the quick brown fox jumps over the lazy dog, a common pangram that the model appears to have strong familiarity with.

  - In contrast, the final period (newline) token has a much lower probability (30.3%), revealing the model’s uncertainty about how to conclude the sentence. This makes sense because the model had multiple valid options: ending the sentence with a period, continuing with additional content, or choosing another punctuation mark altogether.

Practical use cases of log probabilities

Token-level log probabilities from the Custom Model Import feature provide valuable insights into your model’s decision-making process. These metrics transform how you interact with your custom models by revealing their confidence levels for each generated token. Here are impactful ways to use these insights:

Ranking multiple completions

You can use log probabilities to quantitatively rank multiple generated outputs for the same prompt. When your application needs to choose between different possible completions—whether for summarization, translation, or creative writing—you can calculate each completion’s overall likelihood by averaging or adding the log probabilities across all its tokens.

Example:

Prompt: Translate the phrase "Battre le fer pendant qu'il est chaud"

- Completion A: "Strike while the iron is hot" (Average log probability: -0.39)

- Completion B: "Beat the iron while it is hot." (Average log probability: -0.46)

In this example, Completion A receives a higher log probability score (closer to zero), indicating the model found this idiomatic translation more natural than the more literal Completion B. This numerical approach enables your application to automatically select the most probable output or present multiple candidates ranked by the model’s confidence level.

This ranking capability extends beyond translation to many scenarios where multiple valid outputs exist—including content generation, code completion, and creative writing—providing an objective quality metric based on the model’s confidence rather than relying solely on subjective human judgment.
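The ranking step can be sketched as follows, assuming you already have the per-token log probabilities for each candidate completion. The function names are illustrative. Averaging is used here rather than summing so that longer completions are not penalized simply for having more tokens.

```python
from typing import Dict, List, Sequence, Tuple


def average_logprob(token_logprobs: Sequence[float]) -> float:
    """Length-normalized sequence score: the mean token log probability."""
    if not token_logprobs:
        raise ValueError("a completion needs at least one token")
    return sum(token_logprobs) / len(token_logprobs)


def rank_completions(
    candidates: Dict[str, Sequence[float]]
) -> List[Tuple[str, float]]:
    """Return (completion, score) pairs, most likely first."""
    scored = [(text, average_logprob(logprobs)) for text, logprobs in candidates.items()]
    # Scores closer to zero indicate higher model confidence.
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

An application could take the first element of the returned list as the selected output, or show the whole list ordered by score.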

Detecting hallucinations and low-confidence answers

Models might produce hallucinations—plausible-sounding but factually incorrect statements—when handling ambiguous prompts, complex queries, or topics outside their expertise. Log probabilities provide a practical way to detect these instances by revealing the model’s internal uncertainty, helping you identify potentially inaccurate information even when the output appears confident.

By analyzing token-level log probabilities, you can identify which parts of a response the model was potentially uncertain about, even when the text appears confident on the surface. This capability is especially valuable in retrieval-augmented generation (RAG) systems, where responses should be grounded in retrieved context. When a model has relevant information available, it typically generates answers with higher confidence. Conversely, low confidence across multiple tokens suggests the model might be generating content without sufficient supporting information.

Example:

- Prompt:

- Model output:

In this example, we intentionally asked about a fictional metric—Portfolio Synergy Quotient (PSQ)—to demonstrate how log probabilities reveal uncertainty in model responses. Despite producing a professional-sounding definition for this non-existent financial concept, the token-level confidence scores tell a revealing story. The confidence scores shown below are derived by applying the exponential function to the log probabilities returned by the model.

- PSQ shows medium confidence (63.8%), indicating that the model recognized the acronym format but wasn’t highly certain about this specific term.

- Common finance terminology like classes (98.2%) and portfolio (92.8%) exhibit high confidence, likely because these are standard concepts widely used in financial contexts.

- Critical connecting concepts show notably low confidence: measure (14.0%) and diversification (31.8%) reveal the model’s uncertainty when attempting to explain what PSQ means or does.

- Functional words like is (45.9%) and of (56.6%) hover at medium confidence levels, suggesting uncertainty about the overall structure of the explanation.

By identifying these low-confidence segments, you can implement targeted safeguards in your applications—such as flagging content for verification, retrieving additional context, generating clarifying questions, or applying confidence thresholds for sensitive information. This approach helps create more reliable AI systems that can distinguish between high-confidence knowledge and uncertain responses.
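A simple threshold-based safeguard could be sketched like this. The 0.35 cutoff is a hypothetical value chosen for illustration and would need tuning for a real model and domain; the inputs are assumed to be decoded tokens aligned with their log probabilities.

```python
import math
from typing import List, Sequence, Tuple


def flag_low_confidence(
    tokens: Sequence[str],
    logprobs: Sequence[float],
    threshold: float = 0.35,  # hypothetical cutoff; tune per model and domain
) -> List[Tuple[str, float]]:
    """Return (token, probability) pairs whose probability is below the threshold."""
    if len(tokens) != len(logprobs):
        raise ValueError("tokens and logprobs must be the same length")
    flagged = []
    for token, logprob in zip(tokens, logprobs):
        probability = math.exp(logprob)
        if probability < threshold:
            flagged.append((token, probability))
    return flagged
```

With the probabilities quoted in the example above and this cutoff, only measure and diversification would be flagged for review.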

Monitoring prompt quality

When engineering prompts for your application, log probabilities reveal how well the model understands your instructions. If the first few generated tokens show unusually low probabilities, it often signals that the model struggled to interpret what you are asking.

By tracking the average log probability of the initial tokens—typically the first 5–10 generated tokens—you can quantitatively measure prompt clarity. Well-structured prompts with clear context typically produce higher probabilities because the model immediately knows what to do. Vague or underspecified prompts often yield lower initial token likelihoods as the model hesitates or searches for direction.

Example:

Prompt comparison for customer service responses:

- Basic prompt:

- Average log probability of first five tokens: -1.215 (lower confidence)

- Optimized prompt:

- Average log probability of first five tokens: -0.333 (higher confidence)

The optimized prompt generates higher log probabilities, demonstrating that precise instructions and clear context reduce the model’s uncertainty. Rather than making absolute judgments about prompt quality, this approach lets you measure relative improvement between versions. You can directly observe how specific elements—role definitions, contextual details, and explicit expectations—increase model confidence. By systematically measuring these confidence scores across different prompt iterations, you build a quantitative framework for prompt engineering that reveals exactly when and how your instructions become unclear to the model, enabling continuous data-driven refinement.
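The relative comparison between two prompt versions can be sketched as below. The function names and the default window of five tokens are assumptions for illustration; the inputs are the generated-token log probabilities returned for each prompt version.

```python
from typing import Sequence


def initial_confidence(token_logprobs: Sequence[float], k: int = 5) -> float:
    """Average log probability of the first k generated tokens."""
    head = list(token_logprobs[:k])
    if not head:
        raise ValueError("no generated tokens to score")
    return sum(head) / len(head)


def prompt_improvement(
    baseline_logprobs: Sequence[float],
    candidate_logprobs: Sequence[float],
    k: int = 5,
) -> float:
    """Positive values mean the candidate prompt starts with higher confidence."""
    return initial_confidence(candidate_logprobs, k) - initial_confidence(
        baseline_logprobs, k
    )
```

Because a single generation is noisy, it is reasonable to average this difference over several test inputs before concluding that one prompt version is clearer.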

Reducing RAG costs with early pruning

In traditional RAG implementations, systems retrieve 5–20 documents and generate complete responses using these retrieved contexts. This approach drives up inference costs because every retrieved context consumes tokens regardless of actual usefulness.

Log probabilities enable a more cost-effective alternative through early pruning. Instead of immediately processing the retrieved documents in full:

- Generate draft responses based on each retrieved context

- Calculate the average log probability across these short drafts

- Rank contexts by their average log probability scores

- Discard low-scoring contexts that fall below a confidence threshold

- Generate the complete response using only the highest-confidence contexts

This approach works because contexts that contain relevant information produce higher log probabilities in the draft generation phase. When the model encounters helpful context, it generates text with greater confidence, reflected in log probabilities closer to zero. Conversely, irrelevant or tangential contexts produce more uncertain outputs with lower log probabilities.

By filtering contexts before full generation, you can reduce token consumption while maintaining or even improving answer quality. This shifts the process from a brute-force approach to a targeted pipeline that directs full generation only toward contexts where the model demonstrates genuine confidence in the source material.
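The pruning steps above can be sketched as follows. Here `draft_logprobs` is a hypothetical callable that runs a short draft generation for one context and returns the token log probabilities; the threshold and the maximum number of contexts to keep are illustrative values, not recommendations.

```python
from typing import Callable, List, Sequence, Tuple


def prune_contexts(
    contexts: Sequence[str],
    draft_logprobs: Callable[[str], Sequence[float]],  # hypothetical draft-generation call
    threshold: float = -1.0,   # illustrative minimum average log probability
    keep_at_most: int = 3,     # illustrative cap on contexts passed to full generation
) -> List[Tuple[str, float]]:
    """Score each context by its draft's average log probability and keep the best."""
    scored = []
    for context in contexts:
        logprobs = draft_logprobs(context)
        if not logprobs:
            continue  # no draft tokens were produced, so there is nothing to score
        scored.append((context, sum(logprobs) / len(logprobs)))
    # Rank from most to least confident, then apply the threshold and the cap.
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(context, score) for context, score in scored if score >= threshold][
        :keep_at_most
    ]
```

Only the contexts returned here would be included in the prompt for the final, full-length generation.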

Fine-tuning evaluation

When you have fine-tuned a model for your specific domain, log probabilities offer a quantitative way to assess the effectiveness of your training. By analyzing confidence patterns in responses, you can determine if your model has developed proper calibration—showing high confidence for correct domain-specific answers and appropriate uncertainty elsewhere.

A well-calibrated fine-tuned model should assign higher probabilities to accurate information within its specialized area while maintaining lower confidence when operating outside its training domain. Problems with calibration appear in two main forms. Overconfidence occurs when the model assigns high probabilities to incorrect responses, suggesting it hasn’t properly learned the boundaries of its knowledge. Underconfidence manifests as consistently low probabilities despite generating accurate answers, indicating that training might not have sufficiently reinforced correct patterns.

By systematically testing your model across various scenarios and analyzing the log probabilities, you can identify areas needing additional training or detect potential biases in your current approach. This creates a data-driven feedback loop for iterative improvements, making sure your model performs reliably within its intended scope while maintaining appropriate boundaries around its expertise.
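One way to check for these two calibration problems is to bucket test responses by confidence and compare each bucket's mean confidence with its accuracy. The sketch below assumes you have, for each test case, the response's average log probability and a correctness label from your own evaluation; the bucket edges are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def calibration_gaps(
    samples: Sequence[Tuple[float, bool]],  # (average log probability, is_correct)
    edges: Sequence[float] = (0.0, 0.5, 0.8, 1.0),  # illustrative bucket boundaries
) -> List[Dict[str, object]]:
    """Compare mean confidence with accuracy inside each confidence bucket."""
    # exp(average log probability) is the geometric mean of token probabilities.
    scored = [(math.exp(logprob), correct) for logprob, correct in samples]
    report = []
    for low, high in zip(edges, edges[1:]):
        is_last = high == edges[-1]
        bucket = [
            (conf, correct)
            for conf, correct in scored
            if low <= conf < high or (is_last and conf == high)
        ]
        if not bucket:
            continue
        mean_confidence = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, correct in bucket if correct) / len(bucket)
        report.append(
            {
                "range": (low, high),
                "count": len(bucket),
                "mean_confidence": mean_confidence,
                "accuracy": accuracy,
                # Positive gap suggests overconfidence, negative suggests underconfidence.
                "gap": mean_confidence - accuracy,
            }
        )
    return report
```

Buckets with a large positive gap point to areas where the model is confidently wrong, while a large negative gap points to correct answers produced with little confidence.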

Getting started

Here’s how to start using log probabilities with models imported through the Amazon Bedrock Custom Model Import feature:

- Enable log probabilities in your API calls: Add

"return_logprobs": trueto your request payload when invoking your custom imported model. This parameter works with both theInvokeModelandInvokeModelWithResponseStreamAPIs. Begin with familiar prompts to observe which tokens your model predicts with high confidence compared to which it finds surprising. - Analyze confidence patterns in your custom models: Examine how your fine-tuned or domain-adapted models respond to different inputs. The log probabilities reveal whether your model is appropriately calibrated for your specific domain—showing high confidence where it should be certain.

- Develop confidence-aware applications: Implement practical use cases such as hallucination detection, response ranking, and content verification to make your applications more robust. For example, you can flag low-confidence sections of responses for human review or select the highest-confidence response from multiple generations.
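As a rough sketch of the steps above, the following Python helpers build a request payload with "return_logprobs" enabled, flag low-confidence tokens, and pick the most confident of several generations. Only the "return_logprobs" field comes from this post. The other payload field names ("prompt", "max_gen_len") depend on the imported model and are assumptions, as are the (token, logprob) input shape and the 0.5 threshold.

```python
import json
import math


def build_logprob_request(prompt, max_tokens=256):
    """Serialize a request body with log probabilities enabled.

    "prompt" and "max_gen_len" are assumed field names; check the request
    format of your imported model.
    """
    return json.dumps(
        {"prompt": prompt, "max_gen_len": max_tokens, "return_logprobs": True}
    )


def flag_low_confidence(tokens, threshold=0.5):
    """Return (token, probability) pairs whose probability is below threshold.

    tokens: list of (text, logprob) pairs (hypothetical shape).
    """
    return [
        (text, math.exp(logprob))
        for text, logprob in tokens
        if math.exp(logprob) < threshold
    ]


def pick_most_confident(candidates):
    """Return the text of the candidate with the highest mean log probability.

    candidates: list of (text, token_logprobs) pairs.
    """
    best = max(candidates, key=lambda c: sum(c[1]) / len(c[1]))
    return best[0]
```

Flagged tokens could be routed to human review, while pick_most_confident covers the case of choosing among multiple generations.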

Conclusion

Log probability support for Amazon Bedrock Custom Model Import offers enhanced visibility into model decision-making. This feature transforms previously opaque model behavior into quantifiable confidence metrics that developers can analyze and use.

Throughout this post, we have demonstrated how to enable log probabilities in your API calls, interpret the returned data, and use these insights for practical applications. From detecting potential hallucinations and ranking multiple completions to optimizing RAG systems and evaluating fine-tuning quality, log probabilities offer tangible benefits across diverse use cases.

For customers working with customized foundation models like Llama, Mistral, or Qwen, these insights address a fundamental challenge: understanding not just what a model generates, but how confident it is in its output. This distinction becomes critical when deploying AI in domains requiring high reliability—such as finance, healthcare, or enterprise applications—where incorrect outputs can have significant consequences.

By revealing confidence patterns across different types of queries, log probabilities help you assess how well your model customizations have affected calibration, highlighting where your model excels and where it might need refinement. Whether you are evaluating fine-tuning effectiveness, debugging unexpected responses, or building systems that adapt to varying confidence levels, this capability represents an important advancement in bringing greater transparency and control to generative AI development on Amazon Bedrock.

We look forward to seeing how you use log probabilities to build more intelligent and trustworthy applications with your custom imported models. This capability demonstrates the commitment from Amazon Bedrock to provide developers with tools that enable confident innovation while delivering the scalability, security, and simplicity of a fully managed service.

About the authors

Manoj Selvakumar is a Generative AI Specialist Solutions Architect at AWS, where he helps organizations design, prototype, and scale AI-powered solutions in the cloud. With expertise in deep learning, scalable cloud-native systems, and multi-agent orchestration, he focuses on turning emerging innovations into production-ready architectures that drive measurable business value. He is passionate about making complex AI concepts practical and enabling customers to innovate responsibly at scale—from early experimentation to enterprise deployment. Before joining AWS, Manoj worked in consulting, delivering data science and AI solutions for enterprise clients, building end-to-end machine learning systems supported by strong MLOps practices for training, deployment, and monitoring in production.

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has been working on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use generative AI to achieve their desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves traveling, working out, and exploring new things.

Lokeshwaran Ravi is a Senior Deep Learning Compiler Engineer at AWS, specializing in ML optimization, model acceleration, and AI security. He focuses on enhancing efficiency, reducing costs, and building secure ecosystems to democratize AI technologies, making cutting-edge ML accessible and impactful across industries.

Revendra Kumar is a Senior Software Development Engineer at Amazon Web Services. In his current role, he focuses on model hosting and inference MLOps on Amazon Bedrock. Prior to this, he worked as an engineer on hosting Quantum computers on the cloud and developing infrastructure solutions for on-premises cloud environments. Outside of his professional pursuits, Revendra enjoys staying active by playing tennis and hiking.

Migrate from Anthropic’s Claude 3.5 Sonnet to Claude 4 Sonnet on Amazon Bedrock

This post is co-written with Gareth Jones from Anthropic.

Anthropic’s Claude 4 Sonnet model has launched on Amazon Bedrock, marking a significant advancement in foundation model capabilities. Consequently, the deprecation timeline for Anthropic’s Claude 3.5 Sonnet (v1 and v2) was announced. This evolution creates a dual imperative for production AI applications: the opportunity to harness enhanced performance and the operational necessity to migrate before deprecation. Organizations must treat model migrations as a core component of their AI inference strategy, because poor execution can result in service disruptions, performance regressions, and cost overruns.

This post provides a systematic approach to migrating from Anthropic’s Claude 3.5 Sonnet to Claude 4 Sonnet on Amazon Bedrock. We examine the key model differences, highlight essential migration considerations, and deliver proven best practices to transform this necessary transition into a strategic advantage that drives measurable value for your organization.

Overview of model differences

Understanding the specific changes between model versions is the first step in planning a successful migration. The migration from Anthropic’s Claude 3.5 Sonnet to Claude 4 Sonnet introduces capability and behavioral shifts that you can take advantage of:

- Increased context window – Anthropic’s Claude 4 Sonnet expands the context window from 200,000 tokens to 1 million tokens (beta). This allows applications to process and reason over codebases, financial reports, or lengthy legal documents in a single prompt, simplifying complex workflows.

- Native reasoning mechanisms – Whereas Anthropic’s Claude 3.5 Sonnet models rely on the chain-of-thought (CoT) prompting technique, Claude 4 models introduce built-in, API-enabled reasoning features like extended thinking and interleaved thinking. These give the model dedicated computational time to reason before answering, dramatically improving performance on complex problems.

- Advanced tool use – Anthropic’s Claude 4 Sonnet model significantly upgrades tool use capabilities. The model can execute multiple tools in parallel and use extended thinking between tool calls, enabling more sophisticated and efficient agentic workflows compared to the sequential tool use of older models.

To find out more about the model differences, refer to the Complete Model Comparison Guide.

A successful migration hinges on a few critical technical and strategic considerations, which we discuss in the following sections. Addressing these points proactively can minimize risk and accelerate your path to production.

Prerequisites

Before you can start using Anthropic’s Claude 4 Sonnet model, you must enable access to the model in Amazon Bedrock. For instructions, see Request access to an Amazon Bedrock foundation model. Make sure you review and accept the model’s End User License Agreement (EULA) before proceeding with the model access request. First confirm that Claude 4 Sonnet is available in your intended AWS Region, because model support can differ by Region. For an updated list of supported Regions, refer to Model support by AWS Region in Amazon Bedrock. Additionally, you can use cross-Region inference (CRIS) by specifying an inference profile when running model inference, which can help improve throughput and maximize resource availability. For a list of supported cross-Region inference profiles, refer to Supported Regions and models for inference profiles.

API changes and code updates

When migrating on Amazon Bedrock, you can use either the model-specific InvokeModel API or the unified Converse API.

If you use the InvokeModel API, the migration is straightforward. You only need to update the modelId in your code, because the request body structure for Anthropic’s Messages API remains consistent between versions:

- Old model ID – 'anthropic.claude-3-5-sonnet-20240620-v1:0' or 'anthropic.claude-3-5-sonnet-20241022-v2:0'

- New model ID – 'anthropic.claude-sonnet-4-20250514-v1:0'

If you use a CRIS profile for the model, be sure to specify the correct inference profile ID in one of the source Regions, for example, 'us.anthropic.claude-sonnet-4-20250514-v1:0'.
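To illustrate the InvokeModel path, here is a minimal Python sketch in which the only thing that changes between versions is the model ID. The helper names are hypothetical, the client argument is assumed to be a boto3 bedrock-runtime client created elsewhere, and the new ID used is the CRIS inference profile ID quoted above.

```python
import json

OLD_MODEL_ID = "anthropic.claude-3-5-sonnet-20241022-v2:0"
# CRIS inference profile ID quoted in this post
NEW_MODEL_ID = "us.anthropic.claude-sonnet-4-20250514-v1:0"


def build_invoke_request(model_id, user_text, max_tokens=512):
    """Build InvokeModel keyword arguments using the Anthropic Messages format."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": user_text}]}
        ],
    }
    return {"modelId": model_id, "body": json.dumps(body)}


def invoke(client, model_id, user_text):
    """Call InvokeModel and return the parsed JSON response body.

    client is assumed to be boto3.client("bedrock-runtime").
    """
    response = client.invoke_model(**build_invoke_request(model_id, user_text))
    return json.loads(response["body"].read())
```

Because the request body is identical for both IDs, switching models in this sketch is a one-line configuration change.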

This migration is an excellent opportunity to switch to the Converse API. It provides a standardized request/response format for language models on Amazon Bedrock, making future migrations to different models or providers much simpler. See the following code:
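A minimal sketch of a Converse API call in Python might look as follows. It is not necessarily the authors' exact listing: the helper names are hypothetical, the inference settings are placeholder values, and the client is assumed to be a boto3 bedrock-runtime client created elsewhere.

```python
def build_converse_request(model_id, user_text, system_text=None,
                           max_tokens=512, temperature=0.2):
    """Build keyword arguments for the Converse API."""
    request = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system_text:
        request["system"] = [{"text": system_text}]
    return request


def converse_text(client, request):
    """Send the request and join the text blocks of the assistant message.

    client is assumed to be boto3.client("bedrock-runtime").
    """
    response = client.converse(**request)
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)
```

With this shape, moving to another model on Amazon Bedrock usually means changing only the modelId value passed to build_converse_request.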

The following are some key changes:

- Updated text editor tool – Developers using Anthropic’s built-in text editor tool for agentic workflows must be aware that the tool definition has been updated. The tool type is now text_editor_20250124 and the tool name is now str_replace_based_edit_tool. The computer-use-2024-10-22 value in the anthropic_beta header can be removed because the text editor tool is generally available in Anthropic’s Claude 4. Any code using this tool must be updated to reflect these changes. For more details, see Anthropic defined tools.

- Removed undo_edit command – The undo_edit command is no longer supported in Anthropic’s Claude 4 Sonnet. Any code using this command should be removed.

- New refusal stop reason – The model now introduces a new refusal stop reason. This reason is returned when the model declines to generate content due to safety policies. Application logic that handles model responses must be updated to recognize and manage this new stop reason to help prevent unexpected behavior or errors. For example, when you receive a refusal stop reason in a multi-turn interaction, you must reset the conversation context by either removing or updating the turn that was refused.
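The refusal handling described above could be sketched as follows. This assumes the parsed InvokeModel response body in Anthropic's Messages format, where the stop reason appears in a stop_reason field, and a history list that ends with the user turn that was just sent. The function name is hypothetical.

```python
def apply_response(history, response_body):
    """Update the conversation history from a parsed Messages API response.

    Returns (new_history, refused). On a refusal, the user turn that was
    refused is dropped so it is not resent with the next request.
    """
    if response_body.get("stop_reason") == "refusal":
        # Reset context by removing the refused (last) user turn
        return history[:-1], True
    assistant_turn = {
        "role": "assistant",
        "content": response_body.get("content", []),
    }
    return history + [assistant_turn], False
```

An application might then show a neutral message to the user and let them rephrase, rather than retrying the same turn.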

Prompt engineering and behavioral shifts

Don’t assume your existing prompts will work perfectly with the new model. Adherence to model-specific best practices is key.

Refer to Claude 4 prompt engineering best practices released by Anthropic to achieve optimal results. For example, Anthropic’s Claude models perform best when prompts are structured with XML tags that clearly separate different parts of the input.
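As an illustration of XML-structured prompts, the short Python helper below wraps each part of the input in its own tag. The tag names (instructions, document, question) are illustrative choices, not names required by the model.

```python
def build_tagged_prompt(instructions, document, question):
    """Wrap each part of the input in its own XML tag (tag names are illustrative)."""
    return (
        f"<instructions>\n{instructions}\n</instructions>\n"
        f"<document>\n{document}\n</document>\n"
        f"<question>\n{question}\n</question>"
    )
```

The resulting string can be sent as the user message text, which keeps reference material visibly separate from the task description.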

Anthropic’s Claude 4 Sonnet is designed to follow instructions more precisely. This is a major advantage, but might mean it is less verbose or elaborative than Claude 3.5 Sonnet unless explicitly prompted to be. Your system prompts defining the AI’s persona might need adjustments.

New reasoning features

Anthropic’s Claude 4 Sonnet model’s built-in extended thinking is a powerful capability. To unlock the deep reasoning capabilities of the new models, developers can enable extended thinking by including the thinking keyword argument in their API call. But make sure to use it strategically—extended thinking incurs additional costs because reasoning tokens are billed as output tokens at standard model rates. You will be charged for the full thinking process (not just the summary you see), and costs can scale with the thinking budget you set.

We recommend enabling extended thinking for tasks requiring deep, multi-step analysis where accuracy is paramount (for example, complex scientific problems or advanced coding challenges).

We recommend disabling extended thinking for simple queries or latency-sensitive applications. The feature adds to both cost (reasoning tokens are billed) and response time. For many tasks, a well-crafted CoT prompt is still the most efficient choice.
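To make the cost trade-off concrete, here is a small arithmetic sketch. It relies only on the statement above that reasoning tokens are billed as output tokens. The price used in the usage note is a made-up placeholder, not an actual Amazon Bedrock rate.

```python
def estimate_output_cost(answer_tokens, thinking_tokens, usd_per_1k_output_tokens):
    """Estimate output cost when thinking tokens are billed as output tokens.

    usd_per_1k_output_tokens is supplied by the caller; look up the current
    rate for your model and Region.
    """
    billed_tokens = answer_tokens + thinking_tokens
    return billed_tokens / 1000 * usd_per_1k_output_tokens
```

With a placeholder rate of 0.015 USD per 1,000 output tokens, a 300-token answer costs about 0.0045 USD without thinking and about 0.0645 USD when the model also uses 4,000 thinking tokens.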

To use extended thinking, simply update the Converse API call by adding the additionalModelRequestFields parameter with the thinking configuration. You can specify the maximum thinking tokens that the model can use by setting a value for budget_tokens. Note that maxTokens should be larger than budget_tokens for extended thinking. See the following code:
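A minimal sketch of such a call in Python is shown below. It is not necessarily the authors' exact listing: the helper names and default token values are hypothetical, and the client that would send the request is assumed to be a boto3 bedrock-runtime client created elsewhere.

```python
def build_thinking_request(model_id, user_text, budget_tokens=4000, max_tokens=8000):
    """Build Converse API keyword arguments with extended thinking enabled."""
    if max_tokens <= budget_tokens:
        raise ValueError("maxTokens should be larger than budget_tokens")
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
        "additionalModelRequestFields": {
            "thinking": {"type": "enabled", "budget_tokens": budget_tokens}
        },
    }


def split_thinking(response):
    """Separate reasoning text from the final answer in a Converse response."""
    reasoning, answer = [], []
    for block in response["output"]["message"]["content"]:
        if "reasoningContent" in block:
            text = block["reasoningContent"].get("reasoningText", {}).get("text", "")
            reasoning.append(text)
        elif "text" in block:
            answer.append(block["text"])
    return "".join(reasoning), "".join(answer)
```

The request would be sent with client.converse(**build_thinking_request(...)), and split_thinking lets an application log the reasoning separately from the answer it shows to users.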

The following table shows an example of extended thinking and how it can improve response accuracy. We prompt Anthropic’s Claude 3.5 Sonnet v2 and Claude 4 Sonnet (with thinking enabled) with an example prompt:

The table shows responses from the two models. With extended thinking, the model is able to carefully reason through the problem and arrive at the correct answer.