A new report has found that almost three-quarters of organisations globally have already incorporated artificial intelligence (AI) into their cybersecurity defences. The research, released by Arctic...

The artificial intelligence industry faces a fundamental paradox. While machines can now process data at massive scale, learning remains surprisingly inefficient, facing the challenge of...

In an era of extraordinary geopolitical volatility, the U.S. Intelligence Community (IC) is confronting increasingly complex challenges that demand innovative technological solutions. Unprecedented amounts of data...

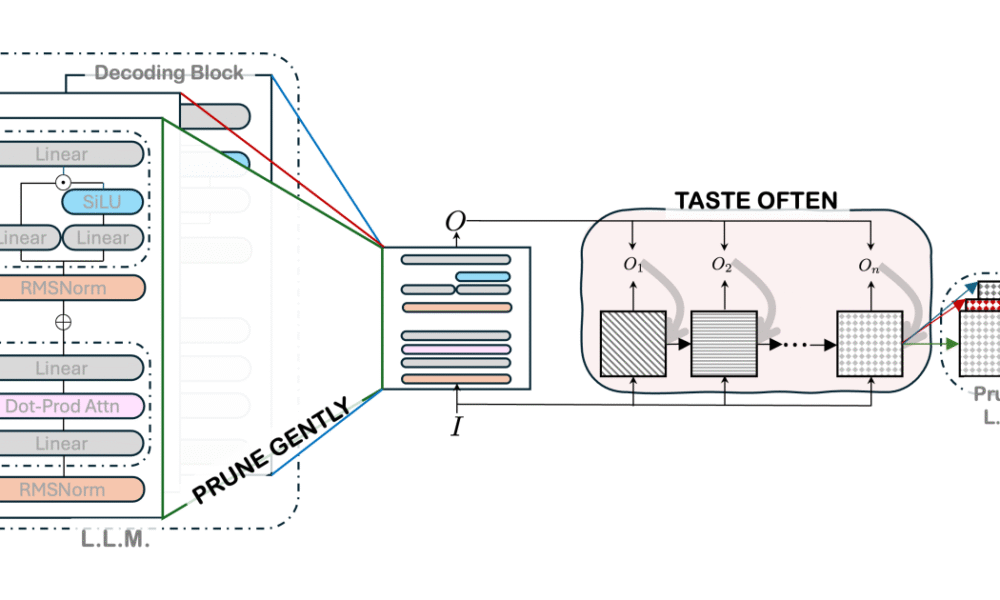

In recent years, large language models (LLMs) have revolutionized the field of natural-language processing and made significant contributions to computer vision, speech recognition, and language translation....

August 8, 2025, 10:40 am IDT

The launch of a new GPT model invariably ignites fervent anticipation, a sentiment Mark Chen, Head of Research at OpenAI,...

AI agents are everywhere. From virtual customer service bots to marketing automation tools, tech innovators are scrambling to launch agents that promise faster service, smarter decisions...

Gartner’s latest Hype Cycle for Artificial Intelligence identifies AI agents and AI-ready data as the most rapidly advancing technologies of 2025, reflecting a shift from generative...

Since January 2025, ten elite university teams from around the world have taken part in the first-ever Amazon Nova AI Challenge...

Foundation models (FMs) such as large language models and vision-language models are growing in popularity, but their energy inefficiency and computational cost remain an obstacle to...

The rapid advancement of artificial intelligence (AI) has fundamentally transformed human-technology interactions. These innovations represent a groundbreaking shift in the human-tech nexus, introducing new opportunities and...