Advancements in artificial intelligence (AI) have long been driven by the belief that increasing data and computational power can improve performance. This “brute force” approach has...

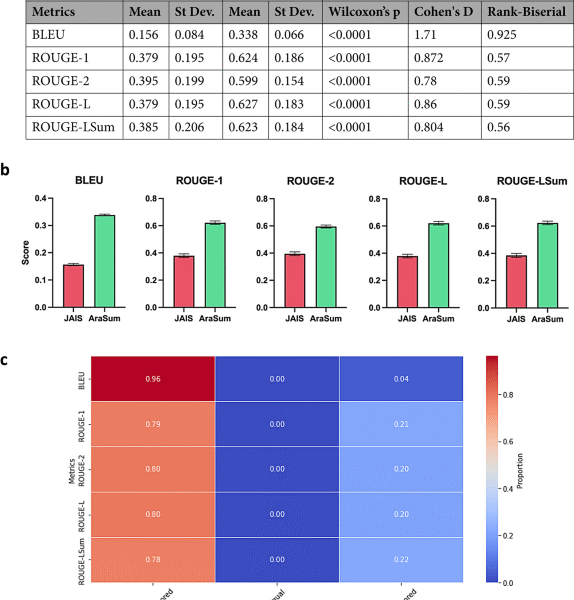

Data source: To overcome the lack of accessible, verified medical conversation datasets entirely in Arabic, we adopted a synthetic data generation approach commonly seen in...

Large machine learning models based on the transformer architecture have recently demonstrated extraordinary results on a range of vision and language tasks. But such large models...

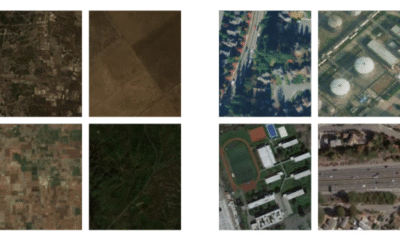

Geospatial technologies have rapidly ascended to a position of paramount importance across the globe. By providing a better understanding of Earth’s ever-evolving landscape and our intricate...

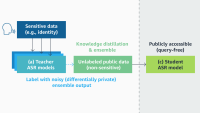

Knowledge distillation (KD) is one of the most effective ways to deploy large-scale language models in environments where low latency is essential. KD involves transferring the...
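The teaser does not say which distillation objective the post uses. As a generic illustration only, here is a minimal sketch of the widely used soft-target formulation. The teacher's and student's logits are both softened with a temperature, the student is pushed toward the teacher's distribution via KL divergence, and that term is blended with ordinary cross-entropy on the true label. The function names and the `temperature` and `alpha` parameters are hypothetical and are not taken from the post.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    label: int,
    temperature: float = 2.0,  # hypothetical default
    alpha: float = 0.5,        # hypothetical weight on the soft-target term
) -> float:
    """Generic KD loss sketch: alpha * soft-target KL + (1 - alpha) * hard-label CE."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Scale by T^2 so the soft term's gradients stay comparable across temperatures
    soft = kl * temperature ** 2
    # Ordinary cross-entropy against the ground-truth label at temperature 1
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard


teacher = [2.0, 1.0, 0.1]
print(distillation_loss([0.0, 0.0, 0.0], teacher, label=0))  # untrained-looking student
print(distillation_loss(teacher, teacher, label=0))          # student matches teacher
```

When the student's logits equal the teacher's, the KL term is zero and only the hard-label term remains. This is why the second call returns a smaller loss than the first.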

Teaching large language models (LLMs) to reason is an active topic of research in natural-language processing, and a popular approach to that problem is the so-called...

Knowledge distillation is a popular technique for compressing large machine learning models into manageable sizes, to make them suitable for low-latency applications such as voice assistants....

Validation curves in a five-task multitask learning setup, where training minimizes the sum of the task losses. The tasks corresponding to the blue, purple, and red...

The models behind machine learning (ML) services are continuously being updated, and the new models are usually more accurate than the old ones. But an overall improvement in...

Sound detection is a popular application of today’s smart speakers. Alexa customers who activate Alexa Guard when they leave the house, for instance, receive notifications if their Alexa-enabled...