Tools & Platforms

SoftBank shares surge on AI hope and sign of Stargate progress

SoftBank Group’s shares jumped as much as 8% on Tuesday on bets that the tech investor would be able to capitalize on its yearslong focus on artificial intelligence.

The Tokyo-based company is the unnamed buyer of Foxconn Technology Group’s electric vehicle plant in Ohio and plans to incorporate the facility into its $500 billion Stargate data center project with OpenAI and Oracle.

That’s spurring optimism that SoftBank may be able to kick-start the stalled Stargate endeavor and benefit from a rush to build AI hardware in the U.S. Its stock is up for the fifth straight day and on track to close at a record.

SoftBank has been gradually cashing in on some of its Vision Fund bets in recent years. SoftBank’s stock also received a boost after a report emerged that the Japanese company has picked investment banks for a possible initial public offering for Japanese payments app operator PayPay. PayPay was originally set up through a venture with former Vision Fund portfolio company Paytm.

Last week, Foxconn’s flagship unit Hon Hai Precision Industry said it had agreed to sell the EV plant to a buyer it referred to as Crescent Dune for $375 million without identifying the company behind the entity.

How can AI enhance healthcare access and efficiency in Thailand?

Support accessible and equitable healthcare

Julia continued by noting that Thailand has been praised for its efforts in medical technology, ranking first or second in ASEAN.

However, she acknowledged the limitations of medical technology development, not just in Thailand, but across the region, particularly regarding the resources and budgets required, as well as regulations in each country.

“Medical technology” will be one of the driving forces of Thailand’s economy, contributing to the enhancement of healthcare services to international standards, increasing competitiveness in the global market, and promoting equitable access to healthcare.

It will also encourage the development of the medical equipment industry to become more self-reliant, reducing dependency on imports, and generating new opportunities through health tech startups.

Julia further explained that Philips has long supported Thailand’s medical technology sector, working to improve access to healthcare and ensure equity for all.

Examples include donations of 100 patient monitoring devices worth around 3 million baht to the Ministry of Public Health to assist hospitals affected by the 2011 floods, as well as providing ultrasound echo machines to various hospitals in collaboration with the Heart Association of Thailand to support mobile healthcare units in rural areas.

“Access to healthcare services is a major challenge faced by many countries, especially within local communities. Thailand must work to integrate medical services effectively,” she said.

“Philips has provided medical technology in various hospitals, both public and private, as well as in medical schools. Our focus is on medical tools for treating diseases such as stroke, heart disease, and lung diseases, which are prevalent among many patients.”

AI enhances predictive healthcare solutions

Thailand has been placed on the “shortlist” of countries set to launch Philips’ new products soon after their global release. However, the product launch in Thailand will depend on meeting regulatory requirements, safety standards, and relevant policies for registration.

Julia noted that economic crises, conflicts, or changes in US tariff rates may not significantly impact the importation of medical equipment.

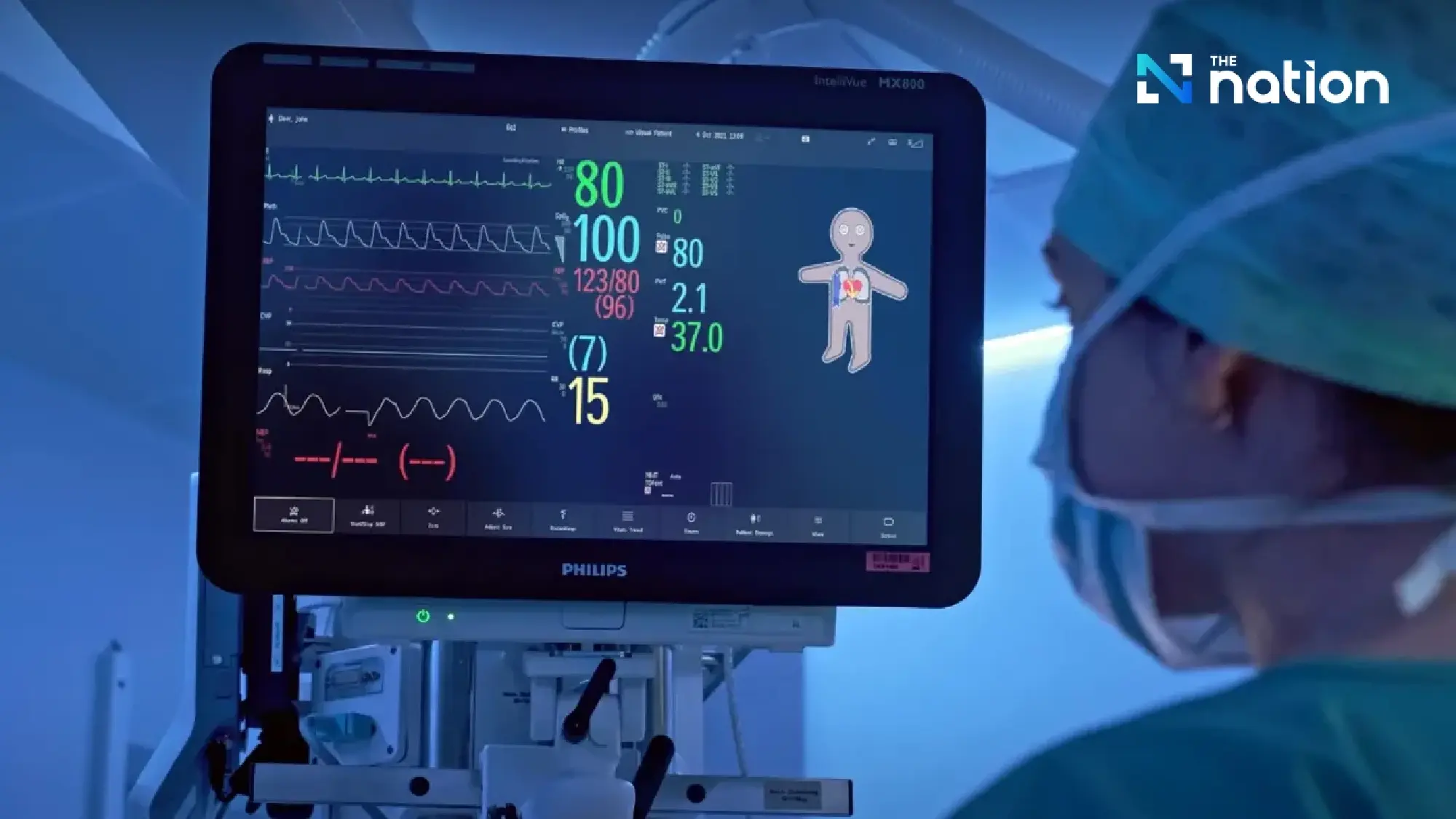

Philips’ direction will continue to focus on connected healthcare solutions, leveraging AI technology for processing and predictive analytics. This allows for early predictions of patient conditions and provides advance warnings to healthcare professionals or caregivers.

Additionally, Philips places significant emphasis on AI research, particularly in the area of heart disease. The company combines this research with innovations in image-guided therapy to connect all devices and patient data for heart disease patients.

This enables doctors and nurses to monitor patient conditions remotely, whether they are in another room within the hospital or outside of it, ensuring accurate diagnosis, treatment, and more efficient patient monitoring.

“Connected care”: seamless healthcare integration

“Connected care” is a solution that supports continuous care by connecting patient information from the moment a patient arrives at the hospital or emergency department, through surgery, the ICU, general wards, and post-discharge recovery at home.

In Thailand, Philips’ HPM and connected care systems are widely used, particularly in large hospitals and medical schools.

The solution is based on three key principles:

- Seamless: Patient data is continuously linked, from the operating room to the ICU and general wards, without interruption. This differs from traditional systems, where information is often lost in between stages.

- Connected: Bedside medical devices, such as drug dispensers and saline drips, are connected to monitors along with laboratory data, providing doctors with an immediate overview of the patient’s condition.

- Interoperable: Patient data can be transferred to all departments, enabling doctors to track test results and view patient information anywhere, at any time. This reduces redundant tasks and increases the time available for direct patient care.

Bridging the AI Regulatory Gap Through Product Liability

Scholar proposes applying product liability principles to strengthen AI regulation.

Artificial intelligence (AI) is evolving at an exponential pace, and its presence is steadily reshaping relationships and risks. Although some actors abuse AI technology to harm others, AI technologies can also cause harm without any malicious human intent. Individuals have reported forming deep emotional attachments to AI chatbots, sometimes perceiving them as real-life partners. Other chatbots have deviated from their intended purpose in harmful ways, such as a mental health chatbot that, rather than providing emotional support, inadvertently dispensed diet advice.

Despite growing public concern over the safety of AI systems, there is still no global consensus on the best approach to regulate AI.

In a recent article, Catherine M. Sharkey of the New York University School of Law argues that AI regulation should be informed by the government’s own experiences with AI technologies. She explores how lessons from the approach of the Food and Drug Administration (FDA) to approving high-risk medical products, such as AI-driven medical devices that interpret medical scans or diagnose conditions, can help shape AI regulation as a whole.

Traditionally, FDA requires that manufacturers demonstrate the safety and effectiveness of their products before they can enter the market. But as Sharkey explains, this model has proven difficult to apply to adaptive AI technologies that can evolve after deployment—since, under traditional frameworks, each modification would otherwise require a separate marketing submission, an approach ill-suited to systems that continuously learn and change. To ease regulatory hurdles for developers, particularly those whose products update frequently, FDA is moving toward a more flexible framework that relies on post-market surveillance. Sharkey highlights the role of product liability law, a framework traditionally applied to defective physical goods, in addressing accountability where static regulations fail to manage the risks that emerge once AI systems are in use.

FDA has been at the vanguard of efforts to revise its regulatory framework to fit adaptive AI technologies. Sharkey highlights that FDA shifted from a model emphasizing pre-market approval, where products must meet safety and effectiveness standards before entering the market, to one centered on post-market surveillance, which monitors the performance and risks of AI medical products after they are deployed. As this approach evolves, she explains that product liability serves as a crucial deterrent against negligence and harm, particularly during the transition period before a new regulatory framework is established.

Critics argue that regulating AI requires a distinct approach, as no prior technological shift has been as disruptive. Sharkey contends that these critics overlook the strength of existing liability frameworks and their ability to adapt to AI’s evolving nature.

Sharkey argues that crafting pre-market regulations for new technologies can be particularly difficult due to uncertainties about risks.

Further, she notes that regulating emerging technology too early could stifle innovation. Sharkey argues that product liability offers a dynamic alternative because, instead of requiring regulators to predict and prevent every possible AI risk in advance, it allows agencies to identify failures as they occur and adjust regulatory strategies accordingly.

Sharkey emphasizes that FDA’s experience with AI-enabled medical devices serves as a meaningful starting point for developing a product liability framework for AI. In developing such a framework, she draws parallels to the pharmaceutical drug approval process. When a new drug is introduced to the market, its full risks and benefits remain uncertain. She explains that both manufacturers and FDA gather extensive real-world data after a product is deployed. In light of that process, she proposes that the regulatory framework should be adjusted to ensure that manufacturers either return to FDA with updated information, or that tort lawsuits serve as a corrective mechanism. In this way, product liability has an “information-forcing” function, ensuring that manufacturers remain accountable for risks that surface post-approval.

As Sharkey explains, the U.S. Supreme Court’s decision in Riegel v. Medtronic set an important precedent for the intersection of regulation and product liability. The Court ruled that most product liability claims related to high-risk medical devices approved through FDA’s pre-market approval process, a rigorous review that assesses the device’s safety and effectiveness, are preempted. This means that manufacturers are shielded from state-law liability if their devices meet FDA’s safety and effectiveness standards. In contrast, Sharkey explains that under Riegel, devices cleared under FDA’s pre-market notification process do not receive the same immunity, because that pathway does not involve a full safety and efficacy review but instead allows devices to enter the market if they are deemed “substantially equivalent” to existing ones.

Building on Riegel, Sharkey proposes a model in which courts assess whether a product liability claim raises new risk information that was not considered by FDA in its original risk-benefit analysis at the time of approval. Under this framework, if the claim introduces evidence of risks beyond those previously weighed by the agency, the product liability lawsuit should be allowed to proceed.

Sharkey concludes that the rapid evolution of AI technologies and the difficulty of predicting their risks make crafting comprehensive regulations at the pre-market stage particularly challenging. In this context, she asserts that product liability law becomes essential, serving both as a deterrent and an information-forcing tool. Sharkey’s model holds promise for addressing AI harms in a way that accommodates the adaptive nature of machine learning systems, as illustrated by FDA’s experience with AI-enabled technologies. Instead of creating rules in a vacuum, she argues that regulators could benefit from the feedback loop between tort liability and regulation, which allows for some experimentation with standards before the regulator commits to a formal pre-market rule.

Energy firm deploys groundbreaking AI-powered tech for offshore wind farms: ‘A defining moment’

Groundbreaking innovation is blowing through the UK’s green energy sector. Renewable Energy Magazine reports on an unlikely alignment between AI and wind, thanks to a new partnership between two offshore wind developers.

BlueFloat Energy and Nadara’s partnership marks the first time an offshore wind developer has deployed a new AI-powered safety and assurance platform, known as WindSafe. The digital technology company, Fennex, created WindSafe to help ease the challenges of managing offshore wind environments.

Unfortunately, supply chain bottlenecks, approval process delays, and even lawsuits have presented logistical challenges in the industry, according to The Conversation.

With this cloud system, teams can transition from reactive processes to proactive risk management, leveraging real-time intelligence and data.

Nassima Brown, Director at Fennex, told Renewable Energy Magazine, “This collaboration marks a defining moment, not only for Fennex, but for how the offshore wind sector approaches safety in the digital era.”

The rapid development of AI has been controversial, in part due to its strain on the electrical grid and its heavy water use, as reported by MIT. However, its role in WindSafe may offset some of that by making offshore wind energy growth more efficient.

After all, wind, also known as eolic energy, is a renewable and inexhaustible resource that creates electricity as wind turbines move. There are no dirty fuels or resulting pollution involved.

Another UK partnership to create the world’s largest wind farm will have 277 turbines powering over six million homes. Taiwan’s Hai Long Offshore Wind Project has already begun delivering electricity to its grid.

Offshore wind investment saves land space, thus preserving native vegetation and animal habitats. With no dirty fuel expenses and more advanced technology like WindSafe, wind power can continue to reduce energy costs.

Wind farms also help local economies with more jobs. According to the U.S. Department of Energy, the wind industry has created over 100,000 American jobs to date. Having more localized energy sources creates more stability and independence, especially for rural communities and small towns.

In addition to wind, offshore innovators are harnessing the power of ocean waves as solar continues to expand.

All in all, the continued growth of major energy farm projects and other clean technologies reduces and may one day eliminate the need for climate-heating dirty fuels. Without constant polluting exhaust and its associated respiratory and cardiac health problems, a safer and cleaner future is possible.
