AI Insights

Scientists Reveal a Human Brain-Like Breakthrough in AI Design

- Current AI technology has hit a wall that prevents it from reaching artificial general intelligence.

- The next design leap involves adding a type of complexity that attempts to mimic the way the human brain works.

- If it reaches its potential, AI could come to think more like a human being, including having intuition. It could even help us understand our brains better.

At the heart of what we call artificial intelligence are artificial neural networks. Their very name implies a biologically inspired innovation, designed to mimic the function of neural networks within the brain. And much like our brains, these networks operate in a three-dimensional space, building both wide and deep layers of artificial neurons.

The groundbreaking nature of this brain-inspired mimicry can’t be overstated. Two of the leading minds behind this breakthrough, John J. Hopfield and Geoffrey E. Hinton, even won the 2024 Nobel Prize in Physics. While the AI models inspired by their work have reimagined technology, these brain-like systems, especially in the age of AI, could also unlock longtime mysteries of our own brains.

But now, artificial intelligence systems could truly become more like human brains, and some researchers believe they know how: AI design must be elevated to another dimension—literally. While current models have certain parameters of width and depth already, AI can be refashioned to have an additional, structured complexity researchers are calling the “height” dimension. Two scientists at the Rensselaer Polytechnic Institute in New York and the City University of Hong Kong explain this brain-inspired idea in a new study published in the journal Patterns this past April.

AI models already have width, the number of nodes in a layer. Each node is a fundamental processing unit that takes inputs and creates outputs within the larger network, like the neurons in your brain working together. There’s also depth, the number of layers in a network. Here’s how Rensselaer Polytechnic Institute’s Ge Wang, Ph.D., a co-author of the study, explains it: “Imagine a city: width is the number of buildings on a street, depth is how many streets you go through, and height is how tall each building is. Any room in any building can communicate with other rooms in the city,” he says in an email. When you add height, as internal wiring or shortcuts within a layer, it creates “richer interactions among neurons without increasing depth or width. It reflects how local neural circuits in the brain … interact to improve information processing.”
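To make the three terms concrete, here is a minimal sketch in plain Python. It is not the study's implementation: the function names (`dense`, `lateral_mix`, `forward`), the toy weights, and the ring-shaped wiring are all hypothetical choices made for illustration. Width is the number of nodes per layer, depth is the number of layers, and the optional lateral matrix stands in for the "height" wiring inside a layer.

```python
import math

def dense(inputs, weights):
    """One ordinary feedforward layer: each row of `weights` feeds one node."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def lateral_mix(activations, lateral):
    """Intra-layer links (assumed form): each node also hears from nodes in its own layer."""
    return [
        math.tanh(a + sum(l * b for l, b in zip(row, activations)))
        for a, row in zip(activations, lateral)
    ]

def forward(x, layers, laterals=None):
    """Run x through the network; depth = len(layers), width = rows per layer."""
    for i, weights in enumerate(layers):
        x = dense(x, weights)
        if laterals is not None:
            x = lateral_mix(x, laterals[i])
    return x

# Depth 2, width 3, with made-up weights.
layers = [
    [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.6, 0.1, 0.4]],
    [[0.2, 0.2, 0.2], [-0.4, 0.9, 0.1], [0.7, -0.3, 0.5]],
]
# Hypothetical lateral wiring: every node links to the other two, not to itself.
ring = [[0.0, 0.3, 0.3], [0.3, 0.0, 0.3], [0.3, 0.3, 0.0]]
laterals = [ring, ring]

x = [1.0, 0.5, -1.0]
plain = forward(x, layers)            # width and depth only
wired = forward(x, layers, laterals)  # same width and depth, plus intra-layer links
```

Both calls use the same number of layers and the same number of nodes per layer; only the wiring inside each layer differs, which is the sense in which the extra dimension adds interactions without adding depth or width.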

Wang and his co-author Feng-Lei Fan, Ph.D., achieve this increased dimensionality within neural networks by introducing intra-layer links and feedback loops. Intra-layer links, Wang says, resemble the lateral connections found in the cortical column of the brain, which is linked with higher-level cognitive functions. These links form connections among neurons in the same layer. Feedback loops, meanwhile, are similar to recurrent signaling, which is where outputs impact inputs. Wang says this could improve a system’s memory, perception, and cognition.

“Together, they help networks evolve over time and settle into stable, meaningful patterns, like how your brain can recognize a face even from a blurry image,” Wang says. “These structures enrich AI’s ability to refine decisions over time, just like the brain’s iterative reasoning.”
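The "blurry image" idea can be sketched with a classic Hopfield-style associative memory, the kind of network Hopfield is known for. This is a textbook toy, not the model proposed in the study; the function names, the eight-unit pattern, and the synchronous update rule are assumptions made for illustration. The output of each step is fed back in as the next input until the state stops changing.

```python
def train(patterns):
    """Hebbian rule: strengthen links between units that are active together."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, state, max_steps=10):
    """Feedback loop: feed the output back in until the state is stable."""
    state = list(state)
    for _ in range(max_steps):
        # Synchronous update: every unit takes the sign of its weighted input.
        new_state = [
            1 if sum(wij * s for wij, s in zip(row, state)) >= 0 else -1
            for row in w
        ]
        if new_state == state:
            break
        state = new_state
    return state

stored = [1, -1, 1, -1, 1, -1, 1, -1]   # the "face" the network remembers
weights = train([stored])
blurry = [1, -1, 1, -1, 1, 1, -1, -1]   # same pattern with two units flipped
restored = recall(weights, blurry)
```

With a single stored pattern and two corrupted units, `restored` comes back equal to `stored`. Larger networks with many stored patterns can settle into the wrong state, so this shows the mechanism rather than a guarantee.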

AI’s most compelling leap into humanlike behavior may be in the birth of large language models like ChatGPT. On June 12, 2017, eight researchers from Google wrote the paper “Attention Is All You Need,” introducing a new deep-learning architecture known as the “transformer,” a technique that focused on attention mechanisms that drastically decreased training times for AI models. This paper largely fueled the subsequent AI boom and is why AI suddenly seemed to be everywhere all at once.

However, it didn’t materialize the “holy grail” of most AI research: artificial general intelligence (AGI). This is the moment when AI systems adapt to truly “think” like a human being. Some futurists consider AGI to be a key pathway to reaching the hypothetical singularity, the point when AI surpasses human cognitive powers.

The reason transformer architecture didn’t fulfill AGI requirements, according to study co-author Wang, is that it has some inherent limitations. Reuters reported in 2024 that AI companies were no longer seeing the previously observed exponential growth in AI capability. AI’s “scaling law” (the idea that larger data sets and more resources would make models better and better) was no longer working. This prompted some experts to believe that new innovations were needed to push these models even further. This new study suggests that integrating intra-layer links and feedback loops could enable models to relate, reflect, and refine outputs, making them both smarter and more energy efficient.

“One misconception is that our perspective merely proposes ‘more complexity,’” Wang says. “Our goal is structured complexity, adding dimensions and dynamics that reflect how intelligence arises in nature and works logically, not just stacking more layers or parameters.”

One fascinating example of how the authors propose adding this complexity is in feedback loops, with “phase transitions” that would foster intelligent behaviors. The core idea is that these transitions can create new behaviors that were not originally present in the system’s individual components. Just as ice melts into water, so too could AI systems “evolve” to stable states.

“For AI, this could mean a system shifting from vague or uncertain outputs to confident, coherent ones as it gathers more context or feedback,” Wang says. “These transitions can mark a point where the AI truly ‘understands’ a task or pattern, much like human intuition kicking in.”

Feedback loops and intra-layer links that mimic ways the human mind arrives at insight and meaning aren’t enough to instantly herald the era of AGI. But, as Wang mentions in his study, these techniques could help take AI models a step beyond transformer architecture, a crucial step if we ever hope to reach that “holy grail” of AI research.

Introducing more brain-like features into AI architecture could not only make AI more transparent (i.e., we know how it arrived at certain conclusions), but it could also help us investigate the mysterious and daunting puzzle of how our own human minds work. The authors note that this application could provide a model for scientists to explore human cognition while also investigating neurological disorders, such as Alzheimer’s and epilepsy.

Although taking inspiration from human biology provides benefits, Wang sees the future of AI as one where neuromorphic architectures (those modeled on the human brain) co-exist with other systems, potentially even quantum systems, which exploit the behavior of subatomic particles and which we are still struggling to understand fully.

“Brain-inspired AI offers elegant solutions to complex problems, especially in perception and adaptability. Meanwhile, AI will also explore strategies unique to digital, analog or even quantum systems,” Wang says. “The sweet spot likely lies in hybrid designs, borrowing from nature and our imagination beyond nature.”

Darren lives in Portland, has a cat, and writes/edits about sci-fi and how our world works. You can find his previous stuff at Gizmodo and Paste if you look hard enough.

To ChatGPT or not to ChatGPT: Professors grapple with AI in the classroom

As shopping period settles, students may notice a new addition to many syllabi: an artificial intelligence policy. As one of his first initiatives as associate provost for artificial intelligence, Michael Littman PhD’96 encouraged professors to implement guidelines for the use of AI.

Littman also recommended that professors “discuss (their) expectations in class” and “think about (their) stance around the use of AI,” he wrote in an Aug. 20 letter to faculty. But professors on campus have applied this advice in different ways, reflecting the range of attitudes towards AI.

In her nonfiction classes, Associate Teaching Professor of English Kate Schapira MFA’06 prohibits AI usage entirely.

“I teach nonfiction because evidence … clarity and specificity are important to me,” she said. AI threatens these principles at a time “when they are especially culturally devalued” nationally.

She added that an overreliance on AI goes beyond the classroom. “It can get someone fired. It can screw up someone’s medication dosage. It can cause someone to believe that they have justification to harm themselves or another person,” she said.

Nancy Khalek, an associate professor of religious studies and history, said she is intentionally designing assignments that are not suitable for AI usage. Instead, she wants students “to engage in reflective assignments, for which things like ChatGPT and the like are not particularly useful or appropriate.”

Khalek said she considers herself an “AI skeptic” — while she acknowledged the tool’s potential, she expressed opposition to “the anti-human aspects of some of these technologies.”

But AI policies vary within and across departments.

Professors “are really struggling with how to create good AI policies, knowing that AI is here to stay, but also valuing some of the intermediate steps that it takes for a student to gain knowledge,” said Aisling Dugan PhD’07, associate teaching professor of biology.

In her class, BIOL 0530: “Principles of Immunology,” Dugan said she allows students to choose to use artificial intelligence for some assignments, but that she requires students to critique their own AI-generated work.

She said this reflection “is a skill that I think we’ll be using more and more of.”

Dugan added that she thinks AI can serve as a “study buddy” for students. She has been working with her teaching assistants to develop an AI chatbot for her classes, which she hopes will eventually answer student questions and supplement the study videos made by her TAs.

Despite this, Dugan still shared concerns over AI in classrooms. “It kind of misses the mark sometimes,” she said, “so it’s not as good as talking to a scientist.”

For some assignments, like primary literature readings, she has a firm no-AI policy, noting that comprehending primary literature is “a major pedagogical tool in upper-level biology courses.”

“There’s just some things that you have to do yourself,” Dugan said. “It (would be) like trying to learn how to ride a bike from AI.”

Assistant Professor of the Practice of Computer Science Eric Ewing PhD’24 is also trying to strike a balance between how AI can support and inhibit student learning.

This semester, his courses, CSCI 0410: “Foundations of AI and Machine Learning” and CSCI 1470: “Deep Learning,” heavily focus on artificial intelligence. He said assignments are no longer “measuring the same things,” since “we know students are using AI.”

While he does not allow students to use AI on homework, his classes offer projects that allow them “full rein” use of AI. This way, he said, “students are hopefully still getting exposure to these tools, but also meeting our learning objectives.”

Ewing also added that the skills required of graduated students are shifting — the growing presence of AI in the professional world requires a different toolkit.

He believes students in upper level computer science classes should be allowed to use AI in their coding assignments. “If you don’t use AI at the moment, you’re behind everybody else who’s using it,” he said.

Ewing says that he identifies AI policy violations through code similarity — last semester, he found that 25 students had similarly structured code. Ultimately, 22 of those 25 admitted to AI usage.

Littman also provided guidance to professors on how to identify the dishonest use of AI, noting various detection tools.

“I personally don’t trust any of these tools,” Littman said. In his introductory letter, he also advised faculty not to be “overly reliant on automated detection tools.”

Although she does not use detection tools, Schapira provides specific reasons in her syllabi to not use AI in order to convince students to comply with her policy.

“If you’re in this class because you want to get better at writing — whatever “better” means to you — those tools won’t help you learn that,” her syllabus reads. “It wastes water and energy, pollutes heavily, is vulnerable to inaccuracies and amplifies bias.”

In addition to these environmental concerns, Dugan was also concerned about the ethical implications of AI technology.

Khalek also expressed her concerns “about the increasingly documented mental health effects of tools like ChatGPT and other LLM-based apps.” In her course, she discussed with students how engaging with AI can “resonate emotionally and linguistically, and thus impact our sense of self in a profound way.”

Students in Schapira’s class can also present “collective demands” if they find the structure of her course overwhelming. “The solution to the problem of too much to do is not to use an AI tool. That means you’re doing nothing. It’s to change your conditions and situations with the people around you,” she said.

“There are ways to not need (AI),” Schapira continued. “Because of the flaws that (it has) and because of the damage (it) can do, I think finding those ways is worth it.”

This Artificial Intelligence (AI) Stock Could Outperform Nvidia by 2030

When investors think about artificial intelligence (AI) and the chips powering this technology, one company tends to dominate the conversation: Nvidia (NASDAQ: NVDA). It has become an undisputed barometer for AI adoption, riding the wave with its industry-leading GPUs and the sticky ecosystem of its CUDA software that keep developers in its orbit. Since the launch of ChatGPT about three years ago, Nvidia stock has surged nearly tenfold.

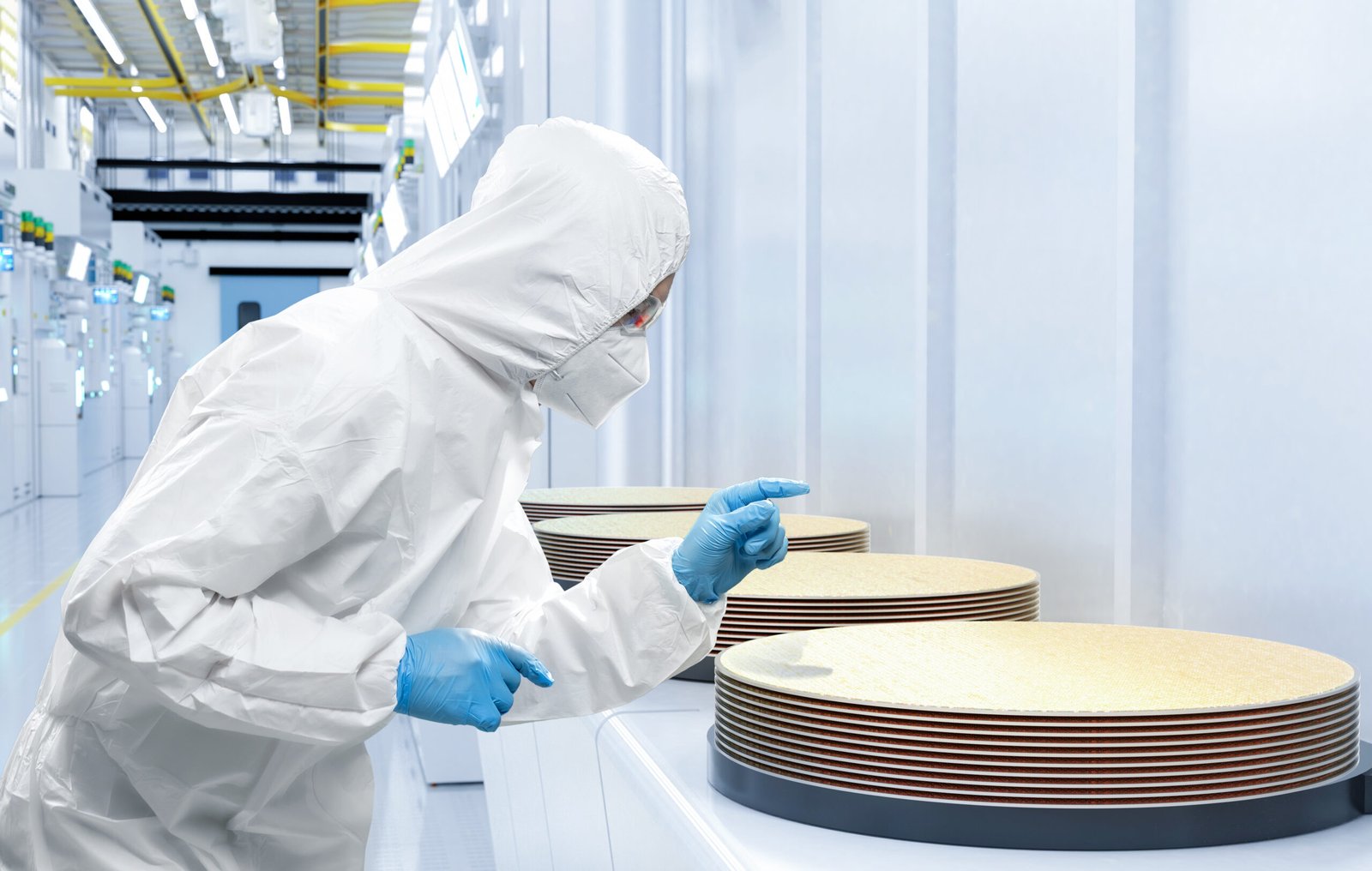

Here’s the twist: While Nvidia commands the spotlight today, it may be Taiwan Semiconductor Manufacturing (NYSE: TSM) that holds the real keys to growth as we look toward the next decade. Below, I’ll unpack why Taiwan Semi — or TSMC, as it’s often called — isn’t just riding the AI wave, but rather is building the foundation that brings the industry to life.

What makes Taiwan Semi so critical is its role as the backbone of the semiconductor ecosystem. Its foundry operations serve as the lifeblood of the industry, transforming complex chip designs into the physical processors that power myriad generative AI applications.

Source: Fool.com

Albania puts AI-created ‘minister’ in charge of public procurement

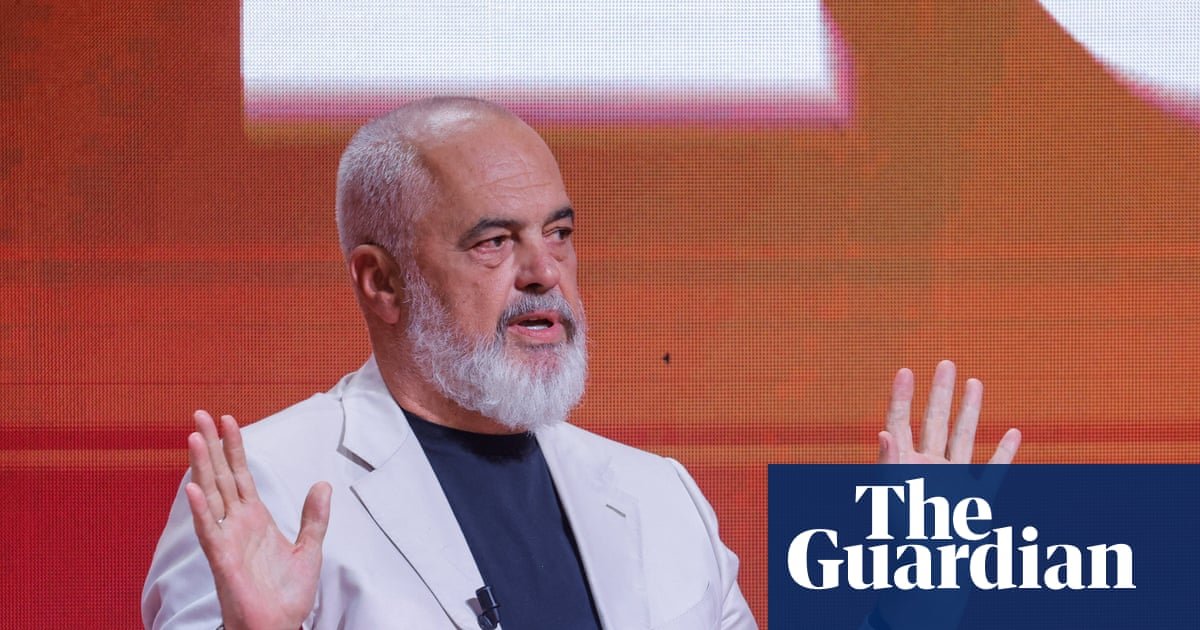

A digital assistant that helps people navigate government services online has become the first “virtually created” AI cabinet minister and been put in charge of public procurement in an attempt to cut down on corruption, the Albanian prime minister has said.

Diella, which means Sun in Albanian, has been advising users on the state’s e-Albania portal since January, helping them through voice commands with the full range of bureaucratic tasks they need to perform in order to access about 95% of citizen services digitally.

“Diella, the first cabinet member who is not physically present, but has been virtually created by AI”, would help make Albania “a country where public tenders are 100% free of corruption”, Edi Rama said on Thursday.

Announcing the makeup of his fourth consecutive government at the ruling Socialist party conference in Tirana, Rama said Diella, who on the e-Albania portal is dressed in traditional Albanian costume, would become “the servant of public procurement”.

Responsibility for deciding the winners of public tenders would be removed from government ministries in a “step-by-step” process and handled by artificial intelligence to ensure “all public spending in the tender process is 100% clear”, he said.

Diella would examine every tender in which the government contracts private companies and objectively assess the merits of each, said Rama, who was re-elected in May and has previously said he sees AI as a potentially effective anti-corruption tool that would eliminate bribes, threats and conflicts of interest.

Public tenders have long been a source of corruption scandals in Albania, which experts say is a hub for international gangs seeking to launder money from trafficking drugs and weapons and where graft has extended into the upper reaches of government.

Albanian media praised the move as “a major transformation in the way the Albanian government conceives and exercises administrative power, introducing technology not only as a tool, but also as an active participant in governance”.

Not everyone was convinced, however. “In Albania, even Diella will be corrupted,” commented one Facebook user.
