Around the world, health care workers are in short supply, with a shortage of 4.5 million nurses expected by 2030, according to the World Health Organization (WHO).

Nurses are already feeling the pressure: around one-third of nurses globally are experiencing burnout symptoms, like emotional exhaustion, and the profession has a high turnover rate.

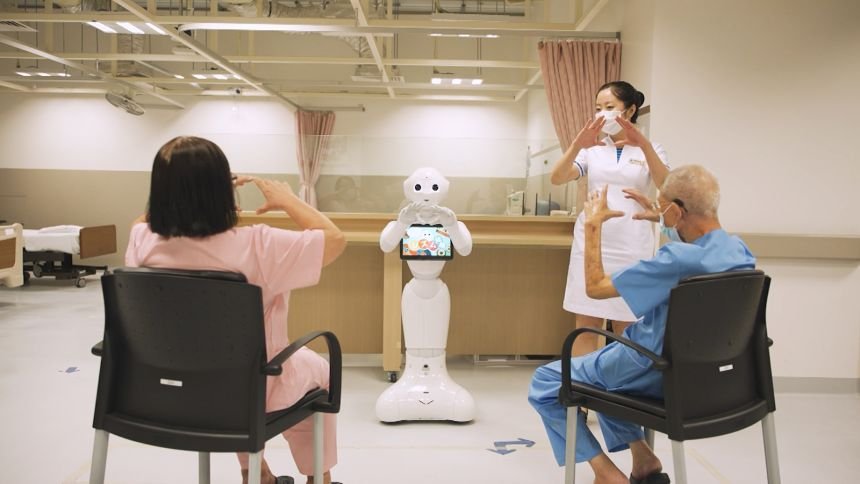

That’s where Nurabot comes in. The autonomous, AI-powered nursing robot is designed to help nurses with repetitive or physically demanding tasks, such as delivering medication or guiding patients around the ward.

According to Foxconn, the Taiwanese multinational behind Nurabot, the humanoid can reduce nurses’ workload by up to 30%.

“This is not a replacement of nurses, but more like accomplishing a mission together,” says Alice Lin, director of user design at Foxconn, also known as Hon Hai Technology Group in Taiwan.

By taking on repetitive tasks, Nurabot frees up nurses for “tasks that really need them, such as taking care of the patients and making judgment calls on the patient’s conditions, based on their professional experience,” Lin told CNN in a video call.

Nurabot, which took just 10 months to develop, has been undergoing testing at a hospital in Taiwan since April 2025 — and now, the company is readying the robot for commercial launch early next year. Foxconn does not currently have an estimate for its retail price.

Foxconn partnered with Japanese robotics company Kawasaki Heavy Industries to build Nurabot’s hardware.

The firm adapted Kawasaki’s “Nyokkey” service robot model, which moves around autonomously on wheels, uses its two robotic arms to lift and hold items, and has multiple cameras and sensors to help it recognize its surroundings.

Based on its initial research on nurses’ daily routines and pain points — such as walking long distances across the ward to deliver samples — Foxconn added features, like a space to safely store bottles and vials.

The robot uses Foxconn’s Chinese large language model for its communication, while US tech giant NVIDIA provided Nurabot’s core AI and robotics infrastructure. NVIDIA says it combined multiple proprietary AI platforms to create Nurabot’s programming, which enables the bot to navigate the hospital independently, schedule tasks, and react to verbal and physical cues.

AI was also used to train and test the robot in a virtual version of the hospital, which Foxconn says helped its speedy development.

AI allows Nurabot to “perceive, reason, and act in a more human-like way” and adapt its behavior “based on the specific patient, context, and situation,” David Niewolny, director of business development for health care and medical at NVIDIA, told CNN in an email.

Staffing shortages aren’t the only issue facing the health care sector.

The world’s elderly population is growing rapidly: the number of people aged 60 and over is expected to increase by 40% by 2030, compared to 2019, according to the WHO. By the mid-2030s, the UN predicts that the number of individuals aged 80 and older will outnumber infants.

Over the past decade, the number of health care workers has steadily increased, but not fast enough to beat population growth and aging. Southeast Asia is expected to be one of the worst-impacted regions for health care workforce shortages.

With these impending stressors on the health care system, AI-enhanced systems can provide huge time and cost savings, says nursing and public health professor Rick Kwan, associate dean at Tung Wah College in Hong Kong.

“AI-assisted robots can really replace some repetitive work, and save lots of manpower,” says Kwan.

There will be challenges, though: Kwan highlights patient preference for human interaction and the need for infrastructure changes in hospitals.

“You can look at the hospitals in Hong Kong: very crowded and everywhere is very narrow, so it doesn’t really allow robots to travel around,” says Kwan. Hospitals are designed around human needs and systems, and if robots are to become central to the workflow, this will need to be reimagined in hospital design going forward, he adds.

Safety is also paramount, says Kwan, not just in mitigating physical risks but also in developing ethical and data protection protocols. He encourages a slow and cautious approach that allows for rigorous testing and assessment.

Robots are not entirely new to health care: surgical robots, like da Vinci, have been around for decades and help improve accuracy during operations.

But increasingly, free-moving humanoids are assisting hospital staff and patients.

In Singapore, Changi General Hospital currently has more than 80 robots helping doctors and nurses with everything from administrative work to medicine delivery.

And in the US, nearly 100 “Moxi” autonomous health care bots, built by Texas-based Diligent Robots with NVIDIA’s AI platforms, carry medications, samples, and supplies across hospital wards, according to NVIDIA.

But the jury is still out on how helpful nursing robots are to staff. A recent review of robots in nursing found that, while nurses perceived increased efficiency and reduced workload, there is a lack of experimental evidence to confirm this. Technical malfunctions, communication difficulties and the need for ongoing training all presented challenges.

Tech companies are investing heavily in health care: in addition to NVIDIA, the likes of Amazon and Google are exploring new opportunities in the $9.8 trillion health care market.

The smart hospital sector is a small, but rapidly expanding, component of this. It was estimated at $72.24 billion in 2025, according to market research company Mordor Intelligence, with the Asia Pacific region the fastest-growing market.

Nurabot is currently being piloted in Taichung Veterans General Hospital in Taiwan, on a ward that treats diseases associated with the lungs, face and neck, including lung cancer and asthma.

During this experimental phase, the robot has limited access to the hospital’s data system, and Foxconn is “stress testing” its functionality on the ward. This includes tracking metrics like the reduction in walking distance for nurses and the delivery accuracy, as well as qualitative feedback from patients and nurses. Early results indicate that Nurabot is reducing the daily nursing workload by around 20–30%, according to Foxconn.

Taichung Veterans General Hospital declined to comment on Nurabot for this story.

According to Lin, Nurabot will be formally integrated into daily nursing operations later this year, including connecting to the hospital information system and running tasks autonomously, ahead of its commercial debut in early 2026.

While Nurabot won’t solve the lack of nurses, Lin says it can help “alleviate the problems caused by an aging society, and hospitals losing talent.”