AI Research

Researchers using AI for weather forecasting

Weather forecasting is not easy. The truth is that predicting future weather conditions over broad, or even narrow, swaths of Earth’s surface comes down to complex microphysical processes, and as College of Engineering Associate Professor and UConn Atmospheric and Air Quality Modeling Group Leader Marina Astitha puts it, nature is chaotic.

Astitha and her research group are at the forefront of exploring ways to improve weather prediction using AI and machine learning to enhance existing physics-based models. They developed new methods for predicting snowfall accumulation and wind gusts associated with extreme weather events in three recent papers: two published in the Journal of Hydrology and one in Artificial Intelligence for the Earth Systems.

Postdoctoral researcher Ummul Khaira Ph.D. ’24 led the snowfall prediction work during her time as a Ph.D. student. Ph.D. candidate Israt Jahan is passionate about building models that improve predictions of damaging wind gusts from storms.

The researchers met with UConn Today to discuss the importance and everyday applications of enhanced forecasting capabilities using these new technologies.

Are there forecasting challenges that are unique to the Northeast?

Astitha: There are characteristics about the Northeast that make it particularly difficult to make weather predictions for. This is especially true for winter weather because we have Nor’easters that can come from either the center of the country or from the Gulf. Some move slowly, and they are highly predictable. Some can be what we call a bomb cyclone, where they rush up here and dump a lot of snow in a small amount of time.

For weather forecasting, we traditionally use numerical weather prediction models that are based on physics principles and have seen large improvements over the last 20–30 years. We have been running our own weather forecasting system at UConn since 2014, based on physical models. However, numerical weather prediction comes with its own challenges due to uncertainty in parameterizations that are necessary when no physical laws are known for a specific process.

For windstorms, wind gusts specifically are a complicated variable. It’s wind, but the way we observe it and the way we model it is different.

Can you explain more about the physics used in numerical weather prediction models?

Astitha: Precipitation is a microscale process. As air rises and cools, clouds form, and within those clouds, tiny cloud droplets develop through complex microphysical interactions. Over time, some of these droplets grow large enough to become raindrops or snowflakes. Once they reach a critical size, gravity causes them to fall to the ground as precipitation. This entire process is governed by microphysical processes.

We try to predict such microphysical processes embedded in numerical weather models by solving many equations and parameterizations. These models describe our atmosphere as a 3D grid, dividing it into discrete boxes where we solve equations based on first principles (motion, thermodynamics, and more). This approach poses a major challenge: even with increased resolution, each grid cell often represents a large volume of air, typically one to four square kilometers. Despite efforts to refine the grid, these cells still encompass vast areas, limiting the model’s ability to resolve smaller-scale processes.

Numerical prediction is what got me here. Twenty years ago, I could run a code to numerically solve physics equations of the atmosphere, and then I could tell approximately what the weather would be like the next day. That, to me, was mind-blowing!

Once you run one deterministic model, you get one answer that the temperature is going to be, say, 75 degrees tomorrow in Storrs. That’s one potential realization of the future. Models like that are not capable of giving us an exact answer, because nature is chaotic. I’ve always had the mindset of looking at multiple models to have an idea of that uncertainty and variability, and if 10 different realizations give you 74, 75, or 76 degrees, you know you’re close.
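To make the idea of comparing multiple realizations concrete, here is a minimal sketch of how an ensemble might be summarized. The ten temperature values are invented for illustration, echoing the 74 to 76 degree example above, and the choice of mean, standard deviation, and range as summary statistics is an assumption, not a description of the UConn system.

```python
from statistics import mean, stdev

# Hypothetical forecasts (degrees F) for tomorrow's temperature in Storrs,
# one value per model realization. The numbers are illustrative only.
ensemble = [74, 75, 76, 75, 74, 76, 75, 75, 74, 76]

ensemble_mean = mean(ensemble)                  # central estimate
ensemble_spread = stdev(ensemble)               # sample standard deviation
ensemble_range = max(ensemble) - min(ensemble)  # widest disagreement

print(f"mean={ensemble_mean:.1f} F, "
      f"spread={ensemble_spread:.2f} F, range={ensemble_range} F")
```

A small spread relative to the quantity being forecast suggests the realizations agree; a large spread signals that any single deterministic answer deserves less trust.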

Khaira: Few things are more humbling than a snowfall that defies prediction. My work lies in embracing that uncertainty in the chaos and building models not to promise perfection, but to offer communities and decision-makers a clearer window into what might lie ahead.

How is your recent research helping with the challenges of numerical weather prediction?

Astitha: Imagine a Nor’easter coming our way during wintertime; they come with a lot of snow and wind. We work with the Eversource Energy Center and we’re interested not only in the scientific advancement, but also the impact and accuracy in predicting when and where that storm is going to happen in Connecticut. Weather prediction accuracy influences the estimation of impacts; for example, power outages. We might underestimate or overestimate the impact by a lot. That makes winter storms of particular interest because of the impact they have on our society, our transportation networks, and electrical power distribution networks.

Five years ago, we decided to test whether a machine learning framework could help with wind gust and snowfall prediction. It comes with its own challenges and uncertainties, but we quickly saw that there is a lot of promise for these tools to correct errors and do better than what numerical weather prediction can do and at a fraction of the time. Machine learning and AI can help improve the analysis of wind gusts and snowfall, but these systems are not perfect either.
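As a rough illustration of what "correcting errors" in a physics-based forecast can mean, the sketch below fits a simple statistical correction that maps raw model gust forecasts to observed gusts. It deliberately swaps in ordinary least squares for the machine learning models used in the papers (one paper title below mentions WRF/XGB), and the forecast and observation values are made up.

```python
# Synthetic (made-up) pairs: raw model gust forecast vs. observed gust, in m/s.
forecast = [10.0, 14.0, 18.0, 22.0, 26.0]
observed = [12.5, 16.0, 20.5, 24.0, 28.5]

def fit_linear(x, y):
    """Ordinary least squares fit for y ~ a * x + b."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx

def mae(pred, truth):
    """Mean absolute error between predictions and observations."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(truth)

# Learn the correction, then apply it to the raw forecasts.
a, b = fit_linear(forecast, observed)
corrected = [a * f + b for f in forecast]

# Note: both errors are computed on the same data used for fitting;
# a real evaluation would use storms held out from training.
raw_error = mae(forecast, observed)
corrected_error = mae(corrected, observed)
print(f"raw MAE={raw_error:.2f} m/s, corrected MAE={corrected_error:.2f} m/s")
```

In this toy data the raw forecasts run consistently low, so even a linear correction removes most of the error. Real forecast errors depend on many atmospheric variables at once, which is where more flexible learners come in.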

We want to be able to better predict storms over Connecticut and the Northeast U.S., which is why we started this exploration with ML/AI, even though most of the research out there about how to implement AI in weather prediction is either at the global scale or much coarser resolution, but we’re getting there.

Can you talk about the everyday impact of the research?

Astitha: An example is when the trees are full of leaves like they are in late spring and summer, and a storm comes in with a lot of rain and intense wind. Whole trees can come down and topple the power lines, which causes many disruptions around the state.

Our close collaboration with the Eversource Energy Center involves our immediate collaborators taking this weather prediction information and operationally predicting power outages for Connecticut and other service territories. That information can go to the utility managers, so they can prepare two to three days in advance, indicating a direct link from science and engineering to the application and to the manager.

I understand people’s frustrations and the need for answers about weather forecasts and impacts of storms. You want to know if your family is going to be safe and if you should or should not be out during particular times of the day. We’re doing this research to improve the reliability and accuracy of weather forecasting, so communities and stakeholders are aware of what’s happening when the storm hits their area and can take appropriate actions.

Jahan: It’s incredibly rewarding to know that my work has the potential to improve early warnings and give communities more time to prepare. By combining AI and uncertainty analysis, we’re not just making gust predictions more accurate—we are helping decision-makers plan with greater confidence.

More information:

Ummul Khaira et al, Investigating the role of temporal resolution and multi-model ensemble data on WRF/XGB integrated snowfall prediction for the Northeast United States, Journal of Hydrology (2025). DOI: 10.1016/j.jhydrol.2025.133313

Israt Jahan et al, Storm Gust Prediction with the Integration of Machine Learning Algorithms and WRF Model Variables for the Northeast United States, Artificial Intelligence for the Earth Systems (2024). DOI: 10.1175/AIES-D-23-0047.1

Ummul Khaira et al, Integrating physics-based WRF atmospheric variables and machine learning algorithms to predict snowfall accumulation in Northeast United States, Journal of Hydrology (2024). DOI: 10.1016/j.jhydrol.2024.132113

Provided by University of Connecticut

Citation: Researchers using AI for weather forecasting (2025, July 8), retrieved 8 July 2025 from https://phys.org/news/2025-07-ai-weather.html

UCR Researchers Bolster AI Against Rogue Rewiring

As generative AI models move from massive cloud servers to phones and cars, they’re stripped down to save power. But what gets trimmed can include the technology that stops them from spewing hate speech or offering roadmaps for criminal activity.

To counter this threat, researchers at the University of California, Riverside, have developed a method to preserve AI safeguards even when open-source AI models are stripped down to run on lower-power devices.

Unlike proprietary AI systems, open‑source models can be downloaded, modified, and run offline by anyone. Their accessibility promotes innovation and transparency but also creates challenges when it comes to oversight. Without the cloud infrastructure and constant monitoring available to closed systems, these models are vulnerable to misuse.

The UCR researchers focused on a key issue: carefully designed safety features erode when open-source AI models are reduced in size. This happens because lower‑power deployments often skip internal processing layers to conserve memory and computational power. Dropping layers improves the models’ speed and efficiency, but could also result in answers containing pornography or detailed instructions for making weapons.

“Some of the skipped layers turn out to be essential for preventing unsafe outputs,” said Amit Roy-Chowdhury, professor of electrical and computer engineering and senior author of the study. “If you leave them out, the model may start answering questions it shouldn’t.”
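A toy analogy may help show why skipping a layer can change behavior and not just speed. The sketch below is not a neural network and not the UCR method: the four "layers" are hypothetical arithmetic steps, one of which clamps the value and stands in for behavior that only a single layer provides.

```python
from typing import Callable, List

Layer = Callable[[float], float]

# Hypothetical four-"layer" stack. Index 2 clamps the value and plays the
# role of a guard that no other layer reproduces in this toy.
layers: List[Layer] = [
    lambda x: x * 2.0,
    lambda x: x + 1.0,
    lambda x: min(x, 5.0),  # the "guard" layer
    lambda x: x * 1.5,
]

def run(stack: List[Layer], x: float, skip: frozenset = frozenset()) -> float:
    """Apply layers in order, skipping any whose index is in `skip`."""
    for i, layer in enumerate(stack):
        if i not in skip:
            x = layer(x)
    return x

full = run(layers, 4.0)                          # every layer applied
pruned = run(layers, 4.0, skip=frozenset({2}))   # guard layer skipped

print(full, pruned)
```

The pruned run does less work but produces a value the full stack would never have allowed. That is the kind of gap the researchers describe when safety-relevant layers are dropped.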

The team’s solution was to retrain the model’s internal structure so that its ability to detect and block dangerous prompts is preserved, even when key layers are removed. Their approach avoids external filters or software patches. Instead, it changes how the model understands risky content at a fundamental level.

“Our goal was to make sure the model doesn’t forget how to behave safely when it’s been slimmed down,” said Saketh Bachu, UCR graduate student and co-lead author of the study.

To test their method, the researchers used LLaVA 1.5, a vision‑language model capable of processing both text and images. They found that certain combinations, such as pairing a harmless image with a malicious question, could bypass the model’s safety filters. In one instance, the altered model responded with detailed instructions for building a bomb.

After retraining, however, the model reliably refused to answer dangerous queries, even when deployed with only a fraction of its original architecture.

“This isn’t about adding filters or external guardrails,” Bachu said. “We’re changing the model’s internal understanding, so it’s on good behavior by default, even when it’s been modified.”

Bachu and co-lead author Erfan Shayegani, also a graduate student, describe the work as “benevolent hacking,” a way of fortifying models before vulnerabilities can be exploited. Their ultimate goal is to develop techniques that ensure safety across every internal layer, making AI more robust in real‑world conditions.

In addition to Roy-Chowdhury, Bachu, and Shayegani, the research team included doctoral students Arindam Dutta, Rohit Lal, and Trishna Chakraborty, and UCR faculty members Chengyu Song, Yue Dong, and Nael Abu-Ghazaleh. Their work is detailed in a paper presented this year at the International Conference on Machine Learning in Vancouver, Canada.

“There’s still more work to do,” Roy-Chowdhury said. “But this is a concrete step toward developing AI in a way that’s both open and responsible.”

Should AI Get Legal Rights?

In one paper Eleos AI published, the nonprofit argues for evaluating AI consciousness using a “computational functionalism” approach. A similar idea was once championed by none other than Putnam, though he criticized it later in his career. The theory suggests that human minds can be thought of as specific kinds of computational systems. From there, you can then figure out if other computational systems, such as a chatbot, have indicators of sentience similar to those of a human.

Eleos AI said in the paper that “a major challenge in applying” this approach “is that it involves significant judgment calls, both in formulating the indicators and in evaluating their presence or absence in AI systems.”

Model welfare is, of course, a nascent and still evolving field. It’s got plenty of critics, including Mustafa Suleyman, the CEO of Microsoft AI, who recently published a blog about “seemingly conscious AI.”

“This is both premature, and frankly dangerous,” Suleyman wrote, referring generally to the field of model welfare research. “All of this will exacerbate delusions, create yet more dependence-related problems, prey on our psychological vulnerabilities, introduce new dimensions of polarization, complicate existing struggles for rights, and create a huge new category error for society.”

Suleyman wrote that “there is zero evidence” today that conscious AI exists. He included a link to a paper that Long coauthored in 2023 that proposed a new framework for evaluating whether an AI system has “indicator properties” of consciousness. (Suleyman did not respond to a request for comment from WIRED.)

I chatted with Long and Campbell shortly after Suleyman published his blog. They told me that, while they agreed with much of what he said, they don’t believe model welfare research should cease to exist. Rather, they argue that the harms Suleyman referenced are the exact reasons why they want to study the topic in the first place.

“When you have a big, confusing problem or question, the one way to guarantee you’re not going to solve it is to throw your hands up and be like ‘Oh wow, this is too complicated,’” Campbell says. “I think we should at least try.”

Testing Consciousness

Model welfare researchers primarily concern themselves with questions of consciousness. If we can prove that you and I are conscious, they argue, then the same logic could be applied to large language models. To be clear, neither Long nor Campbell thinks that AI is conscious today, and they also aren’t sure it ever will be. But they want to develop tests that would allow us to prove it.

“The delusions are from people who are concerned with the actual question, ‘Is this AI conscious?’ and having a scientific framework for thinking about that, I think, is just robustly good,” Long says.

But in a world where AI research can be packaged into sensational headlines and social media videos, heady philosophical questions and mind-bending experiments can easily be misconstrued. Take what happened when Anthropic published a safety report that showed Claude Opus 4 may take “harmful actions” in extreme circumstances, like blackmailing a fictional engineer to prevent it from being shut off.

Trends in patent filing for artificial intelligence-assisted medical technologies | Smart & Biggar

[co-authors: Jessica Lee, Noam Amitay and Sarah McLaughlin]

Medical technologies incorporating artificial intelligence (AI) are an emerging area of innovation with the potential to transform healthcare. Employing techniques such as machine learning, deep learning and natural language processing,[1] AI enables machine-based systems that can make predictions, recommendations or decisions that influence real or virtual environments based on a given set of objectives.[2] For example, AI-based medical systems can collect medical data, analyze medical data and assist in medical treatment, or provide informed recommendations or decisions.[3] According to the U.S. Food and Drug Administration (FDA), some key areas in which AI is applied in medical devices include:[4]

- Image acquisition and processing

- Diagnosis, prognosis, and risk assessment

- Early disease detection

- Identification of new patterns in human physiology and disease progression

- Development of personalized diagnostics

- Therapeutic treatment response monitoring

Patent filing data related to these application areas can help us see emerging trends.

Analysis strategy

We identified nine subcategories of interest:

- Image acquisition and processing

  - Medical image acquisition

  - Pre-processing of medical imaging

  - Pattern recognition and classification for image-based diagnosis

- Diagnosis, prognosis and risk management

  - Early disease detection

  - Identification of new patterns in physiology and disease

  - Development of personalized diagnostics and medicine

- Therapeutic treatment response monitoring

- Clinical workflow management

- Surgical planning/implants

We searched patent filings in each subcategory from 2001 to 2023. In the results below, the numbers of patent filings are based on patent families. Each patent family is a collection of patent documents covering the same technology that have at least one priority document in common.[5]
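To illustrate how counting by patent family differs from counting individual documents, the following sketch groups filings that share a priority document. The document and priority identifiers are hypothetical, and treating the links transitively (a document joins a family through any chain of shared priorities) is an assumption made for this example, not a statement of the authors' search method.

```python
from collections import defaultdict

# Hypothetical filings: document ID -> set of priority document IDs it claims.
filings = {
    "US-A": {"P1"},
    "EP-B": {"P1", "P2"},
    "CN-C": {"P2"},
    "CA-D": {"P3"},
}

def group_families(docs):
    """Group documents linked, directly or via other documents, by a shared priority."""
    parent = {d: d for d in docs}

    def find(d):
        # Union-find lookup with path halving.
        while parent[d] != d:
            parent[d] = parent[parent[d]]
            d = parent[d]
        return d

    first_seen = {}  # priority ID -> first document that claimed it
    for doc, priorities in docs.items():
        for p in priorities:
            if p in first_seen:
                parent[find(doc)] = find(first_seen[p])
            else:
                first_seen[p] = doc

    families = defaultdict(set)
    for d in docs:
        families[find(d)].add(d)
    return sorted(sorted(f) for f in families.values())

families = group_families(filings)
print(len(families), families)
```

Here four documents collapse into two families, so a count by family reports two filings where a count by document would report four.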

What has been filed over the years?

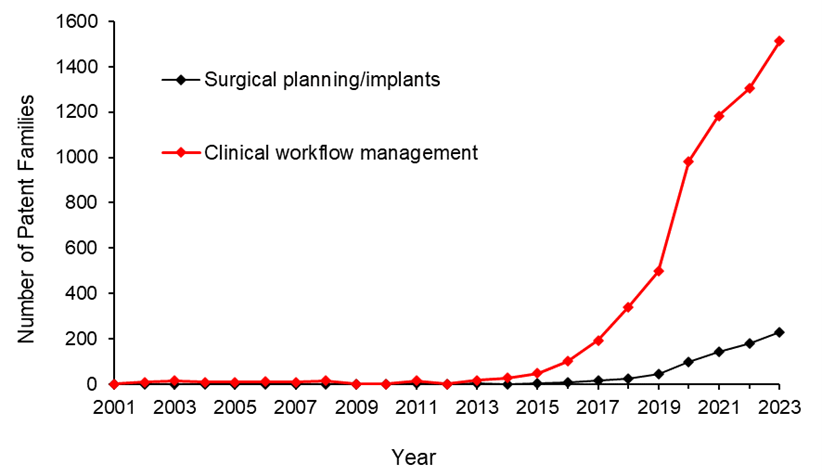

The number of patents filed in each subcategory of AI-assisted applications for medical technologies from 2001 to 2023 is shown below.

We see that patenting activities are concentrating in the areas of treatment response monitoring, identification of new patterns in physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis, and development of personalized diagnostics and medicine. This suggests that research and development efforts are focused on these areas.

What do the annual numbers tell us?

Let’s look at the annual number of patent filings for the categories and subcategories listed above. The following four graphs show the global patent filing trends over time for the categories of AI-assisted medical technologies related to: image acquisition and processing; diagnosis, prognosis and risk management; treatment response monitoring; and workflow management.

When looking at the patent filings on an annual basis, the numbers confirm the expected significant uptick in patenting activities in recent years for all categories searched. They also show that, within the four categories, the subcategories showing the fastest rate of growth were: pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to image acquisition and processing.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to more accurate diagnosis, prognosis and risk management.

Above: Global patent filing trends over time for AI-assisted medical technologies related to treatment response monitoring.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to workflow management.

Where is R&D happening?

By looking at where the inventors are located, we can see where R&D activities are occurring. We found that the two most frequent inventor locations are the United States (50.3%) and China (26.2%). Both Australia and Canada are amongst the ten most frequent inventor locations, with Canada ranking seventh and Australia ranking ninth in the five subcategories that have the highest patenting activities from 2001-2023.

Where are the destination markets?

The filing destinations provide a clue as to the intended markets or locations of commercial partnerships. The United States (30.6%) and China (29.4%) again are the pace leaders. Canada is the seventh most frequent destination jurisdiction with 3.2% of patent filings. Australia is the eighth most frequent destination jurisdiction with 3.1% of patent filings.

Takeaways

Our analysis found that the leading subcategories of AI-assisted medical technology patent applications from 2001 to 2023 include treatment response monitoring, identification of new patterns in human physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis as well as development of personalized diagnostics and medicine.

In more recent years, we found the fastest growth in the areas of pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management, suggesting that R&D efforts are being concentrated in these areas.

We saw that patent filings in the areas of early disease detection and surgical planning/implants increased later than the other categories, suggesting these may be emerging areas of growth.

Although, as expected, the United States and China are consistently the leading jurisdictions in both inventor location and destination patent offices, Canada and Australia are frequently in the top ten.

Patent intelligence provides powerful tools for decision makers in looking at what might be shaping our future. With recent geopolitical changes and policy updates in key primary markets, as well as shifts in trade relationships, patent filings give us insight into how these aspects impact innovation. For everyone, it provides exciting clues as to what emerging technologies may shape our lives.

References

1. Alowais et al., Revolutionizing healthcare: the role of artificial intelligence in clinical practice (2023), BMC Medical Education, 23:689.

2. U.S. Food and Drug Administration (FDA), Artificial Intelligence and Machine Learning in Software as a Medical Device.

3. Bitkina et al., Application of artificial intelligence in medical technologies: a systematic review of main trends (2023), Digital Health, 9:1-15.

4. Artificial Intelligence Program: Research on AI/ML-Based Medical Devices | FDA.

5. INPADOC extended patent family.
