It’s been nearly three years since ChatGPT was first released to the world. Since then, artificial intelligence has rapidly become a regular part of many people’s daily lives and has continued to improve at breakneck speed.

Guelph-based ethicist Christopher DiCarlo is worried that artificial intelligence may soon surpass human intelligence.

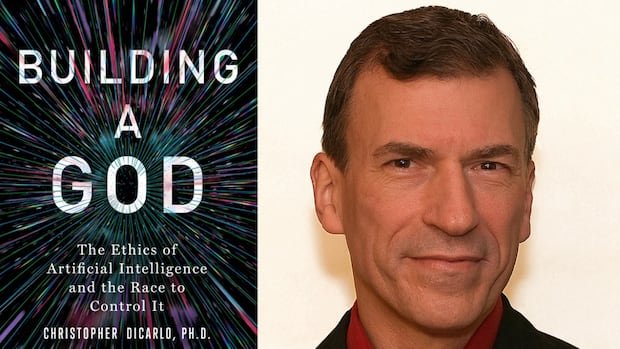

DiCarlo wrote about his concerns for an AI future in his new book, Building a God: The Ethics of Artificial Intelligence and the Race to Control It.

DiCarlo joined Craig Norris, host of CBC K-W’s The Morning Edition, to talk about the promises, perils and ethical dilemmas of AI.

Audio of this interview can be found at the bottom of this story. This interview has been edited for length and clarity.

Craig Norris: In the book you talk about artificial general intelligence and artificial narrow intelligence. What’s the difference?

Christopher DiCarlo: Right now we have ANI, or artificial narrow intelligence. That’s Siri, Alexa, autonomous vehicles, even your Roomba. These are narrow in the sense that they really can’t do anything outside of what their very limited algorithms tell them to do. But AGI, or artificial general intelligence, well, that’s the Holy Grail of all of business right now in the world.

That’s what about five billionaire tech bros are racing to try to achieve. That’s the level at which AI becomes very human-like. It’s more generalized. It doesn’t just do one thing, it can do many things at a time, and it can do it 1,000 times better than any human.

Craig Norris: How could a government control a computer that is smarter than humans?

Christopher DiCarlo: Nobody knows right now, and that’s the problem. These tech bros are racing ahead and the guardrails aren’t in place. You have a gentleman like Mr. [Donald] Trump who ripped up the Harris-Biden executive order.

He’s opened up the floodgates to drill, baby, drill with AI. But the fact of the matter is we don’t have the guardrails in place and we very much need to have them in place before we get to the point at which AGI becomes real.

Craig Norris: Like any technological advancement, obviously there’s good and bad. What good can come out of advancements in AI?

Christopher DiCarlo: There’s a lot of good. The great thing about AI is we’re living in this unique time period where we’re right in between how things used to be done and how they’re about to be done. And AI is going to bring a lot of great things to this world, especially in medicine.

We’re already seeing fast improvements in diagnostic abilities, medicine development, treatment for the elderly, reconstructing tissues, growing organs, fixing the climate problem, working on world hunger, and coming up with new energy sources. AI has an awful lot to offer, and it will in many ways make the world a far better place.

Craig Norris: Was there a moment where you went, ‘Oh man, I’ve got to write about this’? What prompted this for you?

Christopher DiCarlo: So back in the ’90s, I tried to build this machine, this big brain called the OSTOK Project, the Onion Skin Theory of Knowledge. It was basically a model and information theory. I approached a number of politicians and university presidents about why not put Canada on the map?

We have telescopes to see farther into the universe and microscopes to see down to the atom. Why aren’t we building a big brain to help us think through difficult issues? I got nothing but positive feedback from everybody, but I couldn’t get a single dime from any of them to fund this project.

At that point, I realized it was going to be built at some point. So I drafted up a universal global constitution, basically for the world, for when this thing actually does get built. I thought, I’m going to put this aside and I’m going to focus more on critical thinking and try to get that into the high schools. Then November 2022 comes along and Sam Altman releases GPT-3. So the turning point was these large language models using neural nets.

Craig Norris: Advancements in AI have many people worried about the future, their jobs, and where they’re going to fit in. Talk a bit about what you think this will do to a person’s mental health.

Christopher DiCarlo: It’s going to generate anxiety, depression, and psychosis on a number of levels. My colleague Jonathan Haidt at NYU has done extensive studies on how the smartphone has affected our youth, and it has, I mean greatly.

Now, once we get AI, look at the things we’re seeing now with AI psychosis and chatbots. This is where people believe [the chatbots are] actually alive. That’s just one area. My prediction is once the world finds out that these tech bros are racing toward building essentially a superintelligent machine God that we might not be able to control, I believe that’s going to generate a kind of global angst similar to how people reacted when nuclear weaponry was introduced.

LISTEN | The ethics behind AI creation:

The Morning Edition – K-W: The ethics behind AI creation

Guelph-based ethicist Christopher DiCarlo talks about his new book, Building a God: The Ethics of Artificial Intelligence and the Race to Control It, and his concerns that artificial intelligence may soon surpass human intelligence.