AI Insights

Prediction: These 2 Artificial Intelligence Stocks Will Be the World’s Most Valuable Companies in 5 Years

At this point, it looks highly likely that artificial intelligence (AI) will be the most impactful new technology since the internet. While the tech trend will certainly bring some twists and turns for investors, there’s a good chance the AI revolution is still in its early innings.

Companies with heavy exposure to AI have been among the market’s best performers in recent years and have helped push major indexes to new highs, and long-term investors still have opportunities to score wins with top players in the space. With that in mind, read on to see why two Motley Fool contributors think two companies with leading positions in AI will be the world’s most valuable businesses five years from now.


The biggest name in AI today, and probably tomorrow

Jennifer Saibil (Nvidia): Nvidia (NVDA) has rocketed to the point where it is now the world’s most valuable company, passing Microsoft and Apple along the way. There’s good reason to expect it will still be at the top of the podium five years from now.

A big reason for that expectation is that Nvidia is growing much faster than Microsoft, Apple, and the rest of the world’s most valuable companies. Revenue increased 69% year over year in its fiscal 2026 first quarter (ended April 27, 2025), and management is guiding for a 50% increase in the second quarter. Its gross margin is exceptionally high, coming in at 71.3% in the first quarter excluding a one-time charge, and its net profit margin was 52% in the quarter.

Nvidia has a massive opportunity over the next few years as AI becomes more embedded in what people do. It partners with most of the major AI companies, including Amazon (AMZN) and Microsoft, and as they roll out their AI platforms, demand for Nvidia’s products grows even further. Demand for data centers alone, which AI companies use to power generative AI operations, is exploding. Nvidia’s data center revenue set the pace for the company overall, rising 73% year over year in the first quarter.

According to Statista, the AI market is expected to grow at a compound annual growth rate (CAGR) of 26% over the next five years, surpassing $1 trillion by 2031. As the leader in AI chips, with the most powerful products and as much as 95% of the market, Nvidia should be one of the main beneficiaries of that growth.
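For a sense of scale, here is what a 26% CAGR implies arithmetically, under the simplifying assumption that the rate holds constant every year (actual year-to-year growth will vary). With starting market size $V_0$ and annual rate $r$, the size after $n$ years is:

$$
V_n = V_0 (1 + r)^n, \qquad r = 0.26,\ n = 5 \;\Rightarrow\; \frac{V_5}{V_0} = 1.26^5 \approx 3.18
$$

In other words, sustaining that rate for five years would roughly triple the market’s size.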

Nvidia keeps launching new, more powerful chips to handle growing demand and heavier computing workloads. It is still ramping up Blackwell, the technology it launched last year, and it’s seeing huge demand for its products to run the inference stage of generative AI. In the first quarter, management said its graphics processing units (GPUs) were being incorporated into 100 of what it calls AI factories (AI-focused data centers) under development, double the number from a year earlier, and the number of GPUs powering each factory doubled as well. Management expects this part of the business to keep growing rapidly. Nvidia is now launching Blackwell Ultra, a more powerful platform for AI reasoning, which is the next step after inference and requires greater capacity.

CEO Jensen Huang envisions a future not too far off where AI is used in everything we do, and Nvidia is going to play a huge role in that shift.

Amazon has a massive AI-driven opportunity ahead

Keith Noonan (Amazon): As the leading provider of cloud infrastructure services, Amazon stands to be a major beneficiary of the AI revolution. The development, launch, and scaling of AI applications should be a powerful tailwind for the company’s Amazon Web Services (AWS) cloud business, and the Bedrock suite and other generative AI tools should help encourage clients to keep building within its ecosystem.

With AWS by far Amazon’s most profitable segment, AI-related sales there should help drive strong earnings growth over the next five years. Integrating AI into the company’s fast-growing digital advertising business should also improve targeting and demand, creating another positive catalyst for the bottom line. But there’s an even bigger AI-related opportunity on the table, and it could make Amazon the world’s most valuable company within the next half-decade.

Even though AWS generates most of Amazon’s profits, the company’s e-commerce business still accounts for the majority of its revenue. The catch is that e-commerce has historically been a relatively low-margin business. Because Amazon has emphasized expanding its retail sales base, and because the business carries high operating costs, e-commerce accounts for a surprisingly small share of the company’s profits despite its massive scale. That will likely change with time.

With AI and robotics paving the way for warehouse and factory automation and potentially opening the door for a variety of autonomous delivery options, operating expenses for the e-commerce business are poised to fall substantially. There’s admittedly a significant amount of guesswork involved in charting how quickly this transformation will take shape, but it’s a trend that’s worth betting on.

Given the incredible sales base that Amazon has built for its online retail wing, margin improvements look poised to unlock billions of dollars in fresh net income for the business. If AI-driven robotics and automation initiatives start to accelerate substantially for the company over the next five years, the e-commerce side of the business will quickly command a much higher valuation premium. If so, Amazon has a clear path to being one of the world’s most valuable companies.

John Mackey, former CEO of Whole Foods Market, an Amazon subsidiary, is a member of The Motley Fool’s board of directors. Keith Noonan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Amazon, Apple, Microsoft, and Nvidia. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.


General Counsel’s Job Changing as More Companies Adopt AI

The general counsel’s role is evolving to include more conversations about policy and business direction as more companies deploy artificial intelligence, panelists at a University of California, Berkeley conference said Thursday.

“We are not just lawyers anymore. We are driving a lot of the policy conversations, the business conversations, because of the geopolitical issues going on and because of the regulatory, or lack thereof, framework for products and services,” said Lauren Lennon, general counsel at Scale AI, a company that uses data to train AI systems.

Scattered regulation and fraying international alliances are also redefining the general counsel’s job, panelists …


AI a 'Game Changer' for Assistance, Q&As in NJ Classrooms – GovTech


Analysis on expected use of artificial intelligence by businesses in Canada, third quarter of 2025

As artificial intelligence (AI) continues to expand its role in business operations across Canada, questions about its future use and influence have gained attention. What was once considered an emerging technology limited to select sectors is increasingly being integrated into a range of industries. According to data from the Canadian Survey on Business Conditions, in the second quarter of 2025, 12.2% of businesses reported using AI to produce goods or deliver services over the previous 12 months, up from 6.1% in the second quarter of 2024.

This article presents data on how businesses in Canada expect to use AI over the next 12 months to produce goods and deliver services. While the second-quarter surveys of 2024 and 2025 asked about AI use over the previous 12 months, the current release revisits forward-looking questions first introduced in the third quarter of 2024. It explores the types of AI applications businesses intend to adopt, anticipated impacts on employment and operations, and the reasons businesses gave for not planning to use AI.

The Canadian Survey on Business Conditions was conducted from July 2 to August 6, 2025, to collect information on the environment businesses are currently operating in and their expectations moving forward, including expectations for AI use in the year ahead. The proportion of businesses planning to adopt AI over the next 12 months has grown since the third quarter of last year, with 14.5% of businesses now reporting plans to use the technology, up from 10.6% in the third quarter of 2024. This reflects gradually growing interest in AI’s long-term potential to reshape operations.

Most businesses still do not plan to use artificial intelligence in the next 12 months

While growth has been observed, AI adoption among businesses in Canada remains limited. In the third quarter of 2025, 14.5% of businesses reported plans to use AI over the next 12 months, while two-thirds (66.7%) reported no plans and 18.9% were uncertain. These findings are broadly in line with the third quarter of 2024, when 71.8% of businesses reported no plans to implement AI in their operations.

Among businesses not planning to adopt AI over the next 12 months, 78.1% reported that AI was not relevant to the goods or services they currently provide. Other reported reasons included a lack of knowledge about AI capabilities (11.3%), concerns about privacy and security (8.1%), and the view that AI is not yet a mature enough technology (7.6%). These findings are similar to those from the third quarter of 2024, when 74.2% of businesses reported lack of relevance as the main reason, followed by a lack of knowledge on AI capabilities (9.3%).

| Reason for not planning to use AI | Third quarter of 2025 (percent of businesses) | Third quarter of 2024 (percent of businesses) |
|---|---|---|
| Lack of knowledge on the capabilities of AI | 11.3 | 9.3 |
| Concerns about privacy or security | 8.1 | 6.8 |
| AI is not a mature enough technology yet | 7.6 | 8.8 |
| Too expensive | 4.7 | 6.1 |
| Lack of skilled workforce | 4.6 | 2.3 |
| Concerns about bias | 3.2 | 1.7 |
| Lack of required data | 3.0 | 1.6 |
| Laws and regulations prevent or restrict use of AI | 2.2 | 1.4 |
| Previous or current use of AI did not meet expectations | 1.5 | 1.0 |
| Other reason | 1.7 | 3.8 |
| AI is not relevant to the goods produced or services delivered | 78.1 | 74.2 |

Notes: Results are based on the survey in collection from July 2 to August 6, 2025, and from July 2 to August 6, 2024. Respondents were asked about their expectations over the next 12 months, so the 12-month period covered begins anywhere between July 2 and August 6 of the survey year, depending on when the business responded.

Source: Canadian Survey on Business Conditions, third quarter of 2025 (Table 33-10-1046-01) and third quarter of 2024 (Table 33-10-0879-01).

Businesses in information and cultural industries continue to lead in expected artificial intelligence adoption

While 14.5% of all businesses plan to use AI over the next year, businesses in information and cultural industries were the most likely to report such plans (38.6%), followed by those in finance and insurance (31.5%) and in professional, scientific and technical services (26.3%). These findings are similar to results from the third quarter of 2024, when businesses in information and cultural industries (29.7%) were also the most likely to report plans to use AI in the coming year.

These forward-looking expectations by industry align with past reported AI use. In the second quarter of 2025, 35.6% of businesses in information and cultural industries reported having used AI in the previous year, followed by 31.7% in professional, scientific and technical services and 30.6% in finance and insurance. Businesses in these industries also reported the highest usage rates in the second quarter of 2024, at 20.9% for information and cultural industries, 13.7% for professional, scientific and technical services and 10.9% for finance and insurance.

Changes in expected AI use between the third quarter of 2024 and the third quarter of 2025 varied by industry. Businesses in finance and insurance had the largest increase, from 17.9% to 31.5% (13.6 percentage points). The proportion of businesses in health care and social assistance expecting to use AI also grew, from 11.4% to 23.2%. In contrast, manufacturing experienced the largest decline, falling from 13.1% to 7.2% (5.9 percentage points).

| Industry | Third quarter of 2025 (percent of businesses) | Third quarter of 2024 (percent of businesses) |
|---|---|---|
| All businesses | 14.5 | 10.6 |
| Agriculture, forestry, fishing and hunting | 4.9 | 4.8 |
| Mining, quarrying, and oil and gas extraction | 3.4 | 4.6 |
| Construction | 9.2 | 3.2 |
| Manufacturing | 7.2 | 13.1 |
| Wholesale trade | 7.8 | 12.2 |
| Retail trade | 5.7 | 5.1 |
| Transportation and warehousing | 6.6 | 4.2 |
| Information and cultural industries | 38.6 | 29.7 |
| Finance and insurance | 31.5 | 17.9 |
| Real estate and rental and leasing | 24.4 | 13.0 |
| Professional, scientific and technical services | 26.3 | 24.6 |
| Administrative and support, waste management and remediation services | 11.6 | 12.7 |
| Health care and social assistance | 23.2 | 11.4 |
| Arts, entertainment and recreation | 15.2 | 18.5 |
| Accommodation and food services | 14.8 | 6.0 |
| Other services (except public administration) | 8.7 | 6.5 |

Notes: Results are based on the survey in collection from July 2 to August 6, 2025, and from July 2 to August 6, 2024. Respondents were asked about their expectations over the next 12 months, so the 12-month period covered begins anywhere between July 2 and August 6 of the survey year, depending on when the business responded.

Source: Canadian Survey on Business Conditions, third quarter of 2025 (Table 33-10-1045-01) and third quarter of 2024 (Table 33-10-0878-01).
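The industry comparison of expected AI use is made in percentage points. A minimal Python sketch, using only the figures from the industry table and leaving out the "All businesses" row, reproduces it. The dictionary and function names are illustrative and not part of any Statistics Canada tool.

```python
# Expected AI use by industry, percent of businesses: (Q3 2025, Q3 2024).
# Values copied from the industry table; "All businesses" is excluded.
EXPECTED_AI_USE = {
    "Agriculture, forestry, fishing and hunting": (4.9, 4.8),
    "Mining, quarrying, and oil and gas extraction": (3.4, 4.6),
    "Construction": (9.2, 3.2),
    "Manufacturing": (7.2, 13.1),
    "Wholesale trade": (7.8, 12.2),
    "Retail trade": (5.7, 5.1),
    "Transportation and warehousing": (6.6, 4.2),
    "Information and cultural industries": (38.6, 29.7),
    "Finance and insurance": (31.5, 17.9),
    "Real estate and rental and leasing": (24.4, 13.0),
    "Professional, scientific and technical services": (26.3, 24.6),
    "Administrative and support, waste management and remediation services": (11.6, 12.7),
    "Health care and social assistance": (23.2, 11.4),
    "Arts, entertainment and recreation": (15.2, 18.5),
    "Accommodation and food services": (14.8, 6.0),
    "Other services (except public administration)": (8.7, 6.5),
}


def point_changes(table):
    """Return {industry: change in percentage points}, rounded to one decimal."""
    return {
        name: round(q3_2025 - q3_2024, 1)
        for name, (q3_2025, q3_2024) in table.items()
    }


changes = point_changes(EXPECTED_AI_USE)
largest_increase = max(changes, key=changes.get)
largest_decline = min(changes, key=changes.get)
print(largest_increase, changes[largest_increase])  # Finance and insurance 13.6
print(largest_decline, changes[largest_decline])    # Manufacturing -5.9
```

Measured as relative growth instead, the ranking changes: construction’s share nearly tripled (3.2% to 9.2%), so "largest increase" here refers specifically to percentage points.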

Virtual agents or chatbots most expected artificial intelligence application

Of the businesses planning to use AI over the next 12 months (14.5%), the most common applications reported were virtual agents or chatbots (34.8%) and data analytics (32.9%). The share planning to use virtual agents or chatbots rose from 18.7% in the third quarter of 2024 to 34.8% in the third quarter of 2025, pointing to growing interest in customer-facing applications. Other planned applications included text analytics (28.5%), marketing automation (25.6%) and natural language processing (20.9%).

| Planned AI application | Third quarter of 2025 (percent of businesses) | Third quarter of 2024 (percent of businesses) |
|---|---|---|
| AI use planned in producing goods or delivering services | 14.5 | 10.6 |
| Virtual agents or chatbots | 34.8 | 18.7 |
| Data analytics using AI | 32.9 | 26.7 |
| Text analytics using AI | 28.5 | 27.2 |
| Marketing automation using AI | 25.6 | 19.4 |
| Natural language processing | 20.9 | 15.4 |
| Speech or voice recognition using AI | 20.2 | 11.9 |
| Large language models | 19.9 | 9.3 |
| Machine learning | 18.4 | 18.8 |
| Recommendation systems using AI | 14.0 | 11.7 |
| Decision making systems based on AI | 13.1 | 6.3 |
| Deep learning | 8.9 | 5.3 |
| Image or pattern recognition | 7.6 | 7.5 |
| Machine or computer vision | 3.2 | 3.2 |
| Robotics process automation | 3.2 | 6.2 |
| Neural networks | 2.2 | 2.6 |
| Augmented reality | 2.0 | 1.3 |
| Biometrics | 1.6 | 1.9 |
| Other | 7.3 | 6.2 |

Notes: Results are based on the survey in collection from July 2 to August 6, 2025, and from July 2 to August 6, 2024. Respondents were asked about their expectations over the next 12 months, so the 12-month period covered begins anywhere between July 2 and August 6 of the survey year, depending on when the business responded.

Source: Canadian Survey on Business Conditions, third quarter of 2025 (Table 33-10-1045-01) and third quarter of 2024 (Table 33-10-0878-01).
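The application percentages are conditional: they describe only the 14.5% of businesses planning to use AI. Assuming both figures are weighted shares of the same population, multiplying them gives the share of all businesses, exact up to rounding of the published numbers. A minimal Python sketch with a few rows from the applications table (the names `PLANNING_SHARE` and `share_of_all_businesses` are illustrative):

```python
# Q3 2025 figures from the applications table.
PLANNING_SHARE = 14.5  # percent of all businesses planning to use AI

# Percent of *planning* businesses expecting each application.
APPLICATION_SHARES = {
    "Virtual agents or chatbots": 34.8,
    "Data analytics using AI": 32.9,
    "Text analytics using AI": 28.5,
}


def share_of_all_businesses(conditional_pct, base_pct=PLANNING_SHARE):
    """Convert a percent-of-planners figure into a percent of all businesses."""
    return round(conditional_pct * base_pct / 100, 1)


for app, pct in APPLICATION_SHARES.items():
    print(f"{app}: {share_of_all_businesses(pct)}% of all businesses")
```

So roughly 5.0% of all businesses plan to use virtual agents or chatbots. Because businesses could report more than one application, these overall shares do not add up to 14.5%.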

Among businesses in information and cultural industries that plan to use AI over the next year (38.6%), the most common intended uses are virtual agents or chatbots (51.2%), followed by text analytics (49.9%) and large language models (48.1%). Among businesses in finance and insurance planning to use AI (31.5%), the most common expected use is data analytics (57.3%), followed by virtual agents or chatbots (35.1%). Of the businesses in professional, scientific and technical services planning to use AI (26.3%), nearly half (45.3%) reported plans to use data analytics, followed by large language models (39.9%).

Plans to adopt artificial intelligence over the next 12 months vary by business size

While 14.5% of all businesses plan to use AI over the next 12 months to produce goods or deliver services, those with 100 or more employees were more likely to report such plans (20.5%), compared with 15.0% of businesses with 20 to 99 employees, 14.4% of businesses with 5 to 19 employees and 14.2% of those with 1 to 4 employees.

While planned AI adoption varied by business size, intended applications were broadly similar. Among businesses with 100 or more employees that plan to use AI in producing goods or delivering services over the next year, nearly half (48.0%) plan to use it for data analytics, followed by text analytics (32.0%) and virtual agents or chatbots (20.7%). Similarly, among businesses with 1 to 4 employees that plan to use AI, nearly one-third (31.8%) reported plans to use data analytics, followed by virtual agents or chatbots (29.8%) and text analytics (29.4%).

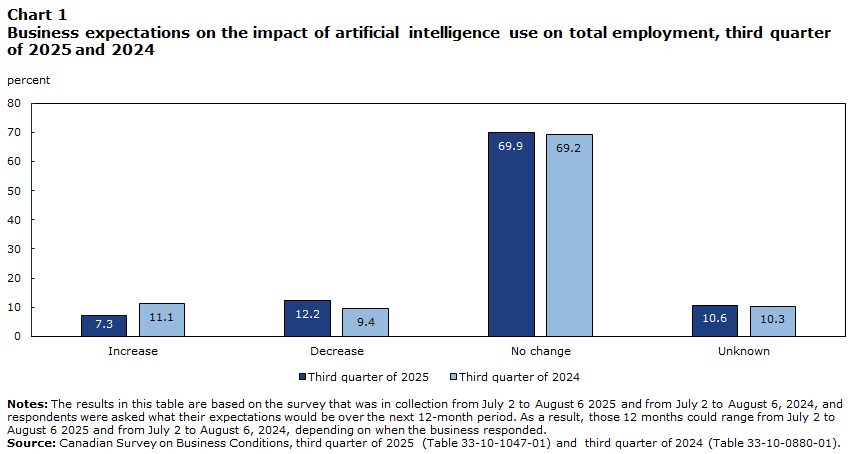

Most businesses still expect no change to employment levels after artificial intelligence adoption

Businesses in Canada continue to expect limited employment change from AI adoption. In the third quarter of 2025, among businesses planning to implement AI over the next 12 months (14.5%), 69.9% expected no change in employment levels. This is consistent with the third quarter of 2024, when 69.2% of businesses planning to adopt AI in the coming year (10.6%) expected no change in employment.

At the same time, the proportion of businesses expecting employment decreases rose from 9.4% in the third quarter of 2024 to 12.2% in the third quarter of 2025, while the proportion expecting employment gains fell from 11.1% to 7.3% over the same period.

Data table for Chart 1

| Survey period | Increase (%) | Decrease (%) | No change (%) | Unknown (%) |
|---|---|---|---|---|
| Third quarter of 2025 | 7.3 | 12.2 | 69.9 | 10.6 |
| Third quarter of 2024 | 11.1 | 9.4 | 69.2 | 10.3 |

Notes: Results are based on the survey in collection from July 2 to August 6, 2025, and from July 2 to August 6, 2024. Respondents were asked about their expectations over the next 12 months, so the 12-month period covered begins anywhere between July 2 and August 6 of the survey year, depending on when the business responded.

Source: Canadian Survey on Business Conditions, third quarter of 2025 (Table 33-10-1047-01) and third quarter of 2024 (Table 33-10-0880-01).

These employment expectations match the experience of businesses already using AI to produce goods or deliver services. Of the businesses that reported in the second quarter of 2025 having used AI over the previous 12 months (12.2%), the vast majority (89.4%) reported no change in employment levels, while 6.3% reported a decrease and 4.3% an increase. Results were similar in the second quarter of 2024, when 84.9% of businesses using AI over the previous 12 months reported no change in employment, 6.3% reported a decrease and 8.8% reported an increase.

Training staff remains the most common operational response to artificial intelligence

Among businesses planning to use AI over the next 12 months to produce goods or deliver services (14.5%), the most anticipated operational change is training existing employees to use AI. About half (49.8%) of these businesses reported plans to provide staff with AI training once new systems are implemented. This is consistent with results from the third quarter of 2024, when nearly half (48.7%) of businesses that planned to use AI over the coming year (10.6%) reported plans to provide staff with AI training after implementation.

Other expected changes by businesses after AI adoption in the third quarter of 2025 include the development of new workflows (41.9%), purchasing cloud services or storage (27.0%) and changing data collection or data management practices (25.6%).

These results align with the operational changes already put in place by businesses currently using AI. Among businesses using AI in the second quarter of 2025 (12.2%), the most reported operational changes in the previous 12 months were developing new workflows (40.1%), training current staff to use AI (38.9%) and purchasing cloud services or storage (25.7%).

Meanwhile, hiring new staff trained in AI remained relatively uncommon. Among specific changes, it was the least reported in the third quarter of 2025, at 12.6%, up from 10.2% in the third quarter of 2024. Purchasing computing power or specialized equipment was also less common, reported by 14.2% of businesses in the third quarter of 2025, down from 18.6% in the third quarter of 2024.

| Expected operational change | Third quarter of 2025 (percent of businesses) | Third quarter of 2024 (percent of businesses) |
|---|---|---|
| Train current staff to use AI | 49.8 | 48.7 |
| Develop new workflows | 41.9 | 43.7 |
| Purchase cloud services or cloud storage | 27.0 | 25.2 |
| Change data collection or data management practices | 25.6 | 17.6 |
| Use vendors or consulting services to install or integrate AI | 16.0 | 16.2 |
| Purchase computing power or specialized equipment | 14.2 | 18.6 |
| Hire staff trained in AI | 12.6 | 10.2 |
| Other change | 0.2 | 1.0 |
| Unknown | 14.0 | 16.1 |
| None | 12.3 | 9.3 |

Notes: Results are based on the survey in collection from July 2 to August 6, 2025, and from July 2 to August 6, 2024. Respondents were asked about their expectations over the next 12 months, so the 12-month period covered begins anywhere between July 2 and August 6 of the survey year, depending on when the business responded.

Source: Canadian Survey on Business Conditions, third quarter of 2025 (Table 33-10-1048-01) and third quarter of 2024 (Table 33-10-0881-01).

Methodology

From July 2 to August 6, 2025, representatives from businesses across Canada were invited to complete an online questionnaire about business conditions and business expectations moving forward. The Canadian Survey on Business Conditions uses a stratified random sample of business establishments with employees classified by geography, industry sector and size. Proportions are estimated using survey weights ensuring that the survey results are representative of all employer businesses in Canada. The total sample size for this iteration of the survey was 21,406, and results are based on responses from a total of 9,494 businesses or organizations.
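The methodology states that proportions are estimated with survey weights but does not give the formula. As a hedged illustration of the general idea only, not Statistics Canada’s actual estimation procedure, a weighted proportion divides the summed weights of respondents who answered yes by the summed weights of all respondents. The `Response` class, its fields and the weights below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One hypothetical survey response."""
    weight: float   # number of population businesses this respondent represents
    plans_ai: bool  # whether the business reported plans to use AI


def weighted_proportion(responses: list[Response]) -> float:
    """Return the weighted percent of businesses with plans_ai set."""
    total_weight = sum(r.weight for r in responses)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    yes_weight = sum(r.weight for r in responses if r.plans_ai)
    return 100 * yes_weight / total_weight


# Made-up respondents: one of three says yes (33.3% unweighted), but that
# respondent represents fewer businesses than the other two.
sample = [Response(120.0, False), Response(80.0, False), Response(50.0, True)]
print(round(weighted_proportion(sample), 1))  # 20.0
```

The gap between the unweighted 33.3% and the weighted 20.0% is why weights matter: without them, estimates would reflect who happened to respond rather than the population of employer businesses.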
