
AI edge cloud service provisioning for knowledge management smart applications

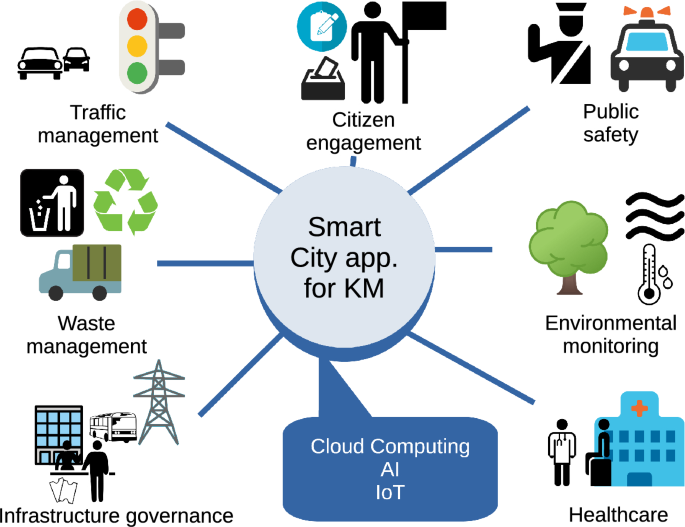

Smart cities enhance the quality of life of their residents and promote transparent management of public resources, including financial assets, natural resources, and infrastructure. Successful smart city initiatives rely on three core components in knowledge management: technology, people, and institutions. Together, these elements support urban infrastructure and governance, improving public services and citizen engagement. Technology-driven services leverage data to gain insights, crowdsource ideas, and deliver enhanced public services. Additionally, smart applications empower citizens to participate in shaping public policy, enriching both decision-making processes and the value of business operations.

KM in a smart city forms a cyber-physical social system that encourages collaboration among organizations and stakeholders, creating a sophisticated technological network within the urban ecosystem. Key components of this network are AI, IoT and Big Data, which play critical roles in knowledge co-creation, restructuring KM processes, and facilitating the exchange of human and organizational knowledge. Stakeholders contribute essential data, and trust is a crucial factor in fostering agreements between them and the municipality to effectively deploy smart technologies and successfully implement smart initiatives6,29.

For transformative innovations that reshape how organizations and companies deliver benefits within the ecosystem, a supportive framework must emerge. This includes investment in new systems and skill development by firms, as well as adaptation by customers, who must learn to use the new approach to generate value. Over time, customers and citizens themselves become more adept at using information to manage their lives as workers, consumers, and travellers.

Modern platforms depend on the ability of businesses and individuals to create, access, and analyse vast amounts of data across various devices. Digital technologies such as social networks and mobile applications are driving the expansion of platforms into smart applications. These platforms use Big Data technologies to collect, store, and manage extensive data sets30. A major challenge for integrating smart applications into knowledge management processes is the workforce skill gap. To avoid the cost of acquiring new talent with the right expertise, Kolding et al.31 conclude that organizations should plan training programs in advance to update the skills of their employee base and meet long-term development goals and other enterprise-wide priorities.

Figure 1 shows examples of smart city applications that involve knowledge management processes. The following sections will explore the technologies that establish platforms for knowledge management in smart cities.

Cloud computing

Cloud computing is a computing paradigm in which resources, whether applications and software, data, frameworks, servers or hardware, are hosted on remote servers and accessed over the internet. This makes those resources available from anywhere with an internet connection. Resource-constrained (“weak”) computing devices can send their computations to cloud servers, a practice known as computation offloading32.
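
Whether offloading pays off depends on whether the time saved by running on a faster server outweighs the time spent moving data. The sketch below illustrates that trade-off under a deliberately simple, latency-only model; the `Task` fields, the cost formulas and all numbers are illustrative assumptions, and real offloading schemes also weigh energy, queueing and monetary cost.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float       # CPU cycles the task needs (assumed known in advance)
    input_bytes: float  # data that must be uploaded if the task is offloaded


def local_time(task: Task, device_hz: float) -> float:
    """Seconds to run the task on the device itself."""
    return task.cycles / device_hz


def offload_time(task: Task, uplink_bps: float, server_hz: float, rtt_s: float) -> float:
    """Seconds for one round trip, the upload, and remote execution."""
    transfer_s = task.input_bytes * 8 / uplink_bps
    return rtt_s + transfer_s + task.cycles / server_hz


def should_offload(task: Task, device_hz: float, uplink_bps: float,
                   server_hz: float, rtt_s: float) -> bool:
    """Offload only when the remote path is strictly faster than running locally."""
    return offload_time(task, uplink_bps, server_hz, rtt_s) < local_time(task, device_hz)
```

With a 1 GHz device, a 10 Mbit/s uplink, a 10 GHz-equivalent server and 50 ms of round-trip time, a compute-heavy task with a small input (`Task(5e9, 200_000)`) is worth offloading, while a light task with a large input (`Task(1e7, 5_000_000)`) is better kept on the device.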

The cloud brings numerous key benefits. It enables ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction33. It is a rapidly evolving field that has gained significant traction in recent years. It encompasses a range of technologies and market players, and is expected to continue growing in the future34.

Organizations gain a set of advantages by using the cloud, including cost savings, scalability, reliability and flexibility7. It also allows organizations to focus on their core competencies instead of managing IT infrastructure. Additionally, cloud computing can help organizations improve their agility, since they no longer need to wait for hardware to be provisioned and deployed. Furthermore, cloud computing enables organizations to access powerful applications and services that they may not have been able to afford on their own8.

Cloud resources range from data and software to concrete hardware resources. The following list provides an overview of the typical applications of cloud computing.

-

Web-based applications: Cloud computing provides a platform for hosting web applications, allowing businesses to deploy and scale their applications without the need for significant upfront investment in hardware infrastructure.

-

Data storage and backup: Cloud storage services offer scalable and reliable data storage solutions, allowing businesses to store and back up their data securely in the cloud.

-

Software as a Service (SaaS): Cloud-based SaaS applications enable users to access software applications over the internet, eliminating the need for local installation and maintenance.

-

Infrastructure as a Service (IaaS): IaaS providers offer virtualized computing resources, enabling businesses to build and manage their IT infrastructure in the cloud.

-

Platform as a Service (PaaS): PaaS offerings provide a platform for developers to build, deploy, and manage applications without the complexity of managing the underlying infrastructure.

-

Big data analytics: Cloud computing platforms provide the scalability and computing power required for processing and analyzing large volumes of data, making it easier for organizations to derive insights from their data.

-

Internet of things: Cloud platforms offer services for managing and processing data generated by IoT devices, enabling real-time analytics and decision-making.

-

Artificial intelligence and machine learning: Cloud-based AI and machine learning services provide access to powerful algorithms and computing resources for training and deploying machine learning models.

-

Content delivery networks (CDNs): Cloud-based CDNs distribute content such as web pages, images, and videos to users worldwide, reducing latency and improving performance, which in turn translates into a better user experience.

-

Development and testing environments: Cloud platforms offer on-demand access to development and testing environments, allowing developers to quickly provision resources and collaborate on projects.

-

Disaster recovery and business continuity: Cloud-based disaster recovery services provide organizations with the ability to replicate and recover their IT infrastructure and data in the event of a disaster.

For smart city applications, offloading makes it possible to deploy complex applications on low-power mobile devices. An intrinsic problem for offloading applications is resource allocation: smart city applications need to allocate remote computing and networking resources that satisfy their requirements35,36,37. One of the main challenges of the offloading model is complying with the Service Level Agreement (SLA) between the cloud provider and the client. Cloud platforms generally optimize resource scheduling and latency, striving to meet task deadlines under urgency constraints and resource budget limits. The extra resources needed during peak demand, known as marginal resources, are difficult to provision because demand is variable38,39. Several strategies have been proposed for this problem, such as decision support models based on the top-k nearest neighbour algorithm38 or on minority game theory40. Other authors incorporate deep learning into their resource allocation schemes35,41.
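
To make the idea of a nearest-neighbour decision aid concrete, the sketch below estimates how many extra vCPUs (marginal resources) to provision by averaging what the k most similar past situations needed. It is a generic k-NN regression written for illustration, not the model of reference 38; the feature choice (requests per second, mean payload size), the history values and the baseline are hypothetical.

```python
import math

# Hypothetical history: ((requests_per_second, mean_payload_kb), vCPUs actually needed)
HISTORY = [
    ((100, 20), 4),
    ((120, 25), 5),
    ((400, 20), 12),
    ((420, 30), 14),
    ((90, 15), 3),
]


def knn_estimate(history, query, k=3):
    """Average the resource usage of the k past situations closest to the query."""
    ranked = sorted(history, key=lambda item: math.dist(item[0], query))
    nearest = ranked[:k]
    return sum(usage for _, usage in nearest) / len(nearest)


def marginal_resources(history, query, baseline, k=3):
    """Extra vCPUs needed above the contracted baseline (never negative)."""
    needed = math.ceil(knn_estimate(history, query, k))
    return max(0, needed - baseline)
```

For a predicted peak of 410 requests per second with 25 kB payloads and `k=2`, the two closest past peaks needed 12 and 14 vCPUs, so a client with an 8-vCPU baseline would be advised to reserve 5 more. A real scheme would normalize features with different units before computing distances.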

Cloud computing supports knowledge management processes in several ways. It lowers the barrier to entry by eliminating the need to invest in IT infrastructure to implement knowledge management systems: instead of buying expensive infrastructure and hardware, organizations can use cloud resources on a pay-as-you-go basis, reducing upfront costs and operational expenses. It offers an alternative to the classical approach, as it provides the mechanisms to control, virtualize and externalize the infrastructure42. This is especially important for SMEs, where a lack of adequate technical capabilities can hinder the implementation of knowledge management strategies43. A framework for Knowledge Management as a Service (KMaaS) allows users to access services at any time, from anywhere, and from any device, based on their subscription to a specific domain. This technology affects several knowledge management processes, especially knowledge sharing, knowledge creation, and knowledge transfer44. Adopting cloud computing technologies also improves overall organizational agility, since it gives organizations the capacity to deploy large-scale computing quickly and respond rapidly to changes in the market. As a result, cloud computing has a positive effect on organizational performance43. Practical applications of cloud computing in knowledge management include45:

-

Knowledge storage and sharing: Cloud platforms facilitate centralized storage, allowing SMEs to store, retrieve, and share knowledge in real time.

-

Collaboration and communication: Cloud-based tools (e.g., Google Drive, Microsoft Teams) enhance teamwork, remote work, and knowledge exchange.

-

Data security and backup: Cloud computing provides secure environments with automated backups, minimizing data loss risks.

-

Scalability and cost-efficiency: SMEs can scale their KM systems without significant infrastructure investments.

-

AI and analytics integration: Cloud computing enables AI-driven knowledge discovery, pattern recognition, and decision-making.

-

Knowledge process automation: Automating workflows and document management improves efficiency in KM processes.

Edge computing

Edge computing has emerged as a transformative paradigm in distributed computing, bringing computational and storage resources closer to the point of origin or consumption of data. This paradigm shift addresses the critical limitations of traditional cloud computing by reducing latency, saving bandwidth, and enabling real-time data processing46. Unlike centralized cloud architectures, edge computing strategically places resources at the network edge, facilitating ultra-responsive systems and location-aware applications47.

The importance of edge computing is further underscored by its potential to support latency-sensitive applications in areas such as IoT, intelligent manufacturing, and autonomous systems. By processing data locally, edge nodes alleviate network congestion and enhance system reliability48. Moreover, edge computing is uniquely positioned to complement existing cloud infrastructure by acting as a bridge, ensuring seamless data transfer and computational efficiency49.
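
The latency benefit of edge nodes is not unconditional: a nearby node with limited capacity can become slower than a distant cloud region once requests queue up. The sketch below picks the node with the lowest estimated response time under a simple first-in-first-out model; the node names, round-trip times and service times are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    rtt_ms: float       # network round-trip time from the client
    service_ms: float   # time to process one request
    queue_len: int = 0  # requests already waiting at this node

    def estimated_latency(self) -> float:
        # Queued requests are served one at a time before ours (simple FIFO model).
        return self.rtt_ms + (self.queue_len + 1) * self.service_ms


def pick_node(nodes):
    """Route the request to the node with the lowest estimated latency."""
    return min(nodes, key=Node.estimated_latency)
```

With an idle edge gateway (`rtt_ms=5`, `service_ms=20`) and a cloud region (`rtt_ms=80`, `service_ms=5`), the edge wins at 25 ms against 85 ms; if four requests are already queued at the edge its estimate rises to 105 ms and the cloud becomes the better choice, which is why edge and cloud are usually treated as complementary.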

This paradigm also introduces new opportunities and challenges in distributed system design, including efficient resource allocation, robust system architectures, and scalable management solutions. Edge computing’s adaptability to evolving computational demands and its proximity to users make it a cornerstone of next-generation digital ecosystems50. By leveraging its unique capabilities, edge computing not only enhances existing technologies but also paves the way for innovative applications across diverse domains.

Edge computing plays a pivotal role in enhancing knowledge management processes by addressing the critical challenges of data accessibility, processing efficiency, and timely decision-making. Its ability to integrate seamlessly with existing technologies and facilitate localized data processing has made it invaluable for knowledge-intensive operations.

One notable application of edge computing in knowledge management is in fostering collaboration across industrial systems. For instance, multi-access edge computing (MEC) frameworks enable the creation of knowledge-sharing environments within smart manufacturing contexts, such as intelligent machine tool swarms in Industry 4.0. This integration supports real-time data exchange and decision-making, critical for efficient knowledge dissemination51.

Furthermore, edge computing contributes significantly to green supply chain management by facilitating the sharing of critical knowledge across enterprises. It enhances transparency and reduces resource consumption by integrating blockchain technologies to secure data transactions52. In open manufacturing ecosystems, edge computing has been employed to establish cross-enterprise knowledge exchange frameworks. By integrating blockchain and edge technologies, these frameworks ensure secure and efficient management of trade secrets and regional constraints53. From an enterprise innovation perspective, edge computing-based knowledge bases allow for enhanced data processing and storage at the edge, aligning with organizational goals of speed and reliability54. Edge computing also demonstrates its versatility in master data management by processing data at the source. This capability minimizes latency and ensures real-time updates for critical knowledge databases, particularly in dynamic business environments55. Finally, edge computing’s integration into virtualized communication systems has paved the way for knowledge-centric architectures. Such systems optimize data collection and sharing, ensuring that actionable knowledge reaches stakeholders effectively56.

There are several examples of the adoption of edge computing in KM processes. For example, Coppino57 studied Industry 4.0 adoption for knowledge management in Italian SMEs. The research highlights that edge computing, when integrated with knowledge management, enables SMEs to scale knowledge-sharing processes while improving real-time decision-making. However, SMEs struggle with insufficient IT infrastructure and a lack of expertise, which limits overall adoption. For that reason, the author recommends developing frameworks that reduce the required technological investment. Stadnika et al.58 surveyed representatives of companies, mainly from European countries. Their results show that enterprises use edge data processing to increase data security and reduce latency.

Serverless computing

Serverless computing is a paradigm within the realm of cloud computing where developers can focus solely on writing and deploying applications without concerning themselves with the underlying infrastructure. In a serverless architecture, the cloud provider dynamically manages the allocation and provisioning of servers, allowing developers to create event-driven applications without having to explicitly manage servers or scaling concerns59,60.

Instead of traditional server-based models where developers need to provision, scale, and manage servers to run applications, serverless computing abstracts away the infrastructure layer entirely. Developers simply upload their application, define the events that trigger its execution (such as HTTP requests, database changes, file uploads, etc.), and the cloud provider handles the rest, automatically scaling resources up or down as needed61,62.
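
The event-to-function binding described above can be pictured as a table that maps event types to handler functions. The sketch below is a minimal in-memory stand-in, assuming hypothetical helpers `on` (register a handler) and `emit` (deliver an event); it is not the API of any serverless provider, where the platform itself owns this table and runs each handler in a managed container.

```python
from collections import defaultdict

# Hypothetical trigger table: event type -> list of handler functions.
TRIGGERS = defaultdict(list)


def on(event_type):
    """Decorator that binds a function to an event type, like a trigger definition."""
    def register(fn):
        TRIGGERS[event_type].append(fn)
        return fn
    return register


def emit(event_type, payload):
    """Invoke every handler bound to the event; events with no binding do nothing."""
    return [fn(payload) for fn in TRIGGERS.get(event_type, [])]


@on("file_uploaded")
def index_document(event):
    # e.g. add a newly uploaded report to a knowledge base index
    return f"indexed {event['key']}"


@on("http_request")
def answer_query(event):
    return {"status": 200, "query": event["q"]}
```

Calling `emit("file_uploaded", {"key": "report.pdf"})` runs only `index_document`, and an event type with no registered handler simply produces no work, which mirrors why idle serverless applications consume no compute.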

This model offers several advantages:

-

Scalability: Serverless platforms automatically scale resources based on demand. Applications can handle sudden spikes in demand without manual intervention.

-

Cost-effectiveness: With serverless computing, you only pay for the actual compute resources consumed during the execution. There are no charges for idle time, which can lead to cost savings, especially for applications with sporadic or unpredictable workloads.

-

Simplified operations: Since there’s no need to manage servers, infrastructure provisioning, or scaling, developers can focus more on developing applications and less on system configuration. This can accelerate development cycles and reduce operational overhead.

-

High availability: Serverless platforms typically offer built-in high availability and fault tolerance features. Cloud providers manage the underlying infrastructure redundancies and ensure that applications remain available even in the event of failures.

-

Faster time to market: By abstracting away infrastructure concerns and simplifying operations, serverless computing allows developers to deploy applications more quickly, enabling faster iteration and innovation.
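
The cost-effectiveness argument can be checked with simple arithmetic. The sketch below compares a server billed per hour with a function billed per GB-second of execution plus a per-request fee. All prices are placeholders chosen for illustration, not quotes from any provider, and real bills also involve free tiers, duration rounding and data-transfer charges.

```python
HOURS_PER_MONTH = 30 * 24  # 720 hours in a 30-day month


def always_on_cost(hourly_price):
    """Monthly cost of a server that runs whether or not it receives requests."""
    return hourly_price * HOURS_PER_MONTH


def serverless_cost(invocations, duration_s, memory_gb, price_per_gb_s, price_per_million):
    """Monthly cost when only execution time (GB-seconds) and requests are billed."""
    compute = invocations * duration_s * memory_gb * price_per_gb_s
    requests = invocations / 1_000_000 * price_per_million
    return compute + requests


def break_even_invocations(monthly_fixed, duration_s, memory_gb, price_per_gb_s, price_per_million):
    """Invocations per month above which pay-per-use costs more than always-on."""
    per_call = duration_s * memory_gb * price_per_gb_s + price_per_million / 1_000_000
    return monthly_fixed / per_call
```

With a placeholder server price of 0.05 per hour (36 per month) and function prices of 0.0000166667 per GB-second and 0.20 per million requests, a sporadic workload of 300,000 invocations of 0.2 s at 0.5 GB costs about 0.56 per month. The same arithmetic shows that above roughly 19 million such invocations per month the always-on server becomes cheaper, so the savings apply mainly to sporadic or unpredictable workloads, as the list above notes.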

Serverless computing is being explored as a solution for smart society applications because it can automatically execute lightweight functions in response to events. It also lowers development and management barriers for service integration and roll-out. Typically, IoT applications use a computation outsourcing architecture with three major components for processing knowledge: sensor nodes, networked devices and actuators. At the data-gathering stage, sensors collect data from specific locations or sites and submit it to the cloud service. The sensor data is then analysed on cloud servers, where it is processed to extract useful knowledge. Applications expose access points, typically web applications, through which clients retrieve this knowledge63,64.
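
The sensing-to-knowledge flow can be sketched as three small functions: grouping raw readings by site, turning them into a per-site summary, and answering a client request. The site names, the alert threshold and the summary fields are hypothetical, actuators are omitted, and the threshold rule is only a placeholder for the analysis a real application would run in the cloud.

```python
from collections import defaultdict
from statistics import mean


def gather(readings):
    """Group raw (site, value) readings by site, as a cloud ingestion step might."""
    by_site = defaultdict(list)
    for site, value in readings:
        by_site[site].append(value)
    return by_site


def derive_knowledge(by_site, threshold):
    """Turn raw values into a per-site summary with a simple alert flag."""
    return {
        site: {"mean": round(mean(values), 2), "max": max(values), "alert": max(values) > threshold}
        for site, values in by_site.items()
    }


def handle_client_request(knowledge, site):
    """What a web endpoint might return to a client asking about one site."""
    return knowledge.get(site, {"error": "unknown site"})
```

For example, noise readings of 41 and 55 from a plaza and 30 and 34 from a station, with an alert threshold of 50, yield an alert for the plaza only; in a serverless deployment each of these steps could be a separate function triggered by the arrival of new data or by a client request.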

Under the traditional cloud approach, services must always be active to listen for requests from clients or cloud users. Such always-on microservices are not a viable solution when the energy footprint (the green aspect) of systems is considered. Keeping servers running for long periods also increases the cost of cloud services, since cloud computing follows a pay-as-you-go model. With the serverless model, when a request from the application triggers an event, the computing resources needed to execute the function are provisioned and then released once they are no longer needed (scale to zero). With this model, applications, processes and platforms benefit from a reduced cost of operation, as only useful computing time is paid for65. In fact, case studies show that organizations that adopt the serverless paradigm achieve lower operating costs and faster response times66.
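
Scale to zero has a side effect worth making explicit: after an idle period the instance is released, so the next request pays a cold start. The sketch below simulates a single function instance under the simplifying assumptions that requests never overlap, that the instance is kept warm for a fixed idle timeout, and that only execution time is billed; real platforms choose their own keep-warm policies.

```python
def simulate_scale_to_zero(arrivals, exec_s, idle_timeout_s):
    """Count cold starts and billed execution seconds for one function instance.

    Assumptions: requests are handled one at a time, the instance is released
    after idle_timeout_s without work, and billing covers execution only.
    """
    cold_starts = 0
    warm_until = float("-inf")  # no instance exists yet (scaled to zero)
    for t in sorted(arrivals):
        if t > warm_until:
            cold_starts += 1    # instance was released, so a new one is provisioned
        warm_until = t + exec_s + idle_timeout_s
    billed_s = len(arrivals) * exec_s  # only useful computing time is paid for
    return cold_starts, billed_s
```

For requests arriving at seconds 0, 10, 20, 500, 505 and 3000, each running for 0.5 s with a 60 s idle timeout, the function is billed for 3 s of execution and incurs 3 cold starts, whereas an always-on server would be paid for the whole 3000 s window.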

Serverless edge computing

With the increasing number of IoT devices, the load on cloud servers continues to grow, making it essential to minimize data transfers and computations sent to the cloud11. Edge computing addresses this need by relocating computations closer to where data is gathered, utilizing IoT devices or local edge servers to perform processing tasks67. This proximity reduces latency and improves bandwidth efficiency, trust, and system survivability.

Serverless edge computing enables running code at edge locations without managing servers. It introduces a pay-per-use, event-driven model with “scale-to-zero” capability and automatic scaling at the edge. Applications in this model are structured as independent, stateless functions that can run in parallel67. Edge networks typically consist of a diverse range of devices, and a serverless framework allows applications to be developed independently of the specific infrastructure68. Because the provider fully manages the infrastructure, serverless edge applications are simpler to develop than traditional ones, and because they run close to users, they are well suited to latency-sensitive use cases.
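
Statelessness is what allows a platform to run many copies of the same function at once on any edge node. The sketch below illustrates this with a thread pool standing in for independent function instances; the handler, its event format and the temperature conversion are illustrative assumptions. Because the handler reads and writes no shared state, the parallel run returns exactly what a sequential run would.

```python
from concurrent.futures import ThreadPoolExecutor


def normalize_reading(event: dict) -> dict:
    """Stateless handler: the output depends only on the input event.

    No globals are read or written, so any number of copies can run at once
    on any node without coordination.
    """
    celsius = (event["fahrenheit"] - 32) * 5 / 9
    return {"sensor": event["sensor"], "celsius": round(celsius, 1)}


def run_parallel(events, workers=4):
    """Fan events out to concurrent workers; map() keeps results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(normalize_reading, events))
```

For instance, `run_parallel([{"sensor": "a", "fahrenheit": 212}])` returns `[{"sensor": "a", "celsius": 100.0}]`. Any state the application does need, such as running totals, would have to live in an external store rather than inside the function.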

Numerous studies have examined the challenges and opportunities within this model, proposing various approaches to leverage its strengths. Reduced latency and cost-effective computation are especially valuable in real-time data analytics69. Organizations benefit from the scalability of the serverless paradigm, which supports a flexible, expansive data product portfolio70. In this context, serverless edge computing enables more affordable data processing and improves user experience by reducing latency in data access and knowledge delivery interfaces.

Today, several providers offer serverless edge services71. We studied ten providers (Akamai Edge, Cloudflare Workers, IBM Edge Functions, AWS, EDJX, Fastly, Azure IoT Edge, Google Distributed Cloud, StackPath and Vercel Serverless Functions) to understand how they deliver their services. These providers fall into two main categories. The first category extends traditional CDN functionality with serverless functions that intercept and modify HTTP requests and responses as they pass between users and origin servers. The second category offers serverless edge computing frameworks that integrate edge infrastructure with the provider's cloud platform, enabling clients to build their own private edge infrastructure and incorporate it into the public cloud. Additionally, we identified one provider, EDJX, that uses a distinctive peer-to-peer (P2P) technology to execute its serverless edge functions.
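
The first category of providers can be illustrated with a function that rewrites a request at the CDN edge, here routing it to a regional origin and tagging it with a header. Real platforms expose their own request objects (Cloudflare Workers, for example, uses a JavaScript API); the plain-dictionary request, the `X-Edge-Country` header and the origin host names below are hypothetical stand-ins, not any provider's interface.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical mapping from client country to the nearest regional origin.
REGIONAL_ORIGINS = {"ES": "eu.origin.example", "US": "us.origin.example"}
DEFAULT_ORIGIN = "global.origin.example"


def edge_handler(request: dict) -> dict:
    """Rewrite an incoming request at the edge before it is forwarded to the origin."""
    parts = urlsplit(request["url"])
    country = request.get("country", "unknown")
    origin = REGIONAL_ORIGINS.get(country, DEFAULT_ORIGIN)
    headers = dict(request["headers"])  # copy so the incoming request is not mutated
    headers["X-Edge-Country"] = country
    return {
        "url": urlunsplit((parts.scheme, origin, parts.path, parts.query, parts.fragment)),
        "headers": headers,
    }
```

A request for `https://www.example.org/docs?id=7` arriving from Spain is forwarded to `https://eu.origin.example/docs?id=7`, while requests from unmapped countries fall back to the global origin. A response-side function would follow the same pattern on the way back to the user.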

The technologies used by these providers are similar to those of their traditional serverless cloud counterparts. Applications are typically implemented in high-level programming languages such as JavaScript, Python, and Java. Development is usually streamlined through the provider's framework, which supplies all the necessary tools.

AI in KM processes

Knowledge management processes benefit significantly from AI through enhanced data processing, analysis, automation, and decision-making capabilities. AI brings sophisticated tools to KM that help organizations capture, organize, share, and apply knowledge more effectively. The following key points were extracted from the literature review:

-

Knowledge discovery and extraction. AI tools, particularly natural language processing (NLP) and machine learning, can extract insights from vast amounts of unstructured data (e.g., documents, emails, reports, and social media). NLP enables AI to parse and understand text, identifying valuable patterns, relationships, and topics within data sources. For instance, AI can automatically categorize and tag documents, identify key insights, and detect trends that are relevant to the organization. In scientific research or industry, AI-driven tools can mine research papers, patents, and technical documents to highlight emerging technologies and innovations72,73.

-

Organizing and structuring knowledge. AI-driven categorization, clustering, and tagging help organize knowledge into structured formats for easier retrieval. Using machine learning algorithms, AI can automatically classify information based on its content and relevance, enabling employees to find information more quickly. Semantic analysis, powered by AI, also groups related documents and concepts, creating a connected network of information that mirrors human knowledge organization74.

-

Knowledge sharing and recommendation systems. AI-powered recommendation systems suggest relevant knowledge resources to users based on their roles, recent activities, or queries. Such systems are most commonly used to recommend content on digital platforms such as YouTube, Netflix, or Amazon. By analysing usage patterns and preferences, AI-driven KM systems can suggest documents, experts, or solutions that match immediate needs. This tailored approach to knowledge sharing ensures that clients and users receive the most relevant information without needing to sift through large repositories75.

-

Automating knowledge capture. AI technologies, such as robotic process automation (RPA) and machine learning, facilitate automatic knowledge capture by monitoring and recording daily activities and processes within an organization. For example, AI-powered chatbots can log interactions with customers or employees, storing valuable insights from these interactions in a knowledge base. AI also captures information from emails, meetings, or customer support calls, automatically adding relevant details to knowledge repositories76.

-

Enhancing knowledge retrieval with search and NLP. AI improves knowledge retrieval by enabling more sophisticated search mechanisms. With NLP, AI systems understand user queries in natural language, refining search results based on the intent behind queries rather than just matching keywords. Advanced AI-driven search engines in KM systems also employ semantic search to understand contextual relationships between terms, improving the accuracy and relevance of results77,78.

-

Contextualizing and personalizing knowledge. AI can personalize the KM experience by tailoring knowledge delivery based on an employee’s role, department, or project involvement. Using machine learning, KM platforms analyse patterns in user behaviour to predict and deliver information that aligns with individual needs. This contextualization makes knowledge sharing more effective, ensuring that the right knowledge reaches the right person at the right time79.

-

Augmenting decision-making and expertise. AI supports decision-making by providing analytical insights drawn from historical data, documents, and external sources. In KM, AI-driven predictive analytics and machine learning models assess past data to offer insights, identify risks, and make informed predictions. Expert systems can also use AI to simulate the decision-making processes of human experts, providing guidance on complex tasks80.

-

Developing virtual assistants for knowledge management. AI-based virtual assistants, like chatbots, facilitate KM by answering employee questions, providing document links, or assisting with common tasks. NLP-powered chatbots in KM systems help employees access the knowledge they need by interacting through natural language queries. These assistants can handle frequently asked questions, provide guided instructions, and retrieve information, making KM accessible and interactive, which in turn increases overall productivity81.

-

Supporting knowledge creation through insights and innovation. AI-driven analytics can highlight trends, patterns, and gaps in an organization’s knowledge, encouraging innovation and knowledge creation. By identifying emerging trends, AI helps organizations remain competitive and proactive in knowledge development. AI tools can also support research and development by generating insights from internal and external data, suggesting new ideas or directions for innovation82.
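
Several of the points above (tagging, organizing, retrieval) start from representing documents as weighted term vectors. The sketch below implements a small TF-IDF ranker with cosine similarity in plain Python. TF-IDF is a classical keyword-weighting technique swapped in here for simplicity: it only matches shared terms, whereas the semantic search described above typically relies on learned embeddings. The documents and queries are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Return the IDF table and a TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF: the +1 keeps terms that appear in every document from scoring zero.
    idf = {t: math.log(n / df[t]) + 1.0 for t in df}
    vectors = [{t: c * idf[t] for t, c in Counter(toks).items()} for toks in tokenized]
    return idf, vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def search(query, docs, top_k=2):
    """Rank documents by TF-IDF cosine similarity to the query."""
    idf, vectors = build_index(docs)
    q_counts = Counter(t for t in tokenize(query) if t in idf)  # unseen terms are ignored
    q_vec = {t: c * idf[t] for t, c in q_counts.items()}
    scored = sorted(((cosine(q_vec, v), i) for i, v in enumerate(vectors)), reverse=True)
    return [(docs[i], round(s, 3)) for s, i in scored[:top_k] if s > 0]
```

Given the documents "Traffic sensor data from city intersections", "Guide to waste collection schedules" and "Predicting traffic congestion with machine learning", the query "traffic congestion" ranks the third document first because it matches the rarer term "congestion" as well, while a query with no known terms, such as "recycling", returns nothing; that gap is precisely what embedding-based semantic search is meant to close.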

Applications and platforms for knowledge management in the smart city

Research on service provisioning models for KM in smart cities reveals a variety of approaches, emphasizing the integration of technological and organizational strategies to optimize services.

Several studies highlight the critical role of structured frameworks and platforms to facilitate efficient service delivery. For instance, Prasetyo et al.83 propose a service platform that aligns with smart city architecture, promoting digital service introduction in dynamic urban environments. Similarly, Yoon et al.84 describe HERMES, a platform that uses GS1 standards to streamline service sharing and discovery, enabling citizens to efficiently engage with services in a geographically and linguistically optimized manner.

Smart city service models also emphasize interoperability and tailored service offerings for diverse urban needs. The study by Kim et al.85 discusses adapting service provisioning models according to urban types, underscoring the need for knowledge services tailored to the unique characteristics of each city. Additionally, Weber & Zarko86 argue for regulatory frameworks to support interoperability, ensuring that services can be consistently deployed across various smart city contexts.

Technological advancements, including AI, IoT and big data are also foundational to KM service provisioning in smart cities. Sadhukhan87 develops a framework that integrates IoT for data collection and processing, addressing the challenges posed by heterogeneous technologies in smart city infrastructures. In the same vein, 5G technology is viewed as a transformative tool for enhancing service efficiency, especially in traffic management, healthcare, and public safety domains88.

The convergence of these platforms and frameworks signals a broader move towards knowledge-driven, citizen-centric service models. Efforts such as the model of Caputo et al.89 illustrate the importance of stakeholder engagement in building sustainable, effective service chains within smart cities. Ultimately, these models aim to transform urban living by making smart city services accessible, efficient, and responsive to the needs of citizens.

Findings

Knowledge management has led organizations and companies to invest heavily in harnessing, storing and sharing information in knowledge networks. In the smart city context, this has resulted in a proliferation of innovative platforms and applications based on technologies such as AI, big data and IoT. Processing the vast amounts of data these applications generate requires a supporting computing architecture. Cloud computing is well suited to providing the necessary resources, owing to its flexibility and pay-per-use model.

Serverless edge computing has gained significant attention recently for its potential to reduce costs and simplify development. Numerous technologies have emerged to enhance performance and minimize latency, reflecting strong industry interest. This trend is evident in the growing number of providers now offering serverless edge services. For knowledge management, it enables faster data processing, reduced latency, improved scalability, and enhanced reliability. The serverless edge paradigm also reduces the costs of developing and maintaining new knowledge management systems, which is especially important for SMEs.

KM in smart cities requires structured frameworks and platforms to enable efficient service delivery. Frameworks aligned with smart city architecture support the smooth integration of digital services, while platforms that prioritize interoperability and adaptability ensure that services meet diverse urban needs. Foundational technologies such as AI, IoT, and big data play a critical role in facilitating KM, as they streamline data collection, processing, and sharing. These technologies, combined with 5G connectivity, support essential services like traffic management, healthcare, and public safety. The convergence of these tools indicates a shift toward knowledge-driven, citizen-centric models that prioritize accessibility and responsiveness, with stakeholder engagement emerging as a vital component for building effective, sustainable smart city services.

Particularly, AI significantly enhances KM by improving data processing, analysis, automation, and decision-making. Key aspects include knowledge discovery, where AI tools extract insights from unstructured data, and organization, where AI-driven categorization and clustering make knowledge more accessible. AI also aids in knowledge sharing by recommending relevant resources to users based on their roles and activities. Automation supports the capture of knowledge from daily activities, while enhanced retrieval techniques allow AI systems to understand and respond accurately to user queries. Personalization and contextualization further tailor the KM experience, ensuring that knowledge reaches the right individuals. Additionally, AI augments decision-making by providing predictive insights and supports innovation by identifying trends, gaps, and opportunities for knowledge creation.

Providers have leveraged existing infrastructure to deliver their services, with most vendors basing their offerings on established CDNs. These CDNs provide a global network of strategically located computing nodes, enabling reduced latency compared to traditional cloud servers. Many vendors also offer frameworks that facilitate easy integration of clients’ own edge infrastructure. However, despite their improved latency, CDN servers remain centralized in specific geographic locations90.

Related work

The integration of artificial intelligence and edge cloud computing has driven advances across many applications. Duan et al.91 provide a comprehensive survey of distributed AI that uses edge cloud computing, highlighting its use in various AIoT applications and enabling technologies. Similarly, Walia et al.92 focus on resource management challenges and opportunities in Distributed IoT (DIoT) applications.

The synergy of cloud and edge computing to optimize service provisioning is well documented. Wu et al.93 discuss cloud-edge orchestration for IoT applications, emphasizing real-time AI-powered data processing. Hossain et al.94 highlight the integration of AI with edge computing for real-time decision-making in smart cities, focusing on intelligent traffic systems and other data-driven applications. Kumar et al.95 present a deadline-aware, cost-effective, and energy-efficient resource allocation approach for mobile edge computing, which outperforms existing methods in reducing processing time, cost, and energy consumption.

The taxonomy and systematic reviews by Gill et al.96 provide a detailed overview of AI on the edge, emphasizing applications, challenges, and future directions. Their research underscores the potential of AI-driven edge-cloud frameworks for improved scalability and resource management.

The synergy between edge and cloud computing has been applied to improve predictive maintenance systems. For example, Sathupadi et al.97 highlight how real-time analysis of sensor networks can enhance outcomes through AI integration. Additionally, Campolo et al.98 explore how distributing AI across edge nodes can effectively support intelligent IoT applications.

Iftikhar et al.99 contribute to the discussion on AI-based systems in fog and edge computing, presenting a taxonomy for task offloading, resource provisioning, and application placement. Gu et al.100 propose a collaborative computing architecture for smart grids, blending cloud-edge-terminal layers for enhanced network efficiency. Jazayeri et al.101 propose a latency-aware and energy-efficient computation offloading approach for mobile fog computing using a Hidden Markov Model-based Auto-scaling Offloading (HMAO) method, which optimally distributes computation tasks between mobile devices, fog nodes, and the cloud to balance execution time and energy consumption.

Lastly, Ji et al.102 delve into AI-powered mobile edge computing for vehicle systems, emphasizing distributed architecture and IT-cloud provisions. These studies collectively underscore the transformative impact of AI-integrated edge-cloud frameworks across domains, particularly in knowledge management and smart applications. The novelty of our work lies in the conceptualization of a general model architecture for these use cases, where resources can be more efficiently used across applications.

Table 2 provides a summary of studies on AI-based edge-cloud architectures. While this topic has already been discussed extensively, the aim of this paper is to integrate this architecture with knowledge management based AI applications.


Geopolitical and Technological Implications for Global AI Infrastructure Investment

In 2025, Abu Dhabi-backed technology group G42 embarked on a transformative effort to diversify its AI chip supply chain, signaling a pivotal shift in global infrastructure investment. By reducing reliance on Nvidia, the dominant force in AI semiconductors, G42 is not only reshaping its own technological ecosystem but also catalyzing a broader trend of supplier diversification driven by geopolitical tensions, supply chain resilience, and the urgent need for competitive hardware innovation. For investors, this shift presents both risks and opportunities, demanding a nuanced understanding of the interplay between geopolitics, technological evolution, and market dynamics.

The Geopolitical Imperative: Diversification as a Strategic Necessity

G42’s decision to pivot from Nvidia is emblematic of a larger geopolitical recalibration. The UAE’s 5-gigawatt AI campus, a cornerstone of its economic diversification strategy, is being designed to avoid over-reliance on any single vendor. This approach aligns with U.S. government initiatives to secure critical technology supply chains, such as the CHIPS Act and export control policies aimed at curbing China’s access to advanced semiconductors. By engaging with U.S. firms like AMD, Cerebras, and Qualcomm, G42 is embedding itself in a geopolitical framework that prioritizes American technological leadership while mitigating risks associated with U.S.-China tensions.

The UAE’s alignment with U.S. tech giants, exemplified by a $1.5 billion investment from Microsoft and the integration of G42 into the Azure cloud ecosystem, further underscores this strategy. Microsoft President Brad Smith’s seat on G42’s board highlights the company’s commitment to “responsible AI” in collaboration with U.S. and UAE regulators. Meanwhile, G42’s commitment to phase out Huawei hardware reflects a deliberate move to comply with U.S. export restrictions and avoid entanglements in the Sino-American tech rivalry.

Technological Diversification: Beyond the Nvidia Monoculture

G42’s supplier diversification is not merely a geopolitical maneuver but a technological one. The initial phase of its AI campus, “Stargate,” will deploy Nvidia’s Grace Blackwell GB300 systems for 20% of its capacity. However, the remaining 80% will leverage alternative architectures, including AMD’s Instinct MI350 series, Cerebras’ wafer-scale engines, and Qualcomm’s edge AI solutions. This multi-vendor approach allows G42 to optimize for different AI workloads:

- AMD’s MI350X offers 288 GB of HBM3E memory and 8.0 TB/s bandwidth, competing directly with Nvidia’s Blackwell architecture.

- Cerebras’ wafer-scale chips, with 850,000 cores, provide unparalleled parallelism for large-scale AI training.

- Qualcomm’s edge AI accelerators cater to low-latency, on-device processing, expanding the campus’s versatility.

This diversification reduces bottlenecks and fosters innovation by enabling G42 to leverage specialized architectures for specific tasks. For instance, Cerebras’ wafer-scale chips could revolutionize generative AI training, while AMD’s high-memory GPUs might excel in recommendation systems or large language models.

Risks and Opportunities for Investors

The shift toward supplier diversification introduces both risks and opportunities for investors in semiconductor and AI infrastructure sectors:

Risks

- Geopolitical Volatility: U.S. export controls and shifting trade policies could disrupt supply chains. For example, AMD’s estimated $1.5 billion revenue impact in 2025 from China export restrictions highlights the fragility of markets in a bifurcated global landscape.

- Technological Uncertainty: Niche players like Cerebras, while innovative, face challenges in scaling adoption and competing with Nvidia’s CUDA ecosystem.

- Market Competition: Saudi Arabia’s HUMAIN project, with its $77 billion AI infrastructure plan, is intensifying regional rivalry, potentially fragmenting the AI market.

Opportunities

- Growth in Alternative Suppliers: AMD’s 36% year-over-year revenue growth in Q1 2025, including a 57% surge in data center revenue, demonstrates the potential of diversified chipmakers. Investors could benefit from AMD’s $13–15 billion AI chip sales target for 2025.

- Niche Innovators: Cerebras’ wafer-scale architecture, though unproven at scale, could capture a niche in high-performance computing (HPC) and AI research.

- Edge AI Expansion: Qualcomm’s focus on edge computing positions it to capitalize on decentralized AI workloads, a market projected to reach $200 billion by 2030.

Actionable Investment Strategies

For investors navigating this transformation, the following strategies are recommended:

- Diversify Semiconductor Portfolios: Allocate capital across both established players (e.g., AMD, TSMC) and niche innovators (e.g., Cerebras, Groq). This balances exposure to proven revenue streams with high-growth potential.

- Monitor Geopolitical Developments: Track U.S. export policy shifts and China’s self-sufficiency goals. For example, the U.S. “small yard, high fence” strategy could favor U.S. chipmakers like AMD and U.S.-aligned foundries like TSMC.

- Invest in AI Infrastructure Ecosystems: Prioritize companies with strong partnerships in AI infrastructure, such as Microsoft (Azure integration with G42) and Oracle (multi-generational AI supercomputers with AMD).

- Consider Regional AI Hubs: The UAE’s and Saudi Arabia’s AI projects together represent more than $100 billion in infrastructure investment. Positioning in firms supplying these hubs (e.g., Cerebras, Qualcomm) could yield long-term gains.

Conclusion: A New Era in AI Infrastructure

G42’s strategic shift from Nvidia is a harbinger of a more fragmented yet dynamic global AI landscape. As geopolitical tensions and technological innovation converge, investors must adopt a dual focus: hedging against supply chain risks while capitalizing on the rise of alternative suppliers. The UAE’s AI campus, with its multi-vendor approach and geopolitical alignment, exemplifies how infrastructure investment can drive both technological progress and strategic autonomy. For those willing to navigate the complexities of this transformation, the rewards could be substantial.


Krupp Moving & Storage Becomes First Mover in Cincinnati to Deploy AI-Powered Route Optimization, Reducing Moving Times by 35% While Cutting Customer Costs

A Krupp Moving & Storage truck parked in a residential neighborhood, ready to assist with a household move.

Krupp Moving and Storage, the #1 Cincinnati Moving Company, has introduced artificial intelligence-powered route optimization systems, marking a technological milestone for the city’s relocation industry. The implementation follows months of development and testing and makes Krupp Moving and Storage the first company to integrate this technology into traditional moving operations. The innovation is intended to set new standards for efficiency and cost-effectiveness across Ohio’s moving industry and to address long-standing challenges that have affected both service providers and customers throughout the region.

Advanced Technology Transforms Traditional Moving Operations

The AI-powered system analyzes multiple data points, including traffic patterns, weather conditions, construction zones, and optimal loading sequences, to determine the most efficient route for each relocation. It processes real-time information to adapt routes dynamically, so trucks reach their destinations by the fastest available paths. With these innovations, the company sets a new standard among the professional movers Cincinnati residents trust for efficiency and reliability.
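Krupp’s system is proprietary and its algorithm is not described, so the sketch below is only an illustration of the general idea of dynamic rerouting. It swaps in Dijkstra’s shortest-path algorithm over a road graph whose edge times are scaled by a traffic factor (standing in for congestion, construction, or weather). The graph, node names, and the `fastest_route` and `road_graph` helpers are hypothetical.

```python
import heapq
import math
from typing import Optional

# Each node maps to (neighbour, base_minutes, traffic_factor) edges.
# traffic_factor > 1.0 slows an edge (congestion, roadworks, weather); 1.0 means free-flowing.
Graph = dict[str, list[tuple[str, float, float]]]


def fastest_route(graph: Graph, start: str, goal: str) -> tuple[Optional[list[str]], float]:
    # Dijkstra's algorithm on traffic-adjusted travel time.
    best = {start: 0.0}
    previous: dict[str, str] = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        minutes, node = heapq.heappop(queue)
        if node in visited:
            continue
        visited.add(node)
        if node == goal:
            break
        for neighbour, base, factor in graph.get(node, []):
            candidate = minutes + base * factor
            if candidate < best.get(neighbour, math.inf):
                best[neighbour] = candidate
                previous[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if goal not in best:
        return None, math.inf
    # Walk predecessor links back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1], best[goal]


def road_graph(b_to_customer_factor: float) -> Graph:
    # Hypothetical network: two ways from the depot to a customer's address.
    return {
        "depot": [("A", 10.0, 1.0), ("B", 6.0, 1.0)],
        "A": [("customer", 5.0, 1.0)],
        "B": [("customer", 4.0, b_to_customer_factor)],
    }


print(fastest_route(road_graph(3.0), "depot", "customer"))  # -> (['depot', 'A', 'customer'], 15.0)
print(fastest_route(road_graph(1.0), "depot", "customer"))  # -> (['depot', 'B', 'customer'], 10.0)
```

When congestion on the B leg clears, rerunning the search with updated factors switches the truck to the shorter route. A real system would also need constraints such as truck size restrictions and loading dock access, which this sketch omits.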

Krupp Moving and Storage invested significant resources into developing this proprietary system, working with technology partners to create algorithms specifically designed for the moving industry. The system accounts for factors unique to relocation services, including truck size restrictions, parking limitations, and loading dock accessibility.

The technology integration required extensive training for the Krupp Moving and Storage team members, ensuring seamless adoption across all Cincinnati operations. Staff members now receive real-time route updates and traffic information through mobile devices, enabling immediate adjustments when road conditions change.

Documented Performance Improvements Benefit Cincinnati Residents

Initial performance data from the AI system shows remarkable improvements in operational efficiency. Moving times have decreased by an average of 35%, translating directly into cost savings for customers who pay hourly rates for relocation services.
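The link between shorter moves and lower bills is simple arithmetic for hourly-billed work: cost scales linearly with time on the clock, so a 35% time reduction cuts the hourly labor charge by 35%. The sketch below uses a hypothetical 8-hour move at a hypothetical $150 per hour; neither figure comes from Krupp. Flat fees or other fixed charges, if any, would not shrink.

```python
def hourly_move_cost(hours: float, hourly_rate: float) -> float:
    # Labor cost for a move billed purely by the hour.
    return hours * hourly_rate


def cost_after_time_reduction(hours: float, hourly_rate: float, reduction: float = 0.35) -> float:
    # Same move with on-the-clock time cut by `reduction` (0.35 = the reported 35% average).
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return hourly_move_cost(hours * (1.0 - reduction), hourly_rate)


# Hypothetical inputs: an 8-hour move at $150 per hour.
before = hourly_move_cost(8, 150)
after = cost_after_time_reduction(8, 150)
print(f"before ${before:,.2f}, after ${after:,.2f}, saved ${before - after:,.2f}")
```

Under these assumptions the move would cost roughly $780 instead of $1,200.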

The system has reduced fuel consumption by 28%, contributing to both environmental benefits and lower operational costs. These savings enable Krupp Moving and Storage to maintain competitive pricing while delivering enhanced service quality to Cincinnati area residents and businesses.

Customer satisfaction scores have increased significantly since the AI system was introduced. Clients report less stress during relocations, which they attribute to more predictable arrival times and faster completion of moving projects.

Industry Recognition for Innovation Leadership

The Ohio Moving and Storage Association has acknowledged Krupp Moving and Storage for this technological advancement, noting the potential impact on industry standards. Professional moving companies across the state are monitoring the results as a potential model for future industry developments.

Technology industry publications have featured the implementation as an example of AI applications in traditional service sectors. The successful integration demonstrates how established businesses can adapt cutting-edge technology to improve customer outcomes while maintaining operational excellence.

Local business organizations have recognized Krupp Moving and Storage for innovation leadership, highlighting the company’s commitment to advancing professional standards within the Cincinnati business community.

Comprehensive Service Portfolio Maintains Quality Standards

The AI integration complements Krupp Moving and Storage’s existing service offerings without compromising the personalized attention that has built the company’s reputation since 2005. Residential relocations continue to receive the same careful handling, with background-checked staff members providing professional service for homes, apartments, and condominiums.

Commercial clients benefit from the enhanced efficiency while receiving specialized business relocation services designed to minimize operational disruptions. The AI system helps coordinate complex office moves more effectively, reducing downtime for Cincinnati businesses during relocations.

Storage solutions remain available for clients requiring temporary or long-term options, with the improved logistics helping coordinate deliveries from storage facilities more efficiently than traditional scheduling methods.

Economic Impact Strengthens Local Business Community

The technological advancements position Krupp Moving and Storage for continued growth within Ohio’s competitive moving market. The company’s investment in AI technology creates opportunities for additional hiring, as operational efficiency improvements enable expanded service capacity.

Local suppliers and service partners benefit from the increased operational volume that results from faster project completion times. The ripple effect strengthens the broader Cincinnati business ecosystem while maintaining the company’s commitment to supporting local economic development.

The innovation attracts attention from potential commercial clients seeking technologically advanced services. This advancement solidifies the company’s position among top-rated movers in Cincinnati, establishing the region as a forward-thinking market for businesses considering relocation.

Future Development Plans Build on Current Success

Krupp Moving and Storage plans to expand the AI system’s capabilities based on performance data collected during the initial implementation period. Future enhancements may include predictive scheduling algorithms and automated inventory management systems.

The company is exploring partnerships with other Cincinnati businesses to share best practices for AI integration in traditional service industries. This collaborative approach could accelerate technology adoption across multiple sectors within the local business community.

Research and development efforts continue as Krupp Moving and Storage evaluates additional applications for artificial intelligence within moving and storage operations. The success of route optimization provides a foundation for exploring other areas where technology can improve customer experiences and operational efficiency.

About Krupp Moving And Storage

Founded in 2005, Krupp Moving and Storage operates as a regional moving company serving the Ohio communities of Cincinnati, Columbus, Cleveland, and surrounding counties. The company maintains state licensing and insurance requirements while employing background-verified staff members. Operating from multiple locations in Ohio, the company offers residential and commercial relocation services across Summit, Wayne, Holmes, Richland-Ashland, and Medina Counties.

Media Contact

Company Name: Krupp Moving And Storage

Contact Person: TIM


Phone: +15136139542

Address: 151 W 4th St, Suite 502

City: Cincinnati

State: Ohio 45202

Country: United States

Website: https://kruppmoving.com/cincinnati/
