Colton Masi checked off every box in his quest to land a good job in the computer science industry after college.

AI Insights

OpenAI, Microsoft back new academy to bring AI into classrooms

OpenAI, Microsoft Corp. and Anthropic are partnering with one of the largest teachers unions in the US to establish a new training center to help educators use artificial intelligence tools in classrooms across the country.

The National Academy for AI Instruction will provide access to AI training workshops and seminars free of cost to educators, with the goal of supporting 400,000 K-12 educators over the next five years, the American Federation of Teachers said on Tuesday. The initiative is supported by $23 million in funding from the three AI companies, with Microsoft serving as the single biggest backer.

The new education effort reflects a growing push in the US to ensure that teachers and students are able to adapt to the rapidly evolving technology. In April, President Donald Trump signed an executive order that established a White House Task Force on AI Education and called for public-private partnerships to provide resources for K-12 education about AI and the use of artificial intelligence tools in academia.

AI developers have also increasingly focused on schools as a growth area for their businesses. In February, OpenAI announced a partnership with the California State University system to bring its software to 500,000 students and faculty. In April, Anthropic introduced Claude for Education, a version of its chatbot tailored for higher education. Alphabet Inc.’s Google has also struck deals to bring its AI tools to public schools and universities.

“When it comes to AI in schools, the question is whether it is being used to disrupt education for the benefit of students and teachers or at their expense,” Chris Lehane, chief global affairs officer of OpenAI, said in a statement. “We want this technology to be used by teachers for their benefit, by helping them to learn, to think and to create.”

The teachers union said the academy would open a bricks-and-mortar facility in Manhattan, New York, with more locations expected. The academy will also offer online training sessions.


AI job market: Careers are being upended by artificial intelligence.

Colton Masi, 23, attended Drexel University, a Philadelphia school distinguished by its focus on real-life job experience. He majored in software engineering, a discipline he had heard his whole life was synonymous with stable, high-paying work. It was all part of his plan to avoid the fate that befell so many millennials after the Great Recession.

“When I was 13, I was online all the time,” Colton told Today, Explained co-host Noel King. “I was on Tumblr, and I was seeing a lot of these currently graduating young adults kind of talk about their struggles with the job market and getting themselves established…I was always like, ‘Oh no, I need to do something that’s going to get me a job.’”

So Masi took the advice offered by everyone from Joe Biden to Chris Bosh to Ashton Kutcher in that era: he learned to code.

But Masi graduated from Drexel this past June into a historically bad job market for entry-level computer science positions. Since then he’s applied to about 100 jobs — none have even offered an interview.

“It’s like, you do everything right. You follow the instructions, but the field changes,” Colton said. “There’s nothing you can do about it. It’s just: keep it pushing until you find something.”

Masi’s situation is increasingly common for recent college graduates and others seeking to break into white-collar industries like computer science and marketing.

“I hear about a lot of rejection from job seekers,” Lindsay Ellis, a reporter for the Wall Street Journal who has been crunching the numbers on the entry-level job decline, told Noel King. “[The] market feels kind of stuck to a lot of people.”

Ellis talked to King about why big companies are planning on a future with far fewer entry-level employees, the wild lengths people are going to to find a job, and what career advice executives are giving their own kids.

Below is an excerpt of their conversation, edited for length and clarity. There’s much more in the full podcast, so listen to Today, Explained wherever you get podcasts, including Apple Podcasts, Pandora, and Spotify.

If I were to guess at what’s going on, I would say this must have something to do with AI. Is that it?

That’s a factor, and I think it’s layered on top of a bunch of other factors that have caused the white-collar market to slow considerably over the last few years.

You know, starting in maybe late 2022, early 2023, companies and hiring managers were really pumping the brakes in a lot of sectors. There were tons of tech layoffs that started in ’23, but [there was also pressure] from inflation [and] geopolitical conflict, then the looming election and a lot of uncertainty, in terms of policy, [about] which way things were going to go. If a hiring manager is saying, “Hey, can we hold off on making this hire and maybe have a little bit more buffer in terms of headcount, in terms of payroll costs,” they might see how long they can last without making that hire.

And then you add in AI as a layer on top of all of this, and the calculation is totally different. I talked to James Hornick, who’s the chief growth officer at the Chicago-based recruiting firm Hirewell. And he told me that clients have all but stopped requesting entry-level staff. Those young grads were once in high demand, but their work is now a home run for AI.

We’re always trying to figure out what is data and what is anecdata. You can hear one story about someone who applied for three or four jobs a day for a month and got nothing, and that will be the thing that sticks in your brain forever.

But the unemployment rate in the US right now is around 4.2 percent, which is super low, right? Is there a tension between the one extreme story and the actual trend?

Behind that number, I think you’ll see a couple of other trends that suggest that the picture is a little bit more complicated.

Number one is sort of labor data on the time it takes to find a job. And there are two things that my colleagues and I have been looking at. One is for unemployed Americans, it now takes them on average 24 weeks to find a job after losing one, and that’s nearly a month longer than a year prior.

And the number of long-term unemployed Americans (that’s people who are unemployed for at least 27 weeks), that figure is now 1.8 million people. A year prior, it was like 1.5 [million]. So that’s an uptick too.

The other factor here is you think about which sectors are hiring at the moment, [and] much of the jobs growth is coming from state and local government, or sectors like health care, social assistance, leisure and hospitality, construction. A white-collar project manager probably wouldn’t be qualified for a role in health care or might not be looking for a local government job in a different state. So I think it’s also a question of matching opportunity to skillset and how that goes.

The job application process for a long time has been: There’s maybe a portal and you submit your resume, or you send an email to a hiring manager. Is AI changing the way we apply for jobs?

Oh my god, you have no idea.

This has been a total fascination of mine. The job application process now in many ways can in my mind be described as a robot-versus-robot arms race, basically.

What you hear from applicants is that they are super frustrated with corporate hiring software, which for many years has scanned an applicant’s resume and cover letter and basic details and sort of ranked them based on their qualifications. And they feel like that artificial intelligence basically lets good people slip through the cracks.

So in response, [applicants are] using AI of their own to craft cover letters and resumes, using the job description and their own stuff to basically incorporate all of the keywords, [to] show how they’re responding to specific job responsibilities. There are even tools, though, that scan the entire internet for potential jobs and then just spray out a candidate’s application in seconds.

The whole thing has left applicants and employers super irritated, because employers are totally — all of their portals are getting clogged up, and it’s really hard to tell who is actually interested versus who is using really good prompts or keywords. Applicants are really frustrated because they will look at a job posting on LinkedIn, and it’ll say how many people have applied, and it’s like, Shoot, I have no chance here. Should I even still do this? Then if they do put time into their application, they might get a rejection hours later or at 2 in the morning on a Sunday. It just feels super impersonal, and both sides of the table are really frustrated.

What are young people being told to do now? What are the options?

I’ve been asking executives the same question. I mean both from a [perspective of], what are you talking to universities about — because there’s a lot of correspondence between business and higher ed — but also, what are you telling your own kids?

I talked to the chief executive of a consulting firm in Ohio, and he basically said, I’m telling my kids to really focus on jobs that really require in-person or client-facing communication. One of his children is becoming a police officer, and he said, while AI will affect the way he does his job, nothing replaces those relationships that are forged face-to-face in a community.

And now, chief executives are talking openly about AI’s immense capabilities, and how those might lead to job cuts, even more so than [just] at the entry levels. I mean, you had executives at Amazon, JPMorgan in recent weeks saying that they expect their workforces to shrink considerably. The CEO of Ford said he expects AI will replace half of the white-collar workforce in the US. Those are figures that suggest that people in various roles, various experience levels, should expect significant disruption.

You have spent a lot of time, all over the country, talking to people who are really struggling. What do you think about how these folks — many of them young people — are going to deal with all this?

Many people feel quite low. It’s a really hard stretch, and it’s a hard time to be on the market, and I don’t want to sugarcoat that.

I talked to some people who say, what’s really helped me is to get outside, do some gardening, go for a run, go swimming. Swimming is great. You can’t really have your phone in your hand. I will say, though: A lot of them are spending a lot of money to be able to hopefully speed up this process and stand out to employers and potential employers.

I talked to one guy who said he spent $10,000 on basically a marketing firm that’s treating him as the product, to basically get his resume out there, make him a website, try and introduce him to hiring managers and people who might know of jobs that aren’t posted publicly.

So I think for some people, it helps when they can funnel their frustration into, I’m going to do this; I’m going to really push myself hard. Other people have been telling me, look, this is a marathon, not a sprint. I need to make sure I’m taking time outside of this hunt to really keep my mental health steady.


Artificial Intelligence at Fifth Third Bank

Fifth Third Bank, a leading regional financial institution with over 1,100 branches in 11 states, operates four main businesses: commercial banking, branch banking, consumer lending, and wealth and asset management. Founded in 1858 and headquartered in Cincinnati, the bank has assets in excess of $211 billion. During the first quarter of 2025, Fifth Third Bank saw loan growth, net interest margin expansion, and expense discipline, which led to positive operating leverage.

Fifth Third Bank has been at the forefront of technological innovation for decades. Press materials surrounding new systems note that the bank is proud to have launched the first online automated teller system and shared ATM network in the U.S. in 1977, giving customers 24/7 banking access.

Additionally, this drastically increased the number of ATMs customers could use since they were able to access ATMs owned by other banks. Fifth Third directly invests in AI to enhance its core banking operations and indirectly invests in AI to improve healthcare through Big Data Healthcare, a wholly owned indirect subsidiary.

Fifth Third’s annual filing in 2024 reflects an uptick in the bank’s technology and communications spending, showing a roughly 14% increase compared to 2022. Fifth Third takes a highly intentional and risk-minded approach to AI, having spent the better part of 2024 focused on establishing key governance foundations before rolling out AI capabilities. Additionally, they concentrate deployment on areas where AI brings genuine business value.

This article examines two AI use cases at Fifth Third Bank:

- Conversational AI for streamlined customer service delivery: Leveraging natural language processing (NLP) to reduce calls received by human agents by double-digit percentages and saving millions in costs.

- Surfacing customer satisfaction scores: Leveraging analytics to analyze customer interactions and improve agent performance.

Redefining Customer Service with Conversational AI

The onset of the pandemic in early 2020 brought the stress of heavy call volumes on contact centers to the forefront. One study emphasizes how a voice-first approach is needed to help contact centers handle a sudden surge in calls.

Like many companies, Fifth Third Bank saw customer inquiries skyrocket at the start of the pandemic. The bank had already been piloting the concept of a chatbot in the years immediately leading up to the pandemic. Then, in early 2020, as the pandemic unfolded, Fifth Third quickly realized it had to help its overwhelmed call center.

Before version 2.0 of Jeanie, the bank’s chatbot, customer service agents on the call center floor had to scroll up and read the entire conversation history to better understand customers’ needs and personalized requests. Additionally, while Jeanie 1.0 could provide step-by-step instructions to complete specific tasks, it had the following issues:

- Could not always provide the correct answers to customers’ questions

- Connected customers to agents when it wasn’t necessary

- Required agents to manually search for and use a variety of content to perform different tasks when helping customers, and to find the specific script telling them what information to send to the customer

- Required agents to create their own scripts to answer more complex questions

Screenshot of the Zelle Flow for Jeanie 1.0 showing the problematic early default to agent escalation (Source: UC Center for Business Analytics)

Jeanie’s natural language understanding model is built on LivePerson’s conversational platform and uses traditional AI, according to Senior Director of Conversational AI at Fifth Third Bank, Michelle Grimm.

Jeanie 2.0 is more user-friendly for the Fifth Third agents using it. It uses LivePerson’s predefined content library to streamline and increase efficiency for agents when they are helping customers. The documentation outlines the benefits of using predefined content, including:

- Saves time for agents

- Ensures consistent, error-free responses

- Maintains a professional tone of voice

Fifth Third Bank’s Annual Report highlights the following benefits of Jeanie:

- Reduced calls requiring a live agent by nearly 10%

- Generated over $10 million in annual savings

- Improved customer satisfaction

- Improved employee retention

- Shortened account opening times by more than 60%

The same report also states that Jeanie underwent a significant update in October 2023, which expanded Jeanie’s capabilities by over 300%.

Alex Ross, Sr. Content Producer for LivePerson, explained additional benefits in a blog post, including Jeanie’s ability to respond to over 150 intents and over 30,000 phrases with over 95% accuracy.

Improving Customer Experience (CX) with AI-surfaced Customer Satisfaction Scores

Traditionally, companies use a survey-based system to manage customer experience and agent performance, and Fifth Third Bank was no exception. The bank primarily relied on customer surveys to gain insight into how customers viewed their interactions with the contact center. However, this method has notable shortcomings, especially in an industry where 88% of bank customers report that customer experience is as important as, or even more important than, the actual products and services. These limitations include:

- Low visibility for managers into agent contributions to customer experience

- Identification of only a limited range of coachable topics

Also, metrics such as average handle time were used to evaluate agent performance. With an increase in automation, average handle time loses its ability to serve as an effective metric. The reason is that automation results in the more complex issues being surfaced to agents, which leads to an increase in average handle time. Fifth Third transitioned away from focusing on surveys in favor of sentiment analysis.

According to the case study published by NiCE, Fifth Third had multiple goals related to customer experience and satisfaction, including:

- Reach the top of independent third-party customer experience rankings

- Gain customer sentiment metrics from every interaction

- Obtain insights from a more representative group of bank customers

- Replace the costly, limited-utility survey program

Fifth Third Bank began using Enlighten AI and Nexidia Analytics by NiCE in the hopes of reaching those goals and improving how they coach 700 agents across 3 locations. More specifically, the sentiment score from Enlighten allows Fifth Third to find an ideal range for average handle time for its agents.

In the video below, Michelle Grimm, Senior Director of Conversational AI at Fifth Third Bank, explains how the bank has improved agent feedback with Enlighten AI, citing metrics like average handle time at around the 1:21 mark:

Grimm explains how Fifth Third was able to use NiCE Enlighten to identify the optimal range for average handle time (AHT): once a call runs past the upper end of that range, sentiment declines. The optimal range identified was 3-5 minutes.
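To make the idea of an "optimal AHT range" concrete, here is a minimal Python sketch of one way such a range could be read off sentiment data. It is not how NiCE Enlighten works, which the source does not describe. The function name `optimal_aht_range`, the one-minute bucketing, the `tolerance` parameter, and the sample numbers are all assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def optimal_aht_range(interactions, tolerance=0.05):
    """Return (low, high) minute bounds where mean sentiment stays near its peak.

    interactions: iterable of (handle_time_minutes, sentiment_score) pairs.
    tolerance: how far below the best bucket's mean a neighboring bucket may
               fall and still count as part of the range (hypothetical knob).
    """
    buckets = defaultdict(list)
    for minutes, sentiment in interactions:
        buckets[int(minutes)].append(sentiment)  # group calls into one-minute buckets

    means = {minute: mean(scores) for minute, scores in buckets.items()}
    best_minute = max(means, key=means.get)
    floor = means[best_minute] - tolerance

    # Grow the range outward from the best bucket while sentiment holds up.
    low = high = best_minute
    while low - 1 in means and means[low - 1] >= floor:
        low -= 1
    while high + 1 in means and means[high + 1] >= floor:
        high += 1
    return low, high + 1  # bucket m covers [m, m + 1), so the upper bound is high + 1


# Made-up sample data, for illustration only.
sample = [
    (1.5, 0.40), (2.2, 0.55), (3.1, 0.80), (3.8, 0.82),
    (4.4, 0.79), (4.9, 0.81), (5.5, 0.60), (6.7, 0.45),
]
print(optimal_aht_range(sample))  # -> (3, 5)
```

With this invented data, mean sentiment peaks in the 3- and 4-minute buckets and drops off on either side, so the sketch reports a 3-to-5-minute range. A real analysis would need far more interactions per bucket and a more careful definition of "declines."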

Fifth Third uses the sentiment scores to refine the coaching process and ultimately improve customer interactions. Grimm mentions that her team uses a color-coded system consisting of a standard red, yellow, and green distribution to focus on positive behaviors for reinforcement and address areas for improvement.

The sentiment scores have served as a basis for improved collaboration across Fifth Third’s three geographically distributed call sites: Cincinnati, Grand Rapids, and the Philippines.

The bank also rolled out Nexidia Analytics in 2021. As part of that effort, they analyzed over 15.7 million interactions involving 2,300 agents.

With the shift from survey to sentiment, Fifth Third Bank saw some immediate benefits, including improved employee productivity, higher employee compliance, and lower costs as speech analytics identified processes that could be automated.

Increased sentiment scores can have wide-reaching effects across the entire enterprise over time. In the above video, Grimm is emphatic that positive feedback to agents translates into a better customer experience. This is why Grimm says that it’s essential for agents to hone the areas in which they’re already good as well as focus on areas for improvement. She fully recognizes how customer-based the banking industry is, which is why improving sentiment scores is so vital.

Over time, sustained increased sentiment helps to:

- Strengthen customer loyalty

- Reinforce the bank’s reputation

- Reduce customer churn

- Reduce compliance risks

A 2023 Bain & Company report shows consumers who give high loyalty scores cost less to serve and spend more with their bank. Additionally, they are more likely to recommend their bank to family and friends.

While Fifth Third has not published specific figures, the bank has indicated in press materials that the use of NiCE Enlighten AI and Nexidia Analytics resulted in increased sentiment scores and lower costs.


AI shame grips the present generation: What’s driving Gen Z’s anxiety over artificial intelligence

Artificial intelligence is no longer a futuristic concept; it is the invisible engine powering modern workplaces. Yet, for Gen Z, this engine often hums with an undercurrent of anxiety. While older generations might approach AI with caution or curiosity, the youngest professionals find themselves thrust into a paradox: they are expected to master AI tools instantly, yet rarely receive guidance, training, or institutional support. The result is a pervasive tension, now recognized as “AI shame,” where employees hide their reliance on technology or feign expertise to avoid judgment.

A 2025 survey by WalkMe, an SAP company, highlights the stark reality. Nearly 89% of Gen Z workers use AI to complete professional tasks, but 62% hide their AI usage, and 55% pretend to understand tools in meetings. In essence, the generation most comfortable with technology is also the most anxious about being judged for it. This tension is intensified by a lack of formal training: only 6.8% of Gen Z employees report receiving extensive guidance, while 13.5% receive none. With little structured support, most are left to navigate complex AI tools independently, often resorting to unsanctioned solutions that further heighten workplace insecurity.

The roots of Gen Z anxiety

The unease is not just about capability; it is about expectation and pressure. AI, while designed to increase productivity, paradoxically slows down many young workers: 65% of Gen Z respondents report that AI complicates rather than simplifies their workflows. Meanwhile, 68% feel pressured to produce more, and nearly one in three are deeply concerned about AI’s long-term impact on their careers.

Another critical factor is the emerging “AI class divide.” Training and support are often disproportionately provided to executives and senior employees. Only 3.7% of entry-level staff (largely Gen Z) receive substantial guidance, compared with 17% of C-suite leaders. The consequence is a workforce where the heaviest users of AI are the least supported, intensifying stress and limiting the effective use of technology.

Turning anxiety into opportunity: Practical strategies for Gen Z

Despite these challenges, there are actionable ways for Gen Z to reclaim agency over AI:

- Proactive skill-building: Seek structured learning opportunities—online courses, internal workshops, or mentorship programs—to gain confidence and reduce reliance on guesswork.

- Collaborative learning: Form peer networks within or outside the organization to share AI strategies, troubleshoot issues, and create a support system for knowledge exchange.

- Transparent documentation: Keep records of AI-assisted work. Transparency in how AI is applied can reduce fear of judgment and demonstrate competence to supervisors.

- Prioritize critical thinking: AI is a tool, not a replacement for human judgment. Developing problem-solving skills ensures decisions remain robust and independent, reducing over-reliance on automation.

- Experiment strategically: Controlled experimentation with AI allows workers to learn without fear. Framing errors as learning opportunities fosters resilience and self-confidence.

The broader implication: Rethinking workplace AI culture

The Gen Z experience illustrates a broader challenge for organizations: AI adoption without support creates not efficiency, but anxiety. MIT research suggests a 95% failure rate for generative AI pilots at large enterprises, reflecting the gap between the theoretical promise of AI and its real-world application. Companies that fail to train and support employees risk a workforce where innovation coexists with stress, and potential remains unrealized.

Ultimately, for Gen Z, mastering AI is not only a technological challenge but a psychological one. By embracing education, collaboration, and critical thinking, young professionals can transform AI from a source of silent anxiety into a catalyst for career growth. The task for organizations is clear: provide guidance, encourage experimentation, and recognize that the human side of AI is just as important as its computational power.

-

Business3 days ago

Business3 days agoThe Guardian view on Trump and the Fed: independence is no substitute for accountability | Editorial

-

Tools & Platforms3 weeks ago

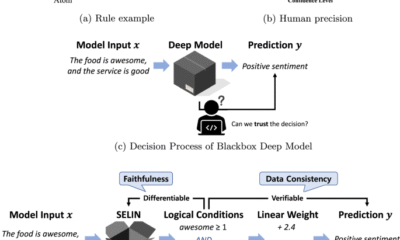

Building Trust in Military AI Starts with Opening the Black Box – War on the Rocks

-

Ethics & Policy1 month ago

Ethics & Policy1 month agoSDAIA Supports Saudi Arabia’s Leadership in Shaping Global AI Ethics, Policy, and Research – وكالة الأنباء السعودية

-

Events & Conferences3 months ago

Events & Conferences3 months agoJourney to 1000 models: Scaling Instagram’s recommendation system

-

Jobs & Careers2 months ago

Jobs & Careers2 months agoMumbai-based Perplexity Alternative Has 60k+ Users Without Funding

-

Funding & Business2 months ago

Funding & Business2 months agoKayak and Expedia race to build AI travel agents that turn social posts into itineraries

-

Education2 months ago

Education2 months agoVEX Robotics launches AI-powered classroom robotics system

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoHappy 4th of July! 🎆 Made with Veo 3 in Gemini

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoOpenAI 🤝 @teamganassi

-

Mergers & Acquisitions2 months ago

Mergers & Acquisitions2 months agoDonald Trump suggests US government review subsidies to Elon Musk’s companies