Ethics & Policy

Open Letter & MAIEI’s Next Chapter, AI Risks in Healthcare, Governance Gaps, and more.

Welcome to this edition of the Montreal AI Ethics Institute’s newsletter. Published bi-weekly, The AI Ethics Brief is designed to keep you informed about the rapidly evolving world of AI ethics by providing summaries of key research, insightful reporting, and thoughtful commentary. Learn more about us at montrealethics.ai/about.

Dear MAIEI Community,

The past few months have been a time of profound loss and reflection for all of us at the Montreal AI Ethics Institute (MAIEI). On September 30, we lost our dear friend and founder, Abhishek Gupta. His vision, brilliance, and tireless dedication to fostering AI ethics and responsible AI shaped MAIEI into what it is today and have inspired countless individuals worldwide, including myself.

As co-founder of MAIEI, I’ve reflected deeply on the path forward, consulting with close friends, colleagues, advisors, and our small but dedicated team. In moments of grief, closing MAIEI felt like the most straightforward option, but I quickly realized that the work we’ve built and the global community we’ve fostered over the past seven years are far too important to let go.

Abhishek’s loss is immeasurable.

My goal is not to fill his shoes but to honour his legacy by stewarding MAIEI into its next chapter—building a financially sustainable organization, expanding our reach, and ensuring its long-term resilience.

Here’s how we plan to move forward:

- Growing Our Impact: Continuing The AI Ethics Brief bi-weekly, starting with this edition, to grow our subscriber base from 15,000 to 100,000 and beyond.

- Strengthening Our Community: Inviting past contributors and partners to reconnect with MAIEI, share updates on your work, and collaborate on new opportunities.

- Honouring Abhishek’s Legacy: Developing a memorial scholarship or seminar series at McGill University, his alma mater, to inspire future leaders in AI ethics.

- Ensuring Sustainability and Growth: Building a fully functioning institute with paid staff, researchers, and funded projects supported by direct donations, corporate sponsorships, and grant opportunities.

You can read my full reflections: Open Letter: Moving Forward Together – MAIEI’s Next Chapter.

In addition, you can find my remarks from the recent Montreal Startup Community Awards 2024 here. At the event, we honoured Abhishek’s life and legacy. Many thanks to Ilias Benjelloun, Simran Kanda, and the Montréal NewTech and Startupfest teams for their kind invitation and support.

Abhishek’s parents, Mr. Ashok Gupta and Mrs. Asha Gupta, and his brother Abhijay were present to celebrate his remarkable contributions. A memorial for Abhishek is being planned in Montreal for January 2025, with details to follow.

Thank you for being part of this journey as we navigate this challenging transition and continue shaping the future of AI ethics together.

Renjie Butalid

Co-founder & Director

Montreal AI Ethics Institute

Watch the tribute video below, presented at the Montreal Startup Community Awards on November 28, 2024. (Read BetaKit’s coverage here). Featuring Abhishek’s own words, this video beautifully captures his vision and passion. Edited by George Popi, Khaos.

Video credits (YouTube): Green Software Foundation, Brookfield Institute for Innovation + Entrepreneurship, Peter Carr.

💖 Help us keep The AI Ethics Brief free and accessible for everyone. Consider becoming a paid subscriber on Substack for the price of a ☕ or make a one-time or recurring donation at montrealethics.ai/donate.

Your support sustains our mission of Democratizing AI Ethics Literacy, honours Abhishek Gupta’s legacy through initiatives like a planned memorial scholarship or seminar series at McGill University, his alma mater, and ensures we can continue serving our community.

For corporate partnerships or larger donations, please contact us at support@montrealethics.ai.

- A collection of principles for guiding and evaluating large language models

- Risk of AI in Healthcare: A Study Framework

- FairQueue: Rethinking Prompt Learning for Fair Text-to-Image Generation (NeurIPS 2024)

- AI Missteps Could Unravel Global Peace and Security – IEEE Spectrum

- I’m a neurology ICU nurse. The creep of AI in our hospitals terrifies me – Coda

- AI as an Invasive Species – Digital Public

- Atlantic Council – AI Connect II webinar: Sustainable development and inclusive growth of AI featuring Abhishek Gupta.

- U.S. Department of Commerce & U.S. Department of State Launch the International Network of AI Safety Institutes at Inaugural Convening in San Francisco

The recent killing of Brian Thompson, CEO of UnitedHealthcare—the largest health insurance company in the US—has drawn fresh attention to the ethical challenges of AI deployment in healthcare, particularly in insurance claims processing.

STAT News, a 2024 Pulitzer Prize Finalist in Investigative Reporting, uncovered that UnitedHealth pressured employees to use nH Predict, an AI model by NaviHealth, to prematurely deny payments by predicting patient lengths of stay. The model often overrode doctors’ judgments, wrongfully denying critical health coverage to elderly patients. Unlike clinical AI tools, these algorithms lack proper oversight despite their growing influence in coverage decisions.

As STAT reports:

One of the biggest and most controversial companies behind these models, NaviHealth, is now owned by UnitedHealth Group… These tools are becoming increasingly influential in decisions about patient care and coverage. For all of AI’s power to crunch data, insurers with huge financial interests are leveraging it to make life-altering decisions with little independent oversight.

AI models used by physicians to detect diseases such as cancer, or suggest the most effective treatment, are evaluated by the Food and Drug Administration. But tools used by insurers in deciding whether those treatments should be paid for are not subjected to the same scrutiny, even though they also influence the care of the nation’s sickest patients.

The impact is stark:

This has resulted in patients being kicked out of rehabilitation programs and care facilities far too early, forcing them to drain their life savings to obtain needed care that should be covered under their government-funded Medicare Advantage Plan. (Source: Ars Technica)

Key ethical concerns:

- Transparency gaps: Insurers’ algorithms lack the oversight applied to clinical AI tools.

- Patient harm: Flawed predictions lead to life-altering decisions, undermining medically necessary care.

- Regulatory mismatch: While clinical AI faces FDA regulation, insurer algorithms influencing coverage remain unregulated.

This case raises urgent questions about accountability and governance in using AI to influence healthcare decisions.

Read the full series of investigations by STAT News here.

Did we miss anything?

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

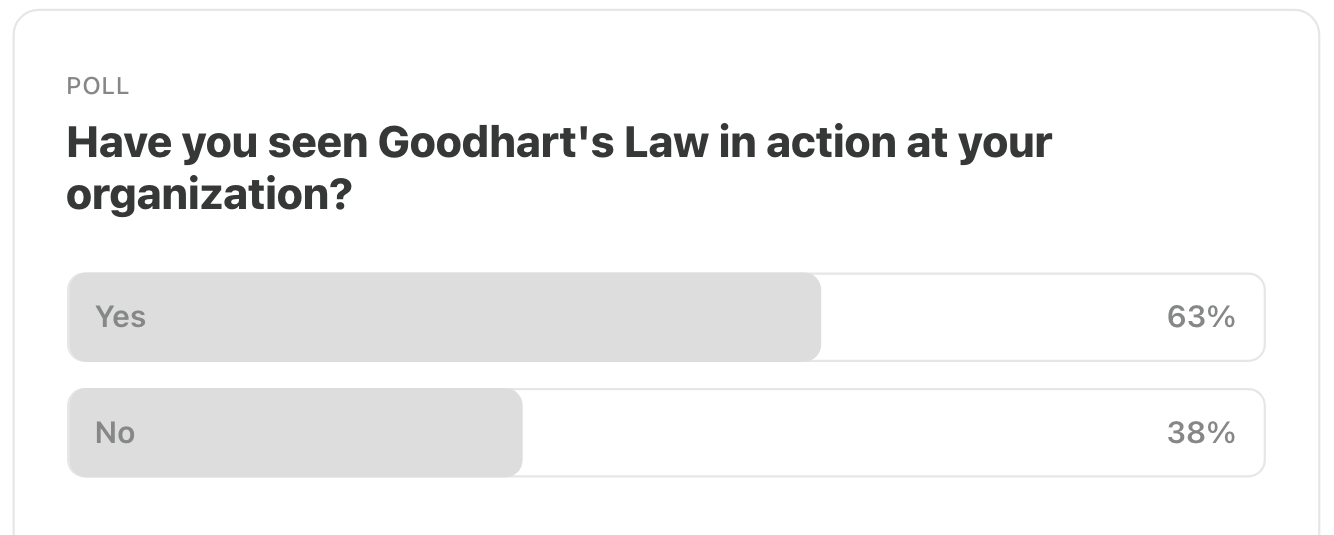

Here are the results from the previous edition for this segment:

The results from the poll in our previous edition revealed that 63% of respondents have observed Goodhart’s Law in action within their organizations. This principle, which states that “when a measure becomes a target, it ceases to be a good measure,” highlights the risks of over-relying on metrics. This is especially relevant in programs like Responsible AI (RAI), where metrics are introduced to track progress and align goals but can sometimes lead to system gaming.

A recent article in MIT Technology Review, titled “The way we measure progress in AI is terrible,” illustrates this challenge in the context of AI benchmarks:

Every time a new AI model is released, it’s typically touted as acing its performance against a series of benchmarks. OpenAI’s GPT-4o, for example, was launched in May with a compilation of results that showed its performance topping every other AI company’s latest model in several tests.

The problem is that these benchmarks are poorly designed, the results hard to replicate, and the metrics they use are frequently arbitrary, according to new research. That matters because AI models’ scores against these benchmarks will determine the level of scrutiny and regulation they receive.

This disconnect between benchmarks and real-world outcomes creates significant risks, as poorly designed metrics can drive organizations to optimize for superficial targets rather than meaningful progress.

How is your organization approaching AI adoption amidst all the hype? Are you prioritizing impactful projects, experimenting broadly, or waiting for proven use cases? Or is aligning AI initiatives with your goals a challenge?

Share your thoughts with the MAIEI community:

Should we communicate with the dead to assuage our grief? An Ubuntu perspective on using griefbots

MAIEI’s Director of Partnerships, Connor Wright, recently explored this question in a thought-provoking paper. Drawing on Ubuntu Ethics—a Southern African philosophy centered on community and relationships—Connor examines the emerging use of griefbots (digital representations of deceased loved ones). His work argues that griefbots can offer meaningful connections for the bereaved, providing moral and ethical frameworks for their use.

The paper also addresses important considerations, such as privacy, commercialization, and the potential risks of replacing human relationships. Ultimately, Connor concludes that an Ubuntu perspective offers a valuable toolkit for navigating this sensitive intersection of grief and technology.

To dive deeper, read the full paper here.

Canada’s upcoming G7 presidency in 2025 offers a unique opportunity for MAIEI to shape global policy discussions. Through the Think7 (T7) process, organized by the Centre for International Governance Innovation (CIGI), contributions are being invited for policy briefs on key areas such as transformative technologies, digitalization of the global economy, environmental sustainability, and global peace and security.

Drawing on insights from our community of over 15,000 subscribers, we aim to highlight the most critical issues in AI ethics today.

Which AI governance gaps should we bring to the G7’s attention?

We’d love to hear your thoughts! If your institution is interested in partnering with us on this initiative, please get in touch. Leave a comment using the button below or reach out directly here.

A collection of principles for guiding and evaluating large language models

This paper addresses the challenges in assessing and guiding the behavior of large language models (LLMs). It proposes a set of core principles based on an extensive review of literature from a wide variety of disciplines, including explainability, AI system safety, human critical thinking, and ethics.

To dive deeper, read the full summary here.

Risk of AI in Healthcare: A Study Framework

AI and its applications have found their way into many industrial and day-to-day activities through advanced devices and consumers’ reliance on the technology. One such domain is the multibillion-dollar healthcare industry, which relies heavily on accurate diagnosis and precision-based treatment to ensure that patients are relieved of their illnesses in an efficient and timely manner. This paper explores the risks of AI in healthcare by reviewing the current literature in detail and developing a concise study framework to help industrial and academic researchers better understand the other side of AI.

To dive deeper, read the full summary here.

FairQueue: Rethinking Prompt Learning for Fair Text-to-Image Generation (NeurIPS 2024)

This paper will be presented at NeurIPS 2024, which takes place in Vancouver, Canada, from December 10-15, 2024. It explores advancements in fair text-to-image (T2I) diffusion models, contributing to the growing body of research on fairness in generative AI. The study introduces FairQueue, a novel framework designed to achieve high-quality and fair T2I generation. Current T2I methods, like Stable Diffusion, often suffer from bias and quality degradation when addressing fairness. FairQueue tackles these challenges using two key strategies, Prompt Queuing and Attention Amplification, which enhance image quality and semantic preservation while achieving competitive fairness.

To dive deeper, read the full summary here.

AI Missteps Could Unravel Global Peace and Security – IEEE Spectrum

- What happened: Earlier this year, an article co-authored by Abhishek Gupta and a global team of experts was published in IEEE Spectrum. The piece highlights the potential risks AI technologies pose to international peace and security, emphasizing the role of AI practitioners in mitigating these risks throughout the lifecycle of AI development. It details the dual-use nature of AI, with applications ranging from benign innovations to tools for disinformation, cyberattacks, and even biological weapons production. The article also examines indirect implications, such as decisions about open-sourcing AI technologies, which could have significant geopolitical consequences.

- Why it matters: As advancements in AI accelerate, their integration into civilian and military applications poses unprecedented challenges. The authors argue that AI companies, researchers, and developers must take responsibility for the societal and security impacts of their work. They emphasize that education and training in responsible AI practices are essential to equipping practitioners to identify and address risks. This message highlights a critical need within the AI ethics community, particularly as discussions about global regulation gain momentum. The article serves as a vital call to action for policymakers to address the gaps in governance frameworks for dual-use AI technologies.

- Between the lines: This article stands as one of Abhishek Gupta’s final contributions to the field, reflecting his enduring commitment to responsible AI innovation. Its publication reinforces the importance of equipping AI practitioners with both the technical and ethical tools needed to navigate the complex societal impacts of their work. The authors suggest concrete steps, such as integrating courses on AI governance into academic curriculums and encouraging lifelong learning through professional development programs. As the global community increasingly confronts the challenges posed by AI, this work provides a foundational perspective for aligning innovation with ethical standards and international security goals.

To dive deeper, read the full article here.

I’m a neurology ICU nurse. The creep of AI in our hospitals terrifies me – Coda

- What happened: A neurology ICU nurse shares her firsthand experience with the integration of AI systems in hospital settings, emphasizing the unsettling challenges they pose. From predictive algorithms to robotic companions, AI is increasingly shaping healthcare decisions, often at the expense of human intuition and patient-centered care. The article highlights instances where AI-driven tools have led to questionable outcomes, raising concerns about overreliance on these tools and their lack of accountability.

- Why it matters: The article highlights the ethical and practical dilemmas of embedding AI in critical care. While AI promises to improve efficiency and outcomes, its deployment without adequate oversight risks compromising patient safety and eroding trust. The nurse’s perspective sheds light on the limitations of AI tools, particularly when they override medical judgment or fail to adapt to complex, real-world scenarios. These issues stress the importance of balancing technological advancement with human expertise in healthcare.

- Between the lines: This narrative illustrates the broader implications of unchecked AI integration in mission-critical domains. It calls for a re-evaluation of how and where AI is applied, advocating for greater collaboration between technologists and medical professionals. The article serves as a reminder that while AI has transformative potential, its deployment must be cautious, ethical, and centred around the needs of patients and providers.

To dive deeper, read the full article here.

AI as an Invasive Species – Digital Public

- What happened: This article explores the concept of artificial intelligence (AI) as an “invasive species” within the digital and societal ecosystems. Drawing parallels to invasive biological species, the piece critiques how AI technologies, originally developed to optimize human activities, often lead to unintended, widespread consequences that disrupt ecosystems. It highlights the fundamental flaws in the business models underpinning AI technologies, which incentivize scale and dependency over sustainable and ethical growth.

- Why it matters: The article introduces a thought-provoking analogy that positions AI as a force reshaping human relationships and ecosystems, often to their detriment. By focusing on rapid adoption, commodification, and scalability, AI systems risk exacerbating inequality, environmental degradation, and societal harms. This framing is crucial for policymakers and technologists to reconsider the unchecked proliferation of AI and ensure it aligns with broader societal and ecological goals.

- Between the lines: The author highlights the extractive and exploitative nature of AI deployment, likening its growth to historical trends in industrial and technological revolutions. The piece challenges the prevailing narratives of AI as a purely beneficial force and urges governments, technology providers, and investors to prioritize not just regulation but also restorative measures. By embracing this perspective, stakeholders can address the root causes of AI’s disruptions while working to preserve the ecosystems, both human and natural, that it impacts.

To dive deeper, read the full article here.

How is “Ethical Debt” relevant to AI Ethics?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

In the fourth webinar of AI Connect II, hosted by the US Department of State and the Atlantic Council’s GeoTech Center on June 5, 2024, a discussion featuring Abhishek Gupta focused on sustainable development and inclusive growth through artificial intelligence (AI).

To dive deeper, read more details here.

Last month, the U.S. Departments of Commerce and State launched the International Network of AI Safety Institutes during a convening in San Francisco. The United States will serve as the inaugural chair of this initiative, whose initial members include Australia, Canada, the European Union, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States. This network aims to address AI safety and governance challenges through global collaboration, best practices, and multidisciplinary research, with over $11 million committed to advancing its goals. This launch coincides with Canada establishing its own Canadian Artificial Intelligence Safety Institute (CAISI), further emphasizing the growing global focus on ethical and secure AI development.

For further details, read the full announcement here.

Ethics in Artificial Intelligence (AI) can emerge in many ways. This paper addresses developmental methodologies, including top-down, bottom-up, and hybrid approaches to ethics in AI, from theoretical, technical, and political perspectives. Case studies illustrating the complexity of AI ethics are discussed to provide a global perspective on this challenging and often overlooked area of research.

To dive deeper, read the full article here.

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.

5 interesting stats to start your week

Third of UK marketers have ‘dramatically’ changed AI approach since AI Act

More than a third (37%) of UK marketers say they have ‘dramatically’ changed their approach to AI since the introduction of the European Union’s AI Act a year ago, according to research by SAP Emarsys.

Additionally, nearly half (44%) of UK marketers say their approach to AI is more ethical than it was this time last year, while 46% report a better understanding of AI ethics, and 48% claim full compliance with the AI Act, which is designed to ensure safe and transparent AI.

The act sets out a phased approach to regulating the technology, classifying models into risk categories and setting up legal, technological, and governance frameworks which will come into place over the next two years.

However, some marketers are sceptical about the legislation, with 28% raising concerns that the AI Act will lead to the end of innovation in marketing.

Source: SAP Emarsys

Shoppers more likely to trust user reviews than influencers

Nearly two-thirds (65%) of UK consumers say they have made a purchase based on online reviews or comments from fellow shoppers, as opposed to 58% who say they have made a purchase thanks to a social media endorsement.

Sports and leisure equipment (63%), decorative homewares (58%), luxury goods (56%), and cultural events (55%) are identified as product categories where consumers are most likely to find peer-to-peer information valuable.

Accurate product information was found to be a key factor in whether a review was positive or negative. Two-thirds (66%) of UK shoppers say that discrepancies between the product they receive and its description are a key reason for leaving negative reviews, whereas 40% of respondents say they have returned an item in the past year because the product details were inaccurate or misleading.

According to research by Akeeno, purchases driven by influencer activity have also declined since 2023, with 50% reporting having made a purchase based on influencer content in 2025 compared to 54% two years ago.

Source: Akeeno

77% of B2B marketing leaders say buyers still rely on their networks

When vetting what brands to work with, 77% of B2B marketing leaders say potential buyers still look at the company’s wider network as well as its own channels.

Given the amount of content professionals are faced with, they are more likely to rely on other professionals they already know and trust, according to research from LinkedIn.

More than two-fifths (43%) of B2B marketers globally say their network is still their primary source for advice at work, ahead of family and friends, search engines, and AI tools.

Additionally, younger professionals surveyed say they are still somewhat sceptical of AI, with three-quarters (75%) of 18- to 24-year-olds saying that even as AI becomes more advanced, there’s still no substitute for the intuition and insights they get from trusted colleagues.

Since professionals are more likely to trust content and advice from peers, marketers are now investing more in creators, employees, and subject matter experts to build trust. As a result, 80% of marketers say trusted creators are now essential to earning credibility with younger buyers.

Source: LinkedIn

Business confidence up 11 points but leaders remain concerned about economy

Business leader confidence has increased slightly from last month, having risen from -72 in July to -61 in August.

The IoD Directors’ Economic Confidence Index, which measures business leader optimism in prospects for the UK economy, is now back to where it was immediately after last year’s Budget.

This improvement comes from several factors, including the rise in investment intentions (up from -27 in July to -8 in August), the rise in headcount expectations from -23 to -4 over the same period, and the increase in revenue expectations from -8 to 12.

Additionally, business leaders’ confidence in their own organisations is also up, standing at 1 in August compared to -9 in July.

Several factors were identified as being of concern for business leaders; these include UK economic conditions at 76%, up from 67% in May, and both employment taxes (remaining at 59%) and business taxes (up to 47%, from 45%) continuing to be of significant concern.

Source: The Institute of Directors

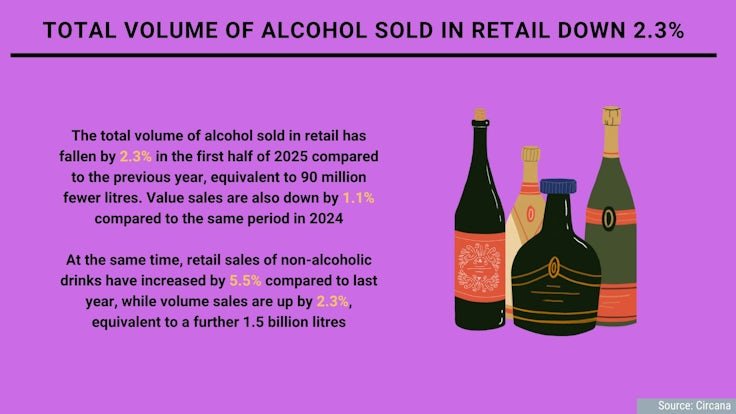

Total volume of alcohol sold in retail down 2.3%

The total volume of alcohol sold in retail has fallen by 2.3% in the first half of 2025 compared to the previous year, equivalent to 90 million fewer litres. Value sales are also down by 1.1% compared to the same period in 2024.

At the same time, retail sales of non-alcoholic drinks have increased by 5.5% compared to last year, while volume sales are up by 2.3%, equivalent to a further 1.5 billion litres.

As the demand for non-alcoholic beverages grows, people increasingly expect these options to be available in their local bars and restaurants, with 55% of Brits and Europeans now expecting bars to always serve non-alcoholic beer.

There are also shifts within the alcoholic beverages category, with value sales of no- and low-alcohol spirits rising by 16.1% and sales of ready-to-drink spirits growing by 11.6% compared to last year.

Source: Circana

AI ethics under scrutiny, young people most exposed

New reports on the rise of artificial intelligence (AI) show that incidents linked to ethical breaches have more than doubled in just two years.

At the same time, entry-level job opportunities have been shrinking, partly due to the spread of this automation.

AI is moving from the margins to the mainstream at extraordinary speed and both workplaces and universities are struggling to keep up.

Tools such as ChatGPT, Gemini and Claude are now being used to draft emails, analyse data, write code, mark essays and even decide who gets a job interview.

Alongside this rapid rollout, a March report from McKinsey, one by the OECD in July and an earlier Rand report warned of a sharp increase in ethical controversies — from cheating scandals in exams to biased recruitment systems and cybersecurity threats — leaving regulators and institutions scrambling to respond.

The McKinsey survey said almost eight in 10 organisations now used AI in at least one business function, up from half in 2022.

While adoption promises faster workflows and lower costs, many companies deploy AI without clear policies. Universities face similar struggles, with students increasingly relying on AI for assignments and exams while academic rules remain inconsistent, it said.

The OECD’s AI Incidents and Hazards Monitor reported that ethical and operational issues involving AI have more than doubled since 2022.

Common concerns included accountability (who is responsible when AI errs), transparency (whether users understand AI decisions), and fairness (whether AI discriminates against certain groups).

Many models operated as “black boxes”, producing results without explanation, making errors hard to detect and correct, it said.

In workplaces, AI is used to screen CVs, rank applicants, and monitor performance. Yet studies show AI trained on historical data can replicate biases, unintentionally favouring certain groups.

Rand reported that AI was also used to manipulate information, influence decisions in sensitive sectors, and conduct cyberattacks.

Meanwhile, 41 per cent of professionals report that AI-driven change is harming their mental health, with younger workers feeling most anxious about job security.

LinkedIn data showed that entry-level roles in the US have fallen by more than 35 per cent since 2023, while 63 per cent of executives expected AI to replace tasks currently done by junior staff.

Aneesh Raman, LinkedIn’s chief economic opportunity officer, described this as “a perfect storm” for new graduates: hiring freezes, economic uncertainty and AI disruption, as the BBC reported on August 26.

LinkedIn forecasts that 70 per cent of jobs will look very different by 2030.

Recent Stanford research confirmed that employment among early-career workers in AI-exposed roles has dropped 13 per cent since generative AI became widespread, while employment among more experienced workers and in less AI-exposed roles remained stable.

Companies are adjusting through layoffs rather than pay cuts, squeezing younger workers out, it found.

In Belgium, AI ethics and fairness debates have intensified following a scandal in Flanders’ medical entrance exams.

Investigators caught three candidates using ChatGPT during the test.

Separately, 19 students filed appeals, suspecting others may have used AI unfairly after unusually high pass rates: some 2,608 of 5,544 participants passed, but only 1,741 could enter medical school. The success rate jumped to 47 per cent, from 18.9 per cent in 2024, raising concerns about fairness and potential AI misuse.

Flemish education minister Zuhal Demir condemned the incidents, saying students who used AI had “cheated themselves, the university and society”.

Exam commission chair Professor Jan Eggermont noted that the higher pass rate might also reflect easier questions, which were deliberately simplified after the previous year’s exam proved excessively difficult, as well as the record number of participants, rather than AI-assisted cheating alone.

French-speaking universities, in the other part of the country, were not affected by this scandal, as they still conduct medical entrance exams entirely on paper, something Demir said she was considering going back to.

Governing AI with inclusion: An Egyptian model for the Global South

When artificial intelligence tools began spreading beyond technical circles and into the hands of everyday users, I saw a real opportunity to understand this profound transformation and harness AI’s potential to benefit Egypt as a state and its citizens. I also had questions: Is AI truly a national priority for Egypt? Do we need a legal framework to regulate it? Does it provide adequate protection for citizens? And is it safe enough for vulnerable groups like women and children?

These questions were not rhetorical. They were the drivers behind my decision to work on a legislative proposal for AI governance. My goal was to craft a national framework rooted in inclusion, dialogue, and development, one that does not simply follow global trends but actively shapes them to serve our society’s interests. The journey Egypt undertook can offer inspiration for other countries navigating the path toward fair and inclusive digital policies.

Egypt’s AI Development Journey

Over the past five years, Egypt has accelerated its commitment to AI as a pillar of its Egypt Vision 2030 for sustainable development. In May 2021, the government launched its first National AI Strategy, focusing on capacity building, integrating AI in the public sector, and fostering international collaboration. A National AI Council was established under the Ministry of Communications and Information Technology (MCIT) to oversee implementation. In January 2025, President Abdel Fattah El-Sisi unveiled the second National AI Strategy (2025–2030), which is built around six pillars: governance, technology, data, infrastructure, ecosystem development, and capacity building.

Since then, the MCIT has launched several initiatives, including training 100,000 young people through the “Our Future is Digital” programme, partnering with UNESCO to assess AI readiness, and integrating AI into health, education, and infrastructure projects. Today, Egypt hosts AI research centres, university departments, and partnerships with global tech companies—positioning itself as a regional innovation hub.

AI-led education reform

AI is not reserved for startups and hospitals. In April 2025, I formally submitted a parliamentary request, and another to the Deputy Prime Minister, suggesting that the government include AI education as part of a broader vision to prepare future generations, as outlined in Egypt’s initial AI strategy. In May 2025, President El-Sisi instructed the government to consider introducing AI as a compulsory subject in pre-university education. The political leadership’s support for this proposal highlighted the value of synergy between decision-makers and civil society. The Ministries of Education and Communications are now exploring how to integrate AI concepts, ethics, and basic programming into school curricula.

From dialogue to legislation: My journey in AI policymaking

As Deputy Chair of the Foreign Affairs Committee in Parliament, I believe AI policymaking should not be confined to closed-door discussions. It must include all voices. In shaping Egypt’s AI policy, we brought together:

- The private sector – from startups to multinationals, to contribute its views on regulations, data protection, and innovation.

- Civil society – to emphasise ethical AI, algorithmic justice, and protection of vulnerable groups.

- International organisations – such as the OECD, UNDP, and UNESCO, to share global best practices and experiences.

- Academic institutions – I co-hosted policy dialogues with the American University in Cairo and the American Chamber of Commerce (AmCham) to discuss governance standards and capacity development.

From recommendations to action: The government listening session

To transform dialogue into real policy, I formally asked the MCIT to host a listening session focused solely on the private sector. Over 70 companies and experts attended, sharing their recommendations directly with government officials.

This marked a key turning point, transitioning the initiative from a parliamentary effort into a participatory, cross-sectoral collaboration.

Drafting the law: Objectives, transparency, and risk-based classification

Based on these consultations, participants developed a legislative proposal grounded in transparency, fairness, and inclusivity. The proposed law includes the following core objectives:

- Support education and scientific research in the field of artificial intelligence

- Provide specific protection for individuals and groups most vulnerable to the potential risks of AI technologies

- Govern AI systems in alignment with Egypt’s international commitments and national legal framework

- Enhance Egypt’s position as a regional and international hub for AI innovation, in partnership with development institutions

- Support and encourage private sector investment in the field of AI, especially for startups and small enterprises

- Promote Egypt’s transition to a digital economy powered by advanced technologies and AI

To operationalise these objectives, the bill includes:

- Clear definitions of AI systems

- Data protection measures aligned with Egypt’s 2020 Personal Data Protection Law

- Mandatory algorithmic fairness, transparency, and auditability

- Incentives for innovation, such as AI incubators and R&D centres

- Establishment of ethics committees and training programmes for public sector staff

The draft law also introduces a risk-based classification framework, aligning it with global best practices, which categorises AI systems into three tiers:

1. Prohibited AI systems – These are banned outright due to unacceptable risks, including harm to safety, rights, or public order.

2. High-risk AI systems – These require prior approval, detailed documentation, transparency, and ongoing regulatory oversight. Common examples include AI used in healthcare, law enforcement, critical infrastructure, and education.

3. Limited-risk AI systems – These are permitted with minimal safeguards, such as user transparency, labelling of AI-generated content, and optional user consent. Examples include recommendation engines and chatbots.

This classification system ensures proportionality in regulation, protecting the public interest without stifling innovation.

Global recognition: The IPU applauds Egypt’s model

The Inter-Parliamentary Union (IPU), representing over 179 national parliaments, praised Egypt’s AI bill as a model for inclusive AI governance. It highlighted that involving all stakeholders builds public trust in digital policy and reinforces the legitimacy of technology laws.

Key lessons learned

- Inclusion builds trust – Multistakeholder participation leads to more practical and sustainable policies.

- Political will matters – President El-Sisi’s support elevated AI from a tech topic to a national priority.

- Laws evolve through experience – Our draft legislation is designed to be updated as the field develops.

- Education is the ultimate infrastructure – Bridging the future digital divide begins in the classroom.

- Ethics come first – From the outset, we established values that focus on fairness, transparency, and non-discrimination.

Challenges ahead

As the draft bill progresses into final legislation and implementation, several challenges lie ahead:

- Training regulators on AI fundamentals

- Equipping public institutions to adopt ethical AI

- Reducing the urban-rural digital divide

- Ensuring national sovereignty over data

- Enhancing Egypt’s global role as a policymaker—not just a policy recipient

Ensuring representation in AI policy

As a female legislator leading this effort, it was important for me to prioritise the representation of women, youth, and marginalised groups in technology policymaking. If AI is built on biased data, it reproduces those biases. That’s why the policymaking table must be round, diverse, and representative.

A vision for the region

I look forward to seeing Egypt:

- Advance regional AI policy partnerships across the Middle East and Africa

- Embed AI ethics in all levels of education

- Invest in AI for the public good

Because AI should serve people—not control them.

Better laws for a better future

This journey taught me that governing AI requires courage to legislate before all the answers are known—and humility to listen to every voice. Egypt’s experience isn’t just about technology; it’s about building trust and shared ownership. And perhaps that’s the most important infrastructure of all.

The post Governing AI with inclusion: An Egyptian model for the Global South appeared first on OECD.AI.
