Tools & Platforms

Never mind the botlickers, ‘AI’ is just normal technology – The Mail & Guardian

Demystify: Artificial intelligence has its uses, but it is the harms that should concern us. Photo: Flickr

Most of us know at least one slopper. They’re the people who use ChatGPT to reply to Tinder matches, choose items from the restaurant menu and write creepily generic replies to office emails. Then there’s the undergraduate slopfest that’s wreaking havoc at universities, to say nothing of the barrage of suspiciously em-dash-laden papers polluting the inboxes of academic journal editors.

Not content to merely participate in the ongoing game of slop roulette, the botlicker is a more proactive creature who is usually to be found confidently holding forth like some subpar regional TED Talk speaker about how “this changes everything”. Confidence notwithstanding, in most cases Synergy Greg from marketing and his fellow botlickers are dangerously ignorant about their subject matter — contemporary machine learning technologies — and are thus prone to cycling rapidly between awe and terror.

Indeed, for the botlicker, who possibly also has strong views on crypto, “AI” is simultaneously the worst and the best thing we’ve ever invented. It’s destroying the labour market and threatening us all with techno-fascism, but it’s also delivering us to a fully automated leisure society free of what David Graeber once rightly called “bullshit jobs”.

You’ll notice that I’m using scare quotes around the term “AI”. That’s because, as computational linguist Emily Bender and former Google research scientist Alex Hanna argue in their excellent recent book, The AI Con, there is nothing inherently intelligent about these technologies, which they describe with the more accurate term “synthetic text extrusion machines”. The acronym STEM is already taken, alas, but there’s another equally apt acronym we can use: Salami, or systematic approaches to learning algorithms and machine inferences.

The image of machine learning as a ground-up pile of random bits and pieces that is later squashed into a sausage-shaped receptacle to be consumed by people who haven’t read the health warnings is probably vastly more apposite than the notion that doing some clever — and highly computationally and ecologically expensive — maths on some big datasets somehow constitutes “intelligence”.

That said, perhaps we shouldn’t be so hard on those who, when confronted with the misleading vividness of ChatGPT and Co’s language-shaped outputs, resort to imputing all sorts of cognitive properties to what Bender and Hanna, also the hosts of the essential Mystery AI Hype Theater 3000 podcast, cheekily described as “mathy maths”. After all, as sci-fi author Arthur C Clarke reminded us, “any sufficiently advanced technology is indistinguishable from magic”, and in our disenchanted age some of us are desperate for a little more magic in our lives.

Slopholm Syndrome notwithstanding, if we are to have useful conversations about machine learning then it’s crucial that instead of succumbing to the cheap parlour tricks of Silicon Valley marketing strategies — which are, tellingly, constructed around the exact-same mix of infinite promise and terrifying existential risk their pro-bono shills the botlickers always invoke — we pay attention to the men behind the curtain and expose “AI” for what it is: normal technology.

This, of course, means steering away both from hyperbolic claims about the imminent emergence of “AGI” (artificial general intelligence) that will solve all of humanity’s most pressing problems and from the crude Terminator-style dystopian sci-fi scenarios that populate the fever dreams of the irrational rationalists (beware, traveller, for this way lie Roko’s Basilisk and the Zizians).

More fundamentally, it also means taking a step back to examine some of the underlying social drivers that have caused such widespread apophenia (a kind of hallucination where you see patterns that aren’t there — it’s not just the “AI” that hallucinates, it’s causing us to see things too).

Most obviously in this regard, when confronted with the seemingly intractable and compounded social and ecological crises of the current moment, deferring to techno-solutionism is a reasonable strategy to ward off the inevitable existential dread of life in the Anthropocene. For many people, things are as the philosopher of technology Heidegger once said at the end of his late-life interview: “Only a God can save us.” Albeit in this case a bizarre techno-theological object built from maths, server farms full of expensive graphics cards and other people’s dubiously obtained data.

Beyond this, we should acknowledge that the increasing social, political, technological and ethical complexity of the world can leave us all scrambling for ways to stabilise our meaning-making practices. As the rug of certainty is pulled from under our feet at an ever-accelerating pace, it’s no wonder that we tend to experience an increased need for some sense of certainty, whether grounded in fascist demagoguery, phobic responses to the leakiness and fluidity of socially constructed categories or the synthetic dulcet tones of chatbots that have, here in the Eremocene (the Age of Loneliness), become our friends, partners, therapists and infallible tomes of wisdom.

From the Pythia, who served as the Oracle of Delphi and allayed the fears of ancient Greeks during times of unrest, to the Python code that allows us to interface with our new oracles, the desire for existential certainty is far from new. In a time where a sense of agency and sufficient grasp on the world has been wrested from most of us, however, and where our feeds are a never-ending barrage of wars, genocides and ecological collapses we feel powerless to stop, the desire for some source of stable knowledge, some all-knowing benevolent force that grants us a sense of vicarious power if we can learn to master it (just prompt better), has possibly never been stronger.

ChatGPT, Grok, Claude, Gemini and Co, however, are not oracles. They are mathematically sophisticated games played with giant statistical databases. Recall in this regard that very few people assume any kind of intelligence, reasoning or sensory experience when using Midjourney and other early image generators that are built using the same contemporary machine learning paradigm as LLMs. We know they are just clever code.

But if we don’t regard Midjourney as some kind of sentient algorithmic overlord simply because it produces outputs that cluster pixels together in interesting ways, why would we regard LLMs as more than maths and datasets just because they produce outputs that cluster syntax together in interesting ways? Just as a picture of a bird cannot fly no matter how realistically it is drawn, so too is a picture of the language-using faculties of human beings not language and thus not reflective of anything deeper than next token prediction, hence Bender and Hanna’s delightful term “language-shaped”.

In light of the above, I’d like to suggest that we approach these novel technologies from at least two angles. On the one hand, it’s urgent that we demystify them. The more we succumb to a contemporary narcissism-fuelled variation of the Barnum effect, the less we’ll be able to reach informed decisions about regulating “AI” and the more we’ll be stochastically parroting the good-cop, bad-cop variants of Silicon Valley boosterism to further line the pockets of the billionaire tech oligarchs riding the current speculative bubble while they bankroll neofascism.

On the other hand, we should start paying less attention to the TESCREALists (transhumanism, extropianism, singularitarianism, cosmism, rationalism, effective altruism and longtermism; you know the type) and their “AI” shock doctrine and focus more on the current real-world harms being caused by the zealous adoption of commercial “AI” products by every middle manager, university bureaucrat or confused citizen who doesn’t want to be left behind (which, if left unchecked, tends to lead to what critical “AI” theorist Dan McQuillan terms algorithmic Thatcherism).

These two tasks need to be approached together. It’s no use trying to mitigate actual ethical harms — the violence caused by algorithmic bias, for instance — if we do not have at least a rudimentary grasp of what synthetic text extrusion machines do, and vice-versa. In approaching these tasks, we should also challenge the rhetoric of inevitability. No technology, whether laser discs, blockchain, VR or LLMs, necessarily ends up being adopted by society in the form intended by its most enthusiastic proselytes and the history of technology is also a history of failures and resistance.

Finally, and perhaps most importantly, we should take great care not to fall into the trap of believing that critical thought, whether at universities, in the workplace or in the halls of power, is something that can or should be algorithmically optimised. Despite the increasing neoliberalisation of these sectors, which itself encourages the logic of automation and quantifiable outputs, critical thought — real, difficult thought grounded in uncertainty, finitude and everything else that makes us human — has perhaps never been so important.

How AI Can Strengthen Your Company’s Cybersecurity – New Technology

Key Takeaways:

- Using AI cybersecurity tools can help you detect threats faster, reduce attacker dwell time, and improve your organization’s overall risk posture.

- Generative AI supports cybersecurity compliance by accelerating breach analysis, reporting, and regulatory disclosure readiness.

- Automating cybersecurity tasks with AI helps your business optimize resources, boost efficiency, and improve security program ROI.

Cyber threats are evolving fast, and your organization can’t afford to fall behind. Whether you’re in healthcare, manufacturing, entertainment, or another dynamic industry, the need to protect sensitive data and maintain trust with stakeholders is critical.

With attacks growing in volume and complexity, artificial intelligence (AI) offers powerful support to help you detect threats earlier, respond faster, and stay ahead of changing compliance demands.

Why AI Is a Game-Changer in Cybersecurity

Your business is likely facing more alerts and threats than your team can manually manage. Microsoft reports that its customers face over 600 million cyberattacks daily, far beyond human capacity to monitor alone.

AI tools can help by automating key aspects of your cybersecurity strategy, including:

- Real-time threat detection: With “zero-day attack detection”, machine learning identifies anomalies outside of known attack signatures to flag new threats instantly.

- Automated incident response: From triaging alerts to launching containment measures without waiting on human intervention.

- Security benchmarking: Measuring your defenses against industry standards to highlight areas for improvement.

- Privacy compliance support: Tracking data handling and reporting to meet regulatory requirements with less manual oversight.

- Vulnerability prioritization and patch management: AI can rank identified weaknesses by severity and automatically push policies to keep systems up to date.

AI doesn’t replace your team; it amplifies their ability to act with speed, precision, and foresight.
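To make the idea of flagging anomalies "outside of known attack signatures" more concrete, here is a minimal sketch, not a description of any particular product. It assumes a hypothetical feed of hourly failed-login counts and uses a plain z-score against a historical baseline; the function name, the sample numbers, and the threshold of 3.0 are all illustrative assumptions. Real tools use far richer models.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flagged = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        # No attack signature is consulted: only distance from normal behavior.
        if abs(z) > threshold:
            flagged.append((i, value, round(z, 2)))
    return flagged


# Hypothetical hourly counts of failed logins for one account.
baseline_counts = [4, 5, 6, 5, 4, 6, 5, 5]
todays_counts = [5, 6, 40, 4]

anomalies = flag_anomalies(baseline_counts, todays_counts)
print(anomalies)
```

With these sample numbers only the third observation (40 failed logins) is flagged, because it sits far outside the spread of the baseline.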

Practical AI Use Cases to Consider

Here are some ways AI is currently being used in cybersecurity and where it’s headed next:

1. Summarize Incidents and Recommend Actions

Generative AI can instantly analyze a security event and draft response recommendations. This saves time, supports disclosure obligations, and helps your team update internal policies based on real data.

2. Prioritize Security Alerts More Efficiently

AI triage tools analyze signals from across your environment to highlight which threats require urgent human attention. This allows your staff to focus where it matters most, reducing risk and alert fatigue.
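As a rough illustration of alert triage, the sketch below ranks alerts by a simple score. Everything in it is an assumption made for the example: the `Alert` fields, the 1-to-5 scales, and the scoring formula (severity times asset criticality, scaled by detector confidence) are hypothetical and not taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    severity: int           # 1 (low) to 5 (critical), assumed scale
    asset_criticality: int  # 1 to 5, assumed scale
    confidence: float       # detector confidence between 0.0 and 1.0


def triage_score(alert: Alert) -> float:
    """Illustrative score: impact (severity x criticality) scaled by confidence."""
    return alert.severity * alert.asset_criticality * alert.confidence


def prioritize(alerts, top_n=2):
    """Return the top_n alerts that most need human attention."""
    return sorted(alerts, key=triage_score, reverse=True)[:top_n]


alerts = [
    Alert("A-1", severity=2, asset_criticality=1, confidence=0.9),
    Alert("A-2", severity=5, asset_criticality=4, confidence=0.8),
    Alert("A-3", severity=4, asset_criticality=5, confidence=0.3),
    Alert("A-4", severity=3, asset_criticality=3, confidence=0.95),
]

urgent = prioritize(alerts)
print([a.alert_id for a in urgent])
```

Scaling by confidence means a severe but low-confidence alert (A-3 here) ranks below a moderately severe, high-confidence one, which is one way to reduce alert fatigue without discarding anything.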

3. Automate Compliance and Reporting

From HIPAA to SEC rules to state-level privacy laws, the regulatory landscape is more complex than ever. AI can help your organization map internal controls to frameworks, generate compliance reports, and summarize what needs to be disclosed, quickly and accurately.

4. Monitor Behavior and Detect Threats

AI can track user behavior, spot anomalies, and escalate suspicious actions (like phishing attempts or unauthorized access). These tools reduce attacker dwell time and flag concerns in seconds, not weeks or months.

5. The Next Frontier: Autonomous Security

The future of AI in cybersecurity includes agentic systems: tools capable of acting independently when breaches occur. For instance, if a user clicks a phishing link, AI could automatically isolate the device or suspend access.

However, this level of automation must be used carefully. Human oversight remains essential to prevent overreactions, such as wiping a laptop unnecessarily. In short, AI doesn’t replace your human cybersecurity team but augments it by automating repetitive tasks, spotting hidden threats, and enabling faster, smarter responses. As the technology matures, your governance structures must evolve alongside it.

Building a Roadmap and Proving ROI

To unlock the benefits of AI, your business needs a strong data and governance foundation. Move from defense to strategy by first assessing whether your current systems can support AI, identifying gaps in data structure, quality, and access.

Next, define clear goals and ROI metrics. For example:

- How much time does AI save in daily operations?

- How quickly are threats identified post-AI deployment?

- What are the cost savings from prevented incidents?
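The first two questions above can be turned into numbers with very little code. The sketch below is one possible way to do it, under stated assumptions: the function name, the input figures, and the hourly cost are hypothetical, and it deliberately leaves out savings from prevented incidents, which are much harder to estimate.

```python
from statistics import mean


def roi_summary(detect_hours_before, detect_hours_after,
                analyst_hours_saved_per_week, hourly_cost):
    """Compare mean time to detect (MTTD) before and after an AI pilot."""
    mttd_before = mean(detect_hours_before)
    mttd_after = mean(detect_hours_after)
    reduction_pct = 100 * (mttd_before - mttd_after) / mttd_before
    return {
        "mttd_before_hours": mttd_before,
        "mttd_after_hours": mttd_after,
        "mttd_reduction_pct": round(reduction_pct, 1),
        "weekly_labor_savings": analyst_hours_saved_per_week * hourly_cost,
    }


# Hypothetical pilot data: hours from intrusion to detection per incident.
summary = roi_summary(
    detect_hours_before=[48, 72, 24],
    detect_hours_after=[6, 12, 6],
    analyst_hours_saved_per_week=10,
    hourly_cost=80,
)
print(summary)
```

Agreeing on definitions like these before the pilot starts makes it easier to judge afterwards whether the tool showed value.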

Begin with a pilot program using an off-the-shelf AI product. If it shows value, scale into customized prompts or embedded tooling that fits your specific business systems.

Prompt Engineering to Empower Your Team

Your teams can get better results from AI by using structured prompts. A well-designed prompt ensures your AI tools deliver clear, useful, business-ready outputs.

Example prompt:

“Summarize the Microsoft 365 event with ID ‘1234’ to brief executive leadership. Include the event description, threat level, correlated alerts, and mitigation steps, in plain language suitable for a 10-minute presentation.”

This approach supports internal decision-making, board reporting, and team communication, all essential for managing cyber risks effectively.
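One way to keep prompts like the example above consistent across a team is to generate them from a small template. The following is a minimal sketch; the function names and parameters are hypothetical, and it only builds the prompt text without calling any AI service.

```python
def join_fields(fields):
    """Join field names into readable prose, e.g. 'a, b, and c'."""
    if len(fields) == 1:
        return fields[0]
    return ", ".join(fields[:-1]) + ", and " + fields[-1]


def build_incident_prompt(event_id, audience, fields, minutes):
    """Build a structured incident-summary prompt from reusable parts."""
    if not fields:
        raise ValueError("at least one field is required")
    return (
        f"Summarize the Microsoft 365 event with ID '{event_id}' "
        f"to brief {audience}. "
        f"Include the {join_fields(fields)}, in plain language suitable for "
        f"a {minutes}-minute presentation."
    )


prompt = build_incident_prompt(
    event_id="1234",
    audience="executive leadership",
    fields=["event description", "threat level",
            "correlated alerts", "mitigation steps"],
    minutes=10,
)
print(prompt)
```

Changing the audience, the field list, or the time limit then produces a comparable prompt for board reporting or team communication without rewriting it by hand.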

Don’t Wait: Make AI Part of Your Cybersecurity Strategy

AI is no longer a “nice to have”; it’s a core component of resilient, responsive cybersecurity programs. Organizations that act now and implement AI strategically will be better equipped to manage both today’s threats and tomorrow’s compliance demands.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.

AI home appliances: new normal at IFA 2025 – 조선일보

How can AI enhance healthcare access and efficiency in Thailand?

Support accessible and equitable healthcare

Julia continued by noting that Thailand has been praised for its efforts in medical technology, ranking first or second in ASEAN.

However, she acknowledged the limitations of medical technology development, not just in Thailand, but across the region, particularly regarding the resources and budgets required, as well as regulations in each country.

“Medical technology” will be one of the driving forces of Thailand’s economy, contributing to the enhancement of healthcare services to international standards, increasing competitiveness in the global market, and promoting equitable access to healthcare.

It will also encourage the development of the medical equipment industry to become more self-reliant, reducing dependency on imports, and generating new opportunities through health tech startups.

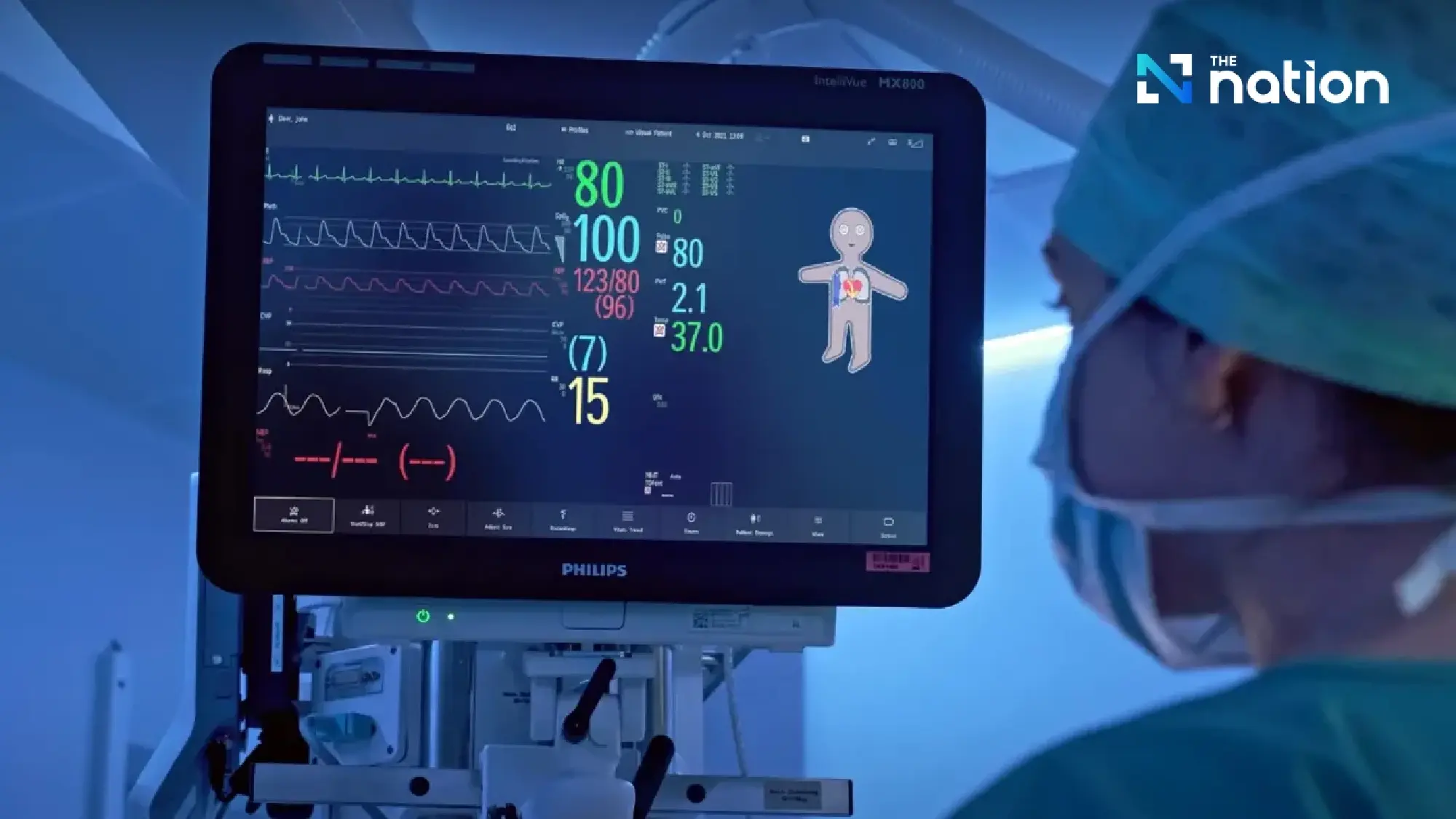

Julia further explained that Philips has supported Thailand’s medical technology sector from the past to the present, working towards improving access to healthcare and ensuring equity for all.

Examples include donations of 100 patient monitoring devices worth around 3 million baht to the Ministry of Public Health to assist hospitals affected by the 2011 floods, as well as providing ultrasound echo machines to various hospitals in collaboration with the Heart Association of Thailand to support mobile healthcare units in rural areas.

“Access to healthcare services is a major challenge faced by many countries, especially within local communities. Thailand must work to integrate medical services effectively,” she said.

“Philips has provided medical technology in various hospitals, both public and private, as well as in medical schools. Our focus is on medical tools for treating diseases such as stroke, heart disease, and lung diseases, which are prevalent among many patients.”

AI enhances predictive healthcare solutions

Thailand has been placed on the “shortlist” of countries set to launch Philips’ new products soon after their global release. However, the product launch in Thailand will depend on meeting regulatory requirements, safety standards, and relevant policies for registration.

Julia noted that economic crises, conflicts, or changes in US tariff rates may not significantly impact the importation of medical equipment.

Philips’ direction will continue to focus on connected healthcare solutions, leveraging AI technology for processing and predictive analytics. This allows for early predictions of patient conditions and provides advance warnings to healthcare professionals or caregivers.

Additionally, Philips places significant emphasis on AI research, particularly in the area of heart disease. The company combines this research with innovations in image-guided therapy to connect all devices and patient data for heart disease patients.

This enables doctors and nurses to monitor patient conditions remotely, whether they are in another room within the hospital or outside of it, ensuring accurate diagnosis, treatment, and more efficient patient monitoring.

“Connected care”: seamless healthcare integration

“Connected care” is a solution that supports continuous care by connecting patient information from the moment they arrive at the hospital or emergency department, through surgery, the ICU, general wards, and post-discharge recovery at home.

In Thailand, Philips’ HPM and connected care systems are widely used, particularly in large hospitals and medical schools.

The solution is based on three key principles:

- Seamless: Patient data is continuously linked, from the operating room to the ICU and general wards, without interruption. This differs from traditional systems, where information is often lost in between stages.

- Connected: Medical devices at the bedside, such as drug dispensers and saline drips, are connected to monitors along with laboratory data, providing doctors with an immediate overview of the patient’s condition.

- Interoperable: Patient data can be transferred to all departments, enabling doctors to track test results and view patient information anywhere, at any time. This reduces redundant tasks and increases the time available for direct patient care.
