AI Research

NCCN Policy Summit Explores Whether Artificial Intelligence Can Transform Cancer Care Safely and Fairly

WASHINGTON, D.C. [September 9, 2025] — Today, the National Comprehensive Cancer Network® (NCCN®)—an alliance of leading cancer centers devoted to patient care, research, and education—hosted a Policy Summit exploring where artificial intelligence (AI) currently stands as a tool for improving cancer care, and where it may be going in the future. Subject matter experts, including patients and advocates, clinicians, and policymakers, weighed in on where they saw emerging success and also reasons for concern.

Travis Osterman, DO, MS, FAMIA, FASCO, Director of Cancer Clinical Informatics, Vanderbilt-Ingram Cancer Center—a member of the NCCN Digital Oncology Forum—delivered a keynote address, stating: “Because of AI, we are at an inflection point in how technology supports the delivery of care to our patients with cancer. Thoughtful regulation can help us integrate these tools into everyday practice in ways that improve care delivery and support oncology practices. The decisions we make now will determine how AI innovations serve our patients and impact clinicians for years to come.”

Many speakers took a cautiously optimistic tone on AI, rooted in pragmatism.

“AI isn’t the future of cancer care… it’s already here, helping detect disease earlier, guide personalized treatment, and reduce clinical burdens,” said William Walders, Executive Vice President, Chief Digital and Information Officer, The Joint Commission. “To fully realize the promise of AI in oncology, we must implement thoughtful guardrails that not only build trust but actively safeguard patient safety and uphold the highest standards of care. At Joint Commission, our mission is to shape policy and guidance that ensures AI complements, never compromises, the human touch. These guardrails are essential to prevent unintended consequences and to ensure equitable, high-quality outcomes for all.”

Panelists noted the speed at which AI models are evolving. Some compared AI's potential to earlier advances in care, such as the leap from paper to electronic medical records. Many expressed excitement about its potential to improve efficiency, support an overburdened oncology workforce, and accelerate the pursuit of new cures.

“Artificial intelligence is transforming every industry, and oncology is no exception,” stated Jorge Reis-Filho, MD, PhD, FRCPath, Chief AI and Data Scientist, Oncology R&D, AstraZeneca. “With the advent of multimodal foundation models and agentic AI, there are unique opportunities to propel clinical development, empowering researchers and clinicians with the ability to generate a more holistic understanding of disease biology and develop the next generation of biomarkers to guide decision making.”

“AI has enormous potential to optimize cancer outcomes by making clinical trials accessible to patients regardless of their location and by simplifying complex trial processes for patients and research teams alike. I am looking forward to new approaches for safe evaluation and implementation so that we can effectively and responsibly use AI to gain maximum insight from every piece of patient data and drive progress,” commented Danielle Bitterman, MD, Clinical Lead for Data Science/AI, Mass General Brigham.

She continued: “As AI becomes integrated into clinical practice, stronger collaborations between oncologists and computer scientists will catalyze advances and will be key to directly addressing the most urgent challenges in cancer care.”

Regina Barzilay, PhD, School of Engineering Distinguished Professor for AI and Health, MIT, expressed her concern that adoption may not be moving quickly enough: “AI-driven diagnostics and treatment has potential to transform cancer outcomes. Unfortunately, today, these tools are not utilized enough in patient care. Guidelines could play a critical role in changing this status quo.”

She highlighted specific AI technologies that she believes are ready to be implemented in patient care and stressed the need to keep pace with rapidly advancing technology.

Some panelists raised potential challenges of AI adoption, including:

- How to implement quality control, accreditation, and fact-checking in a way that is fair and not burdensome

- How to determine appropriate governmental oversight

- How medical and technology organizations can work together to best leverage the expertise of both

- How to integrate functionality across various platforms

- How to avoid increasing disparities and technology gaps

- How to account for human error and bias while maintaining the human touch

“Many similar problems have been solved in different application environments,” concluded Allen Rush, PhD, MS, Co-Founder and Board Chairman, Jacqueline Rush Lynch Syndrome Cancer Foundation. “This will take teaming up with non-medical industry experts to find the best tools, fine-tune them, and apply ongoing learning. We need to ask the right questions and match them with the right AI platforms to unlock new possibilities for cancer detection and treatment.”

The topic of AI and cancer care was also featured in a plenary session during the NCCN 2025 Annual Conference. Visit NCCN.org/conference to view that session and others via the NCCN Continuing Education Portal.

Next up, on Tuesday, December 9, 2025, NCCN is hosting a Patient Advocacy Summit on addressing the unique cancer care needs of veterans and first responders. Visit NCCN.org/summits to learn more and register.

# # #

About the National Comprehensive Cancer Network

The National Comprehensive Cancer Network® (NCCN®) is marking 30 years as a not-for-profit alliance of leading cancer centers devoted to patient care, research, and education. NCCN is dedicated to defining and advancing quality, effective, equitable, and accessible cancer care and prevention so all people can live better lives. The NCCN Clinical Practice Guidelines in Oncology (NCCN Guidelines®) provide transparent, evidence-based, expert consensus-driven recommendations for cancer treatment, prevention, and supportive services; they are the recognized standard for clinical direction and policy in cancer management and the most thorough and frequently updated clinical practice guidelines available in any area of medicine. The NCCN Guidelines for Patients® provide expert cancer treatment information to inform and empower patients and caregivers, through support from the NCCN Foundation®. NCCN also advances continuing education, global initiatives, policy, and research collaboration and publication in oncology. Visit NCCN.org for more information.


Rice University creative writing course introduces artificial intelligence


Rice is bringing generative artificial intelligence into the creative writing world with this fall’s new course, “ENGL 306: AI Fictions.” Ian Schimmel, an associate teaching professor in the English and creative writing department, said he teaches the course to help students think critically about technology and consider the ways that AI models could be used in the creative processes of fiction writing.

The course is designed for writers of any level and makes room both to incorporate and to resist the influence of AI, according to its description.

“In this class, we never sit down with ChatGPT and tell it to write us a story and that’s that,” Schimmel wrote in an email to the Thresher. “We don’t use it to speed up the artistic process, either. Instead, we think about how to incorporate it in ways that might expand our thinking.”

Schimmel said he was stunned by ChatGPT's capabilities when it was released in 2022 and wondered whether it truly possessed the ability to write. He said he found that the topic raised more questions than answers.

The next logical step, for Schimmel, was to create a course centered on exploring the complexities of AI and fiction writing, with assigned readings ranging from New York Times opinion pieces critical of its usage to an AI-generated poetry collection.

Schimmel said both students and faculty share concerns about how AI can help or harm academic progress and potentially cripple human creativity.

“Classes that engage students with AI might be some of the best ways to learn about what these systems can and cannot do,” Schimmel wrote. “There are so many things that AI is terrible at and incapable of. Seeing that firsthand is empowering. Whenever it hallucinates, glitches or makes you frustrated, you suddenly remember: ‘Oh right — this is a machine. This is nothing like me.’”

“Fear is intrinsic to anything that shakes industry like AI is doing,” Robert Gray, a Brown College senior, wrote in an email to the Thresher. “I am taking this class so that I can immerse myself in that fear and learn how to navigate these new industrial landscapes.”

The course approaches AI from a fluid perspective that evolves as the class reads and writes more with the technology, Schimmel said, and the class's answers to the complex ethical questions surrounding AI use evolve along with it.

“At its core, the technology is fundamentally unethical,” Schimmel wrote. “It was developed and enhanced, without permission, on copyrighted text and personal data and without regard for the environment. So in that failed historical context, the question becomes: what do we do now? Paradoxically, the best way for us to formulate and evidence arguments against this technology might be to get to know it on a deep and personal level.”

Generative AI is often criticized on ethical grounds, such as the energy and water its data centers demand or the training of models on data sets of existing copyrighted works.

Amazon- and Google-backed Anthropic recently settled a class-action lawsuit with a group of U.S. authors who accused the company of using millions of pirated books to train its Claude chatbot to respond to human prompts.

With the assistance of AI, students will be able to attempt large-scale projects that typically would not be possible within a single semester, according to the course overview. AI will speed up drafting a book outline, and students can “collaborate” with AI to write the opening chapters of a novel for NaNoWriMo, a worldwide writing event held every November in which participants aimed to produce a 50,000-word first draft of a novel.

NaNoWriMo, short for National Novel Writing Month, announced in spring 2025 that it was closing after more than 20 years. It drew widespread press coverage for a 2024 statement saying that condemnation of AI in writing “has classist and ableist undertones.” Many authors spoke out against what they saw as an endorsement of generative AI for writing and against the implication that disabled writers would require AI to produce work.

Each weekly class involves experimentation in dialogues and writing sessions with ChatGPT, with Schimmel and his students acknowledging how much remains unknown and unexplored about AI, especially in the visual and literary arts. One Friday session in the Wiess College classroom covered aspects of AI ranging from creative copyright to excessive water usage to its accuracy as an editor.

“We’re always better off when we pay attention to our attention. If there’s a topic (or tech) that creates worry, or upset, or raises difficult questions, then that’s a subject that we should pursue,” Schimmel wrote. “It’s in those undefined, sometimes uncomfortable places where we humans do our best, most important learning.”




Artificial Intelligence (AI) Unicorn Anthropic Just Hit a $183 Billion Valuation. Here’s What It Means for Amazon Investors

Anthropic just closed on a $13 billion Series F funding round.

It’s been about a month since OpenAI unveiled its latest model, GPT-5. In that time, rival platforms have made bold moves of their own.

Perplexity, for instance, drew headlines with a $34.5 billion unsolicited bid for Alphabet's Google Chrome, while Anthropic, backed by both Alphabet and Amazon, closed a $13 billion Series F funding round that propelled its valuation to an eye-popping $183 billion.

Since debuting its AI chatbot, Claude, in March 2023, Anthropic has experienced explosive growth. The company’s run-rate revenue surged from $1 billion at the start of this year to $5 billion by the end of August.


While these gains are a clear win for the venture capital firms that backed Anthropic early on, the company’s trajectory carries even greater strategic weight for Amazon.

Let's explore how Amazon is integrating Anthropic into its broader AI ecosystem and what this deepening alliance could mean for investors.

AWS + Anthropic: Amazon’s secret weapon in the AI arms race

Beyond its e-commerce dominance, Amazon’s largest business is its cloud computing arm — Amazon Web Services (AWS).

Much like Microsoft's integration of ChatGPT into its Azure platform, Amazon is positioning Anthropic's Claude as a marquee offering within AWS. Through the Bedrock service, AWS customers can access a variety of large language models (LLMs), with Claude among the most prominent, to build and deploy generative AI applications.

In effect, Anthropic acts as both a differentiator and a distribution channel for AWS, giving enterprise customers the flexibility to test different models while keeping them within Amazon's ecosystem. This strengthens AWS's value proposition by creating stickiness in a fiercely competitive cloud computing landscape.

Cutting Nvidia and AMD out of the loop

Another strategic benefit of Amazon's partnership with Anthropic is the opportunity to accelerate adoption of its custom silicon, Trainium and Inferentia. These chips were specifically engineered to reduce dependence on Nvidia's GPUs and to lower the cost of AI training and inference.

The bet is that if Anthropic can successfully scale Claude on Trainium and Inferentia, it will serve as a proof point to the broader market that Amazon’s hardware offers a viable, cost-efficient alternative to premium GPUs from Nvidia and Advanced Micro Devices.

By steering more AI compute toward its in-house silicon, Amazon improves its unit economics — capturing more of the value chain and ultimately enhancing AWS’s profitability over time.

From Claude to cash flow

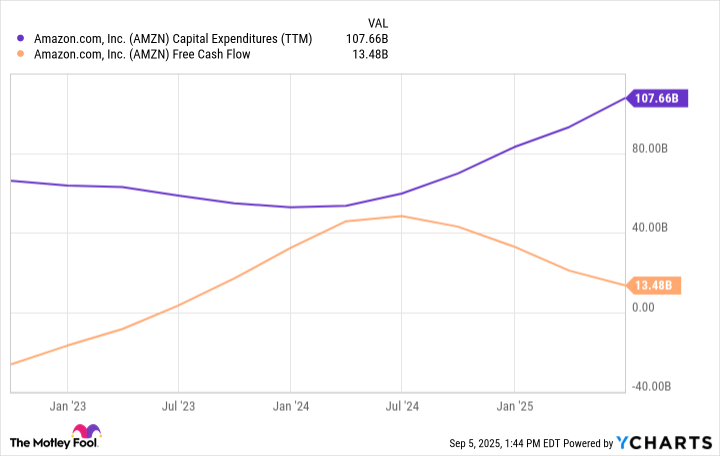

For investors, the central question is how the Anthropic partnership translates into tangible financial impact for Amazon. As the figures below illustrate, Amazon has not hesitated to deploy unprecedented sums into AI-related capital expenditures (capex) over the past few years. While this acceleration in spending has temporarily weighed on free cash flow, such investments are part of a deliberate long-term strategy rather than a short-term playbook.

AMZN Capital Expenditures (TTM) data by YCharts

Partnerships of this scale rarely yield immediate results. Working with Anthropic is not about incremental wins — it’s about laying the foundation for transformative outcomes.

In practice, Anthropic enhances AWS’s ability to secure long-term enterprise contracts — reinforcing Amazon’s position as an indispensable backbone of AI infrastructure. Once embedded, the switching costs for customers considering alternative models or rival cloud providers like Microsoft Azure or Google Cloud Platform (GCP) become prohibitively high.

Over time, these dynamics should enable Amazon to capture a larger share of AI workloads and generate durable, high-margin recurring fees. As profitability scales alongside revenue growth, Amazon is well-positioned to experience meaningful valuation expansion relative to its peers — making the stock a compelling opportunity to buy and hold for long-term investors right now.

Adam Spatacco has positions in Alphabet, Amazon, Microsoft, and Nvidia. The Motley Fool has positions in and recommends Advanced Micro Devices, Alphabet, Amazon, Microsoft, and Nvidia. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.
