AI Research

MuZero, AlphaZero, and AlphaDev: Optimizing computer systems

As part of our aim to build increasingly capable and general artificial intelligence (AI) systems, we’re working to create AI tools with a broader understanding of the world. This can allow useful knowledge to be transferred between many different types of tasks.

Using reinforcement learning, our AI systems AlphaZero and MuZero have achieved superhuman performance playing games. Since then, we’ve expanded their capabilities to help design better computer chips, alongside optimizing data centers and video compression. And our specialized version of AlphaZero, called AlphaDev, has also discovered new algorithms for accelerating software at the foundations of our digital society.

Early results have shown the transformative potential of more general-purpose AI tools. Here, we explain how these advances are shaping the future of computing — and already helping billions of people and the planet.

Designing better computer chips

Specialized hardware is essential to making sure today’s AI systems are resource-efficient for users at scale. But designing and producing new computer chips can take years of work.

Our researchers have developed an AI-based approach to design more powerful and efficient circuits. By treating a circuit like a neural network, we found a way to accelerate chip design and take performance to new heights.

Neural networks are often designed to take user inputs and generate outputs, like images, text, or video. Inside the neural network, edges connect to nodes in a graph-like structure.

To create a circuit design, our team proposed ‘circuit neural networks’, a new type of neural network which turns edges into wires and nodes into logic gates, and learns how to connect them together.

Animated illustration of a circuit neural network learning a circuit design. It determines which edges (wires) connect to which nodes (logic gates) to improve the overall circuit design.

We optimized the learned circuit for computational speed, energy efficiency, and size, while maintaining its functionality. Using ‘simulated annealing’, a classical search technique that looks one step into the future, we also tested different options to find its optimal configuration.
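As a rough illustration of simulated annealing in general (not the team's circuit optimizer), the sketch below minimizes a toy cost over bit strings by proposing one small change at a time. The cost function, the linear cooling schedule, and all parameter names are assumptions chosen for illustration.

```python
import math
import random


def simulated_annealing(cost, initial, steps=2000, start_temp=2.0, seed=0):
    """Minimize `cost` over bit lists by flipping one bit at a time."""
    rng = random.Random(seed)
    current = list(initial)
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    for step in range(steps):
        # Linear cooling schedule (an illustrative choice).
        temp = start_temp * (1 - step / steps) + 1e-9
        # Look one step ahead: propose a single-bit flip.
        candidate = list(current)
        i = rng.randrange(len(candidate))
        candidate[i] ^= 1
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with a
        # probability that shrinks as the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
    return best, best_cost


def toy_cost(bits):
    """Hypothetical stand-in for a circuit cost: the number of set bits."""
    return sum(bits)


start = [1] * 16
best, best_cost = simulated_annealing(toy_cost, start)
print(best_cost)
```

Occasionally accepting a worse candidate is what lets the search escape local minima early on, while the falling temperature makes it increasingly greedy toward the end.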

With this technique, we won the IWLS 2023 Programming Contest — with the best solution on 82% of circuit design problems in the competition.

Our team also used AlphaZero, which can look many steps into the future, to improve the circuit design by treating the challenge like a game to solve.

So far, our research combining circuit neural networks with the reward function of reinforcement learning has shown very promising results for building even more advanced computer chips.

Optimizing data center resources

Data centers manage everything from delivering search results to processing datasets. Like a game of multi-dimensional Tetris, a system called Borg manages and optimizes workloads within Google’s vast data centers.

To schedule tasks, Borg relies on manually-coded rules. But at Google’s scale, manually-coded rules can’t cover the variety of ever-changing workload distributions. So they are designed as one size to best fit all.
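To make the idea of a manually-coded, one-size-fits-all rule concrete, here is a minimal sketch of a classic hand-written placement heuristic, first-fit decreasing. It is not Borg's actual scheduler; the two resource dimensions (CPU, memory), the capacities, and the task sizes are all hypothetical.

```python
def first_fit_decreasing(tasks, machine_capacity, num_machines):
    """Place (cpu, memory) tasks on machines using one fixed rule.

    Returns a list of task lists, one per machine. Raises ValueError if a
    task does not fit on any machine.
    """
    free = [list(machine_capacity) for _ in range(num_machines)]
    placement = [[] for _ in range(num_machines)]
    # Rule: handle the largest tasks first, then take the first machine that fits.
    for task in sorted(tasks, key=lambda t: t[0] + t[1], reverse=True):
        for m in range(num_machines):
            if all(need <= avail for need, avail in zip(task, free[m])):
                free[m] = [avail - need for need, avail in zip(task, free[m])]
                placement[m].append(task)
                break
        else:
            raise ValueError(f"no machine can fit task {task}")
    return placement


tasks = [(4, 2), (2, 6), (3, 3), (1, 1), (5, 4)]
placement = first_fit_decreasing(tasks, machine_capacity=(8, 8), num_machines=3)
for machine, assigned in enumerate(placement):
    print(machine, assigned)
```

The same rule is applied to every workload regardless of its shape, which is the limitation a learned, workload-specific policy aims to address.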

This is where machine learning technologies like AlphaZero are especially helpful: they are able to work at scale, automatically creating individual rules that are optimally tailored for the various workload distributions.

During its training, AlphaZero learned to recognize patterns in tasks coming into the data centers, and also learned to predict the best ways to manage capacity and make decisions with the best long-term outcomes.

When we applied AlphaZero to Borg in experimental trials, we found we could reduce the proportion of underused hardware in the data center by up to 19%.

An animated visualization of neat, optimized data storage, versus messy and unoptimized storage.

Compressing video efficiently

Video streaming makes up the majority of internet traffic. So finding ways to make streaming more efficient, however big or small, will have a huge impact on the millions of people watching videos every day.

We worked with YouTube to compress and transmit video using MuZero’s problem-solving abilities. By reducing the bitrate by 4%, MuZero enhanced the overall YouTube experience — without compromising on visual quality.

We initially applied MuZero to optimize the compression of each individual video frame. Now, we’ve expanded this work to help make decisions on how frames are grouped and referenced during encoding, leading to more bitrate savings.

Results from these first two steps show MuZero’s potential to become a more generalized tool, helping find optimal solutions across the entire video compression process.

A visualization demonstrating how MuZero compresses video files. It defines groups of pictures with visual similarities for compression. A single keyframe is compressed. MuZero then compresses other frames, using the keyframe as a reference. The process repeats for the rest of the video, until compression is complete.

Discovering faster algorithms

AlphaDev, a version of AlphaZero, made a novel breakthrough in computer science, when it discovered faster sorting and hashing algorithms. These fundamental processes are used trillions of times a day to sort, store, and retrieve data.

AlphaDev’s sorting algorithms

Sorting algorithms help digital devices process and display information, from ranking online search results and social posts, to user recommendations.

AlphaDev discovered an algorithm that increases efficiency for sorting short sequences of elements by 70% and by about 1.7% for sequences containing more than 250,000 elements, compared to the algorithms in the C++ library. That means results generated from user queries can be sorted much faster. When used at scale, this saves huge amounts of time and energy.
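Sorting a short, fixed number of elements can be done with a fixed sequence of compare-and-swap steps rather than a general loop. The sketch below is a textbook three-element version written in Python for readability; it is not AlphaDev's discovered routine, and the function name is hypothetical.

```python
from itertools import permutations


def sort3(a, b, c):
    """Sort three values with a fixed sequence of compare-and-swap steps."""
    if a > b:
        a, b = b, a
    if b > c:
        b, c = c, b
    if a > b:
        a, b = b, a
    return a, b, c


# Check every ordering of three distinct values.
for values in permutations((1, 2, 3)):
    assert sort3(*values) == (1, 2, 3)
print(sort3(3, 1, 2))  # prints (1, 2, 3)
```

Because the sequence of steps is fixed, removing even a single operation from such a routine affects every call, which is why small savings matter at scale.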

AlphaDev’s hashing algorithms

Hashing algorithms are often used for data storage and retrieval, like in a customer database. They typically use a key (e.g. user name “Jane Doe”) to generate a unique hash, which corresponds to the data values that need retrieving (e.g. “order number 164335-87”).

Like a librarian who uses a classification system to quickly find a specific book, with a hashing system, the computer already knows what it’s looking for and where to find it. When applied to the 9-16 bytes range of hashing functions in data centers, AlphaDev’s algorithm improved the efficiency by 30%.
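The lookup described above can be sketched as a tiny hash table. This is an illustration only: SHA-256 stands in for a fast production hash function, the class and function names are hypothetical, and colliding keys are handled by keeping a short list per bucket, since in practice two different keys can map to the same bucket.

```python
import hashlib


def bucket_index(key, num_buckets):
    """Map a string key to a bucket number using a hash of its bytes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


class TinyHashTable:
    def __init__(self, num_buckets=8):
        self.buckets = [[] for _ in range(num_buckets)]

    def put(self, key, value):
        bucket = self.buckets[bucket_index(key, len(self.buckets))]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing entry
                return
        bucket.append((key, value))

    def get(self, key):
        # Only one bucket is scanned, not the whole table.
        bucket = self.buckets[bucket_index(key, len(self.buckets))]
        for existing_key, value in bucket:
            if existing_key == key:
                return value
        raise KeyError(key)


table = TinyHashTable()
table.put("Jane Doe", "order number 164335-87")
print(table.get("Jane Doe"))  # prints "order number 164335-87"
```

The hash function runs on every insert and every lookup, so speeding it up for common key sizes benefits every operation on the table.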

The impact of these algorithms

We added the sorting algorithms to the LLVM standard C++ library, replacing sub-routines that have been used for over a decade. We also contributed AlphaDev’s hashing algorithms to the Abseil library.

Since then, millions of developers and companies have started using them across industries as diverse as cloud computing, online shopping, and supply chain management.

General-purpose tools to power our digital future

Our AI tools are already saving billions of people time and energy. This is just the start. We envision a future where general-purpose AI tools can help optimize the global computing ecosystem.

We’re not there yet — we still need faster, more efficient, and sustainable digital infrastructure.

Many more theoretical and technological breakthroughs are needed to create fully generalized AI tools. But the potential of these tools — across technology, science, and medicine — makes us excited about what’s on the horizon.


Researchers Unlock 210% Performance Gains In Machine Learning With Spin Glass Feature Mapping

Quantum machine learning seeks to harness the power of quantum mechanics to improve artificial intelligence, and a new technique developed by Anton Simen, Carlos Flores-Garrigos, and Murilo Henrique De Oliveira, all from Kipu Quantum GmbH, alongside Gabriel Dario Alvarado Barrios, Juan F. R. Hernández, and Qi Zhang, represents a significant step towards realising that potential. The researchers propose a novel feature mapping technique that utilises the complex dynamics of a quantum spin glass to identify subtle patterns within data, achieving a performance boost in machine learning models. This method encodes data into a disordered quantum system, then extracts meaningful features by observing its evolution, and importantly, the team demonstrates performance gains of up to 210% on high-dimensional datasets used in areas like drug discovery and medical diagnostics. This work marks one of the first demonstrations of quantum machine learning achieving a clear advantage over classical methods, potentially bridging the gap between theoretical quantum supremacy and practical, real-world applications.

The core idea is to leverage the quantum dynamics of quantum annealers to create enhanced feature spaces for classical machine learning algorithms, with the goal of achieving a quantum advantage in performance. Key findings include a method to map classical data into a quantum feature space, allowing classical machine learning algorithms to operate on richer data. Researchers found that operating the annealer in the coherent regime, with annealing times of 10-40 nanoseconds, yields the best and most stable performance, as longer times lead to performance degradation.

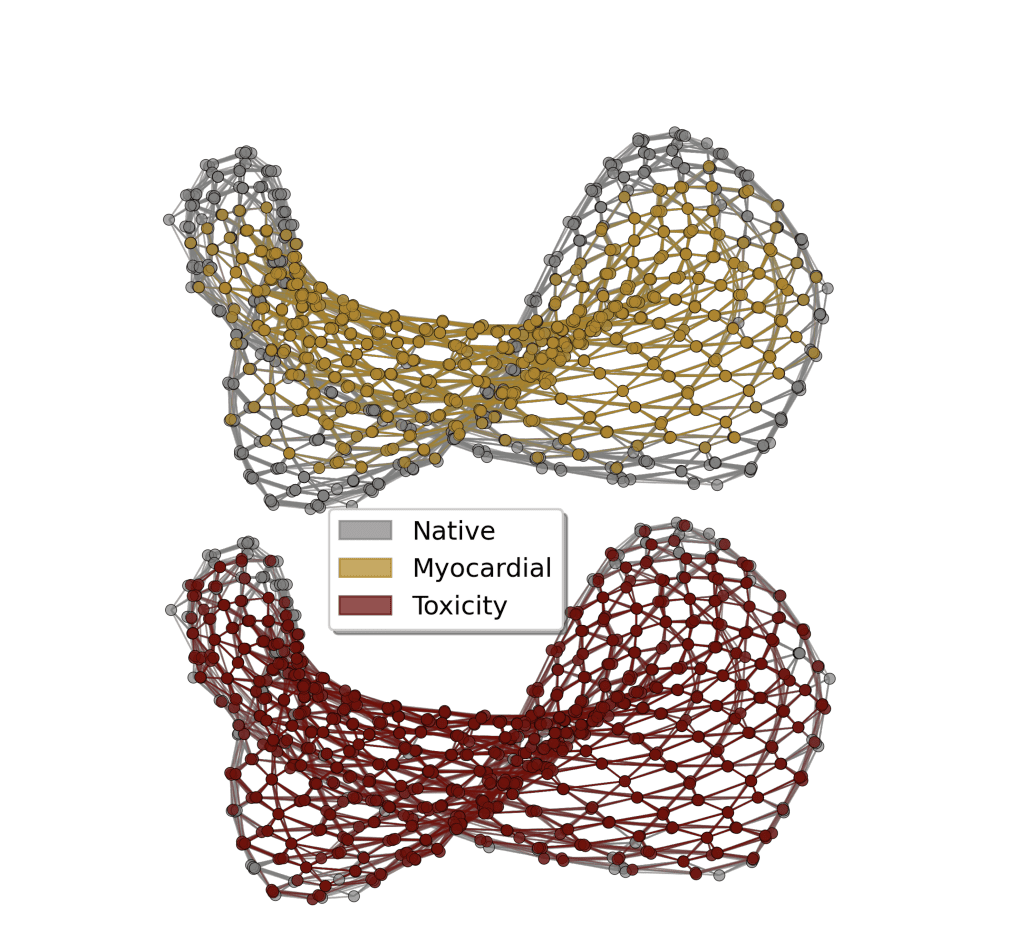

The method was tested on datasets related to toxicity prediction, myocardial infarction complications, and drug-induced autoimmunity, suggesting potential performance gains compared to purely classical methods. Kipu Quantum has launched an industrial quantum machine learning service based on these findings, claiming to achieve quantum advantage.

The methodology involves encoding data into qubits, programming the annealer to evolve according to its quantum dynamics, extracting features from the final qubit state, and feeding this data into classical machine learning algorithms. Key concepts include quantum annealing, analog quantum computing, feature engineering, quantum feature maps, and the coherent regime.

The team encoded information from datasets into a disordered quantum system, then used a process called “quantum quench” to generate complex feature representations. Experiments reveal that machine learning models benefit most from features extracted during the fast, coherent stage of this quantum process, particularly when the system is near a critical dynamic point. This analog quantum feature mapping technique was benchmarked on high-dimensional datasets, drawn from areas like drug discovery and medical diagnostics.

Results demonstrate a substantial performance boost, with the quantum-enhanced models achieving up to a 210% improvement in key metrics compared to state-of-the-art classical machine learning algorithms. Peak classification performance was observed at annealing times of 20-30 nanoseconds, a regime where quantum entanglement is maximized. The technique was successfully applied to datasets related to molecular toxicity, myocardial infarction complications, and drug-induced autoimmunity, using algorithms including support vector machines, random forests, and gradient boosting. By encoding data into a disordered quantum system and extracting features from its evolution, the researchers demonstrate performance improvements in applications including molecular toxicity classification, diagnosis of heart attack complications, and detection of drug-induced autoimmune responses. Comparative evaluations consistently show gains in precision, recall, and area under the curve, achieving improvements of up to 210% in certain metrics. Researchers found that optimal performance is achieved when the quantum system operates in a coherent regime, with longer annealing times leading to performance degradation due to decoherence. Further research is needed to explore more complex quantum feature encodings, adaptive annealing schedules, and broader problem domains. Future work will also investigate implementation on digital quantum computers and explore alternative analog quantum hardware platforms, such as neutral-atom quantum systems, to expand the scope and impact of this method.


Prediction: This Artificial Intelligence (AI) Semiconductor Stock Will Join Nvidia, Microsoft, Apple, Alphabet, and Amazon in the $2 Trillion Club by 2028. (Hint: Not Broadcom)

This company is growing quickly, and its stock is a bargain at the current price.

Big tech companies are set to spend $375 billion on artificial intelligence (AI) infrastructure this year, according to estimates from analysts at UBS. That number will climb to $500 billion next year.

The biggest expense item in building out AI data centers is semiconductors. Nvidia (NVDA) has been by far the biggest beneficiary of that spend so far. Its GPUs offer best-in-class capabilities for general AI training and inference. Other AI accelerator chipmakers have also seen strong sales growth, including Broadcom (AVGO), which makes custom AI chips as well as networking chips, which ensure data moves efficiently from one server to another, keeping downtime to a minimum.

Broadcom’s stock price has increased more than fivefold since the start of 2023, and the company now sports a market cap of $1.4 trillion. Another year of spectacular growth could easily place it in the $2 trillion club. But another semiconductor stock looks like a more likely candidate to reach that vaunted level, joining Nvidia and the four other members of the club by 2028.


Is Broadcom a $2 trillion company?

Broadcom is a massive company with operations spanning hardware and software, but its AI chips business is currently steering the ship.

To that end, AI revenue climbed 46% year over year last quarter to reach $4.4 billion. Management expects the current quarter to produce $5.1 billion in AI semiconductor revenue, accelerating growth to roughly 60%. AI-related revenue now accounts for roughly 30% of Broadcom’s sales, and that’s set to keep climbing over the next few years.

Broadcom’s acquisition of VMware last year is another growth driver. The software company is now fully integrated into Broadcom’s larger operations, and it’s seen strong success in upselling customers to the VMware Cloud Foundation, enabling enterprises to run their own private clouds. Over 87% of its customers have transitioned to the new subscription, resulting in double-digit growth in annual recurring revenue.

But Broadcom shares are extremely expensive. The stock garners a forward P/E ratio of 45. While its AI chip sales are growing quickly and it’s seeing strong margin improvement from VMware, it’s important not to lose sight of how broad a company Broadcom is. Despite the stellar growth in those two businesses, the company is still only growing its top line at about 20% year over year. Investors should expect only incremental margin improvements going forward as it scales the AI accelerator business. That means the business is set up for strong earnings growth, but not enough to justify its 45 times earnings multiple.

Another semiconductor stock trades at a much more reasonable multiple, and is growing just as fast.

The semiconductor giant poised to join the $2 trillion club by 2028

Both Broadcom and Nvidia rely on another company to ensure they can create the most advanced semiconductors in the world for AI training and inference. That company is Taiwan Semiconductor Manufacturing (TSM), which actually prints and packages both companies’ designs. Almost every company designing leading-edge chips relies on TSMC for its technological capabilities. As a result, its market share of semiconductor manufacturing has climbed to more than two-thirds.

TSMC benefits from a virtuous cycle, ensuring it maintains and grows its massive market share. Its technology lead helps it win big contracts from companies like Nvidia and Broadcom. That gives it the capital to invest in expanding capacity and research and development for its next-generation process. As a result, it maintains its technology lead while offering enough capacity to meet the growing demand for manufacturing.

TSMC’s leading-edge process node, dubbed N2, will reportedly carry a 66% price premium per silicon wafer over the previous generation (N3). That’s a much bigger step-up in price than it’s historically managed, but the demand for the process is strong as companies are willing to spend whatever it takes to access the next bump in power and energy efficiency. While TSMC typically experiences a significant drop-off in gross margin as it ramps up a new expensive node with lower initial yields, its current pricing should help it maintain its margins for years to come as it eventually transitions to an even more advanced process next year.

Management expects AI-related revenue to average mid-40% growth per year from 2024 through 2029. While AI chips are still a relatively small part of TSMC’s business, that should produce overall revenue growth of about 20% for the business. Its ability to maintain a strong gross margin as it ramps up the next two manufacturing processes should allow it to produce operating earnings growth exceeding that 20% mark.

TSMC’s stock trades at a much more reasonable earnings multiple of 24 times expectations. Considering the business could generate earnings growth in the low 20% range, that’s a great price for the stock. If it can maintain that earnings multiple through 2028 while growing earnings at about 20% per year, the stock will be worth well over $2 trillion at that point.
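The compounding behind that claim can be sketched as follows. If the earnings multiple stays constant, market value scales with earnings. The article does not state TSMC's current market cap, so this sketch computes the starting value that would be needed to reach $2 trillion instead of producing a forecast. The three-year horizon is an assumption, and the function names are hypothetical.

```python
def compound_multiple(annual_growth, years):
    """Total growth factor after compounding for a number of years."""
    return (1 + annual_growth) ** years


def required_starting_cap(target_cap, annual_growth, years):
    """Starting market cap needed to hit the target at a constant multiple."""
    return target_cap / compound_multiple(annual_growth, years)


growth = 0.20  # about 20% earnings growth per year, per the article
years = 3      # assumption: three full years of growth before 2028
multiple = compound_multiple(growth, years)
needed = required_starting_cap(2.0e12, growth, years)

print(f"growth multiple: {multiple:.3f}")                   # 1.728
print(f"starting cap needed: ${needed / 1e12:.2f} trillion")  # $1.16 trillion
```

Under these assumptions, any starting market cap above roughly $1.16 trillion would clear $2 trillion; a shorter horizon or slower growth raises that threshold.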

Adam Levy has positions in Alphabet, Amazon, Apple, Microsoft, and Taiwan Semiconductor Manufacturing. The Motley Fool has positions in and recommends Alphabet, Amazon, Apple, Microsoft, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends Broadcom and recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.


Physicians Lose Cancer Detection Skills After Using Artificial Intelligence

Artificial intelligence shows great promise in helping physicians improve their diagnostic accuracy for important patient conditions. In the realm of gastroenterology, AI has been shown to help human physicians better detect small polyps (adenomas) during colonoscopy. Although adenomas are not yet cancerous, they are at risk for turning into cancer. Thus, early detection and removal of adenomas during routine colonoscopy can reduce patient risk of developing future colon cancers.

But as physicians become more accustomed to AI assistance, what happens when they no longer have access to AI support? A recent European study has shown that physicians’ skills in detecting adenomas can deteriorate significantly after they become reliant on AI.

The European researchers tracked the results of over 1400 colonoscopies performed in four different medical centers. They measured the adenoma detection rate (ADR) for physicians working normally without AI vs. those who used AI to help them detect adenomas during the procedure. They also tracked the ADR of the physicians who had used AI regularly for three months, then resumed performing colonoscopies without AI assistance.

The researchers found that the ADR before AI assistance was 28% and with AI assistance was 28.4%. (This was a slight increase, but not statistically significant.) However, when physicians accustomed to AI assistance ceased using AI, their ADR fell significantly to 22.4%. Assuming the patients in the various study groups were medically similar, that suggests that physicians accustomed to AI support might miss over a fifth of adenomas without computer assistance!
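The "over a fifth" figure is a relative change in detection rate, and the arithmetic can be checked with the numbers quoted above. Which baseline the comparison uses is an assumption here, so the sketch computes both; the variable names are illustrative.

```python
def relative_drop(before, after):
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before


adr_before_ai = 28.0         # percent, before AI assistance
adr_with_ai = 28.4           # percent, with AI assistance
adr_after_ai_removed = 22.4  # percent, after AI was withdrawn

drop_vs_pre_ai = relative_drop(adr_before_ai, adr_after_ai_removed)
drop_vs_with_ai = relative_drop(adr_with_ai, adr_after_ai_removed)

print(f"vs. pre-AI rate:  {drop_vs_pre_ai:.1%}")   # 20.0%
print(f"vs. with-AI rate: {drop_vs_with_ai:.1%}")  # 21.1%
```

Measured against the AI-assisted rate, the relative drop is about 21%, which matches "over a fifth"; measured against the pre-AI rate it is 20%. In absolute terms, both correspond to a fall of roughly six percentage points.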

This is the first published example of so-called medical “deskilling” caused by routine use of AI. The study authors summarized their findings as follows: “We assume that continuous exposure to decision support systems such as AI might lead to the natural human tendency to over-rely on their recommendations, leading to clinicians becoming less motivated, less focused, and less responsible when making cognitive decisions without AI assistance.”

Consider the following non-medical analogy: Suppose self-driving car technology advanced to the point that cars could safely decide when to accelerate, brake, turn, change lanes, and avoid sudden unexpected obstacles. If you relied on self-driving technology for several months, then suddenly had to drive without AI assistance, would you lose some of your driving skills?

Although this particular study took place in the field of gastroenterology, I would not be surprised if we eventually learn of similar AI-related deskilling in other branches of medicine, such as radiology. At present, radiologists do not routinely use AI while reading mammograms to detect early breast cancers. But when AI becomes approved for routine use, I can imagine that human radiologists could succumb to a similar performance loss if they were suddenly required to work without AI support.

I anticipate more studies will be performed to investigate the issue of deskilling across multiple medical specialties. Physicians, policymakers, and the general public will want to ask the following questions:

1) As AI becomes more routinely adopted, how are we tracking patient outcomes (and physician error rates) before AI, after routine AI use, and whenever AI is discontinued?

2) How long does the deskilling effect last? What methods can help physicians minimize deskilling, and/or recover lost skills most quickly?

3) Can AI be implemented in medical practice in a way that augments physician capabilities without deskilling?

Deskilling is not always bad. My 6th grade schoolteacher kept telling us that we needed to learn long division because we wouldn’t always have a calculator with us. But because of the ubiquity of smartphones and spreadsheets, I haven’t done long division with pencil and paper in decades!

I do not see AI completely replacing human physicians, at least not for several years. Thus, it will be incumbent on the technology and medical communities to discover and develop best practices that optimize patient outcomes without endangering patients through deskilling. This will be one of the many interesting and important challenges facing physicians in the era of AI.
