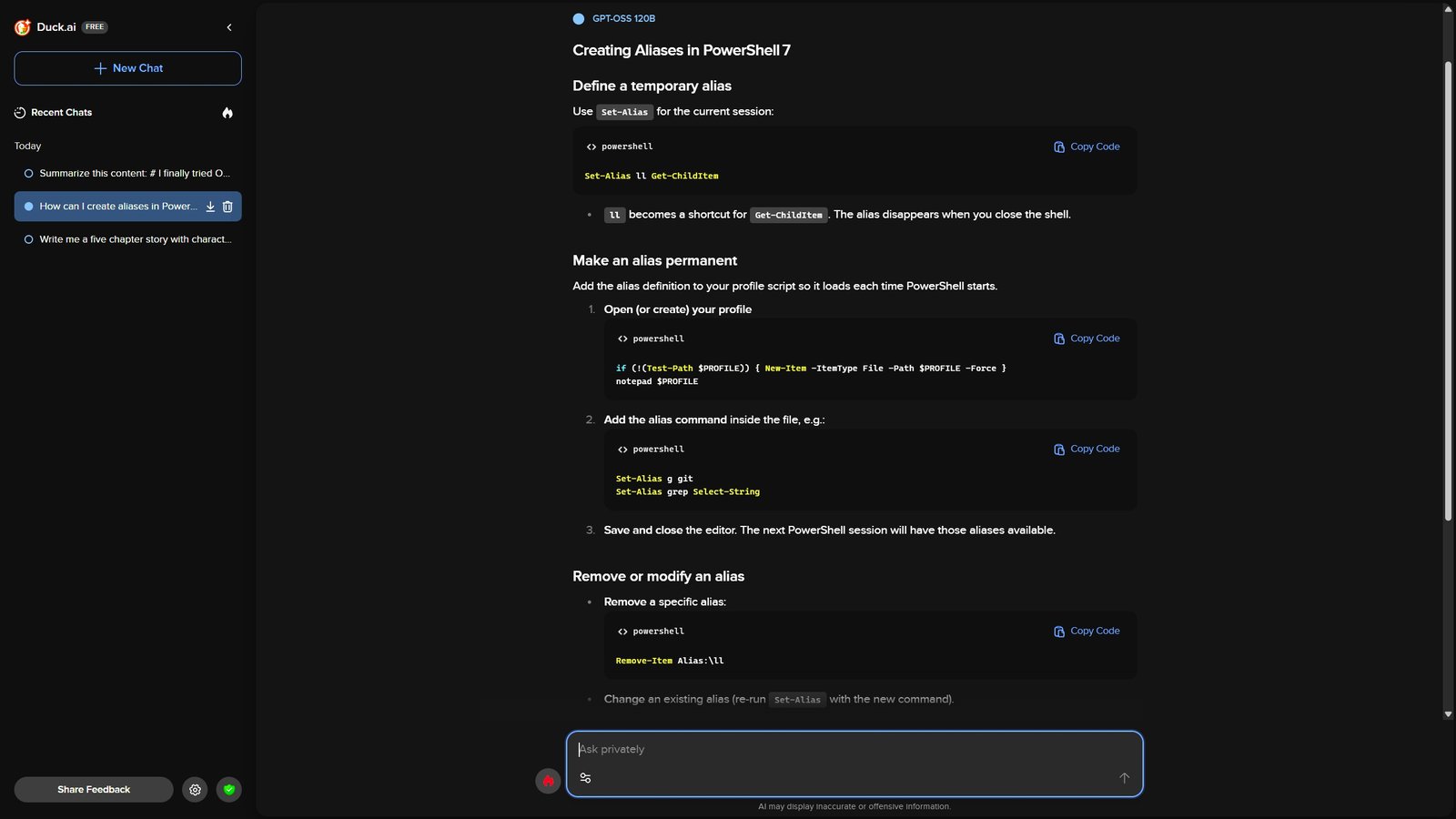

I’ll admit that until an email dropped into my inbox, I wasn’t even aware DuckDuckGo had an AI chatbot akin to ChatGPT on the go. But now I am, and according to that email, it can now run OpenAI’s gpt-oss:120b LLM.

Traditionally, an open-source model such as this is one you would run locally through a tool such as Ollama or LM Studio, but thanks to DuckDuckGo, anyone can use it privately and for free.

Why does this matter? If you were to download gpt-oss:120b in all of its 120-billion-parameter glory to use at its best in Ollama, you would need more VRAM than a pair of RTX 5090s provides (32GB each, so 64GB combined).

It’s 65GB in size, so unless you have some monstrous GPU power in your rack, or something like an AMD Strix Halo-powered PC with all that lovely unified memory, it’s pretty tough to run on consumer hardware.

Especially tough to run well.

What Duck.ai provides is free access to this model, running on its servers rather than your own machine. As it’s provided by DuckDuckGo, a company well known for its commitment to privacy, it’s probably as trustworthy as you’ll get from an online tool of this kind.

DuckDuckGo even flat out states that all chats are anonymized, and, as with the other free-to-use models, you don’t need an account. No sign-ups, no email address, just open the web page and start prompting your behind off.

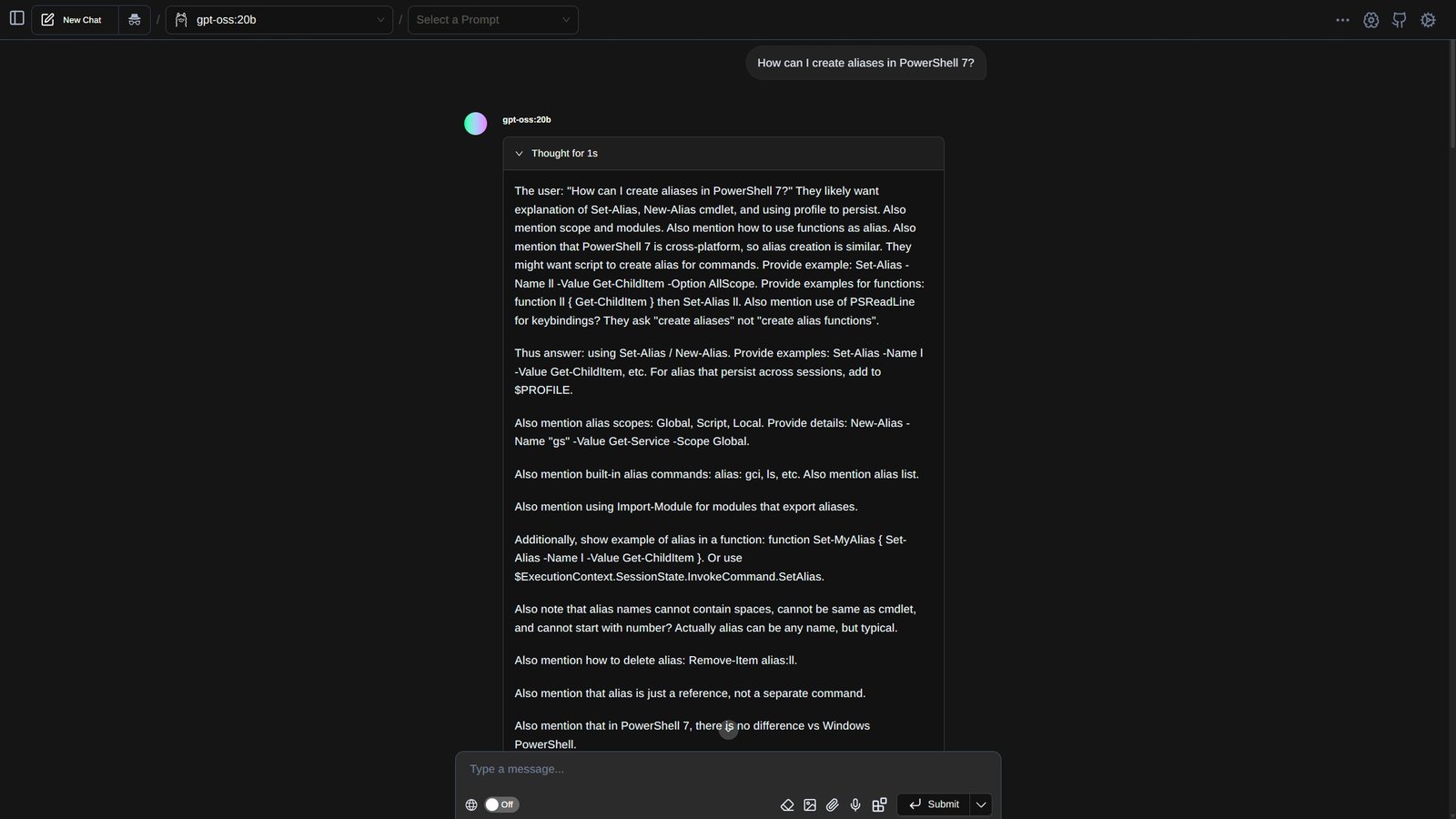

So, how is it? It’s fast. As if it wouldn’t be, when it’s powered by some massive cluster of hardware somewhere that isn’t your home. In my limited time playing with it so far, it seems at least as quick at generating responses as the 20b model is on my RTX 5090 (Duck.ai doesn’t show a tokens-per-second figure), but with one difference I’m not sure how I feel about yet.

Using gpt-oss:120b in Duck.ai, you don’t see the model’s reasoning; it just returns the final response. The more I’ve used gpt-oss on my own machine, the more I’ve come to appreciate being able to see this information.

Maybe it’s just me, but it’s always interesting, and sometimes enlightening, to see how the model arrived at its output. In some cases, this is how I’ve learned where it made mistakes, and I feel like that’s valuable information. I’d love it if Duck.ai at least offered a setting to show or hide it.

You also can’t upload your own files to use with the model. Some of the other models on Duck.ai support image uploads, but as far as I can tell, none allow you to upload other files such as documents or code samples. This is perhaps part of the privacy angle, but it does limit how you might want to use it.

It’s generally really good, though, and since it’s built into a web app that feels a lot like ChatGPT or Google Gemini, it’s welcoming and easy to use. It saves your recent chats in the sidebar, and the settings for tweaking your responses are pretty thorough. These all apply, of course, to any of the models you use on Duck.ai, not just gpt-oss:120b.

I might have found a new gem for my AI arsenal here, but to decide on that, I’ll have to play with it some more. For now, I’m just happy I can try gpt-oss:120b without needing a GPU farm. Or an NVIDIA Blackwell Pro.