Ethics & Policy

Misuse of AI in War, the Commoditization of Intelligence, and more.

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. Stay informed on the evolving world of AI ethics with key research, insightful reporting, and thoughtful commentary. Learn more at montrealethics.ai/about.

Follow MAIEI on Bluesky and LinkedIn.

- Beyond the Façade: Challenging and Evaluating the Meaning of Participation in AI Governance – Tech Policy Press

- Elon Musk staffer created a DOGE AI assistant for making government ‘less dumb’ – TechCrunch

Fireside Chat: Funding Landscape and the Future of the Digital Rights Ecosystem (RightsCon 2025, Taipei)

In an op-ed for MAIEI, Seher Shafiq of the Mozilla Foundation reflects on key discussions from RightsCon 2025 in Taipei, highlighting three urgent challenges in the digital rights space:

- A deepening funding crisis threatens the ability of advocacy organizations to respond effectively to emerging digital threats. In 2023, foreign aid totaled $233 billion, but $78 billion of it, nearly one-third of the sector’s funding, was cut this year and vanished overnight, leaving a crisis that is still unfolding.

- The weaponization of AI, particularly in war, conflict, and genocide, raises new concerns about the ethical and human rights implications of automated decision-making.

- The lack of Global Majority representation in shaping AI governance frameworks highlights the need for more inclusive perspectives in decision-making. These voices matter now more than ever.

These challenges are interconnected. Funding shortfalls limit advocacy efforts at a time when AI systems are being deployed in high-stakes environments with little oversight. Meanwhile, those most affected by AI-driven harms—particularly in the Global Majority (also known as the Global South)—are often excluded from shaping solutions. Addressing these gaps requires not only new funding models and stronger AI governance frameworks, but also a fundamental shift in whose knowledge and experiences guide global AI policy.

As AI’s impact on human rights continues to grow, the conversation must evolve beyond technical fixes and policy frameworks to focus on community resilience, diverse coalitions, and a commitment to tech development that centers dignity and justice for all. The urgency felt at RightsCon 2025 wasn’t just about individual crises—it was about rethinking how the digital rights ecosystem itself must adapt to meet this moment.

📖 Read Seher’s op-ed on the MAIEI website.

Since our last post on AI agents, nearly every Big Tech company has rolled out a new agentic research feature—and they’re all practically named the same. OpenAI released DeepResearch within ChatGPT, Perplexity did the same with their own Deep Research (powered by DeepSeek’s R1 model, which we analyzed here), and Google launched Deep Research in its Gemini model back in December. X (formerly Twitter) took a slightly different path, calling its tool DeepSearch.

Beyond similarities in branding, the aim of these tools remains the same: to synthesize complex research in minutes that would otherwise take a human multiple hours to do. The basic principle involves the user prompting the model with a research direction, refining the research plan presented by the model, and then leaving it to scour the internet for insights and present its findings.

The benchmarks used to evaluate these models vary. They include Humanity’s Last Exam (HLE), a set of nearly 2,700 interdisciplinary questions designed to test LLMs on a broad range of expert-level academic subjects, and the General AI Assistant (GAIA) Benchmark, which evaluates AI assistants on real-world problem-solving tasks.

While the ability to save many hours of research is promising, it must be taken with a pinch of salt. These models still hallucinate, making up references and struggling to distinguish authoritative sources from misinformation. Furthermore, given the increase in processing time (e.g. ChatGPT can spend up to 30 minutes compiling a research report), the amount of compute required will increase, driving up the models’ environmental costs. As the agentic AI race heats up (both metaphorically and literally), the competition isn’t just about efficiency; it’s about whether these tools can truly justify their costs and risks.

Did we miss anything? Let us know in the comments below.

A recent conversation over coffee sparked this question: as AI agents and AI-generated content proliferate across the web at an unprecedented rate, what remains uniquely human? If AI can outperform us in IQ-based tasks—summarizing papers, answering complex questions, and writing compelling text—where does our distinct value lie?

While AI systems can generate articulate and even persuasive content, they lack depth of understanding. They can explain, but they cannot truly comprehend. They can mimic empathy, but they cannot feel. This distinction matters, particularly in fields like mental health, education, and creative work, where emotional intelligence (EQ) is as crucial as cognitive intelligence (IQ).

An alarming example is AI’s role in therapy and emotional support. When LLMs are trained to optimize for the probability of the “next best word,” they lack the contextual judgment required to prioritize ethical considerations. Recent instances where AI chatbots have encouraged self-harm illustrate the dangers of mistaking superficial empathy (articulating empathetic phrases) for genuine empathy (authentic care and understanding).

This raises a broader question: What is the value of human interpretation in an age of AI-generated content? As AI-generated knowledge becomes commodified, our human capabilities (analytical depth, emotional intelligence, and ethical reasoning) grow more valuable. Without thoughtful boundaries on AI usage, we risk a future where intelligence is cheap, but wisdom is rare.

At MAIEI, we recognize this challenge. Our editorial stance on AI tools in submissions requires explicit disclosure of any AI tool usage in developing content, including research summaries, op-eds, and original essays. We actively seek feedback from contributors, readers, and partners as we refine these standards. Our goal remains balancing technological innovation with the integrity of human intellectual contribution that advances AI ethics.

As part of this broader conversation, redefining literacy in an AI-driven world is becoming increasingly important. Kate Arthur, an advisor to MAIEI, explores this evolving concept in her newly published book, Am I Literate? Redefining Literacy in the Age of Artificial Intelligence. Reflecting on her journey as an educator and advocate for digital literacy, she examines how AI is reshaping our understanding of knowledge, communication, and critical thinking. Her insights offer a timely perspective on what it truly means to be literate in an era where AI-generated content is ubiquitous.

To dive deeper, read more details on “Am I Literate?” here.

In each edition, we highlight a question from the MAIEI community and share our insights. Have a question on AI ethics? Send it our way, and we may feature it in an upcoming edition!

We’re curious to get a sense of where readers stand on AI-generated content, especially as it becomes increasingly prevalent across industries. From research reports to creative writing, AI is producing content at scale.

But does knowing something was AI-generated affect how much we trust or value it? Is the quality of content all that matters, or does authorship and authenticity still hold significance?

💡 Vote and share your thoughts!

- It’s all about substance – If the content is accurate and useful, I don’t care who (or what) created it.

- Human touch matters – I trust and value human-crafted content more, even if AI can generate similar results.

- Context is key – Context matters (e.g., news vs. creative writing vs. research).

- AI content should always be disclosed – Transparency matters, and AI-generated work should always be labeled.

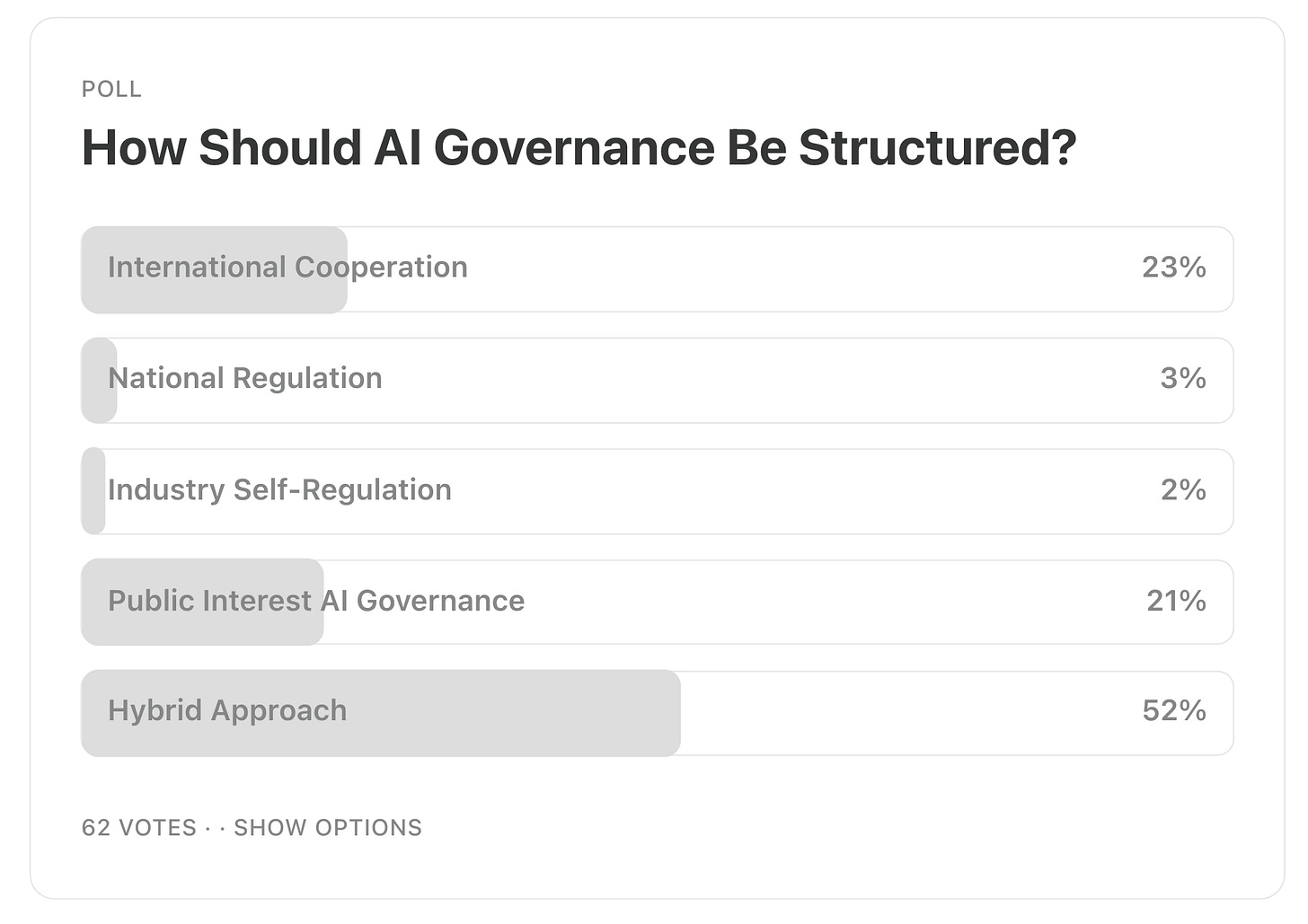

Our latest informal poll (n=62) suggests that a hybrid approach is the preferred model for AI governance, with 52% of respondents favoring a mix of government regulation, industry innovation, and public oversight. This reflects a broad consensus that AI governance should balance accountability and progress, integrating multiple stakeholders to ensure ethical and effective AI deployment.

International cooperation emerged as the second most favored option, securing 23% of the vote. This highlights the recognition that AI’s global impact requires cross-border regulatory frameworks to address challenges like bias, misinformation, and ethical concerns at an international level.

Public interest AI governance, which emphasizes civil society, academia, and advocacy groups shaping oversight, received 21% support. This suggests that many believe governance should be driven by broader societal values rather than just governments or corporations.

By contrast, national regulation (3%) and industry self-regulation (2%) received minimal support, indicating that respondents view government-only oversight or corporate-led governance as insufficient to address the complexities of AI governance effectively.

- A hybrid approach dominates as the preferred governance model, emphasizing the need for collaborative oversight that includes governments, industry, civil society, academia, and other diverse stakeholders to ensure inclusive and effective AI governance.

- International cooperation is seen as crucial, reinforcing the need for global AI standards and regulatory consistency.

- Public interest AI governance holds strong support, reflecting a desire for civil society and academic institutions to play a central role in shaping AI policy.

- Minimal support for national or industry self-regulation suggests that most respondents favor multi-stakeholder approaches over isolated governance models.

As AI governance debates continue, the challenge will be in defining effective structures that balance innovation, accountability, and global coordination to ensure AI serves the public good.

Teaching Responsible AI in a Time of Hype

As AI literacy becomes an urgent priority, organizations across sectors are rushing to provide training. But what should these courses truly accomplish? In this essay, Thomas Linder reflects on redesigning a responsible AI course at Concordia University in Montreal, originally developed by the late Abhishek Gupta before the generative AI boom. He highlights three key goals: decentering generative AI, unpacking AI concepts, and anchoring responsible AI in stakeholder perspectives. Linder concludes with recommendations to ensure AI education remains focused on ethical outcomes.

To dive deeper, read the full essay here.

Documenting the Impacts of Foundation Models – Partnership on AI

Our Director of Partnerships, Connor Wright, participated in Partnership on AI’s Post-Deployment Governance Practices Working Group, providing insights into how best to document the impacts of foundation models. The document presents the pain points involved in the process of documenting foundation model effects and offers recommendations, examples, and best practices for overcoming these challenges.

To dive deeper, download and read the full report here.

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack for the price of a coffee or making a one-time or recurring donation at montrealethics.ai/donate

Your support sustains our mission of Democratizing AI Ethics Literacy, honours Abhishek Gupta’s legacy, and ensures we can continue serving our community.

For corporate partnerships or larger donations, please contact us at support@montrealethics.ai

Towards a Feminist Metaethics of AI

Despite numerous guidelines and codes of conduct about the ethical development and deployment of AI, neither academia nor practice has undertaken comparable efforts to explicitly and systematically evaluate the field of AI ethics itself. Such an evaluation would benefit from a feminist metaethics, which asks not only what ethics is but also what it should be like.

To dive deeper, read the full summary here.

Collectionless Artificial Intelligence

Learning from huge data collections introduces risks related to data centralization, privacy, energy efficiency, limited customizability, and control. This paper focuses on the perspective in which artificial agents are progressively developed over time by online learning from potentially lifelong streams of sensory data. This is achieved without storing the sensory information and without building datasets for offline learning purposes while pushing towards interactions with the environment, including humans and other artificial agents.

To dive deeper, read the full summary here.

- What happened: In this reflection on the AI Action Summit held in Paris in February, the author homes in on the performative nature of the event.

- Why it matters: The author notes that enforcing AI safety through mechanisms such as AI audits will be ineffective if those mechanisms lack the power to decommission AI systems found to be at fault. Furthermore, those who air their grievances at these summits are not given the tools to help resolve their situation, leaving them with little more than corporate platitudes.

- Between the lines: Effective governance must include the power to act upon its findings. For AI harms to be truly mitigated, those who are harmed ought to be equipped with the appropriate tools and know-how to help combat any injustices they experience.

To dive deeper, read the full article here.

Elon Musk staffer created a DOGE AI assistant for making government ‘less dumb’ – TechCrunch

- What happened: A senior Elon Musk staffer working at both the White House and SpaceX has reportedly developed an AI assistant to help the Department of Government Efficiency (DOGE) improve its efficiency. The chatbot was trained on five guiding principles for DOGE, including making government “less dumb” and optimizing business processes, amongst others.

- Why it matters: This is another example of the extreme cost-cutting measures being undertaken by DOGE in the US government, which has already raised privacy and legal concerns.

- Between the lines: Given the propensity of large language models (LLMs) to hallucinate, the consequences of such errors grow sharply when the technology is applied to government, a notoriously sensitive area. For a truly transformative government bureaucracy experience, LLMs need to be far more robust than they currently are.

To dive deeper, read the full article here.

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

The Aider LLM Leaderboards provide a benchmark for evaluating large language models (LLMs) in code editing and programming tasks. By assessing models across multiple languages—including Python, C++, Java, and Rust—the leaderboard serves as a valuable resource for understanding how different AI models perform in real-world coding applications. Whether you’re a developer, researcher, or policymaker, this dataset offers key insights into LLM strengths, weaknesses, and cost-performance trade-offs in software engineering.

To dive deeper, read more details here.

Harnessing Collective Intelligence Under a Lack of Cultural Consensus

Harnessing collective intelligence (CI) to solve complex problems benefits from the ability to detect and characterize heterogeneity in consensus beliefs. This is particularly true in domains where a consensus amongst respondents defines an intersubjective “ground truth,” leading to a multiplicity of ground truths when subsets of respondents sustain mutually incompatible consensuses. In this paper, we extend Cultural Consensus Theory, a classic mathematical framework to detect divergent consensus beliefs, to allow culturally held beliefs to take the form of a deep latent construct: a fine-tuned deep neural network that maps features of a concept or entity to the consensus response among a subset of respondents via stick-breaking construction.

To dive deeper, read the full summary here.

We’d love to hear from you, our readers, about any recent research papers, articles, or newsworthy developments that have captured your attention. Please share your suggestions to help shape future discussions!

Formulating An Artificial General Intelligence Ethics Checklist For The Upcoming Rise Of Advanced AI

While devising artificial general intelligence (AGI), AI developers will increasingly consult an AGI Ethics checklist.

In today’s column, I address a topic that hasn’t yet gotten the attention it rightfully deserves. The matter entails focusing on the advancement of AI to become artificial general intelligence (AGI), along with encompassing suitable AGI Ethics mindsets and practices during and once we arrive at AGI. You see, there are already plenty of AI ethics guidelines for conventional AI, but few that are attuned to the envisioned semblance of AGI.

I offer a strawman version of an AGI Ethics Checklist to get the ball rolling.

Let’s talk about it.

This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here).

Heading Toward AGI And ASI

First, some fundamentals are required to set the stage for this discussion.

There is a great deal of research going on to further advance AI. The general goal is to either reach artificial general intelligence (AGI) or maybe even the outstretched possibility of achieving artificial superintelligence (ASI).

AGI is AI that is considered on par with human intellect and can seemingly match our intelligence. ASI is AI that has gone beyond human intellect and would be superior in many if not all feasible ways. The idea is that ASI would be able to run circles around humans by outthinking us at every turn. For more details on the nature of conventional AI versus AGI and ASI, see my analysis at the link here.

We have not yet attained AGI.

In fact, it is unknown whether we will reach AGI at all, or whether AGI might be achievable decades or perhaps centuries from now. The AGI attainment dates that are floating around are wildly varying and wildly unsubstantiated by any credible evidence or ironclad logic. ASI is even more beyond the pale when it comes to where we are currently with conventional AI.

Doomers Versus Accelerators

AI insiders are generally divided into two major camps right now about the impacts of reaching AGI or ASI. One camp consists of the AI doomers. They are predicting that AGI or ASI will seek to wipe out humanity. Some refer to this as “P(doom),” which means the probability of doom, or that AI zonks us entirely, also known as the existential risk of AI (i.e., x-risk).

The other camp entails the upbeat AI accelerationists.

They tend to contend that advanced AI, namely AGI or ASI, is going to solve humanity’s problems. Cure cancer, yes indeed. Overcome world hunger, absolutely. We will see immense economic gains, liberating people from the drudgery of daily toils. AI will work hand-in-hand with humans. This benevolent AI is not going to usurp humanity. AI of this kind will be the last invention humans have ever made, but that’s good in the sense that AI will invent things we never could have envisioned.

No one can say for sure which camp is right, and which one is wrong. This is yet another polarizing aspect of our contemporary times.

For my in-depth analysis of the two camps, see the link here.

Trying To Keep Evil Away

We can certainly root for the upbeat side of advanced AI. Perhaps AGI will be our closest friend, while the pesky and futuristic ASI will be the evil destroyer. The overall sense is that we are likely to attain AGI first before we arrive at ASI.

ASI might take a long time to devise. But maybe the length of time will be a lot shorter than we envision if AGI will support our ASI ambitions. I’ve discussed that AGI might not be especially keen on us arriving at ASI, thus there isn’t any guarantee that AGI will willingly help propel us toward ASI, see my analysis at the link here.

The bottom line is that we cannot reasonably bet our lives that the likely first arrival, namely AGI, is going to be a bundle of goodness. There is an equally plausible chance that AGI could be an evildoer. Or that AGI will be half good and half bad. Who knows? It could be 1% bad, 99% good, which is a nice dreamy happy face perspective. That being said, AGI could be 1% good and 99% bad.

Efforts are underway to try and prevent AGI from turning out to be evil.

Conventional AI already has demonstrated that it is capable of deceptive practices, and even ready to perform blackmail and extortion (see my discussion at the link here). Maybe we can find ways to stop conventional AI from those woes and then use those same approaches to keep AGI on the upright path to abundant decency and high virtue.

That’s where AI ethics and AI laws come into the big picture.

The hope is that we can get AI makers and AI developers to adopt AI ethics techniques and abide by AI-devising legal guidelines so that current-era AI will stay within suitable bounds. By setting conventional AI on a proper trajectory, AGI might come out in the same upside manner.

AI Ethics And AI Laws

There is an abundance of conventional AI ethics frameworks that AI builders can choose from.

For example, the United Nations has an extensive AI ethics methodology (see my coverage at the link here), the NIST has a robust AI risk management scheme (see my coverage at the link here), and so on. They are easy to find. There isn’t an excuse anymore that an AI maker has nothing available to provide AI ethics guidance. Plenty of AI ethics frameworks exist and are readily available.

Sadly, some AI makers don’t care about such practices and see them as impediments to making fast progress in AI. It is the classic belief that it is better to ask forgiveness than to get permission. A concern with this mindset is that we could end up with AGI which has a full-on x-risk, after which things will be far beyond our ability to prevent catastrophe.

AI makers should also be keeping tabs on the numerous new AI laws that are being established and that are rapidly emerging, see my discussion at the link here. AI laws are considered the hard or tough side of regulating AI since laws usually have sharp teeth, while AI ethics is construed as the softer side of AI governance due to typically being of a voluntary nature.

From AI To AGI Ethics Checklist

We can stratify the advent of AGI into three handy stages:

- (1) Pre-AGI. This includes today’s conventional AI and the rest of the pathway up to attaining AGI.

- (2) Attained-AGI. This would be the time at which AGI has been actually achieved.

- (3) Post-AGI. This is after AGI has been attained and we are dealing with an AGI era upon us.

I propose here a helpful AGI Ethics Checklist that would be applicable across all three stages. I’ve constructed the checklist by considering the myriad conventional AI ethics checklists and then boosting and adjusting them to accommodate the nature of the envisioned AGI.

To keep the AGI Ethics Checklist usable for practitioners, I opted to focus on the key factors that AGI warrants. The numbering of the checklist items is only for convenience of reference and does not denote any semblance of priority. They are all important. Generally speaking, they are all equally deserving of attention.

Here then is my overarching AGI Ethics Checklist:

- (1) AGI Alignment and Safety Policies. Key question: How can we ensure that AGI acts in ways that are beneficial to humanity and avoids catastrophic risks (which, in the main, entails alignment with human values and the safety of humankind)?

- (2) AGI Regulations and Governance Policies. Key question: What is the impact of AGI-related regulations such as new laws, existing laws, etc., and the emergence of efforts to instill AI governance modalities into the path to and attainment of AGI?

- (3) AGI Intellectual Property (IP) and Open Access Policies. Key question: In what ways will IP laws restrict or empower the advent of AGI, and likewise, how will open source versus closed source have an impact on AGI?

- (4) AGI Economic Impacts and Labor Displacement Policies. Key question: How will AGI and the pathway to AGI have economic impacts on society, including for example labor displacement?

- (5) AGI National Security and Geopolitical Competition Policies. Key question: How will AGI have impacts on national security such as bolstering the security and sovereignty of some nations and undermining other nations, and how will the geopolitical landscape be altered for those nations that are pursuing AGI or that attain AGI versus those that are not?

- (6) AGI Ethical Use and Moral Status Policies. Key question: How will the use of AGI in unethical ways impact the pathway and advent of AGI, how would positive ethical uses that are encoded into AGI be of benefit or detriment, and what impact would recognizing AGI as having legal personhood or moral status have?

- (7) AGI Transparency and Explainability Policies. Key question: How will the degree of AGI transparency and interpretability or explainability impact the pathway and attainment of AGI?

- (8) AGI Control, Containment, and “Off-Switch” Policies. Key question: A societal concern is whether AGI can be controlled, and/or contained, and whether an off-switch or deactivation mechanism will be possible or might be defeated and readily overtaken by AGI (so-called runaway AGI) – what impact do these considerations have on the pathway and attainment of AGI?

- (9) AGI Societal Trust and Public Engagement Policies. Key question: During the pathway and the attainment of AGI, what impact will societal trust in AI and public engagement have, especially when considering potential misinformation and disinformation about AGI (along with secrecy associated with the development of AGI)?

- (10) AGI Existential Risk Management Policies. Key question: A high-profile worry is that AGI will lead to human extinction or human enslavement – what impact will this have on the pathway and attainment of AGI?

In my upcoming column postings, I will delve deeply into each of the ten. This is the 30,000-foot level or top-level perspective.

Related Useful Research

For those further interested in the overall topic of AI Ethics checklists, a recent meta-analysis examined a large array of conventional AI checklists to see what they have in common, along with their differences. Furthermore, a notable aim of the study was to try and assess the practical nature of such checklists.

The research article is entitled “The Rise Of Checkbox AI Ethics: A Review” by Sara Kijewski, Elettra Ronchi, and Effy Vayena, AI and Ethics, May 2025, and proffered these salient points (excerpts):

- “We identified a sizeable and highly heterogeneous body of different practical approaches to help guide ethical implementation.”

- “These include not only tools, checklists, procedures, methods, and techniques but also a range of far more general approaches that require interpretation and adaptation such as for research and ethical training/education as well as for designing ex-post auditing and assessment processes.”

- “Together, this body of approaches reflects the varying perspectives on what is needed to implement ethics in the different steps across the whole AI system lifecycle from development to deployment.”

Another insightful research study delves into the specifics of AGI-oriented AI ethics and societal implications, doing so in a published paper entitled “Navigating Artificial General Intelligence (AGI): Societal Implications, Ethical Considerations, and Governance Strategies” by Dileesh Chandra Bikkasani, AI and Ethics, May 2025, which made these key points (excerpts):

- “Artificial General Intelligence (AGI) represents a pivotal advancement in AI with far-reaching implications across technological, ethical, and societal domains.”

- “This paper addresses the following: (1) an in‐depth assessment of AGI’s transformative potential across different sectors and its multifaceted implications, including significant financial impacts like workforce disruption, income inequality, productivity gains, and potential systemic risks; (2) an examination of critical ethical considerations, including transparency and accountability, complex ethical dilemmas and societal impact; (3) a detailed analysis of privacy, legal and policy implications, particularly in intellectual property and liability, and (4) a proposed governance framework to ensure responsible AGI development and deployment.”

- “Additionally, the paper explores and addresses AGI’s political implications, including national security and potential misuse.”

What’s Coming Next

Admittedly, getting AI makers to focus on AI ethics for conventional AI is already an uphill battle. Asking them to also attend to the similar but adjusted facets associated with AGI is going to be at least as much of a climb, and probably even harder to promote.

One way or another, it is imperative and requires keen commitment.

We need to simultaneously focus on the near-term and deal with the AI ethics of conventional AI, while also giving due diligence to AGI ethics associated with the somewhat longer-term attainment of AGI. When I refer to the longer term, there is a great deal of debate about how far off in the future AGI attainment will happen. AI luminaries are brazenly predicting AGI within the next few years, while most surveys of a broad spectrum of AI experts land on the year 2040 as the more likely AGI attainment date.

Whether AGI is a few years away or perhaps fifteen years away, it is nonetheless a matter of vital urgency and the years ahead are going to slip by very quickly.

Eleanor Roosevelt eloquently made this famous remark about time: “Tomorrow is a mystery. Today is a gift. That is why it is called the present.” We need to be thinking about and acting upon AGI Ethics right now, presently, or else the future is going to be a mystery that is resolved in a manner we all will find entirely and dejectedly unwelcome.

How Nonprofits Can Harness AI Without Losing Their Mission

Artificial intelligence is reshaping industries at a staggering pace, with nonprofit leaders now facing the same challenges and opportunities as their corporate counterparts. According to a Harvard Business Review study of 100 companies deploying generative AI, four strategic archetypes are emerging—ranging from bold innovators to disciplined integrators. For nonprofits, the stakes are even higher: harnessing AI effectively can unlock access, equity, and efficiency in ways that directly impact communities.

How can mission-driven organizations adopt emerging technologies without compromising their purpose? And what lessons can for-profit leaders learn from nonprofits already navigating this balance of ethics, empowerment, and revenue accountability?

Welcome to While You Were Working, brought to you by Rogue Marketing. In this episode, host Chip Rosales sits down with futurist and technologist Nicki Purcell, Chief Technology Officer at Morgan’s. Their conversation spans the future of AI in nonprofits, the role of inclusivity in innovation, and why rigor and curiosity must guide leaders through rapid change.

The conversation delves into…

- Empowerment over isolation: Purcell shares how Morgan’s embeds accessibility into every initiative, ensuring technology empowers both employees and guests across its inclusive parks, hotels, and community spaces.

- Revenue with purpose: She explains how nonprofits can apply for-profit rigor, like quarterly discipline and expense analysis, while balancing the complexities of donor, grant, and state funding.

- AI as a nonprofit advantage: Purcell argues that AI’s efficiency and cost-cutting potential make it essential for nonprofits, while stressing the importance of ethics, especially around disability inclusion and data privacy.

Article written by MarketScale.

Blackboard vs Whiteboard | Release Date, Reviews, Cast, and Where to Watch

Blackboard vs Whiteboard : Release Date, Trailer, Cast & Songs

| Title | Blackboard vs Whiteboard |
| --- | --- |
| Release status | Released |
| Release date | Apr 11, 2019 |
| Language | Hindi |
| Genre | Drama, Family |
| Actors | Raghubir Yadav, Ashok Samarth, Akhilendra Mishra |
| Director | Tarun Bisht Tarun s Bisht |
| Critic Rating | 5.8 |
| Streaming On | Airtel Xstream |
| Duration | 2 hr 32 mins |
