AI Research

Meta’s AI Reorg: What to Know About Meta Superintelligence Labs

If it feels like Meta has been in the news a lot lately, you’re not imagining things. Just weeks after unveiling Meta Superintelligence Labs (MSL), the tech giant is restructuring again, carving the new division into four separate teams and placing a hard stop on all hiring, per the Wall Street Journal.

It’s the latest twist in what has been nothing short of a whirlwind summer for Meta’s artificial intelligence operations. MSL was billed as a moonshot — an ambitious bid to deliver “personal superintelligence” that surpasses human intelligence in every way. To get there, the company embarked on an aggressive hiring spree, shelling out massive signing bonuses to lure top talent away from rivals like OpenAI and Google DeepMind. It also brought on heavy-hitters like former GitHub CEO Nat Friedman and Alexandr Wang, who joined in June after Meta entered into a $14 billion deal to invest in his company Scale AI.

But the road has been far from smooth. Meta’s new Llama 4 language models landed with a thud back in April, reportedly prompting CEO Mark Zuckerberg to “handpick” MSL’s team himself. And tensions have started mounting between the new hires and Meta’s veteran AI researchers, some of whom have threatened to quit (a few are already gone).


Now, with news of yet another massive reorg and total hiring freeze, it’s tempting to read Meta’s moves as signs of panic, a scramble to stay relevant in an AI arms race it was already losing. But the shake-up may also be part of a much larger strategy. It’s the messy reality of a company willing to upend itself in pursuit of what is shaping up to be the most consequential technology of our lifetimes.

“Meta is entering a new phase of its AI push. They went full steam on hiring the top talent they wanted, and now the focus is on figuring out how those people and teams fit together,” Luke Pierce, founder of Boom Automations, told Built In. “This is not a sign of being lost, it is a sign of being intentional. The reality is this technology is still relatively new, and even the biggest players are learning in real time how best to deploy it.”

What We Know About Meta’s AI Restructure

According to a since-leaked internal memo written by Wang, who is now Meta’s Chief AI Officer, the company is organizing its AI efforts into four dedicated teams, all of which are housed under the umbrella of Meta Superintelligence Labs:

- TBD Lab: A small team headed by Wang that is focused on training and scaling Meta’s largest models, with the ultimate goal of achieving superintelligence.

- FAIR (Fundamental AI Research): Meta’s long-standing AI research arm led by Director of AI Research Rob Fergus and Chief Scientist Yann LeCun.

- Products and Applied Research: Headed by former GitHub CEO Nat Friedman, this team is in charge of weaving Meta’s Llama models and other AI research into its consumer products.

- MSL Infrastructure (Infra): Led by former VP of engineering Aparna Ramini and former AGI Foundations head Amir Frenkel, this team is responsible for the infrastructure (GPUs, data centers) needed to power Meta’s AI research and development.

Wang’s memo also revealed the following:

- TBD Lab and FAIR will work together on research: FAIR will serve as an “innovation engine” for MSL, feeding its research directly into TBD Lab’s training runs. This is a major shift for FAIR, which has always functioned more like an independent academic lab than a tightly integrated part of Meta.

- TBD Lab is exploring an “omni” model: TBD’s work will involve exploring “new directions,” potentially including an “omni model.” While the memo does not explain what exactly an “omni model” is, it would likely operate similarly to a multimodal system that would handle text, visual, audio and other data types — which makes sense given MSL’s recent hires in those areas.

- Meta is dissolving AGI Foundations: Born out of Meta’s old GenAI division, AGI Foundations was created in May to continue developing the Llama language models. But the team drew criticism from executives after Llama 4’s lukewarm reception. Now, its members will be dispersed across MSL’s product, infrastructure and FAIR divisions. Notably, TBD Lab was not mentioned as a destination for ex-AGI Foundations members.

- Almost everyone reports to Wang: FAIR heads Rob Fergus and Yann LeCun will report to Wang. As will MSL Infra leads Aparna Ramini and Amir Frenkel. The same goes for Nat Friedman, head of Products and Applied Research, which is interesting given that Friedman was originally positioned as leading MSL alongside Wang, not reporting to him. The only person not mentioned as reporting to Wang is ChatGPT co-creator Shengjia Zhao, who is now MSL’s chief research scientist.

This marks the fourth overhaul of Meta’s AI operations in less than six months, raising doubts about whether all this reshuffling will be enough to propel the company to the forefront of the industry — especially given that most other key players have managed to sustain far greater organizational stability over the years. But Wang seems confident.

“I recognize that org changes can be disruptive,” he said in the memo. “But I truly believe that taking the time to get this structure right now will allow us to reach superintelligence with more velocity over the long term.”

What We Know About Meta’s Hiring Freeze

Meta imposed its hiring freeze on MSL around the same time as the restructure, halting both external hires and internal transfers unless they’ve been personally approved by Wang, according to the Wall Street Journal. The company has not said how long the freeze will last.

The pause comes after a months-long hiring spree led largely by Zuckerberg himself. As of mid-August, Meta had poached more than 50 AI researchers and engineers, including more than 20 from OpenAI, at least 13 from Google, three from Apple, three from Elon Musk’s xAI and two from Anthropic, the WSJ reports. Now, the New York Times says Wang and the team he and Zuckerberg assembled are scrapping Meta’s old frontier model, Behemoth, and starting anew. They’re also considering making this new model “closed,” a sharp break from the company’s long-standing practice of open-sourcing its model weights. The shift has reportedly intensified tensions between these newcomers and the old guard.

Internal squabbles aside, this influx of fresh talent has also cost Meta a fortune. Several of the new researchers received nine-figure pay packages to come aboard, and the company even offered to buy a stake in Nat Friedman’s venture firm to woo him and co-founder Daniel Gross. Zuckerberg reportedly offered Thinking Machines Lab co-founder Andrew Tulloch $1.5 billion to join, but Tulloch turned it down. During an investor call in July, Meta said its capital expenditures could be as much as $72 billion in 2025, with the bulk of it going toward building new data centers and hiring researchers.

Now, the company seems to be pumping the brakes. The Wall Street Journal says a Meta spokesperson characterized the hiring freeze as “basic organizational planning” — a way to build a “solid structure” for its superintelligence efforts after a surge of hiring and “yearly budgeting and planning exercises.”

“The freeze is less about saving money, and more about pausing the game of musical chairs,” Cat Valverde, founder of Enterprise AI Solutions, told Built In. “When you’ve restructured four times in six months, throwing new hires into that chaos just compounds the mess. My read is that Meta wants to stabilize reporting lines, prove the new org design actually works and only then start layering on fresh talent.”

What Does All of This Mean for the AI Industry?

News of MSL’s restructure and hiring freeze is coming at a time of rising scrutiny over the entire artificial intelligence industry, with experts warning that Big Tech’s rampant spending may not be sustainable for much longer. After all, Meta isn’t the only company burning billions of dollars to secure the talent and resources it needs to keep its AI operations afloat. Every major player seems to be locked in the same costly battle.

In August, analysts at Morgan Stanley reportedly cautioned that the hefty, stock-based compensation packages being offered by companies like Meta could “dilute shareholder value without any clear innovation gains.” Around the same time, MIT released a report claiming that 95 percent of organizations were seeing “zero return” on their AI investments, a data point that helped incite a massive selloff of tech stocks on the very day news of MSL’s hiring freeze broke. Even Sam Altman, the CEO of OpenAI, says he sees an AI bubble forming (though he is still confident in the technology’s long-term future).

All things considered, every AI company seems to be at a turning point right now, not just Meta. Whether it’s a sign that the bubble is on the verge of bursting or simply a necessary period of recalibration depends on who you ask. What’s clear is that the AI industry appears to be reaching a moment of truth. And Meta is right in the thick of it.

“Investors aren’t wowed by flashy demos anymore, they want to see revenue. Meta’s turbulence is a symptom of that shift,” Valverde said. “In AI, everyone’s experimenting in real time. Even the giants are guessing their way forward, which makes it feel a bit like the blind leading the blind. Meta’s missteps just highlight that nobody has cracked the code on how to turn breakthrough research into durable, scaled products.”

What is Meta Superintelligence Labs?

Meta Superintelligence Labs (MSL) is a division that houses all of Meta’s AI teams and initiatives, spanning research, model training, infrastructure and product integration. Together these teams are working toward the realization of superintelligence — AI that exceeds human intelligence in all ways.

Who is in charge of Meta’s AI strategy?

Meta CEO Mark Zuckerberg sets the company’s overall direction and AI strategy, while Chief AI Officer Alexandr Wang is responsible for running Meta Superintelligence Labs itself, overseeing its day-to-day operations.

AI Research

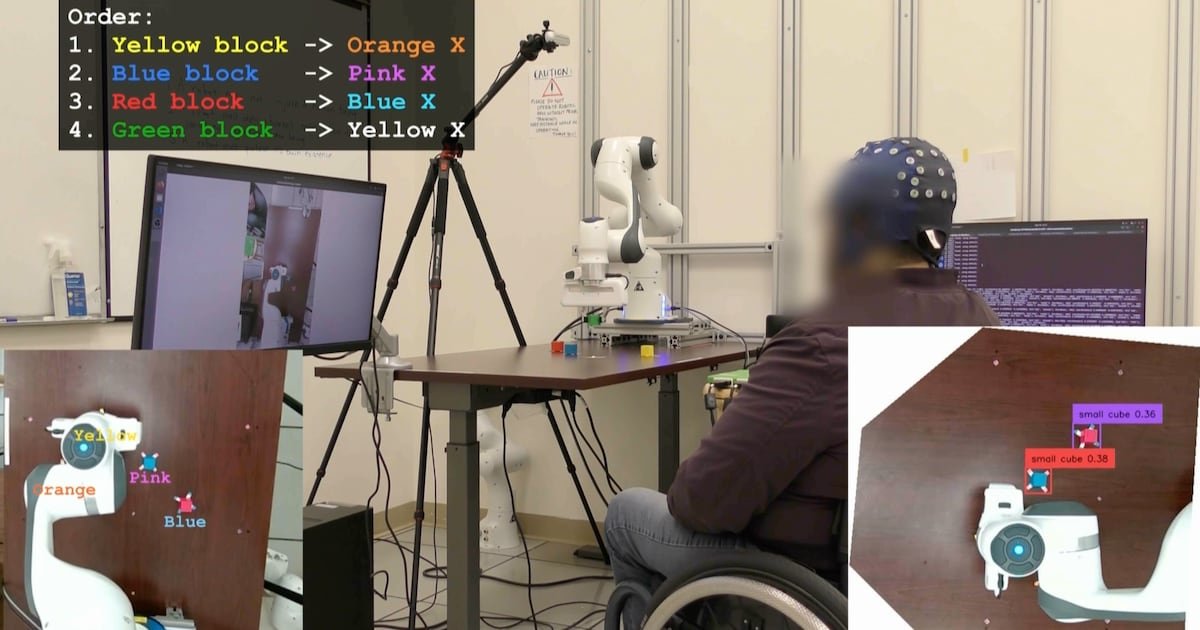

UCLA Researchers Enable Paralyzed Patients to Control Robots with Thoughts Using AI – CHOSUNBIZ – Chosun Biz

AI Research

Hackers exploit hidden prompts in AI images, researchers warn

Cybersecurity firm Trail of Bits has revealed a technique that embeds malicious prompts into images processed by large language models (LLMs). The method exploits how AI platforms compress and downscale images for efficiency. While the original files appear harmless, the resizing process introduces visual artifacts that expose concealed instructions, which the model interprets as legitimate user input.

In tests, the researchers demonstrated that such manipulated images could direct AI systems to perform unauthorized actions. One example showed Google Calendar data being siphoned to an external email address without the user’s knowledge. Platforms affected in the trials included Google’s Gemini CLI, Vertex AI Studio, Google Assistant on Android, and Gemini’s web interface.


The approach builds on earlier academic work from TU Braunschweig in Germany, which identified image scaling as a potential attack surface in machine learning. Trail of Bits expanded on this research, creating “Anamorpher,” an open-source tool that generates malicious images using interpolation techniques such as nearest neighbor, bilinear, and bicubic resampling.
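The core idea can be illustrated with a toy example. The sketch below is not Anamorpher and not the researchers' method. It assumes a simple nearest-neighbor downscaler that samples the center pixel of each block (real libraries use varying conventions, and bilinear or bicubic resampling needs more careful crafting). The helper names `downscale_nearest` and `embed_payload` are hypothetical. It shows how changing only the pixels a downscaler will sample lets a small hidden pattern survive resizing while most of the full-size image is untouched.

```python
def downscale_nearest(img, factor):
    """Nearest-neighbor downscale by an integer factor.

    Assumption: each output pixel copies the center pixel of its
    factor-by-factor block. Real libraries may sample differently.
    """
    h, w = len(img), len(img[0])
    return [
        [img[int((y + 0.5) * factor)][int((x + 0.5) * factor)]
         for x in range(w // factor)]
        for y in range(h // factor)
    ]


def embed_payload(cover, payload, factor):
    """Overwrite only the pixels that downscale_nearest will sample."""
    out = [row[:] for row in cover]
    for y, row in enumerate(payload):
        for x, value in enumerate(row):
            out[int((y + 0.5) * factor)][int((x + 0.5) * factor)] = value
    return out


FACTOR = 4
cover = [[255] * 16 for _ in range(16)]  # plain white 16x16 decoy image
payload = [                              # 4x4 hidden pattern (0 = dark)
    [0, 255, 255, 0],
    [255, 0, 0, 255],
    [255, 0, 0, 255],
    [0, 255, 255, 0],
]

crafted = embed_payload(cover, payload, FACTOR)
changed = sum(
    c != o
    for crafted_row, cover_row in zip(crafted, cover)
    for c, o in zip(crafted_row, cover_row)
)
recovered = downscale_nearest(crafted, FACTOR)

print(f"{changed} of {16 * 16} pixels changed in the full-size image")
print("downscaled image equals hidden pattern:", recovered == payload)
```

In this toy case only a handful of the 256 pixels differ from the decoy, yet the downscaled image consists entirely of the hidden pattern. A real attack would hide readable text rather than a checker pattern and would have to match the target platform's actual resampling algorithm.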

From the user’s perspective, nothing unusual occurs when such an image is uploaded. Yet behind the scenes, the AI system executes hidden commands alongside normal prompts, raising serious concerns about data security and identity theft. Because multimodal models often integrate with calendars, messaging, and workflow tools, the risks extend into sensitive personal and professional domains.


Traditional defenses such as firewalls cannot easily detect this type of manipulation. The researchers recommend a combination of layered security, previewing downscaled images, restricting input dimensions, and requiring explicit confirmation for sensitive operations.

“The strongest defense is to implement secure design patterns and systematic safeguards that limit prompt injection, including multimodal attacks,” the Trail of Bits team concluded.
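Two of the recommended safeguards, restricting input dimensions and requiring explicit confirmation for sensitive operations, can be sketched in a few lines. This is a minimal illustration only. The size limits, the tool names in `SENSITIVE_TOOLS`, and both function names are hypothetical, and the reading that an image within the limits needs no downscaling step is an assumption rather than something the report states.

```python
# Assumed limits: images within these bounds are passed through unscaled.
MAX_WIDTH, MAX_HEIGHT = 1024, 1024

# Hypothetical names for tools that touch private data or send it elsewhere.
SENSITIVE_TOOLS = {"send_email", "read_calendar"}


def check_image_dimensions(width, height):
    """Reject images that would have to be downscaled before the model sees them."""
    return 0 < width <= MAX_WIDTH and 0 < height <= MAX_HEIGHT


def authorize_tool_call(tool_name, user_confirmed):
    """Allow ordinary tools; allow sensitive tools only after explicit user confirmation."""
    if tool_name in SENSITIVE_TOOLS:
        return user_confirmed
    return True


print(check_image_dimensions(800, 600))            # within the assumed limits
print(check_image_dimensions(4000, 3000))          # too large, would need resizing
print(authorize_tool_call("send_email", False))    # blocked without confirmation
print(authorize_tool_call("send_email", True))     # allowed once the user confirms
```

The confirmation gate matters most here: even if a hidden instruction reaches the model, an action such as emailing calendar data would still need a visible approval from the user.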

AI Research

When AI Freezes Over | Psychology Today

A phrase I’ve often clung to regarding artificial intelligence is one that is also cloaked in a bit of techno-mystery. And I bet you’ve heard it as part of the lexicon of technology and imagination: “emergent abilities.” It’s common to hear that large language models (LLMs) have these curious “emergent” behaviors that are often coupled with linguistic partners like scaling and complexity. And yes, I’m guilty too.

In AI research, this phrase first took off after a 2022 paper that described how abilities seem to appear suddenly as models scale: tasks that a small model fails at completely, a larger model suddenly handles with ease. One day a model can’t solve math problems, the next day it can. It’s an irresistible story, as if machines have their own little Archimedean “eureka!” moments. It’s almost as if “intelligence” has suddenly switched on.

But I’m not buying into the sensation, at least not yet. A newer 2025 study suggests we should be more careful. Instead of magical leaps, what we’re seeing looks a lot more like the physics of phase changes.

Ice, Water, and Math

Think about water. At one temperature it’s liquid, at another it’s ice. The molecules don’t become something new—they’re always two hydrogens and an oxygen—but the way they organize shifts dramatically. At the freezing point, hydrogen bonds “loosely set” into a lattice, driven by those fleeting electrical charges on the hydrogen atoms. The result is ice, the same ingredients reorganized into a solid that’s curiously less dense than liquid water. And, yes, there’s even a touch of magic in the science as ice floats. But that magic melts when you learn about Van der Waals forces.

The same kind of shift shows up in LLMs and is often mislabeled as “emergence.” In small models, the easiest strategy is positional, where computation leans on word order and simple statistical shortcuts. It’s an easy trick that works just enough to reduce error. But scale things up by using more parameters and data, and the system reorganizes. The 2025 study by Cui shows that, at a critical threshold, the model shifts into semantic mode and relies on the geometry of meaning in its high-dimensional vector space. It isn’t magic, it’s optimization. Just as water molecules align into a lattice, the model settles into a more stable solution in its mathematical landscape.

The Mirage of “Emergence”

That 2022 paper called these shifts emergent abilities. And yes, tasks like arithmetic or multi-step reasoning can look as though they “switch on.” But the model hasn’t suddenly “understood” arithmetic. What’s happening is that semantic generalization finally outperforms positional shortcuts once scale crosses a threshold. Yes, it’s a mouthful. In plainer terms, the computation shifts from relying on simple word position in a prompt (like “the cat in the _____”) to relying on a complex, high-dimensional space in which semantic associations across thousands of dimensions give the computation far more power.

And those sudden jumps? They’re often illusions. On simple pass/fail tests, a model can look stuck at zero until it finally tips over the line and then it seems to leap forward. In reality, it was improving step by step all along. The so-called “light-bulb moment” is really just a quirk of how we measure progress. No emergence, just math.
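A little arithmetic makes the measurement quirk concrete. The sketch below rests on a simplifying assumption that is not from either paper: a task counts as passed only if all of its steps are correct, and each step succeeds independently with the same probability. The function name `exact_match_rate` and the choice of 10 steps are illustrative.

```python
def exact_match_rate(per_step_accuracy, steps):
    """Probability that every one of `steps` independent steps is correct."""
    return per_step_accuracy ** steps


STEPS = 10  # assumed number of steps a single answer requires

for acc in (0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.99):
    rate = exact_match_rate(acc, STEPS)
    print(f"per-step accuracy {acc:.2f} -> pass rate {rate:.3f}")
```

Under these assumptions, per-step accuracy climbs steadily from 0.5 to 0.99, while the pass rate stays under 1 percent at first and ends above 90 percent. On a pass/fail chart the steady climb looks like a sudden leap.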

Why “Emergence” Is So Seductive

Why does the language of “emergence” stick? Because it borrows from biology and philosophy. Life “emerges” from chemistry, and consciousness “emerges” from neurons. It makes LLMs sound like they’re undergoing cognitive leaps. Some argue emergence is a hallmark of complex systems, and there’s truth to that. So, to a degree, it does capture the idea of surprising shifts.

But we need to be careful. What’s happening here is still math, not mind. Calling it emergence risks sliding into anthropomorphism, where sudden performance shifts are mistaken for genuine understanding. And it happens all the time.

A Useful Imitation

The 2022 paper gave us the language of “emergence.” The 2025 paper shows that what looks like emergence is really closer to a high-complexity phase change. It’s the same math and the same machinery. At small scales, positional tricks (word sequence) dominate. At large scales, semantic structures (multidimensional linguistic analysis) win out.

No insight, no spark of consciousness. It’s just a system reorganizing under new constraints. And this supports my larger thesis: What we’re witnessing isn’t intelligence at all, but anti-intelligence, a powerful, useful imitation that mimics the surface of cognition without the interior substance that only a human mind offers.


So the next time you hear about an LLM with “emergent ability,” don’t imagine Archimedes leaping from his bath. Picture water freezing. The same molecules, new structure. The same math, new mode. What looks like insight is just another phase of anti-intelligence that is complex, fascinating, even beautiful in its way, but not to be mistaken for a mind.
