Jobs & Careers

Meta Invests $3.5 Bn in Ray-Ban Maker EssilorLuxottica SA

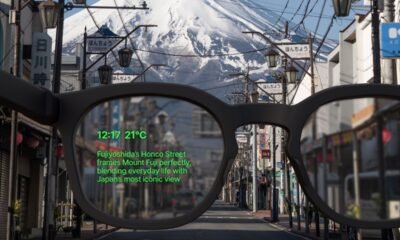

Meta has acquired a minority stake in EssilorLuxottica SA, the world’s leading eyewear manufacturer and the maker of Ray-Ban, expanding the tech giant’s financial involvement in the smart glasses sector, Bloomberg reported.

Meta acquired slightly less than 3% of Ray-Ban producer EssilorLuxottica, a stake currently valued at about $3.5 billion based on market prices.

According to the report, the company is also contemplating additional investments that might boost its ownership to roughly 5% in the future, although these plans could still be subject to change.

The financial commitment from Meta, which is based in Menlo Park, California, strengthens the alliance between the two firms, which have collaborated in recent years to create AI-driven smart glasses. Currently, Meta offers a pair of Ray-Ban glasses that launched in 2021, featuring integrated cameras and an AI assistant.

The glasses come with built-in Meta AI, enabling users to interact through voice commands. Users can communicate with phrases like “Hey Meta” to ask questions, stream music or podcasts, make phone calls, and broadcast live on Instagram or Facebook.

Meta states that the product is intended to help users “stay present and connected to who and what they care about most.”

Last month, the company unveiled Oakley-branded glasses in collaboration with EssilorLuxottica. Francesco Milleri, CEO of EssilorLuxottica, mentioned last year that Meta showed interest in acquiring a stake in the firm, but this plan did not materialise until now.

For EssilorLuxottica, this agreement enhances its involvement in the tech sector, which could be advantageous if Meta’s forward-looking initiatives succeed. Meta is also wagering on the possibility that people will eventually engage in work and leisure activities while using headsets or glasses, Bloomberg reported.

Hexaware, Replit Partner to Bring Secure Vibe Coding to Enterprises

Hexaware Technologies has partnered with Replit to accelerate enterprise software development and make it more accessible through secure Vibe Coding. The collaboration combines Hexaware’s digital innovation expertise with Replit’s natural language-powered development platform, allowing both business users and engineers to create secure production-ready applications.

The partnership aims to help companies accelerate digital transformation by enabling teams beyond IT, such as product, design, sales and operations, to develop internal tools and prototypes without relying on traditional coding skills.

Amjad Masad, CEO of Replit, said, “Our mission is to empower entrepreneurial individuals to transform ideas into software—regardless of their coding experience or whether they’re launching a startup or innovating within an enterprise.”

Hexaware said the tie-up will facilitate faster innovation while maintaining security and governance.

Sanjay Salunkhe, president and global head of digital and software services at Hexaware Technologies, noted, “By combining our vibe coding framework with Replit’s natural language interface, we’re giving enterprises the tools to accelerate development cycles while upholding the rigorous standards their stakeholders demand.”

The partnership will enable enterprises to democratise software development by allowing employees across departments to build and deploy secure applications using natural language.

It will provide secure environments with features such as SSO, SOC 2 compliance and role-based access controls, further strengthened by Hexaware’s governance frameworks to meet enterprise IT standards.

Teams will benefit from faster prototyping, with product and design groups able to test and iterate ideas quickly, reducing time-to-market. Sales, marketing and operations functions can also develop custom internal tools tailored to their workflows, avoiding reliance on generic SaaS platforms or long IT queues.

In addition, Replit’s agentic software architecture, combined with Hexaware’s AI expertise, will drive automation of complex backend tasks, enabling users to focus on higher-level logic and business outcomes.

The post Hexaware, Replit Partner to Bring Secure Vibe Coding to Enterprises appeared first on Analytics India Magazine.

NVIDIA Reveals Two Customers Accounted for 39% of Quarterly Revenue

NVIDIA disclosed on August 28, 2025, that two unnamed customers contributed 39% of its revenue in the July quarter, raising questions about the chipmaker’s dependence on a small group of clients.

The company posted record quarterly revenue of $46.7 billion, up 56% from a year ago, driven by insatiable demand for its data centre products.

In a filing with the U.S. Securities and Exchange Commission (SEC), NVIDIA said “Customer A” accounted for 23% of total revenue and “Customer B” for 16%. A year earlier, its top two customers made up 14% and 11% of revenue.
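As a rough check on these figures, the short sketch below converts the reported shares into approximate dollar amounts and compares the combined share with the prior year. It uses only the numbers quoted above; the variable and function names are illustrative, and because the percentages are rounded the dollar values are estimates rather than figures from the filing.

```python
# Figures quoted in the article. Percentages are rounded in the source,
# so the dollar amounts computed here are approximations only.
QUARTERLY_REVENUE_BN = 46.7                      # record quarterly revenue, in $ billion
CUSTOMER_SHARES = {"Customer A": 0.23, "Customer B": 0.16}
PRIOR_YEAR_SHARES = (0.14, 0.11)                 # top two customers a year earlier


def implied_revenue_bn(total_bn: float, share: float) -> float:
    """Approximate revenue (in $ billion) implied by a share of total revenue."""
    return total_bn * share


def summarize():
    """Return per-customer estimates, the combined share, and the prior-year combined share."""
    per_customer = {
        name: implied_revenue_bn(QUARTERLY_REVENUE_BN, share)
        for name, share in CUSTOMER_SHARES.items()
    }
    combined_share = sum(CUSTOMER_SHARES.values())
    prior_combined_share = sum(PRIOR_YEAR_SHARES)
    return per_customer, combined_share, prior_combined_share


if __name__ == "__main__":
    per_customer, combined, prior = summarize()
    for name, amount in per_customer.items():
        print(f"{name}: about ${amount:.1f} billion")
    print(f"Combined share: {combined:.0%} (prior year: {prior:.0%})")
```

On these inputs, the two customers together would account for roughly $18 billion of the quarter's revenue, against a combined 25% share for the top two customers a year earlier.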

The concentration highlights the role of large buyers, many of whom are cloud service providers. “Large cloud service providers made up about 50% of the company’s data center revenue,” NVIDIA chief financial officer Colette Kress said on Wednesday. Data center sales represented 88% of NVIDIA’s overall revenue in the second quarter.

“We have experienced periods where we receive a significant amount of our revenue from a limited number of customers, and this trend may continue,” the company wrote in the filing.

One of the customers could be Saudi Arabia’s AI firm Humain, which is building two data centers, in Riyadh and Dammam, slated to open in early 2026. The company has secured approval to import 18,000 NVIDIA AI chips.

The second customer could be OpenAI or one of the major cloud providers — Microsoft, AWS, Google Cloud, or Oracle. Another possibility is xAI.

Previously, Elon Musk said xAI has 230,000 GPUs, including 30,000 GB200s, operational for training its Grok model in a supercluster called Colossus 1. Inference is handled by external cloud providers.

Musk added that Colossus 2, which will host an additional 550,000 GB200 and GB300 GPUs, will begin going online in the coming weeks. “As Jensen Huang has stated, xAI is unmatched in speed. It’s not even close,” Musk wrote in a post on X.

Meanwhile, OpenAI is preparing for a major expansion. Chief Financial Officer Sarah Friar said the company plans to invest in trillion-dollar-scale data centers to meet surging demand for AI computation.

The post NVIDIA Reveals Two Customers Accounted for 39% of Quarterly Revenue appeared first on Analytics India Magazine.

‘Reliance Intelligence’ is Here, In Partnership with Google and Meta

Reliance Industries chairman Mukesh Ambani has announced the launch of Reliance Intelligence, a new wholly owned subsidiary focused on artificial intelligence, marking what he described as the company’s “next transformation into a deep-tech enterprise.”

Addressing shareholders, Ambani said Reliance Intelligence had been conceived with four core missions—building gigawatt-scale AI-ready data centres powered by green energy, forging global partnerships to strengthen India’s AI ecosystem, delivering AI services for consumers and SMEs in critical sectors such as education, healthcare, and agriculture, and creating a home for world-class AI talent.

Work has already begun on gigawatt-scale AI data centres in Jamnagar, Ambani said, adding that they would be rolled out in phases in line with India’s growing needs.

These facilities, powered by Reliance’s new energy ecosystem, will be purpose-built for AI training and inference at a national scale.

Ambani also announced a “deeper, holistic partnership” with Google, aimed at accelerating AI adoption across Reliance businesses.

“We are marrying Reliance’s proven capability to build world-class assets and execute at India scale with Google’s leading cloud and AI technologies,” Ambani said.

Google CEO Sundar Pichai, in a recorded message, said the two companies would set up a new cloud region in Jamnagar dedicated to Reliance.

“It will bring world-class AI and compute from Google Cloud, powered by clean energy from Reliance and connected by Jio’s advanced network,” Pichai said.

He added that Google Cloud would remain Reliance’s largest public cloud partner, supporting mission-critical workloads and co-developing advanced AI initiatives.

Ambani further unveiled a new AI-focused joint venture with Meta.

He said the venture would combine Reliance’s domain expertise across industries with Meta’s open-source AI models and tools to deliver “sovereign, enterprise-ready AI for India.”

Meta founder and CEO Mark Zuckerberg, in his remarks, said the partnership aims to bring open-source AI to Indian businesses at scale.

“With Reliance’s reach and scale, we can bring this to every corner of India. This venture will become a model for how AI, and one day superintelligence, can be delivered,” Zuckerberg said.

Ambani also highlighted Reliance’s investments in AI-powered robotics, particularly humanoid robotics, which he said could transform manufacturing, supply chains and healthcare.

“Intelligent automation will create new industries, new jobs and new opportunities for India’s youth,” he told shareholders.

Calling AI an opportunity “as large, if not larger” than Reliance’s digital services push a decade ago, Ambani said Reliance Intelligence would work to deliver “AI everywhere and for every Indian.”

“We are building for the next decade with confidence and ambition,” he said, underscoring that the company’s partnerships, green infrastructure and India-first governance approach would be central to this strategy.

The post ‘Reliance Intelligence’ is Here, In Partnership with Google and Meta appeared first on Analytics India Magazine.
