Books, Courses & Certifications

Meet Boti: The AI assistant transforming how the citizens of Buenos Aires access government information with Amazon Bedrock

This post is co-written with Julieta Rappan, Macarena Blasi, and María Candela Blanco from the Government of the City of Buenos Aires.

The Government of the City of Buenos Aires continuously works to improve citizen services. In February 2019, it introduced an AI assistant named Boti available through WhatsApp, the most widely used messaging service in Argentina. With Boti, citizens can conveniently and quickly access a wide variety of information about the city, such as renewing a driver’s license, accessing healthcare services, and learning about cultural events. This AI assistant has become a preferred communication channel and facilitates more than 3 million conversations each month.

As Boti grows in popularity, the Government of the City of Buenos Aires seeks to provide new conversational experiences that harness the latest developments in generative AI. One challenge that citizens often face is navigating the city’s complex bureaucratic landscape. The City Government’s website includes over 1,300 government procedures, each of which has its own logic, nuances, and exceptions. The City Government recognized that Boti could improve access to this information by directly answering citizens’ questions and connecting them to the right procedure.

To pilot this new solution, the Government of the City of Buenos Aires partnered with the AWS Generative AI Innovation Center (GenAIIC). The teams worked together to develop an agentic AI assistant using LangGraph and Amazon Bedrock. The solution includes two main components: an input guardrail system and a government procedures agent. The input guardrail uses a custom LLM classifier to analyze incoming user queries, determining whether to approve or block requests based on their content. Approved requests are handled by the government procedures agent, which retrieves relevant procedural information and generates responses. Since most user queries focus on a single procedure, we developed a novel reasoning retrieval system to improve retrieval accuracy. This system initially retrieves comparative summaries that disambiguate similar procedures and then applies a large language model (LLM) to select the most relevant results. The agent uses this information to craft responses in Boti’s characteristic style, delivering short, helpful, and expressive messages in Argentina’s Rioplatense Spanish dialect. We focused on distinctive linguistic features of this dialect including the voseo (using “vos” instead of “tú”) and periphrastic future (using “ir a” before verbs).

In this post, we dive into the implementation of the agentic AI system. We begin with an overview of the solution, explaining its design and main features. Then, we discuss the guardrail and agent subcomponents and assess their performance. Our evaluation shows that the guardrails effectively block harmful content, including offensive language, harmful opinions, prompt injection attempts, and unethical behaviors. The agent achieves up to 98.9% top-1 retrieval accuracy using the reasoning retriever, which marks a 12.5–17.5% improvement over standard retrieval-augmented generation (RAG) methods. Subject matter experts found that Boti’s responses were 98% accurate in voseo usage and 92% accurate in periphrastic future usage. The promising results of this solution establish a new era of citizen-government interaction.

Solution overview

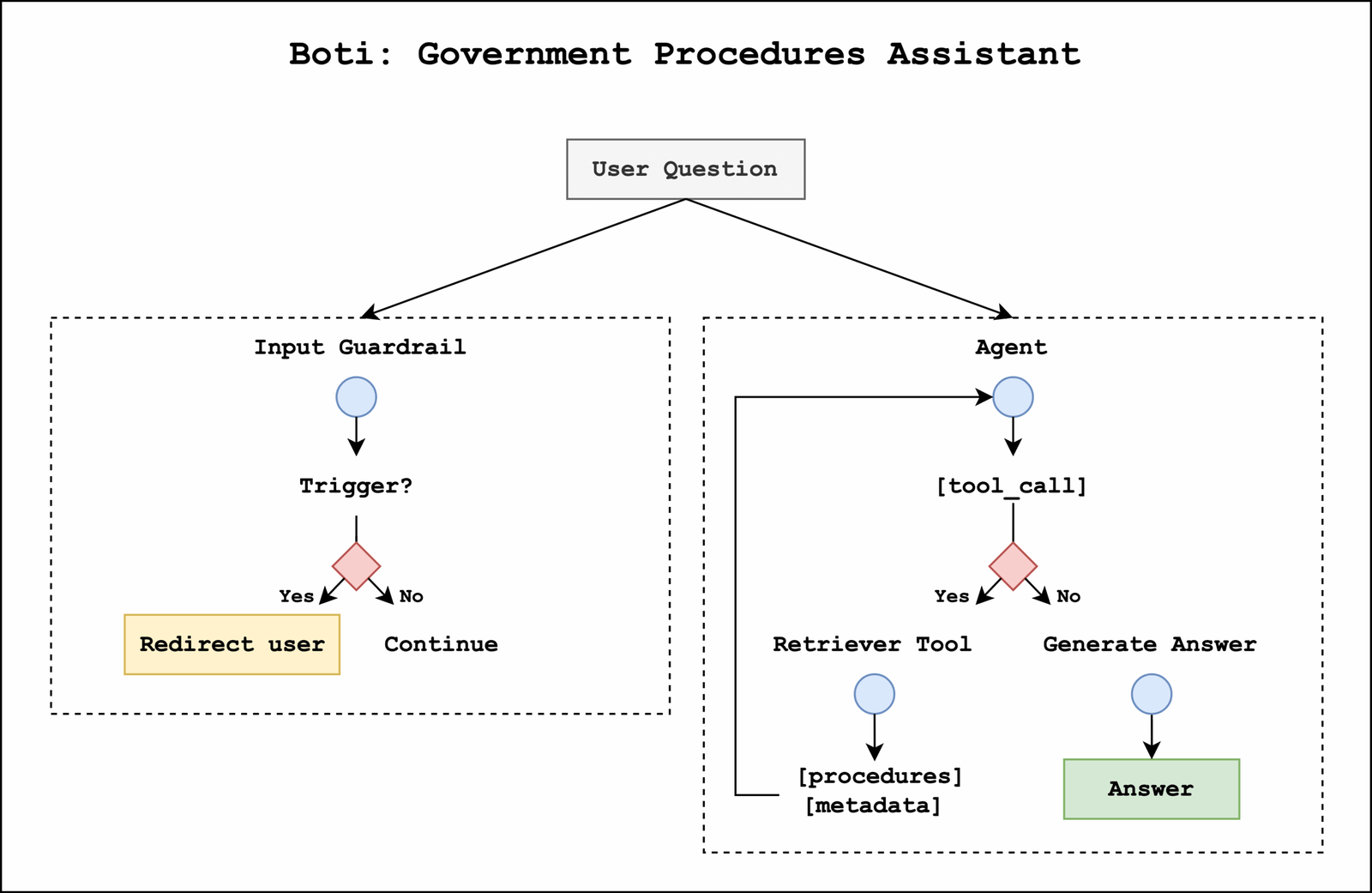

The Government of the City of Buenos Aires and the GenAIIC built an agentic AI assistant using Amazon Bedrock and LangGraph that includes an input guardrail system to enable safe interactions and a government procedures agent to respond to user questions. The workflow is shown in the following diagram.

The process begins when a user submits a question. In parallel, the question is passed to the input guardrail system and government procedures agent. The input guardrail system determines whether the question contains harmful content. If triggered, it stops graph execution and redirects the user to ask questions about government procedures. Otherwise, the agent continues to formulate its response. The agent either calls a retrieval tool, which allows it to obtain relevant context and metadata from government procedures stored in Amazon Bedrock Knowledge Bases, or responds to the user. Both the input guardrail and government procedures agent use the Amazon Bedrock Converse API for LLM inference. This API provides access to a wide selection of LLMs, helping us optimize performance and latency across different subtasks.
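The parallel flow described above can be sketched in plain Python with `asyncio`. This is a minimal illustration rather than the LangGraph graph the teams built: `check_guardrail`, `run_agent`, the redirect message, and the keyword rule inside the guardrail are hypothetical stand-ins for the actual LLM calls.

```python
import asyncio

# Hypothetical redirect text; the real wording is not given in the post.
REDIRECT_MESSAGE = "I can only help with questions about government procedures."


async def check_guardrail(question: str) -> bool:
    """Hypothetical stand-in for the LLM classifier. Returns True when blocked."""
    await asyncio.sleep(0)  # simulates an inference call
    return "ignore previous instructions" in question.lower()


async def run_agent(question: str) -> str:
    """Hypothetical stand-in for the government procedures agent."""
    await asyncio.sleep(0.01)  # simulates retrieval plus generation
    return f"Answer about: {question}"


async def handle(question: str) -> str:
    # Start both branches at once so the guardrail adds little extra latency.
    guardrail_task = asyncio.create_task(check_guardrail(question))
    agent_task = asyncio.create_task(run_agent(question))

    if await guardrail_task:
        # Blocked: stop the agent branch and redirect the user.
        agent_task.cancel()
        try:
            await agent_task
        except asyncio.CancelledError:
            pass
        return REDIRECT_MESSAGE
    return await agent_task
```

Running the two branches concurrently means an approved question pays only for the slower branch, while a blocked question never surfaces the agent's output.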

Input guardrail system

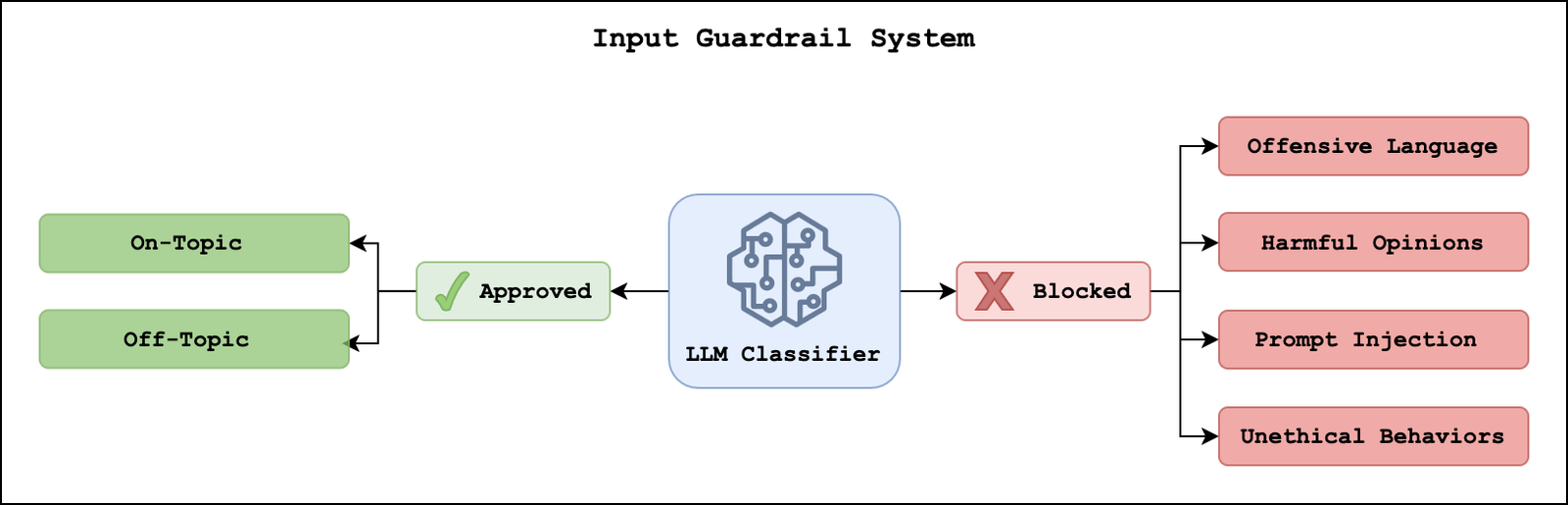

Input guardrails help prevent the LLM system from processing harmful content. Although Amazon Bedrock Guardrails offers one implementation approach with filters for specific words, content, or sensitive information, we developed a custom solution. This provided us greater flexibility to optimize performance for Rioplatense Spanish and monitor specific types of content. The following diagram illustrates our approach, in which an LLM classifier assigns a primary category (“approved” or “blocked”) as well as a more detailed subcategory.

Approved queries are within the scope of the government procedures agent. They consist of on-topic requests, which focus on government procedures, and off-topic requests, which are low-risk conversation questions that the agent responds to directly. Blocked queries contain high-risk content that Boti should avoid, including offensive language, harmful opinions, prompt injection attacks, or unethical behaviors.
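As a rough sketch of how such a classifier might be called, the function below sends a query through the Amazon Bedrock Converse API and parses a category and subcategory from the reply. The system prompt, the JSON output format, the subcategory labels, and the fail-closed policy are all assumptions made for illustration; the post does not describe the actual prompt or parsing logic. The `client` argument is expected to be a boto3 `bedrock-runtime` client.

```python
import json
from dataclasses import dataclass

# Assumed prompt and output schema, not the one used in production.
SYSTEM_PROMPT = (
    "Classify the user query. Reply with JSON only: "
    '{"category": "approved" or "blocked", "subcategory": "<label>"}'
)


@dataclass
class GuardrailDecision:
    category: str
    subcategory: str

    @property
    def blocked(self) -> bool:
        return self.category == "blocked"


def classify(client, model_id: str, query: str) -> GuardrailDecision:
    """Classify a query using the Converse API via the supplied client."""
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{"role": "user", "content": [{"text": query}]}],
        inferenceConfig={"temperature": 0.0, "maxTokens": 100},
    )
    text = response["output"]["message"]["content"][0]["text"]
    try:
        parsed = json.loads(text)
        category = parsed["category"]
        subcategory = parsed["subcategory"]
    except (json.JSONDecodeError, KeyError, TypeError):
        # Assumption: fail closed when the model output cannot be parsed.
        return GuardrailDecision("blocked", "unparseable_output")
    if category not in ("approved", "blocked"):
        return GuardrailDecision("blocked", "unknown_category")
    return GuardrailDecision(category, str(subcategory))
```

Passing the client in as an argument keeps the function easy to test with a fake client and makes it simple to swap models per subtask.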

We evaluated the input guardrail system on a dataset consisting of both normal and harmful user queries. The system successfully blocked 100% of harmful queries, while occasionally flagging normal queries as harmful. This performance balance makes sure that Boti can provide helpful information while maintaining safe and appropriate interactions for users.

Agent system

The government procedures agent is responsible for answering user questions. It determines when to retrieve relevant procedural information using its retrieval tool and generates responses in Boti’s characteristic style. In the following sections, we examine both processes.

Reasoning retriever

The agent can use a retrieval tool to provide accurate and up-to-date information about government procedures. Retrieval tools typically employ a RAG framework to perform semantic similarity searches between user queries and a knowledge base containing document chunks stored as embeddings, and then provide the most relevant samples as context to the LLM. Government procedures, however, present challenges to this standard approach. Related procedures, such as renewing and reprinting drivers’ licenses, can be difficult to disambiguate. Additionally, each user question typically requires information from one specific procedure. The mixture of chunks returned from standard RAG approaches increases the likelihood of generating incorrect responses.

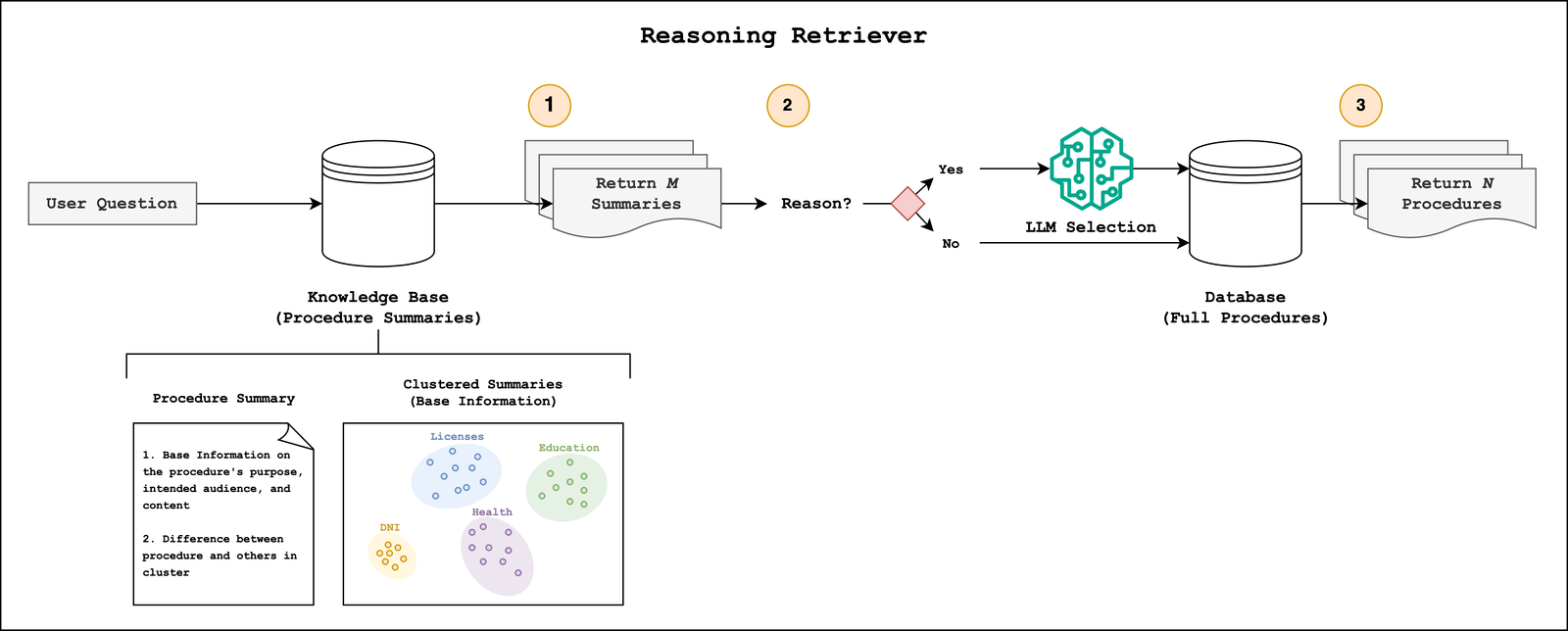

To better disambiguate government procedures, the Buenos Aires and GenAIIC teams developed a reasoning retrieval method that uses comparative summaries and LLM selection. An overview of this approach is shown in the following diagram.

A necessary preprocessing step before retrieval is the creation of a government procedures knowledge base. To capture both the key information contained in procedures and how they relate to each other, we created comparative summaries. Each summary contains basic information, such as the procedure’s purpose and intended audience, and content, such as costs, steps, and requirements. We clustered the base summaries into small groups, with an average cluster size of 5, and used an LLM to generate descriptions of what made each procedure different from its neighbors. We appended the distinguishing descriptions to the base information to create the final summary. We note that this approach shares similarities with Anthropic’s Contextual Retrieval, which prepends explanatory context to document chunks.
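The summary-building step can be illustrated with a short sketch. It assumes the clusters have already been computed (the post does not say which clustering method was used) and takes the LLM call as a hypothetical `describe_difference` callable; the "How it differs" label is also invented for the example.

```python
from typing import Callable, Dict, List


def build_comparative_summaries(
    base_summaries: Dict[str, str],
    clusters: List[List[str]],
    describe_difference: Callable[[str, List[str]], str],
) -> Dict[str, str]:
    """Append a distinguishing description to each procedure's base summary.

    `describe_difference` is a hypothetical stand-in for the LLM call that
    explains how one procedure differs from its cluster neighbors.
    """
    final: Dict[str, str] = {}
    for cluster in clusters:
        for proc_id in cluster:
            neighbors = [base_summaries[p] for p in cluster if p != proc_id]
            distinction = describe_difference(base_summaries[proc_id], neighbors)
            final[proc_id] = (
                f"{base_summaries[proc_id]}\n\nHow it differs: {distinction}"
            )
    return final
```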

With the knowledge base in place, we are able to retrieve relevant government procedures based on the user query. The reasoning retriever completes three steps:

- Retrieve M Summaries: We retrieve between 1 and M comparative summaries using semantic search.

- Optional Reasoning: In some cases, the initial retrieval surfaces similar procedures. To make sure that the most relevant procedures are returned to the agent, we apply an optional LLM reasoning step. This step runs only when the ratio of the first to the second retrieval score falls below a threshold value, which indicates that the top candidates are too close to separate by score alone. An LLM follows a chain-of-thought (CoT) process in which it compares the user query to the retrieved summaries. It discards irrelevant procedures and reorders the remaining ones based on relevance. If the user query is specific enough, this process typically returns one result. By applying this reasoning step selectively, we minimize latency and token usage while maintaining high retrieval accuracy.

- Retrieve N Full-Text Procedures: After the most relevant procedures are identified, we fetch their complete documents and metadata from an Amazon DynamoDB table. The metadata contains information like the source URL and the sentiment of the procedure. The agent typically receives between 1 and N results, where N ≤ M.
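The three steps above can be sketched as a single function. This is an illustrative outline under several assumptions: `search` and `llm_select` are hypothetical callables standing in for the semantic search and the LLM reasoning step, a plain dictionary replaces the Amazon DynamoDB table, and the values of `n` and `ratio_threshold` are made up (only M = 10 is mentioned later in the post, for the evaluation).

```python
from typing import Callable, Dict, List, Tuple


def reasoning_retrieve(
    query: str,
    search: Callable[[str, int], List[Tuple[str, float]]],
    llm_select: Callable[[str, List[str]], List[str]],
    full_text_store: Dict[str, dict],
    m: int = 10,
    n: int = 3,
    ratio_threshold: float = 1.2,
) -> List[dict]:
    # Step 1: retrieve up to M summaries as (procedure_id, score), best first.
    hits = search(query, m)
    if not hits:
        return []
    ids = [proc_id for proc_id, _ in hits]

    # Step 2: apply LLM reasoning only when the top two scores are close,
    # that is, when the ratio of first to second score is below the threshold.
    if len(hits) > 1 and hits[1][1] > 0:
        ratio = hits[0][1] / hits[1][1]
        if ratio < ratio_threshold:
            ids = llm_select(query, ids)

    # Step 3: fetch full documents and metadata for at most N procedures.
    return [full_text_store[i] for i in ids[:n] if i in full_text_store]
```

When the top score clearly dominates, the sketch skips the LLM call entirely, which is where the latency and token savings come from.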

The agent receives the retrieved full text procedures in its context window. It follows its own CoT process to determine the relevant content and URL source attributions when generating its answer.

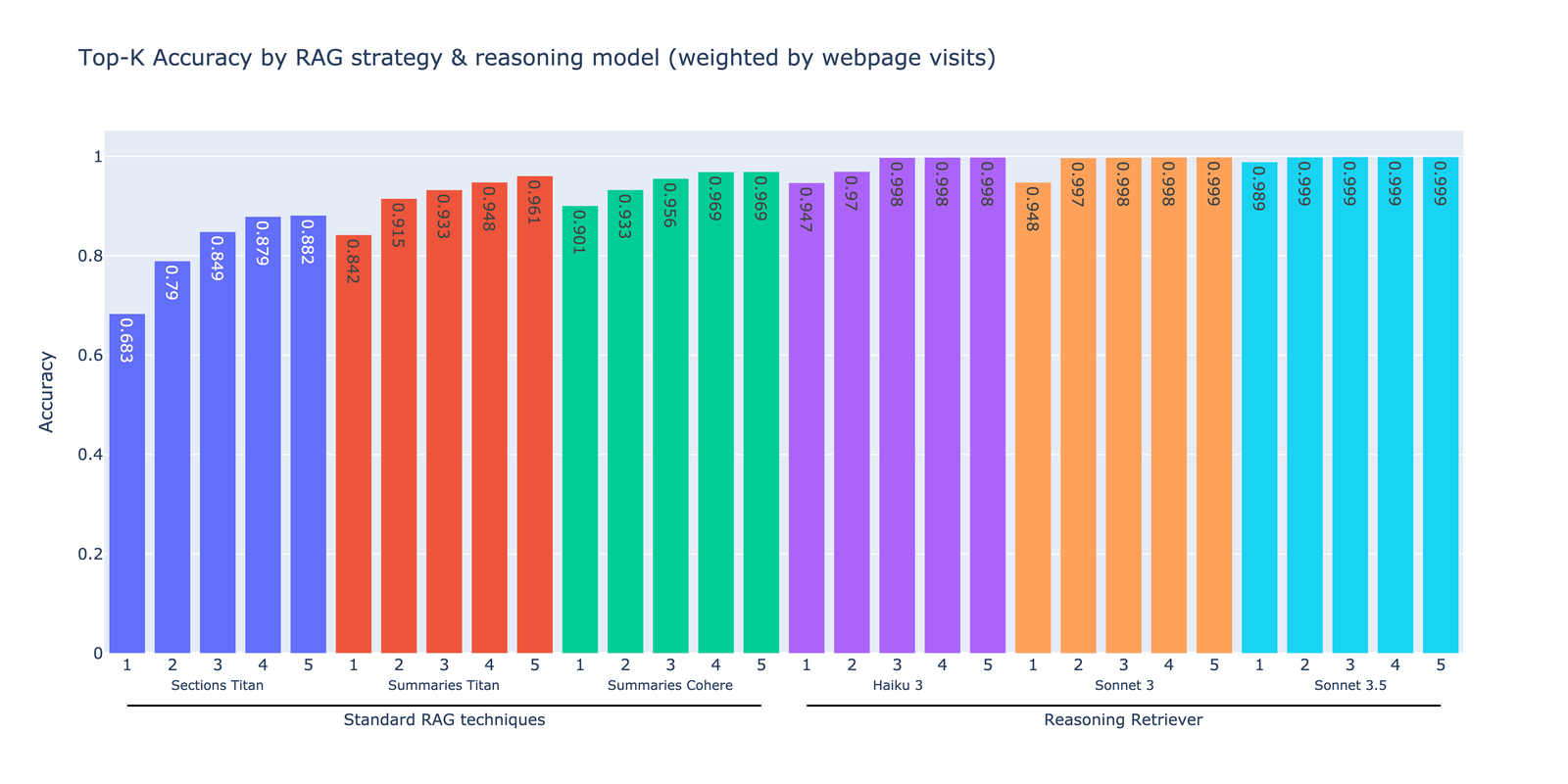

We evaluated our reasoning retriever against standard RAG techniques using a synthetic dataset of 1,908 questions derived from known source procedures. The performance was measured by determining whether the correct procedure appeared in the top-k retrieved results for each question. The following plot compares the top-k retrieval accuracy for each approach across different models, arranged in order of ascending performance from left to right. The metrics are proportionally weighted based on each procedure’s webpage visit frequency, making sure that our evaluation reflects real-world usage patterns.
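One plausible way to compute a visit-weighted top-k accuracy is sketched below. The exact weighting scheme is not specified in the post, so this assumes each question is weighted by the visit count of its source procedure; the record layout (`expected`, `retrieved`) is likewise hypothetical.

```python
from typing import Dict, Sequence


def weighted_top_k_accuracy(
    results: Sequence[dict], visits: Dict[str, float], k: int
) -> float:
    """Top-k accuracy where each question counts in proportion to page visits.

    Each result looks like {"expected": id, "retrieved": [ids, best first]}.
    """
    total = 0.0
    correct = 0.0
    for r in results:
        weight = visits.get(r["expected"], 0.0)
        total += weight
        if r["expected"] in r["retrieved"][:k]:
            correct += weight
    return correct / total if total else 0.0
```

With this weighting, a miss on a heavily visited procedure lowers the score more than a miss on a rarely visited one.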

The first three approaches represent standard vector-based retrieval methods. The first method, Section Titan, involved chunking procedures by document sections, targeting approximately 250 words per chunk, and then embedding the chunks using Amazon Titan Text Embeddings v2. The second method, Summaries Titan, consisted of embedding the procedure summaries using the same embedding model. By embedding summaries rather than document text, the retrieval accuracy improved by 7.8–15.8%. The third method, Summaries Cohere, involved embedding procedure summaries using Cohere Multilingual v3 on Amazon Bedrock. The Cohere Multilingual embedding model provided a noticeable improvement in retrieval accuracy compared to the Amazon Titan embedding models, with all top-k values above 90%.

The next three approaches use the reasoning retriever. We embedded the procedure summaries using the Cohere Multilingual model, retrieved 10 summaries during the initial retrieval step, and optionally applied the LLM-based reasoning step using Anthropic’s Claude 3 Haiku, Claude 3 Sonnet, or Claude 3.5 Sonnet on Amazon Bedrock. All three reasoning retrievers consistently outperform standard RAG techniques, achieving 12.5–17.5% higher top-k accuracies. Anthropic’s Claude 3.5 Sonnet delivered the highest performance with 98.9% top-1 accuracy. These results demonstrate how combining embedding-based retrieval with LLM-powered reasoning can improve RAG performance.

Answer generation

After collecting the necessary information, the agent responds using Boti’s distinctive communication style: concise, helpful messages in Rioplatense Spanish. We maintained this voice through prompt engineering that specified the following:

- Personality – Convey a warm and friendly tone, providing quick solutions to everyday problems

- Response length – Limit responses to a few sentences

- Structure – Organize content using lists and highlight key information using bold text

- Expression – Use emojis to mark important requirements and add visual cues

- Dialect – Incorporate Rioplatense linguistic features, including voseo, periphrastic future, and regional vocabulary (for example, “acordate,” “entrar,” “acá,” and “allá”).

Government procedures often address sensitive topics, like accidents, health, or security. To facilitate appropriate responses, we incorporated sentiment analysis into our knowledge base as metadata. This allows our system to route to different prompt templates. Sensitive topics are directed to prompts with reduced emoji usage and more empathetic language, whereas neutral topics receive standard templates.
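The routing described above might look like the following sketch. The sentiment labels, the template text, and the choice to fall back to the more cautious template when the label is missing or unrecognized are all assumptions made for illustration.

```python
# Hypothetical templates; the production prompts are not shown in the post.
PROMPT_TEMPLATES = {
    "neutral": (
        "Answer warmly in Rioplatense Spanish. "
        "Use emojis to mark key requirements."
    ),
    "sensitive": (
        "Answer with empathy in Rioplatense Spanish. "
        "Keep emoji use to a minimum."
    ),
}


def select_prompt_template(metadata: dict) -> str:
    """Pick a prompt template from the procedure's sentiment metadata."""
    sentiment = metadata.get("sentiment")
    # Assumption: use the cautious template if the label is missing or unknown.
    return PROMPT_TEMPLATES.get(sentiment, PROMPT_TEMPLATES["sensitive"])
```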

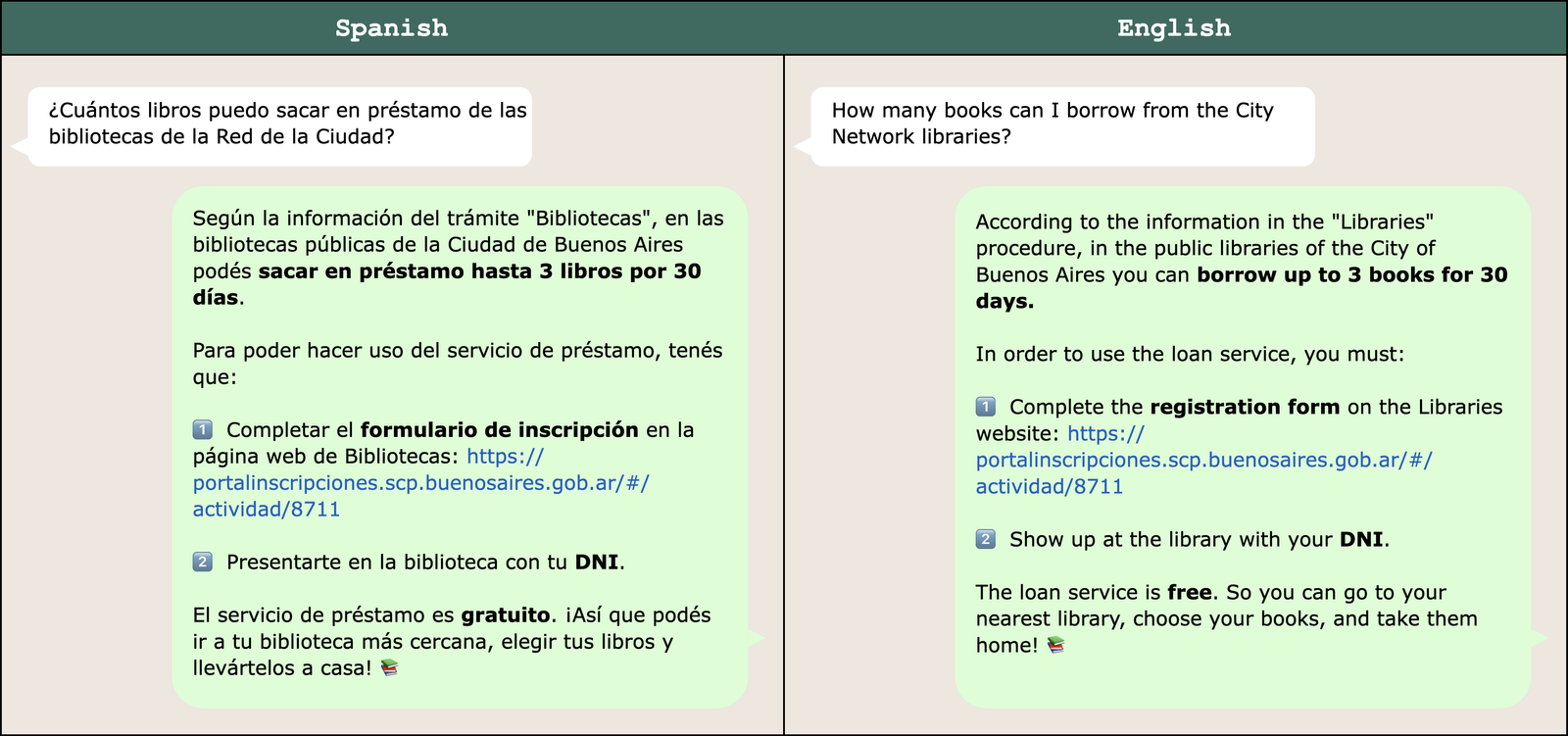

The following figure shows a sample response to a question about borrowing library books. It has been translated to English for convenience.

To validate our prompt engineering approach, subject matter experts at the Government of the City of Buenos Aires reviewed a sample of Boti’s responses. Their analysis confirmed high fidelity to Rioplatense Spanish, with 98% accuracy in voseo usage and 92% in periphrastic future usage.

Conclusion

This post described the agentic AI assistant built by the Government of the City of Buenos Aires and the GenAIIC to respond to citizens’ questions about government procedures. The solution consists of two primary components: an input guardrail system that helps prevent the system from responding to harmful user queries and a government procedures agent that retrieves relevant information and generates responses. The input guardrails effectively block harmful content, including queries with offensive language, harmful opinions, prompt injection, and unethical behaviors. The government procedures agent employs a novel reasoning retrieval method that disambiguates similar government procedures, achieving up to 98.9% top-1 retrieval accuracy and a 12.5–17.5% improvement over standard RAG methods. Through prompt engineering, responses are delivered in Rioplatense Spanish using Boti’s voice. Subject matter experts rated Boti’s linguistic performance highly, with 98% accuracy in voseo usage and 92% in periphrastic future usage.

As generative AI advances, we expect to continuously improve our solution. The expanding catalog of LLMs available in Amazon Bedrock makes it possible to experiment with newer, more powerful models. This includes models that process text, as explored in the solution in this post, as well as models that process speech, allowing for direct speech-to-speech interactions. We might also explore the fine-tuning capabilities of Amazon Bedrock to customize models so that they better capture the linguistic features of Rioplatense Spanish. Beyond model improvements, we can iterate on our agent framework. The agent’s tool set can be expanded to support other tasks associated with government procedures like account creation, form completion, and appointment scheduling. As the City Government develops new experiences for citizens, we can consider implementing multi-agent frameworks in which specialist agents, like the government procedures agent, handle specific tasks.


About the authors

Julieta Rappan is Director of the Digital Channels Department of the Buenos Aires City Government, where she coordinates the landscape of digital and conversational interfaces. She has extensive experience in the comprehensive management of strategic and technological projects, as well as in leading high-performance teams focused on the development of digital products and services. Her leadership drives the implementation of technological solutions with a focus on scalability, coherence, public value, and innovation—where generative technologies are beginning to play a central role.

Macarena Blasi is Chief of Staff at the Digital Channels Department of the Buenos Aires City Government, working across the city’s main digital services, including Boti—the WhatsApp-based virtual assistant—and the official Buenos Aires website. She began her journey working in conversational experience design, later serving as product owner and Operations Manager and then as Head of Experience and Content, leading multidisciplinary teams focused on improving the quality, accessibility, and usability of public digital services. Her work is driven by a commitment to building clear, inclusive, and human-centered experiences in the public sector.

María Candela Blanco is Operations Manager for Quality Assurance, Usability, and Continuous Improvement at the Buenos Aires Government, where she leads the content, research, and conversational strategy across the city’s main digital channels, including the Boti AI assistant and the official Buenos Aires website. Outside of tech, Candela studies literature at UNSAM and is deeply passionate about language, storytelling, and the ways they shape our interactions with technology.

Leandro Micchele is a Software Developer focused on applying AI to real-world use cases, with expertise in AI assistants, voice, and vision solutions. He serves as the technical lead and consultant for the Boti AI assistant at the Buenos Aires Government and works as a Software Developer at Telecom Argentina. Beyond tech, his discipline extends to martial arts: he has over 20 years of experience and currently teaches Aikido.

Hugo Albuquerque is a Deep Learning Architect at the AWS Generative AI Innovation Center. Before joining AWS, Hugo had extensive experience working as a data scientist in the media and entertainment and marketing sectors. In his free time, he enjoys learning other languages like German and practicing social dancing, such as Brazilian Zouk.

Enrique Balp is a Senior Data Scientist at the AWS Generative AI Innovation Center working on cutting-edge AI solutions. With a background in the physics of complex systems focused on neuroscience, he has applied data science and machine learning across healthcare, energy, and finance for over a decade. He enjoys hikes in nature, meditation retreats, and deep friendships.

Diego Galaviz is a Deep Learning Architect at the AWS Generative AI Innovation Center. Before joining AWS, he had over 8 years of expertise as a data scientist across diverse sectors, including financial services, energy, big tech, and cybersecurity. He holds a master’s degree in artificial intelligence, which complements his practical industry experience.

Laura Kulowski is a Senior Applied Scientist at the AWS Generative AI Innovation Center, where she works with customers to build generative AI solutions. Before joining Amazon, Laura completed her PhD at Harvard’s Department of Earth and Planetary Sciences and investigated Jupiter’s deep zonal flows and magnetic field using Juno data.

Rafael Fernandes is the LATAM leader of the AWS Generative AI Innovation Center, whose mission is to accelerate the development and implementation of generative AI in the region. Before joining Amazon, Rafael was a co-founder in the financial services industry space and a data science leader with over 12 years of experience in Europe and LATAM.


When AI Writes Code, Who Secures It? – O’Reilly

In early 2024, a striking deepfake fraud case in Hong Kong brought the vulnerabilities of AI-driven deception into sharp relief. A finance employee was duped during a video call by what appeared to be the CFO—but was, in fact, a sophisticated AI-generated deepfake. Convinced of the call’s authenticity, the employee made 15 transfers totaling over $25 million to fraudulent bank accounts before realizing it was a scam.

This incident exemplifies more than just technological trickery—it signals how trust in what we see and hear can be weaponized, especially as AI becomes more deeply integrated into enterprise tools and workflows. From embedded LLMs in enterprise systems to autonomous agents diagnosing and even repairing issues in live environments, AI is transitioning from novelty to necessity. Yet as it evolves, so too do the gaps in our traditional security frameworks—designed for static, human-written code—revealing just how unprepared we are for systems that generate, adapt, and behave in unpredictable ways.

Beyond the CVE Mindset

Traditional secure coding practices revolve around known vulnerabilities and patch cycles. AI changes the equation. A line of code can be generated on the fly by a model, shaped by manipulated prompts or data—creating new, unpredictable categories of risk like prompt injection or emergent behavior outside traditional taxonomies.

A 2025 Veracode study found that 45% of all AI-generated code contained vulnerabilities, with common flaws like weak defenses against XSS and log injection. (Some languages performed more poorly than others. Over 70% of AI-generated Java code had a security issue, for instance.) Another 2025 study showed that repeated refinement can make things worse: After just five iterations, critical vulnerabilities rose by 37.6%.

To keep pace, frameworks like the OWASP Top 10 for LLMs have emerged, cataloging AI-specific risks such as data leakage, model denial of service, and prompt injection. They highlight how current security taxonomies fall short—and why we need new approaches that model AI threat surfaces, share incidents, and iteratively refine risk frameworks to reflect how code is created and influenced by AI.

Easier for Adversaries

Perhaps the most alarming shift is how AI lowers the barrier to malicious activity. What once required deep technical expertise can now be done by anyone with a clever prompt: generating scripts, launching phishing campaigns, or manipulating models. AI doesn’t just broaden the attack surface; it makes it easier and cheaper for attackers to succeed without ever writing code.

In 2025, researchers unveiled PromptLock, the first AI-powered ransomware. Though only a proof of concept, it showed how theft and encryption could be automated with a local LLM at remarkably low cost: about $0.70 per full attack using commercial APIs—and essentially free with open source models. That kind of affordability could make ransomware cheaper, faster, and more scalable than ever.

This democratization of offense means defenders must prepare for attacks that are more frequent, more varied, and more creative. The Adversarial ML Threat Matrix, founded by Ram Shankar Siva Kumar during his time at Microsoft, helps by enumerating threats to machine learning and offering a structured way to anticipate these evolving risks. (He’ll be discussing the difficulty of securing AI systems from adversaries at O’Reilly’s upcoming Security Superstream.)

Silos and Skill Gaps

Developers, data scientists, and security teams still work in silos, each with different incentives. Business leaders push for rapid AI adoption to stay competitive, while security leaders warn that moving too fast risks catastrophic flaws in the code itself.

These tensions are amplified by a widening skills gap: Most developers lack training in AI security, and many security professionals don’t fully understand how LLMs work. As a result, the old patchwork fixes feel increasingly inadequate when the models are writing and running code on their own.

The rise of “vibe coding”—relying on LLM suggestions without review—captures this shift. It accelerates development but introduces hidden vulnerabilities, leaving both developers and defenders struggling to manage novel risks.

From Avoidance to Resilience

AI adoption won’t stop. The challenge is moving from avoidance to resilience. Frameworks like the Databricks AI Security Framework (DASF) and the NIST AI Risk Management Framework provide practical guidance on embedding governance and security directly into AI pipelines, helping organizations move beyond ad hoc defenses toward systematic resilience. The goal isn’t to eliminate risk but to enable innovation while maintaining trust in the code AI helps produce.

Transparency and Accountability

Research shows AI-generated code is often simpler and more repetitive, but also more vulnerable, with risks like hardcoded credentials and path traversal exploits. In other words, AI-generated code is more likely to introduce high-risk security vulnerabilities. Without observability tools such as prompt logs, provenance tracking, and audit trails, developers can’t ensure reliability or accountability.

AI’s opacity compounds the problem: A function may appear to “work” yet conceal vulnerabilities that are difficult to trace or explain. Without explainability and safeguards, autonomy quickly becomes a recipe for insecure systems. Tools like MITRE ATLAS can help by mapping adversarial tactics against AI models, offering defenders a structured way to anticipate and counter threats.

Looking Ahead

Securing code in the age of AI requires more than patching: it means breaking silos, closing skill gaps, and embedding resilience into every stage of development. The risks may feel familiar, but AI scales them dramatically. Frameworks like the Databricks AI Security Framework (DASF) and the NIST AI Risk Management Framework provide structures for governance and transparency, while MITRE ATLAS maps adversarial tactics and real-world attack case studies, giving defenders a structured way to anticipate and mitigate threats to AI systems.

The choices we make now will determine whether AI becomes a trusted partner—or a shortcut that leaves us exposed.


How Much Freedom Do Teachers Have in the Classroom? In 2025, It’s Complicated.

A teacher’s classroom setup can reveal a lot about their approach to learning. April Jones, who is a ninth grade algebra teacher in San Antonio, Texas, has met more than 100 students this school year, in most cases for the first time. Part of what makes an effective teacher is an ability to be personable with students.

“If a kid likes coming to your class or likes chatting with you or seeing you, they’re more likely to learn from you,” said Jones. “Trying to do something where kids can come in and they see even one piece of information on a poster, and they go, ‘OK, she gets it,’ or ‘OK, she seems cool, I’m going to sit down and try,’ I think, is always my goal.”

One way she does this is by covering the muted yellow walls, a color she wouldn’t have chosen herself, with posters, signs and banners that Jones has accumulated in the 10 years she’s been teaching: some from colleagues, some from students and some bought on her own dime.

Among the items taped near her desk are a poster of the women who made meaningful contributions to mathematics, a sign recognizing her as a 2025 teacher of the year and a collection of punny posters, one of which features a predictable miscommunication between Lisa and Homer Simpson over the meaning of Pi.

Until now, Jones has been decorating on autopilot. Realizing she’s saved the most controversial for last, she looks down at the “Hate Has No Home Here” sign that’s been the subject of scrutiny from her district and online. But it’s also given her hope.

At a time when states are enforcing laws challenging what teachers can teach, discuss and display in classrooms, many districts are signaling a willingness to overcomply with the Trump administration’s executive order that labeled diversity, equity and inclusion programs, policies and guidance an unlawful use of federal funding. How teachers are responding has varied based on where they live and work, and how comfortable they are with risk.

New Rules on Classroom Expression

Like many public school teachers in the U.S., Jones lives in a state, Texas, that recently introduced new laws concerning classroom expression that are broad in scope and subjective in nature. Texas’ Senate Bill 12 took effect Sept. 1. It prohibits programs, discussions and public performances related to race, ethnicity, gender identity and sexual orientation in public K-12 schools.

Administrators in Jones’ district asked that she take down the “Hate Has No Home Here” sign, which includes three hearts — two filled in to resemble the Pride and Transgender Pride flags, and one depicting a gradient of skin colors. Jones refused, garnering favorable media attention for her defiance, and widespread community support both at school board meetings and online, leaving her poised to prevail, at least in the court of public opinion. Then, all teachers of the North East Independent School District received the same directive: Pride symbolism needs to be covered for the 2025-26 school year.

Jones finished decorating her classroom by hanging the banner.

“I did fold the bottom so you can’t see the hearts,” Jones said, calling the decision heartbreaking. “It does almost feel like a defeat, but with the new law, you just don’t know.”

The new law is written ambiguously and touches a wide range of actions and situations without offering guidance, leaving Texas educators to decode it for themselves. Jones’ district is taking complaints on a case-by-case basis: With Jones’ sign, the district agreed the words themselves were OK as an anti-bullying message, but not the symbolism associated with the multicolored hearts.

Jones has sympathy for the district. Administrators have to ensure teachers are in compliance if the district receives a complaint. In the absence of a clear legal standard, administrators are forced to decide what is and isn’t allowed — a job “nobody wants to have to do,” Jones says.

This comes as Texas public school teachers face a mandate to display donated posters of the Ten Commandments in their classrooms, a requirement that is now being challenged in the courts. And in other states, such as Florida, Arkansas and Alabama, officials have passed laws banning the teaching of “divisive concepts.” Now, teachers in those states have to rethink their approach to teaching hard histories that have always been part of the curriculum, such as slavery and Civil Rights, and how to do so in a way that provides students a complete history lesson.

Meanwhile, PEN America identified more than a dozen states that considered laws prohibiting teachers from displaying flags or banners related to political viewpoints, sexual orientation and gender identity this year. Utah, Idaho and Montana passed versions of flag bans.

“The bills [aren’t] necessarily saying, ‘No LGBTQ+ flags or Black Lives Matter flags,’ but that’s really implied, especially when you look at what the sponsors of the bills are saying,” said Madison Markham, a program coordinator with PEN America’s Freedom to Read.

Montana’s HB25-819 does explicitly restrict flags representing any political party, race, sexual orientation, gender or political ideology. Co-sponsors of similar bills in other states have used the Pride flag as an example of what they’re trying to eliminate from classrooms. Earlier this year, Idaho State Rep. Ted Hill cited an instance involving a teacher giving a class via Zoom.

“There was the Pride flag in the background. Not the American flag, but the Pride flag,” said Hill during an Idaho House Education Committee presentation in January. “She’s doing a Zoom call, and that’s not OK.”

Markham at PEN America sees flag, sign and display bans as natural outgrowths of physical and digital book censorship. She first noticed a shift in legislation challenging school libraries that eventually evolved into Florida’s “Don’t Say Gay” law, where openly LGBTQ+ teachers began censoring themselves out of caution even before it fully took effect.

“Teachers who were in a same-sex relationship were taking down pictures of themselves and their partner in their classroom,” Markham recalled. “They took them down because they were scared of the implications.”

The next step, digital censorship, Markham says, involves web filtering or turning off district-wide access to ebooks, research databases and other collections that can be subjected to keyword searches that omit context.

“This language that we see often weaponized, like ‘harmful to minors’, ‘obscene materials,’ even though obscene materials [already] have been banned in schools — [lawmakers] are putting this language in essentially to intimidate districts into overcomplying,” said Markham.

State Flag Imbroglio

To understand how digital environments became susceptible to the same types of censorship as physical books, one doesn’t have to look farther than state laws that apply to online catalogs. In 2023, Texas’ READER Act standardized how vendors label licensed products to public schools. To accommodate Texas and other states with similar digital access restrictions, vendors have needed to add content warnings to materials. There have already been notable mishaps.

In an example that captured a lot of media attention earlier this year, the Lamar Consolidated Independent School District, outside Houston, turned off access to a lesson about Virginia because it had a picture of the Virginia state flag, which depicts the Roman goddess Virtus, whose bare breast is exposed. That image put the Virginia flag in violation of the district’s local library materials policy.

Anne Russey, co-founder of the Texas Freedom to Read Project and a parent herself, learned of the district’s action and started looking into what happened. She found the district went to great lengths to overcomply with the new READER Act by rewriting the library materials policy; it even went so far as to add more detailed descriptions of what is considered a breast. Now, Russey says, students can learn about all of the original 13 colonies, except, perhaps, Virginia.

“As parents, we don’t believe children need access at their schools to sexually explicit material or books that are pervasively vulgar,” said Russey. “[But] we don’t think the Virginia flag qualifies as that, and I don’t think most people think that it qualifies.”

Disturbing Trends

While there isn’t yet a complete picture of how these laws are transforming educational environments, trends are beginning to emerge. School boards and districts have already exercised unequivocal readings of the laws that can limit an entire district’s access to materials and services.

A recent study from First Book found a correlation between book bans and reading engagement among students at a moment when literacy rates are trending down nationally overall. The erosion of instructional autonomy in K-12 settings has led more teachers to look outside the profession, to other districts or to charter and private schools.

Rachel Perera, a fellow of the Brown Center on Education Policy, Teacher Rights and Private Schools with the Brookings Institution, says that private and charter schools offer varying degrees of operational autonomy, but there are some clear drawbacks: limited transparency and minimal regulations and government oversight of charter and private schools mean there are fewer legal protections for teachers in those systems.

“One cannot rely on the same highly regulated standard of information available in the public sector,” said Perera. “Teachers should be a lot more wary of private school systems. The default assumption of trust in the private sector leadership is often not warranted.”

Last year, English teacher John McDonough was at the center of a dispute at his former charter school in New Hampshire. Administrators received a complaint about his Pride flag and asked him to remove it. McDonough’s dismay over the request became an ongoing topic of discussion at the charter school board meetings.

“During one of the meetings about my classroom, we had people from the community come in and say that they were positive that I was like a Satanist,” McDonough recalled. “We had a board member that was convinced I was trying to send secret messages and code [about] anti-Christian messages through my room decor.”

The situation was made worse by what McDonough described as a loss of agency over his curriculum for the year.

“All of a sudden I was having the principal drop by my room and go, ‘OK here’s your deck of worksheets. These are the worksheets you’re going to be teaching this week, the next week, and the next week,’ until finally, everything was so intensely structured that there was zero time for me to adjust for anything,” he said. “The priority seemed to be not that all of the kids understand the concepts, but ‘are you sticking as rigidly to this set of worksheets as you can?’”

It didn’t come as a surprise when McDonough’s contract wasn’t renewed for the current school year. But he landed a teaching job at another nearby charter school. He described the whole ordeal as “eye-opening.”

Researchers argue that censorship begets further censorship. The restrictive approach used to remove books about race, sex, and gender creates the opportunity for politically- and ideologically-motivated challenges to other subjects and materials under the guise of protecting minors or maintaining educational standards. Without effective guidance from lawmakers or the courts, it can be hard to know what is or isn’t permissible, experts say.

Legal Experts Weigh In

First Amendment researchers and legal experts are trying to meet the moment. Jenna Leventhal, senior policy counsel at the ACLU, contends that the First Amendment does more to protect students than teachers, particularly on public school grounds.

As a result, Leventhal is hesitant to advise teachers. There is too much variability among who is most affected in terms of the subjects — she cited art, world history and foreign languages as examples — and where they live and the districts where they teach. In general, however, the First Amendment still protects controversial, disfavored and uncomfortable speech, she says.

“Let’s say you have a category of speech that you are banning,” Leventhal said. “Someone has to decide what fits in that category of speech and what doesn’t. And when you give that opportunity to the government, it’s ripe for abuse.”

Teachers are expected to use their professional judgment to create effective learning environments and foster students’ critical thinking, discovery and expression of their beliefs. And yet, in recent years, many states have proposed and passed laws that limit how teachers, librarians and administrators can discuss race, sex and gender, creating a void in what some students can learn about these subjects, which can affect how they understand their own identity, historical events and related risk factors for their personal safety.

The Limits of Freedom

McDonough in New Hampshire says when he first started displaying the Pride flag in his classroom, it was at the request of a student.

“I was just like, ‘this space is a shared space, and the kids deserve a voice in what it looks like,’” McDonough said.

This year, he left the choice of whether or not to hang the Pride flag in his new classroom up to his students. His students decided as a group that their community was safe and supportive, and therefore they didn’t need to hang a Pride flag.

Meanwhile in Texas, SB-12 has created a de facto parental notification requirement in many situations, including those involving gender and sexuality. Now, when Jones’ students start to tell her something, she is cautious.

She sometimes fields questions from students by asking if their parents know what they’re about to say.

“Because if not,” she warns them, “depending on what you tell me, they’re going to.”

Jones wonders if her compliance with her state’s legal requirements is encroaching on her personal identity beyond the classroom.

“I don’t want to get myself into a situation where I’m mandated to report something, and if I make the choice not to, I could be held liable,” Jones said.

This isn’t the dynamic Jones wants to have with her students. She hopes that going forward, the new law doesn’t push her toward becoming a version of her teacher-self she doesn’t want to be.

“If a student trusts me to come out or to tell me something about their life, I want them to be able to do that,” she added.

Maintaining professional integrity and protecting their right to create a welcoming classroom environment are at the heart of the resistance among some schools and teachers that are defying state and federal guidance against inclusion language. Cases are being decided at the district level. In northern Virginia, a handful of districts are vowing to keep their DEI policies intact, even as the U.S. Department of Education threatens defunding. An Idaho teacher who last year refused a district request to remove an “All Are Welcome Here” sign from her classroom now works for the Boise School District. That district decided over the summer that it would allow teachers to hang similar signs, in spite of guidance to the contrary from the state’s attorney general.

Educators in other states have also refused orders to remove displays and books and to otherwise water down their curriculums, drawing more attention to the realities of the environments teachers are having to navigate this fall. It’s the adoption of a mindset that censorship is a choice.

“I’m not teaching politics,” Jones said. “I’m not promoting anything. Choosing to have a rainbow heart or a pin on my lanyard — someone would have to look at that and then complain to someone [else] that they feel is above me. And that is a choice that they make rather than seeing this [object] and [choosing] to move on.”


Automate advanced agentic RAG pipeline with Amazon SageMaker AI

Retrieval Augmented Generation (RAG) is a fundamental approach for building advanced generative AI applications that connect large language models (LLMs) to enterprise knowledge. However, crafting a reliable RAG pipeline is rarely a one-shot process. Teams often need to test dozens of configurations (varying chunking strategies, embedding models, retrieval techniques, and prompt designs) before arriving at a solution that works for their use case. Furthermore, managing a high-performing RAG pipeline involves complex deployment, and teams that manage their pipelines manually often face inconsistent results, time-consuming troubleshooting, and difficulty reproducing successful configurations. Teams struggle with scattered documentation of parameter choices, limited visibility into component performance, and the inability to systematically compare different approaches. Additionally, the lack of automation creates bottlenecks in scaling RAG solutions, increases operational overhead, and makes it challenging to maintain quality across multiple deployments and environments from development to production.

In this post, we walk through how to streamline your RAG development lifecycle from experimentation to automation and operationalize your RAG solution for production deployments with Amazon SageMaker AI, so your team can experiment efficiently, collaborate effectively, and drive continuous improvement. By combining experimentation and automation with SageMaker AI, you can verify that the entire pipeline is versioned, tested, and promoted as a cohesive unit. This approach supports traceability, reproducibility, and risk mitigation as the RAG system advances from development to production, enabling continuous improvement and reliable operation in real-world scenarios.

Solution overview

By streamlining both experimentation and operational workflows, teams can use SageMaker AI to rapidly prototype, deploy, and monitor RAG applications at scale. Its integration with SageMaker managed MLflow provides a unified platform for tracking experiments, logging configurations, and comparing results, supporting reproducibility and robust governance throughout the pipeline lifecycle. Automation also minimizes manual intervention, reduces errors, and streamlines the process of promoting the finalized RAG pipeline from the experimentation phase directly into production. With this approach, every stage from data ingestion to output generation operates efficiently and securely, while making it straightforward to transition validated solutions from development to production deployment.

For automation, Amazon SageMaker Pipelines orchestrates end-to-end RAG workflows, from data preparation and vector embedding generation to model inference and evaluation, all with repeatable and version-controlled code. Integrating continuous integration and delivery (CI/CD) practices further enhances reproducibility and governance, enabling automated promotion of validated RAG pipelines from development to staging or production environments. Promoting an entire RAG pipeline (not just an individual subsystem of the RAG solution like a chunking layer or orchestration layer) to higher environments is essential because data, configurations, and infrastructure can vary significantly across staging and production. In production, you often work with live, sensitive, or much larger datasets, and the way data is chunked, embedded, retrieved, and generated can impact system performance and output quality in ways that are not always apparent in lower environments. Each stage of the pipeline (chunking, embedding, retrieval, and generation) must be thoroughly evaluated with production-like data for accuracy, relevance, and robustness. Metrics at every stage (such as chunk quality, retrieval relevance, answer correctness, and LLM evaluation scores) must be monitored and validated before the pipeline is trusted to serve real users.
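As a minimal sketch of the validation idea described above, the following Python snippet checks stage-level metrics against minimum thresholds before a pipeline is considered for promotion. The metric names and threshold values are illustrative assumptions, not values taken from the solution.

```python
from typing import Dict, List

# Hypothetical minimum scores per metric; tune these for your own use case.
PROMOTION_THRESHOLDS: Dict[str, float] = {
    "retrieval_relevance": 0.80,
    "answer_correctness": 0.75,
}


def failed_gates(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return the names of gated metrics that are missing or below their threshold."""
    return [
        name
        for name, minimum in thresholds.items()
        if metrics.get(name, float("-inf")) < minimum
    ]


def can_promote(metrics: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """A pipeline is promotable only when every gated metric passes."""
    return not failed_gates(metrics, thresholds)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a stage that did not report a score should block promotion rather than pass silently.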

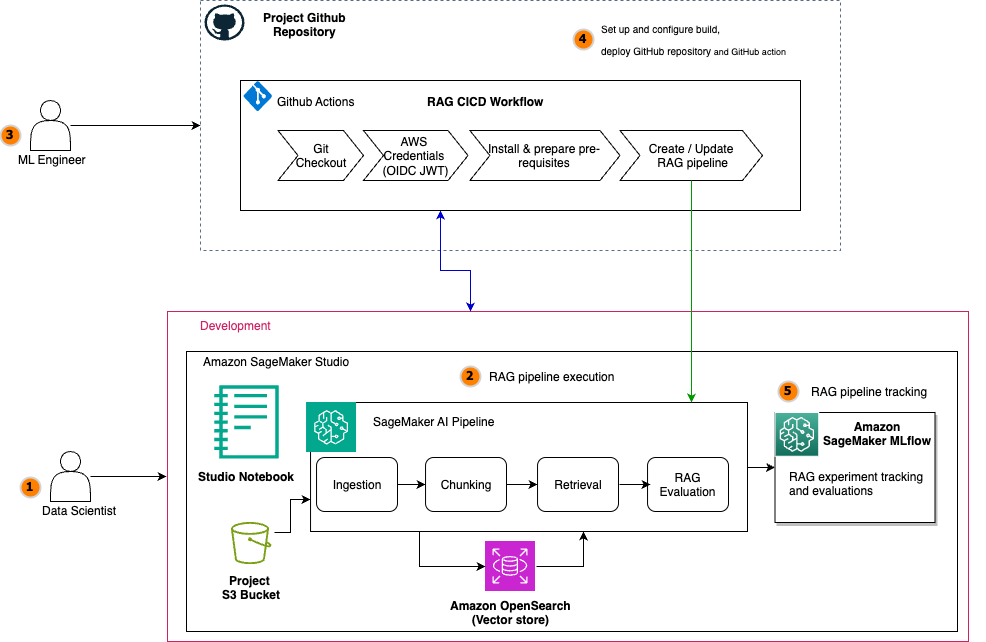

The following diagram illustrates the architecture of a scalable RAG pipeline built on SageMaker AI, with MLflow experiment tracking integrated at every stage and the RAG pipeline automated using SageMaker Pipelines. SageMaker managed MLflow provides a unified platform for centralized RAG experiment tracking across all pipeline stages. Every MLflow execution run, whether for RAG chunking, ingestion, retrieval, or evaluation, sends execution logs, parameters, metrics, and artifacts to SageMaker managed MLflow. The architecture uses SageMaker Pipelines to orchestrate the entire RAG workflow through versioned, repeatable automation. These RAG pipelines manage dependencies between critical stages, from data ingestion and chunking to embedding generation, retrieval, and final text generation, supporting consistent execution across environments. Integrated with CI/CD practices, SageMaker Pipelines enables promotion of validated RAG configurations from development to staging and production environments while maintaining infrastructure as code (IaC) traceability.

For the operational workflow, the solution follows a structured lifecycle: During experimentation, data scientists iterate on pipeline components within Amazon SageMaker Studio notebooks while SageMaker managed MLflow captures parameters, metrics, and artifacts at every stage. Validated workflows are then codified into SageMaker Pipelines and versioned in Git. The automated promotion phase uses CI/CD to trigger pipeline execution in target environments, rigorously validating stage-specific metrics (chunk quality, retrieval relevance, answer correctness) against production data before deployment. The other core components include:

- Amazon SageMaker JumpStart for accessing the latest LLM models and hosting them on SageMaker endpoints for model inference with the embedding model huggingface-textembedding-all-MiniLM-L6-v2 and text generation model deepseek-llm-r1-distill-qwen-7b.

- Amazon OpenSearch Service as a vector database to store document embeddings with the OpenSearch index configured for k-nearest neighbors (k-NN) search.

- The Amazon Bedrock model anthropic.claude-3-haiku-20240307-v1:0 as an LLM-as-a-judge component for all the MLflow LLM evaluation metrics.

- A SageMaker Studio notebook for a development environment to experiment and automate the RAG pipelines with SageMaker managed MLflow and SageMaker Pipelines.

You can implement this agentic RAG solution code from the GitHub repository. In the following sections, we use snippets from this code in the repository to illustrate RAG pipeline experiment evolution and automation.

Prerequisites

You must have the following prerequisites:

- An AWS account with billing enabled.

- A SageMaker AI domain. For more information, see Use quick setup for Amazon SageMaker AI.

- Access to a running SageMaker managed MLflow tracking server in SageMaker Studio. For more information, see the instructions for setting up a new MLflow tracking server.

- Access to SageMaker JumpStart to host LLM embedding and text generation models.

- Access to the Amazon Bedrock foundation models (FMs) for RAG evaluation tasks. For more details, see Subscribe to a model.

SageMaker MLflow RAG experiment

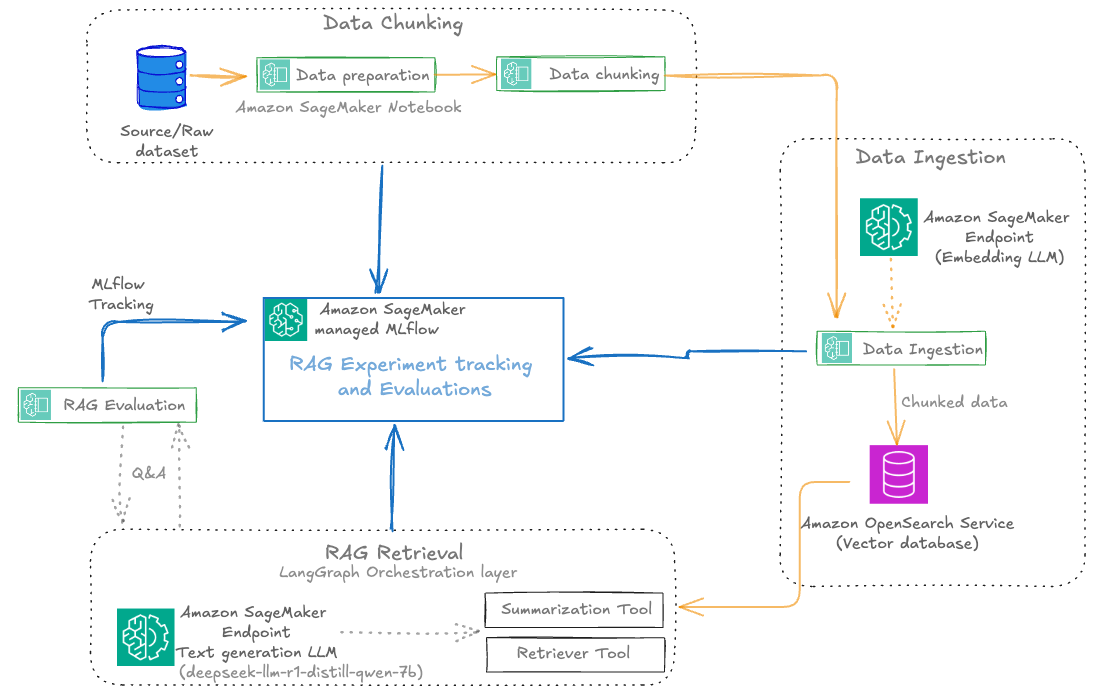

SageMaker managed MLflow provides a powerful framework for organizing RAG experiments, so teams can manage complex, multi-stage processes with clarity and precision. The following diagram illustrates the RAG experiment stages with SageMaker managed MLflow experiment tracking at every stage. This centralized tracking offers the following benefits:

- Reproducibility: Every experiment is fully documented, so teams can replay and compare runs at any time

- Collaboration: Shared experiment tracking fosters knowledge sharing and accelerates troubleshooting

- Actionable insights: Visual dashboards and comparative analytics help teams identify the impact of pipeline changes and drive continuous improvement

The following diagram illustrates the solution workflow.

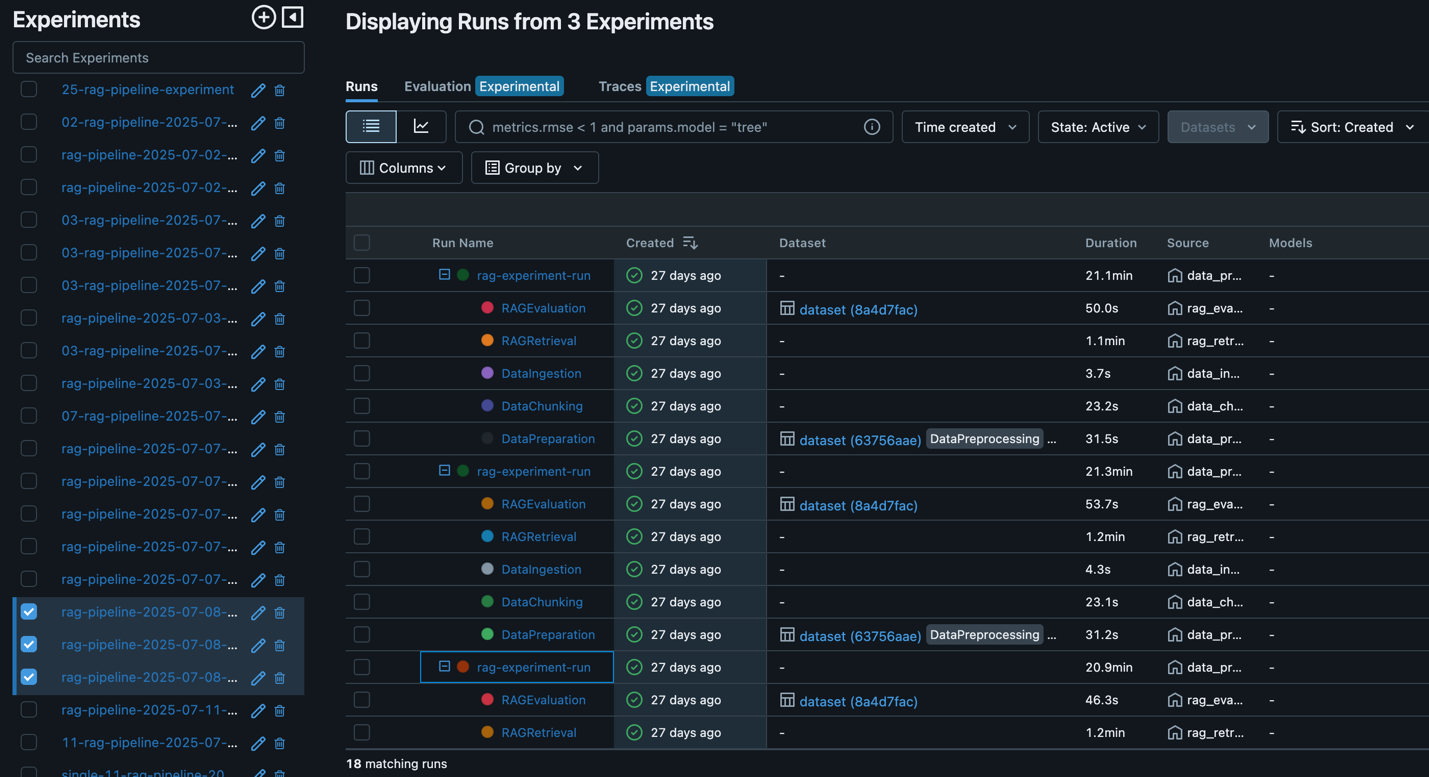

Each RAG experiment in MLflow is structured as a top-level run under a specific experiment name. Within this top-level run, nested runs are created for each major pipeline stage, such as data preparation, data chunking, data ingestion, RAG retrieval, and RAG evaluation. This hierarchical approach allows for granular tracking of parameters, metrics, and artifacts at every step, while maintaining a clear lineage from raw data to final evaluation results.

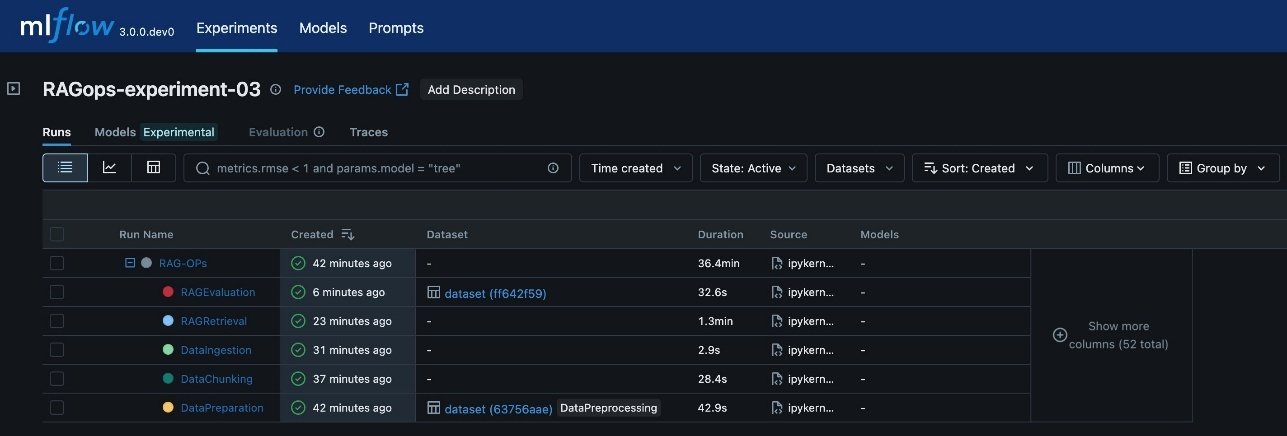

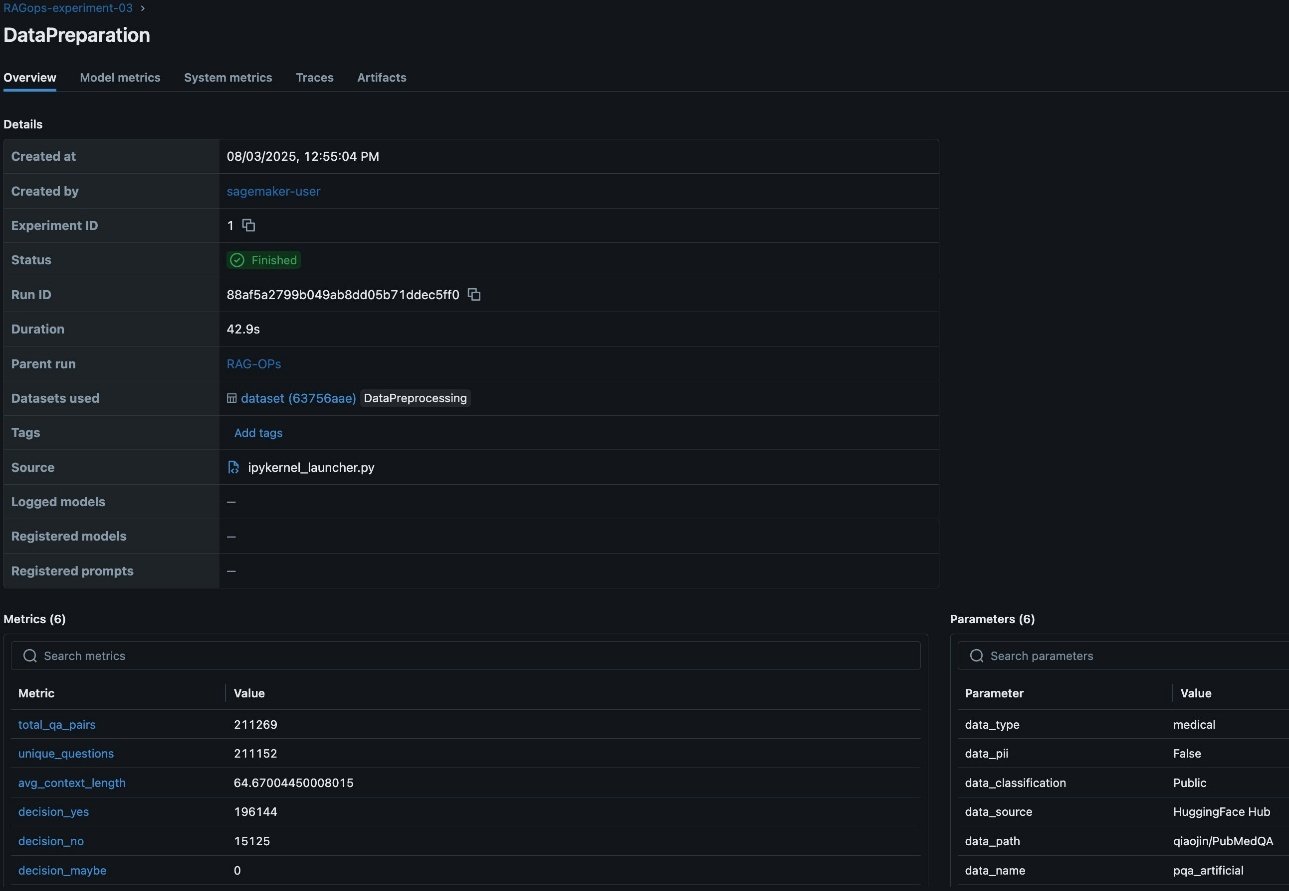

The following screenshot shows an example of the experiment details in MLflow.

The various RAG pipeline steps defined are:

- Data preparation: Logs dataset version, preprocessing steps, and initial statistics

- Data chunking: Records chunking strategy, chunk size, overlap, and resulting chunk counts

- Data ingestion: Tracks embedding model, vector database details, and document ingestion metrics

- RAG retrieval: Captures retrieval model, context size, and retrieval performance metrics

- RAG evaluation: Logs evaluation metrics (such as answer similarity, correctness, and relevance) and sample results

This visualization provides a clear, end-to-end view of the RAG pipeline’s execution, so you can trace the impact of changes at any stage and achieve full reproducibility. The architecture supports scaling to multiple experiments, each representing a distinct configuration or hypothesis (for example, different chunking strategies, embedding models, or retrieval parameters). MLflow’s experiment UI visualizes these experiments side by side, enabling comparison and analysis across runs. This structure is especially valuable in enterprise settings, where dozens or even hundreds of experiments might be conducted to optimize RAG performance.

We use MLflow experimentation throughout the RAG pipeline to log metrics and parameters, and the different experiment runs are initialized as shown in the following code snippet:

RAG pipeline experimentation

The key components of the RAG workflow are ingestion, chunking, retrieval, and evaluation, which we explain in this section.

Data ingestion and preparation

In the RAG workflow, rigorous data preparation is foundational to downstream performance and reliability. Tracking detailed metrics on data quality, such as the total number of question-answer pairs, the count of unique questions, average context length, and initial evaluation predictions, provides essential visibility into the dataset’s structure and suitability for RAG tasks. These metrics help validate that the dataset is comprehensive, diverse, and contextually rich, which directly impacts the relevance and accuracy of the RAG system’s responses. Additionally, logging critical RAG parameters like the data source, detected personally identifiable information (PII) types, and data lineage information is vital for maintaining compliance, reproducibility, and trust in enterprise environments. Capturing this metadata in SageMaker managed MLflow supports robust experiment tracking, auditability, efficient comparison, and root cause analysis across multiple data preparation runs, as visualized in the MLflow dashboard. This disciplined approach to data preparation lays the groundwork for effective experimentation, governance, and continuous improvement throughout the RAG pipeline. The following screenshot shows an example of the experiment run details in MLflow.

Data chunking

After data preparation, the next step is to split documents into manageable chunks for efficient embedding and retrieval. This process is pivotal, because the quality and granularity of chunks directly affect the relevance and completeness of answers returned by the RAG system. The RAG workflow in this post supports experimentation and RAG pipeline automation with both fixed-size and recursive chunking strategies for comparison and validation. However, this RAG solution can be expanded to many other chunking techniques.

- FixedSizeChunker divides text into uniform chunks with configurable overlap

- RecursiveChunker splits text along logical boundaries such as paragraphs or sentences
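To make the two strategies concrete, here is a simplified sketch of what such chunkers could look like. The class names come from the post, but the implementations, method names, and default values are assumptions for illustration; in particular, this recursive version does not merge small pieces back together, and the separators it splits on are dropped from the output.

```python
from typing import List, Sequence


class FixedSizeChunker:
    """Split text into fixed-size character windows with a configurable overlap."""

    def __init__(self, chunk_size: int = 500, chunk_overlap: int = 50) -> None:
        if not 0 <= chunk_overlap < chunk_size:
            raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
        self.chunk_size = chunk_size
        self.chunk_overlap = chunk_overlap

    def chunk(self, text: str) -> List[str]:
        step = self.chunk_size - self.chunk_overlap
        chunks: List[str] = []
        start = 0
        while start < len(text):
            chunks.append(text[start : start + self.chunk_size])
            if start + self.chunk_size >= len(text):
                break  # this window already reached the end of the text
            start += step
        return chunks


class RecursiveChunker:
    """Split on progressively finer separators until each piece fits chunk_size."""

    def __init__(
        self,
        chunk_size: int = 500,
        separators: Sequence[str] = ("\n\n", ". ", " "),  # paragraph, sentence, word
    ) -> None:
        self.chunk_size = chunk_size
        self.separators = tuple(separators)

    def chunk(self, text: str) -> List[str]:
        return self._split(text.strip(), level=0)

    def _split(self, text: str, level: int) -> List[str]:
        if len(text) <= self.chunk_size:
            return [text] if text else []
        if level >= len(self.separators):
            # No separator left: fall back to a hard character split.
            return [text[i : i + self.chunk_size] for i in range(0, len(text), self.chunk_size)]
        pieces: List[str] = []
        for part in text.split(self.separators[level]):
            pieces.extend(self._split(part.strip(), level + 1))
        return pieces
```

For example, `FixedSizeChunker(chunk_size=4, chunk_overlap=2).chunk("abcdefghij")` yields four windows that each share two characters with their neighbor.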

Tracking detailed chunking metrics such as total_source_contexts_entries, total_contexts_chunked, and total_unique_chunks_final is crucial for understanding how much of the source data is represented, how effectively it is segmented, and whether the chunking approach is yielding the desired coverage and uniqueness. These metrics help diagnose issues like excessive duplication or under-segmentation, which can impact retrieval accuracy and model performance.
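The sketch below shows one way these three metrics could be computed. The metric names are taken from the post, but their exact definitions here are assumptions: the number of source contexts, the number of contexts that produced at least one chunk, and the number of distinct chunks after deduplication. The helper `chunking_metrics` is hypothetical.

```python
from typing import Callable, Dict, Iterable, List


def chunking_metrics(
    contexts: Iterable[str],
    chunk_fn: Callable[[str], List[str]],
) -> Dict[str, int]:
    """Summarize coverage and uniqueness of a chunking run."""
    contexts = list(contexts)
    contexts_chunked = 0
    unique_chunks = set()
    for context in contexts:
        chunks = chunk_fn(context)
        if chunks:
            contexts_chunked += 1
        unique_chunks.update(chunks)
    return {
        "total_source_contexts_entries": len(contexts),
        "total_contexts_chunked": contexts_chunked,
        "total_unique_chunks_final": len(unique_chunks),
    }
```

The returned dictionary has the flat name-to-number shape that `mlflow.log_metrics` accepts, so it can be logged in a single call inside the chunking run.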

Additionally, logging parameters such as chunking_strategy_type (for example, FixedSizeChunker), chunking_strategy_chunk_size (for example, 500 characters), and chunking_strategy_chunk_overlap provides transparency and reproducibility for each experiment. Capturing these details in SageMaker managed MLflow helps teams systematically compare the impact of different chunking configurations, optimize for efficiency and contextual relevance, and maintain a clear audit trail of how chunking decisions evolve over time. The MLflow dashboard makes it straightforward to visualize and analyze these parameters and metrics, supporting data-driven refinement of the chunking stage within the RAG pipeline. The following screenshot shows an example of the experiment run details in MLflow.

After the documents are chunked, the next step is to convert these chunks into vector embeddings using a SageMaker embedding endpoint, after which the embeddings are ingested into a vector database such as OpenSearch Service for fast semantic search. This ingestion phase is crucial because the quality, completeness, and traceability of what enters the vector store directly determine the effectiveness and reliability of downstream retrieval and generation stages.

Tracking ingestion metrics such as the number of documents and chunks ingested provides visibility into pipeline throughput and helps identify bottlenecks or data loss early in the process. Logging detailed parameters, including the embedding model ID, endpoint used, and vector database index, is essential for reproducibility and auditability. This metadata helps teams trace exactly which model and infrastructure were used for each ingestion run, supporting root cause analysis and compliance, especially when working with evolving datasets or sensitive information.
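As an illustration of the vector store side of ingestion, the following sketch builds an OpenSearch index definition with k-NN enabled. The field names `embedding` and `text` and the helper `knn_index_body` are assumptions; the dimension of 384 matches the output size of the all-MiniLM-L6-v2 embedding model.

```python
# all-MiniLM-L6-v2 produces 384-dimensional sentence embeddings.
EMBEDDING_DIMENSION = 384


def knn_index_body(dimension: int = EMBEDDING_DIMENSION) -> dict:
    """Build an index definition with a knn_vector field for chunk embeddings."""
    return {
        "settings": {"index": {"knn": True}},  # enable k-NN search on this index
        "mappings": {
            "properties": {
                "embedding": {"type": "knn_vector", "dimension": dimension},
                "text": {"type": "text"},  # the original chunk text
            }
        },
    }
```

The vector dimension in the mapping must match the embedding model, which is one reason the embedding model ID and the index name are worth logging together for each ingestion run.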

Retrieval and generation

For a given query, we generate an embedding and retrieve the top-k relevant chunks from OpenSearch Service. For answer generation, we use a SageMaker LLM endpoint. The retrieved context and the query are combined into a prompt, and the LLM generates an answer. Finally, we orchestrate retrieval and generation using LangGraph, enabling stateful workflows and advanced tracing:
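The following is a library-free sketch of the retrieve-then-generate flow, not the LangGraph graph from the repository. The three callables (`embed`, `search`, `generate`) stand in for the SageMaker embedding endpoint, the OpenSearch query, and the SageMaker LLM endpoint; all function names, the prompt wording, and the `embedding` field name are assumptions.

```python
from typing import Callable, Dict, List, Sequence


def knn_query_body(query_vector: Sequence[float], k: int = 3) -> dict:
    """Build an OpenSearch k-NN query that returns the k nearest chunks."""
    return {
        "size": k,
        "query": {"knn": {"embedding": {"vector": list(query_vector), "k": k}}},
    }


def build_prompt(question: str, contexts: List[str]) -> str:
    """Combine the retrieved context and the query into a single prompt."""
    context_block = "\n\n".join(contexts)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_question(
    question: str,
    embed: Callable[[str], Sequence[float]],
    search: Callable[[dict], List[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> Dict[str, object]:
    """Run retrieval, then generation, and return the intermediate state."""
    query_vector = embed(question)
    contexts = search(knn_query_body(query_vector, k))
    prompt = build_prompt(question, contexts)
    return {
        "question": question,
        "contexts": contexts,
        "prompt": prompt,
        "answer": generate(prompt),
    }
```

Returning the intermediate state (contexts and prompt) alongside the answer keeps each step inspectable, which is the same property a stateful graph framework provides for tracing.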

With the generative AI agent defined using the LangGraph framework, the agentic layers are evaluated for each iteration of RAG development, verifying the efficacy of the RAG solution for agentic applications. Each retrieval and generation run is logged to SageMaker managed MLflow, capturing the prompt, generated response, and key metrics and parameters such as retrieval performance, top-k values, and the specific model endpoints used. Tracking these details in MLflow is essential for evaluating the effectiveness of the retrieval stage, making sure the returned documents are relevant and that the generated answers are accurate and complete. It is equally important to track the performance of the vector database during retrieval, including metrics like query latency, throughput, and scalability. Monitoring these system-level metrics alongside retrieval relevance and accuracy makes sure the RAG pipeline delivers correct and relevant answers and meets production requirements for responsiveness and scalability. The following screenshot shows an example of the LangGraph RAG retrieval tracing in MLflow.

RAG evaluation

Evaluation is conducted on a curated test set, and results are logged to MLflow for quick comparison and analysis. This helps teams identify the best-performing configurations and iterate toward production-grade solutions. With MLflow, you can evaluate the RAG solution with heuristic metrics, content similarity metrics, and LLM-as-a-judge metrics. In this post, we evaluate the RAG pipeline using advanced LLM-as-a-judge MLflow metrics (answer similarity, correctness, relevance, faithfulness):

The following screenshot shows the experiment run details for the RAG evaluation stage in MLflow.

You can use MLflow to log all metrics and parameters, enabling quick comparison of different experiment runs. See the following code for reference:

By using MLflow’s evaluation capabilities (such as mlflow.evaluate()), teams can systematically assess retrieval quality, identify potential gaps or misalignments in chunking or embedding strategies, and compare the performance of different retrieval and generation configurations. MLflow’s flexibility allows for integration with external evaluation libraries such as RAGAS for comprehensive RAG pipeline assessment. RAGAS is an open source library that provides tools specifically for evaluating LLM applications and generative AI agents. RAGAS includes the method ragas.evaluate() to run evaluations for LLM agents with a choice of LLMs (evaluators) for scoring, and an extensive list of default metrics. To incorporate RAGAS metrics into your MLflow experiments, refer to the following GitHub repository.

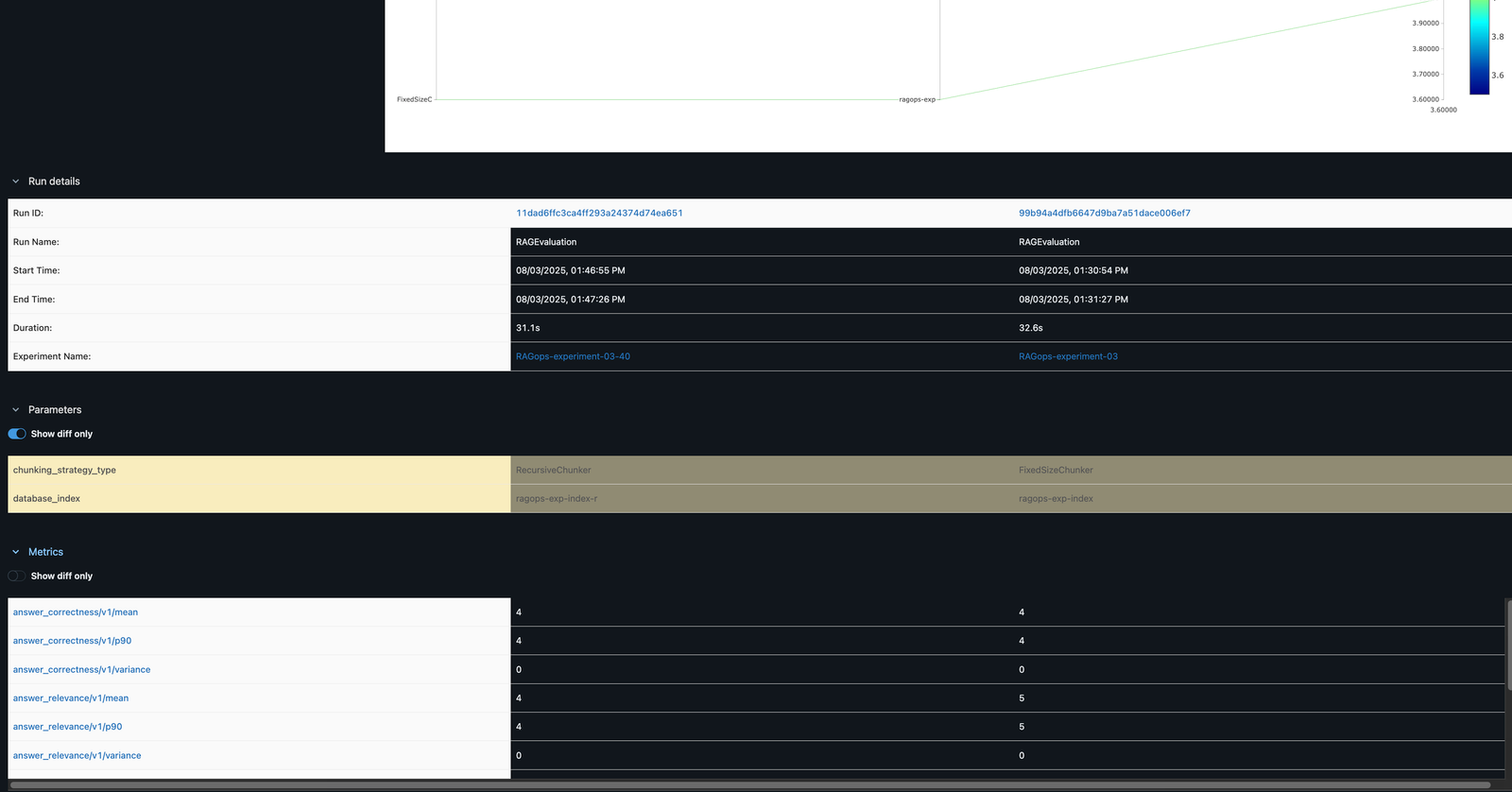

Comparing experiments

In the MLflow UI, you can compare runs side by side. For example, comparing FixedSizeChunker and RecursiveChunker as shown in the following screenshot reveals differences in metrics such as answer_similarity (a difference of 1 point), providing actionable insights for pipeline optimization.

Automation with Amazon SageMaker Pipelines

After systematically experimenting with and optimizing each component of the RAG workflow through SageMaker managed MLflow, the next step is transforming these validated configurations into production-ready automated pipelines. Although MLflow experiments help identify the optimal combination of chunking strategies, embedding models, and retrieval parameters, manually reproducing these configurations across environments can be error-prone and inefficient.

To produce the automated RAG pipeline, we use SageMaker Pipelines, which helps teams codify their experimentally validated RAG workflows into automated, repeatable pipelines that maintain consistency from development through production. By converting the successful MLflow experiments into pipeline definitions, teams can make sure the exact same chunking, embedding, retrieval, and evaluation steps that performed well in testing are reliably reproduced in production environments.

SageMaker Pipelines offers serverless workflow orchestration for converting experimental notebook code into a production-grade pipeline, versioning and tracking pipeline configurations alongside MLflow experiments, and automating the end-to-end RAG workflow. The automated SageMaker Pipelines-based RAG workflow offers dependency management, comprehensive custom testing and validation before production deployment, and CI/CD integration for automated pipeline promotion.

With SageMaker Pipelines, you can automate your entire RAG workflow, from data preparation to evaluation, as reusable, parameterized pipeline definitions. This provides the following benefits:

- Reproducibility – Pipeline definitions capture all dependencies, configurations, and execution logic in version-controlled code

- Parameterization – Key RAG parameters (chunk sizes, model endpoints, retrieval settings) can be quickly modified between runs

- Monitoring – Pipeline executions provide detailed logs and metrics for each step

- Governance – Built-in lineage tracking supports full auditability of data and model artifacts

- Customization – Serverless workflow orchestration is customizable to your unique enterprise landscape, with scalable infrastructure and flexibility with instances optimized for CPU, GPU, or memory-intensive tasks, memory configuration, and concurrency optimization

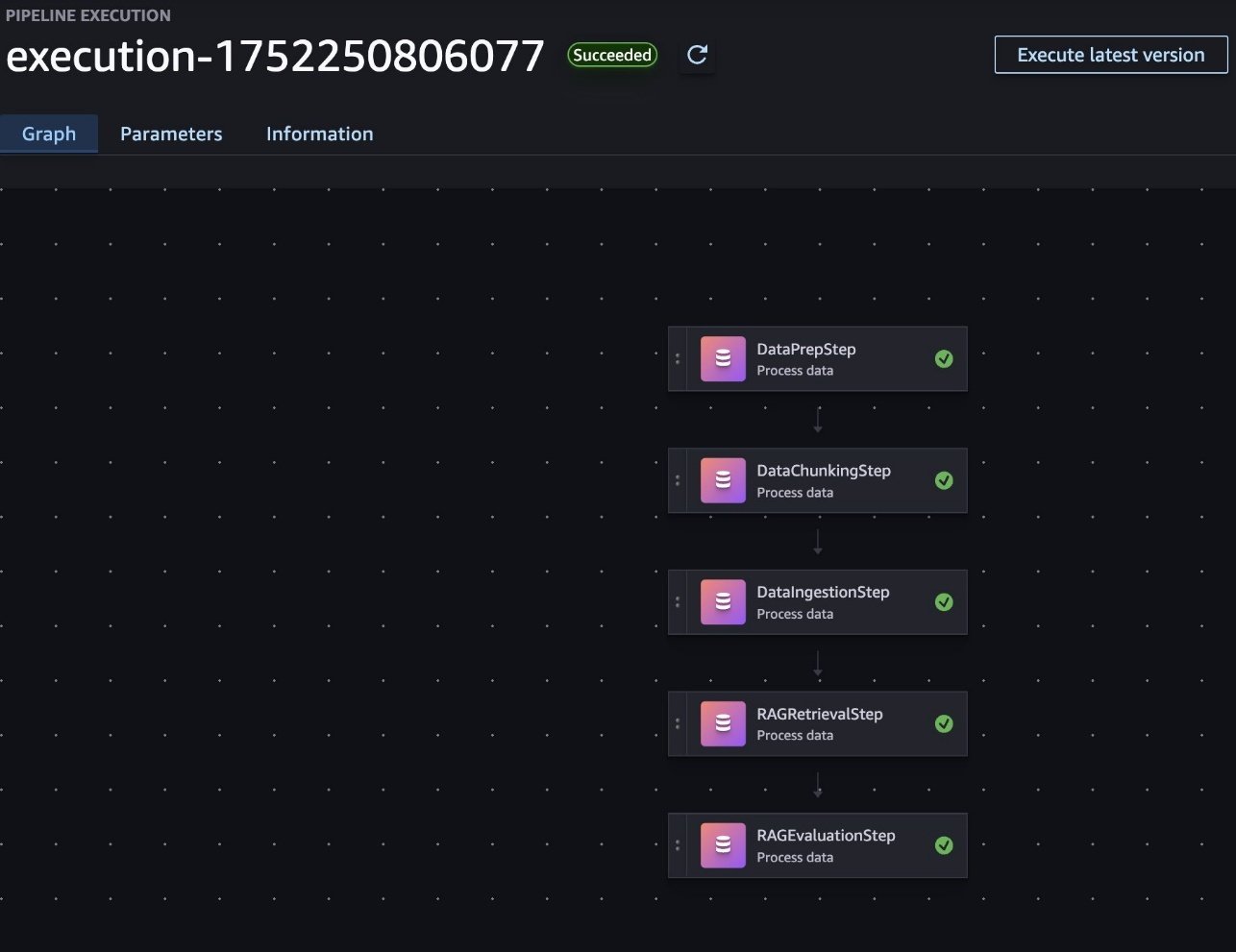

To implement a RAG workflow in SageMaker Pipelines, each major component of the RAG process (data preparation, chunking, ingestion, retrieval and generation, and evaluation) is included in a SageMaker processing job. These jobs are then orchestrated as steps within a pipeline, with data flowing between them, as shown in the following screenshot. This structure allows for modular development, quick debugging, and the ability to reuse components across different pipeline configurations.
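To illustrate only the ordering implied by these step dependencies, the sketch below models the stages as a dependency graph using Python's standard library. It is not SageMaker SDK code; in an actual pipeline definition, each entry would be a processing step with its dependencies declared on the step. The step names are assumptions.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each step maps to the set of steps that must finish before it starts.
STEP_DEPENDENCIES: Dict[str, Set[str]] = {
    "data_preparation": set(),
    "chunking": {"data_preparation"},
    "ingestion": {"chunking"},
    "retrieval_generation": {"ingestion"},
    "evaluation": {"retrieval_generation"},
}


def execution_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())
```

Because the graph is explicit, swapping one step for another (for example, a different chunking job) only changes one entry while the ordering logic stays the same.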

The key RAG configurations are exposed as pipeline parameters, enabling flexible experimentation with minimal code changes. For example, the following code snippets showcase the modifiable parameters for RAG configurations, which can be used as pipeline configurations:
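A hedged sketch of such a parameter set follows. The chunking parameter names and the 500-character chunk size come from this post; the remaining field names, default values, and the helper `to_pipeline_parameters` are illustrative assumptions. In a SageMaker Pipelines definition, values like these would typically be declared with the SDK's pipeline parameter classes (for example, ParameterString and ParameterInteger).

```python
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RagPipelineConfig:
    """Tunable RAG settings exposed as pipeline parameters (defaults are placeholders)."""

    chunking_strategy_type: str = "FixedSizeChunker"
    chunking_strategy_chunk_size: int = 500
    chunking_strategy_chunk_overlap: int = 50
    embedding_endpoint_name: str = "my-embedding-endpoint"
    text_generation_endpoint_name: str = "my-llm-endpoint"
    retrieval_top_k: int = 3


def to_pipeline_parameters(config: RagPipelineConfig) -> List[Dict[str, str]]:
    """Format the config as Name/Value string pairs for starting a pipeline execution."""
    return [{"Name": name, "Value": str(value)} for name, value in asdict(config).items()]
```

Overriding a single field, such as `RagPipelineConfig(chunking_strategy_chunk_size=800)`, is then enough to launch a new variant of the pipeline without touching the step code.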

In this post, we provide two agentic RAG pipeline automation approaches to building the SageMaker pipeline, each with its own benefits: single-step SageMaker pipelines and multi-step pipelines.

The single-step pipeline approach is designed for simplicity, running the entire RAG workflow as one unified process. This setup is ideal for straightforward or less complex use cases, because it minimizes pipeline management overhead. With fewer steps, the pipeline can start quickly, benefitting from reduced execution times and streamlined development. This makes it a practical option when rapid iteration and ease of use are the primary concerns.

The multi-step pipeline approach is preferred for enterprise scenarios where flexibility and modularity are essential. By breaking down the RAG process into distinct, manageable stages, organizations gain the ability to customize, swap, or extend individual components as needs evolve. This design enables plug-and-play adaptability, making it straightforward to reuse or reconfigure pipeline steps for various workflows. Additionally, the multi-step format allows for granular monitoring and troubleshooting at each stage, providing detailed insights into performance and facilitating robust enterprise management. For enterprises seeking maximum flexibility and the ability to tailor automation to unique requirements, the multi-step pipeline approach is the superior choice.

CI/CD for an agentic RAG pipeline

Now we integrate the SageMaker RAG pipeline with CI/CD. CI/CD helps make a RAG solution enterprise-ready by automating the integration, testing, deployment, and monitoring of changes to the RAG system. The key benefits include faster and more reliable updates, version control and traceability, consistency across environments, modularity and flexibility for customization, enhanced collaboration and monitoring, risk mitigation, and cost savings. These mirror the general benefits of CI/CD in software and AI systems: automation, quality assurance, collaboration, and continuous feedback.

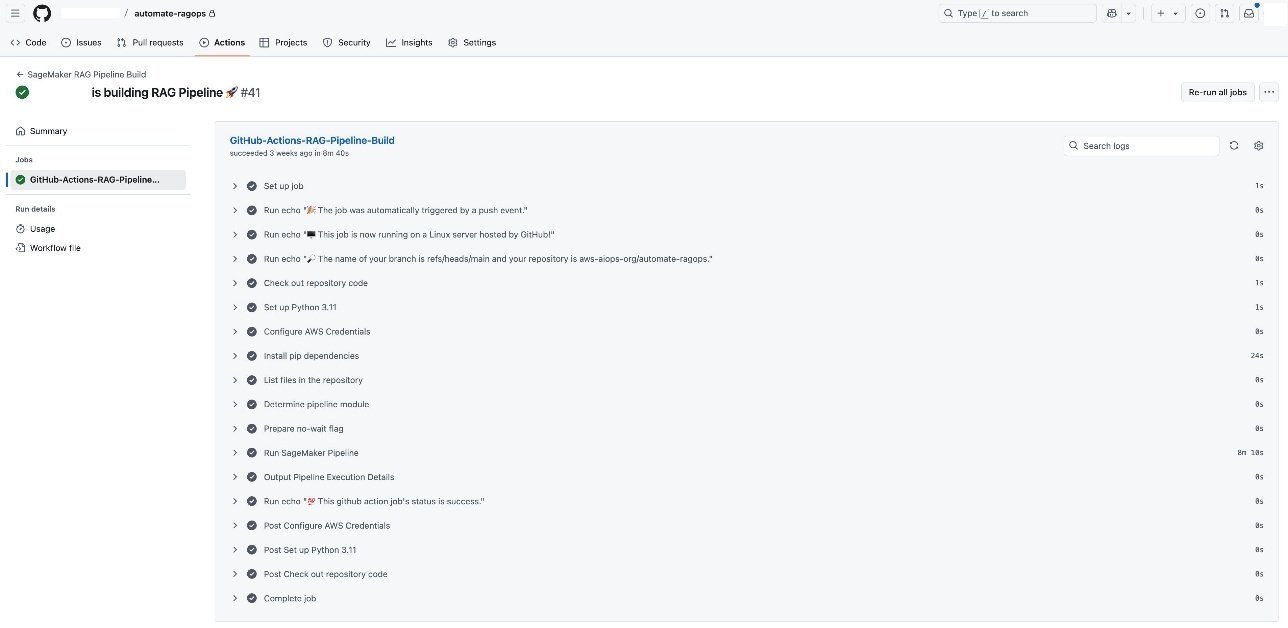

When your SageMaker RAG pipeline definition is in place, you can implement robust CI/CD practices by integrating it with the development workflow and toolsets already in place at your enterprise. This setup makes it possible to automate code promotion, pipeline deployment, and model experimentation through simple Git triggers, so changes are versioned, tested, and systematically promoted across environments. For demonstration, in this post, we use GitHub Actions as the CI/CD orchestrator. Each code change, such as refining chunking strategies or updating pipeline steps, triggers an end-to-end automation workflow, as shown in the following screenshot. You can apply the same pattern with a different CI/CD tool if needed.
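As a rough sketch of the trigger step, a CI job could run a small script that starts a pipeline execution tagged with the commit that caused it. The helper names, the pipeline name, and the parameter names below are hypothetical. The request fields follow the SageMaker `StartPipelineExecution` API, and the client is passed in so the logic can be exercised without AWS credentials.

```python
def build_start_request(pipeline_name: str, parameters: dict, git_sha: str) -> dict:
    """Build keyword arguments for SageMaker's StartPipelineExecution call."""
    return {
        "PipelineName": pipeline_name,
        # Short commit hash in the display name ties the run to the change.
        "PipelineExecutionDisplayName": f"ci-{git_sha[:7]}",
        "PipelineExecutionDescription": f"Triggered by commit {git_sha}",
        # The API expects parameter values as strings.
        "PipelineParameters": [
            {"Name": name, "Value": str(value)}
            for name, value in sorted(parameters.items())
        ],
    }


def trigger(client, request: dict) -> str:
    """Start the execution and return its ARN.

    `client` is assumed to behave like boto3.client("sagemaker").
    """
    response = client.start_pipeline_execution(**request)
    return response["PipelineExecutionArn"]
```

In a GitHub Actions job, the commit hash is available in the `GITHUB_SHA` environment variable, and the client would typically be created with `boto3.client("sagemaker")` after the workflow has assumed an AWS role.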

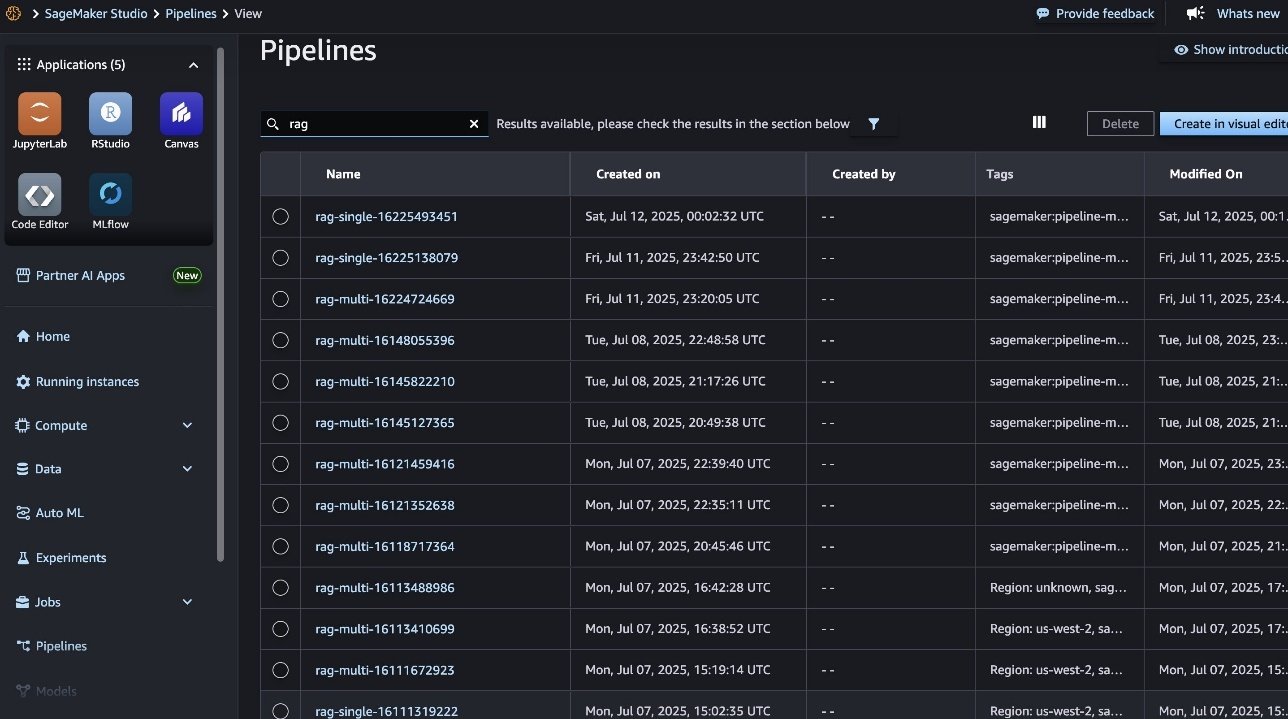

Each GitHub Actions CI/CD execution automatically triggers the SageMaker pipeline (shown in the following screenshot), allowing for seamless scaling of serverless compute infrastructure.

Throughout this cycle, SageMaker managed MLflow records every executed pipeline (shown in the following screenshot), so you can seamlessly review results, compare performance across different pipeline runs, and manage the RAG lifecycle.

After an optimal RAG pipeline configuration is determined, the new desired configuration (Git version tracking captured in MLflow as shown in the following screenshot) can be promoted to higher stages or environments directly through an automated workflow, minimizing manual intervention and reducing risk.

Clean up

To avoid unnecessary costs, delete resources such as the SageMaker managed MLflow tracking server, SageMaker pipelines, and SageMaker endpoints when your RAG experimentation is complete. You can use the SageMaker Studio console to delete resources that are no longer needed, or call the appropriate AWS API actions.
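For the API route, a cleanup helper might look like the following sketch. The function name and the resource names passed to it are hypothetical. The client is assumed to behave like `boto3.client("sagemaker")`, whose `delete_pipeline`, `delete_endpoint`, and `delete_mlflow_tracking_server` operations are used here.

```python
import uuid


def cleanup(client, pipelines=(), endpoints=(), tracking_servers=()) -> list[str]:
    """Delete the named resources and return a record of what was removed."""
    deleted = []
    for name in pipelines:
        # DeletePipeline takes an idempotency token alongside the name.
        client.delete_pipeline(
            PipelineName=name, ClientRequestToken=str(uuid.uuid4())
        )
        deleted.append(f"pipeline:{name}")
    for name in endpoints:
        client.delete_endpoint(EndpointName=name)
        deleted.append(f"endpoint:{name}")
    for name in tracking_servers:
        client.delete_mlflow_tracking_server(TrackingServerName=name)
        deleted.append(f"tracking-server:{name}")
    return deleted
```

Deleting an endpoint does not remove its endpoint configuration or model, so those may need separate deletion. Review the list of names carefully before running a script like this against a shared account.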

Conclusion

By integrating SageMaker AI, SageMaker managed MLflow, and Amazon OpenSearch Service, you can build, evaluate, and deploy RAG pipelines at scale. This approach provides the following benefits:

- Automated and reproducible workflows with SageMaker Pipelines and MLflow, minimizing manual steps and reducing the risk of human error

- Advanced experiment tracking and comparison for different chunking strategies, embedding models, and LLMs, so every configuration is logged, analyzed, and reproducible

- Actionable insights from both traditional and LLM-based evaluation metrics, helping teams make data-driven improvements at every stage

- Seamless deployment to production environments, with automated promotion of validated pipelines and robust governance throughout the workflow

Automating your RAG pipeline with SageMaker Pipelines brings additional benefits: it enables consistent, version-controlled deployments across environments, supports collaboration through modular, parameterized workflows, and provides full traceability and auditability of data, models, and results. With built-in CI/CD capabilities, you can confidently promote your entire RAG solution from experimentation to production, knowing that each stage meets quality and compliance standards.

Now it’s your turn to operationalize RAG workflows and accelerate your AI initiatives. Explore SageMaker Pipelines and managed MLflow using the solution from the GitHub repository to unlock scalable, automated, and enterprise-grade RAG solutions.

About the authors

Sandeep Raveesh is a GenAI Specialist Solutions Architect at AWS. He works with customers through their AIOps journey across model training, generative AI applications like agents, and scaling generative AI use cases. He also focuses on Go-To-Market strategies, helping AWS build and align products to solve industry challenges in the generative AI space. You can find Sandeep on LinkedIn.

Blake Shin is an Associate Specialist Solutions Architect at AWS who enjoys learning about and working with new AI/ML technologies. In his free time, Blake enjoys exploring the city and playing music.
