Events & Conferences

Measuring the effectiveness of software development tools and practices

At Amazon, we constantly seek ways to optimize software development tools, processes, and practices in order to improve outcomes and experiences for our customers. Internally, Amazon has the variety of businesses, team sizes, and technologies to enable research on engineering practices that span a wide variety of circumstances. Recently, we’ve been exploring how generative artificial intelligence (genAI) affects our cost-to-serve-software (CTS-SW) metric. This post delves into the research that led to CTS-SW’s development, how various new AI-powered tools can lower CTS-SW, and our future plans in this exciting area.

Understanding CTS-SW

We developed cost to serve software as a metric to quantify how investments in improving the efficiency of building and supporting software enable teams to easily, safely, and continually deploy software to customers. It bridges the gap between our existing framework, which tracks many metrics (similar to DORA and SPACE), and the quantifiable bottom-line impact on the business. It allows developer experience teams to express their business benefits in either effective capacity (engineering years saved) or the monetary value of those savings. In a recent blog post on the AWS Cloud Enterprise Strategy Blog, we described how CTS-SW can evaluate how initiatives throughout the software development lifecycle affect the ability to deliver for customers.

At a high level, CTS-SW tracks the dollars spent per unit of software reaching customers (i.e., released for use by customers). The best unit of software to use varies based on the software architecture. Deployment works well for microservices. Code reviews or pull requests that are shipped to a customer work well for monolith-based teams or software whose release is dictated by a predetermined schedule. Finally, commits that reach customers make sense for teams that contribute updates to a central code “trunk”. We currently use deployments, as it fits our widespread use of service-oriented architecture patterns and our local team ownership.

CTS-SW is based on the same theory that underlies the cost-to-serve metric in Amazon’s fulfillment network, i.e., that the delivery of a product to a customer is the result of an immeasurably complex and highly varied process and would be affected by the entirety of any changes to it. That process is so complex, and it changes so much over time, that the attempt to quantify each of its steps and assign costs to them, known as activity-based costing, is likely to fail. This is especially true of software engineering today, as new AI tools are changing the ways software engineers do their jobs.

Cost to serve simplifies this complex process by modeling only the input costs and the output units. We can then work backwards to understand drivers and opportunities for improvement.
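As a minimal sketch of that input/output view, the snippet below divides a period's total cost by the number of units that reached customers. The `TeamPeriod` class, its field names, and the dollar figures are hypothetical and chosen only for illustration; the post does not describe how the metric is computed internally.

```python
from dataclasses import dataclass


@dataclass
class TeamPeriod:
    """Hypothetical inputs for one team over one reporting period."""
    total_cost_usd: float  # all-in cost of building and supporting software
    deployments: int       # units of software that reached customers


def cost_to_serve_software(period: TeamPeriod) -> float:
    """Dollars spent per unit of software delivered to customers."""
    if period.deployments <= 0:
        raise ValueError("need at least one delivered unit to compute CTS-SW")
    return period.total_cost_usd / period.deployments


# Illustrative numbers only: same spend, more deployments reaching customers.
before = TeamPeriod(total_cost_usd=500_000.0, deployments=200)
after = TeamPeriod(total_cost_usd=500_000.0, deployments=250)

cts_before = cost_to_serve_software(before)
cts_after = cost_to_serve_software(after)
relative_change = (cts_after - cts_before) / cts_before
```

With these made-up inputs, the same spend spread over more deployments gives a lower cost per deployment, which is the direction of improvement the metric is meant to capture.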

In the context of software development, working backwards means that we investigate changes that could affect the metric, beyond the core coding experience of working in an IDE and writing logic. We also include continuous integration/continuous delivery (CI/CD) practices, work planning, incident management practices, maintenance of existing systems, searching for information, and many other factors that characterize software development at Amazon. By working backwards, we look across the collective software builder experience and investigate how changes in different areas, such as reducing the number of alarms engineers receive, affect developers’ ability to build new experiences for customers. We have used a variety of research methods to explore these relationships, but we have primarily relied on mathematical models.

From a science perspective, Amazon is an interesting place in which to build these models because of our established culture of small software teams that manage their own services. A longstanding Amazon principle is that these teams should be small enough to be fed by two pizzas, so we refer to them as “two-pizza teams”. This local-ownership model has led to the creation of thousands of distinct services solving customer problems across the company.

Amazon’s practice of working backwards from the best possible customer experience means software teams choose the optimal combination of tooling and technology to enable that experience. These choices have led to the implementation of many different software architectures at Amazon. That variety offers an opportunity to explore how different architectures affect CTS-SW.

The Amazon Software Builder Experience (ASBX) team, our internal developer experience team, has access to rich telemetry data about these architectures and different ways of working with them. Using this data, we created a panel dataset representing the work of thousands of two-pizza teams over the past five years and including features we thought could affect CTS-SW. We model CTS-SW using the amount of developer time — the largest component of CTS-SW — per deployment. This data offers an opportunity for modeling the complete process from inception to delivery at a scale rarely seen in developer experience research.

Last year, as a first exploration of this dataset, we fit a set of linear mixed models to CTS-SW, to identify other metrics and behaviors that are highly correlated with it. Within ASBX, we were looking for input metrics that teams could optimize to lower CTS-SW. Correlations with linear mixed models can also help establish causal links between factors in the linear mixed models and CTS-SW. Linear mixed models are a good fit for this sort of problem because they have two components, one that captures the underlying relation between the outcome variable and the predictors, irrespective of team, and one that captures differences across teams.
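The two components described above can be written down in one common form, a random-intercept model. This is only an illustrative assumption: the post does not state the exact specification, and the symbols below (team index $j$, week index $t$, predictor vector $\mathbf{x}_{jt}$) are introduced here for the sketch.

$$
y_{jt} = \underbrace{\beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x}_{jt}}_{\text{shared across teams}} + \underbrace{u_j}_{\text{team-specific}} + \varepsilon_{jt},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),
\qquad \varepsilon_{jt} \sim \mathcal{N}(0, \sigma^2)
$$

Here $y_{jt}$ would be CTS-SW for team $j$ in week $t$; the fixed part captures the relation between the predictors and the outcome irrespective of team, and $u_j$ captures how each team's baseline differs from the overall average.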

Once we’d fit our models, we found that the following input metrics stood out as being the largest potential drivers of CTS-SW after a sensitivity analysis:

- Team velocity: This measures how many code reviews (CRs) a software team merges each week per developer on the team. Teams that check in more code have a lower CTS-SW. Our science validates that software is a team sport, and framing this as a team-level outcome instead of an individual one prevents using CR flow as a performance metric for individual engineers. Having strong engineering onboarding and deployment safety helps teams reach and sustain high velocity. This was our largest single predictor of CTS-SW.

- Delivery health (interventions per deploy, rollback rates): We find that teams that have implemented CI/CD with automation and change-safety best practices have better CTS-SW outcomes. Our data demonstrates that when you spend less time wrestling with deployment friction and more time creating value, both productivity and job satisfaction improve.

- Pages per on-call builder: This measures how many pages a team gets per week. We find that an increase in paging leads to lower CTS-SW, as paging can result in a deployment to production. However, we believe that work done in this reactive way may not be the most useful to customers in the long term. Understanding how this urgent, unplanned work interacts with new-feature delivery is an area for future research.

Our research has shown strong relationships between development factors and CTS-SW, making it an effective tool for measuring software development efficiency. We are working to expand the data we use in these models to better capture the ways in which teams build and operate their services. With this data, we will investigate the effects of software architecture decisions, informing architecture recommendations for teams across Amazon.

Validating linear mixed models with causal inference

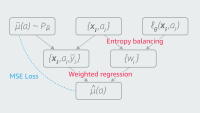

Once we found that model fitting implied a correlation between team velocity and CTS-SW, we started looking for natural experiments that would help us validate the correlation with causal evidence. The rapidly emerging set of generative AI-powered tools provided that set of natural experiments.

The first of these tools adopted at scale across Amazon was Amazon Q Developer. This tool automatically generates code completions based on existing code and comments. We investigated the tool’s effect on CR velocity by building a panel regression model with dynamic two-way fixed effects.

This model uses time-varying covariates based on observations of software builder teams over multiple time periods during a nine-month observation window, and it predicts either CR velocity or deployment velocity. We specify the percentage of the team using Q Developer in each week and pass that information to the model as well.

We also evaluate other variables passed to the model to make sure they are exogenous, i.e., not influenced by Q Developer usage, to ensure that we can make claims of a causal relationship between Q Developer usage and deployment or CR velocity. These variables include data on rollbacks and manual interventions in order to capture the impact of production and deployment incidents, which may affect the way builders are writing code.

Here’s our model specification:

$$y_{it} = a_i + \lambda_t + \beta\, y_{i,t-1} + \gamma X_{it} + \varepsilon_{it}$$

In this equation, $y_{it}$ is the normalized deployments per builder week or team weekly velocity for team $i$ at time $t$, $a_i$ is the team-specific fixed effect, $\lambda_t$ is the time-specific fixed effect, $y_{i,t-1}$ is the lagged normalized deployments or team velocity, $X_{it}$ is the vector of time-varying covariates (Q Developer usage rate, rollback rate, manual interventions), $\gamma$ is the vector of coefficients on those covariates, $\beta$ is the persistence of our dependent variable over time (i.e., it shows how much of the past value of $y$ carries over into the current period), and $\varepsilon_{it}$ is the error term.
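To make the role of the two fixed effects concrete, here is a deliberately simplified sketch of a two-way fixed-effects estimate on synthetic data. It is not the model used in the study: it drops the lagged term, uses a single covariate (a made-up usage rate), assumes a balanced panel, and all names and numbers are hypothetical. It shows only how subtracting team and week averages isolates the covariate's coefficient.

```python
def two_way_demean(panel):
    """Subtract team means and week means, then add back the grand mean.

    panel[i][t] is the value for team i in week t (balanced panel assumed).
    """
    n, weeks = len(panel), len(panel[0])
    grand = sum(sum(row) for row in panel) / (n * weeks)
    team_mean = [sum(row) / weeks for row in panel]
    week_mean = [sum(panel[i][t] for i in range(n)) / n for t in range(weeks)]
    return [[panel[i][t] - team_mean[i] - week_mean[t] + grand
             for t in range(weeks)] for i in range(n)]


def fit_gamma(y, x):
    """Within estimator for one covariate under team and week fixed effects."""
    y_dm, x_dm = two_way_demean(y), two_way_demean(x)
    num = sum(a * b for ry, rx in zip(y_dm, x_dm) for a, b in zip(ry, rx))
    den = sum(b * b for rx in x_dm for b in rx)
    return num / den


# Synthetic, noise-free panel: velocity = team effect + week effect + 2.0 * usage.
n_teams, n_weeks, true_gamma = 4, 6, 2.0
usage = [[((i + 1) * (t + 2)) % 7 / 10 for t in range(n_weeks)]
         for i in range(n_teams)]
velocity = [[1.0 + 0.5 * i + 0.1 * t + true_gamma * usage[i][t]
             for t in range(n_weeks)] for i in range(n_teams)]

gamma_hat = fit_gamma(velocity, usage)  # recovers true_gamma up to rounding
```

Because the synthetic data has no noise, the estimate matches the coefficient used to generate it. A dynamic specification with a lagged dependent variable, like the one above, needs more careful estimation than this within transformation.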

Early evidence shows that Q Developer has accelerated CR velocity and deployment velocity. More important, we found causal evidence that the launch of a new developer tool can lower CTS-SW for adopting teams and that we can measure that impact. As agentic AI grows, there will be agents for a range of tasks that engineers perform, beyond just writing code. That will require a unit of measurement that can capture their contributions holistically, without overly focusing on one area. CTS-SW enables us to measure the effects of AI across the software development lifecycle, from agents giving feedback on design docs to agents suggesting fixes to failed builds and deployments.

The road ahead

We recognize that combining experimental results can sometimes overstate an intervention’s true impact. To address this, we’re developing a baseline model that we can use to normalize our tool-based approach to ensure that our estimates of AI impact are as accurate as possible.

Looking ahead, we plan to expand our analysis to include AI’s impact on more aspects of the developer experience. By leveraging CTS-SW and developing robust methodologies for measuring AI’s impact, we’re ensuring that our AI adoption is truly customer obsessed, in that it makes Amazon’s software development more efficient. As we continue to explore and implement AI solutions, we remain committed to using data-driven approaches to improve outcomes and experiences for our customers. We look forward to sharing our results with you at a later date.

A better path to pruning large language models

In recent years, large language models (LLMs) have revolutionized the field of natural-language processing and made significant contributions to computer vision, speech recognition, and language translation. One of the keys to LLMs’ effectiveness has been the exceedingly large datasets they’re trained on. The trade-off is exceedingly large model sizes, which lead to slower runtimes and higher consumption of computational resources. AI researchers know these challenges well, and many of us are seeking ways to make large models more compact while maintaining their performance.

To this end, we’d like to present a novel philosophy, “Prune Gently, Taste Often”, which focuses on a new way to do pruning, a compression process that removes unimportant connections within the layers of an LLM’s neural network. In a paper we presented at this year’s meeting of the Association for Computational Linguistics (ACL), we describe our framework, Wanda++, which can compress a model with seven billion parameters in under 10 minutes on a single GPU.

Measured according to perplexity, or how well a probability distribution predicts a given sample, our approach improves the model’s performance by 32 percent over its leading predecessor, called Wanda.

A brief history of pruning

Pruning is challenging for a number of reasons. First, training huge LLMs is expensive, and once they’re trained, runtime is expensive too. While pruning can make runtime cheaper, if it’s done later in the build process, it hurts performance. But if it’s done too early in the build process, it further exacerbates the first problem: increasing the cost of training.

When a model is trained, it builds a map of semantic connections gleaned from the training data. These connections, called parameters, gain or lose importance, or weight, as more training data is introduced. Pruning during the training stage, called “pruning-aware training,” is baked into the training recipe and performs model-wide scans of weights, at a high computational cost. What’s worse, pruning-aware training comes with a heavy trial burden of full-scale runs. Researchers must decide when to prune, how often, and what criteria to use to keep pretraining performance viable. Tuning such “hyperparameters” requires repeated model-wide culling experiments, further driving up cost.

The other approach to pruning is to do it after the LLM is trained. This tends to be cheaper, taking somewhere between a few minutes and a few hours — compared to the weeks that training can take. And post-training pruning doesn’t require a large number of GPUs.

In this approach, engineers scan the model layer by layer for unimportant weights, as measured by a combination of factors such as how big the weight is and how frequently it factors into the model’s final output. If either number is low, the weight is more likely to be pruned. The problem with this approach is that it isn’t “gentle”: it shocks the structure of the model, which loses accuracy since it doesn’t learn anything from the absence of those weights, as it would have if they had been removed during training.
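One way to combine the two factors, in the spirit of the original Wanda score, is to multiply each weight's magnitude by the norm of the input feature it touches and then drop the lowest-scoring weights in each output row. The sketch below is a toy version under that assumption; the matrices and function names are hypothetical, and it is not the Wanda++ implementation.

```python
import math


def wanda_style_scores(weights, calib_inputs):
    """score[i][j] = |W[i][j]| * L2 norm of input feature j over the calibration set."""
    n_in = len(weights[0])
    feat_norm = [math.sqrt(sum(x[j] ** 2 for x in calib_inputs))
                 for j in range(n_in)]
    return [[abs(w) * feat_norm[j] for j, w in enumerate(row)]
            for row in weights]


def prune_rows(weights, scores, sparsity=0.5):
    """Zero the lowest-scoring fraction of weights within each output row."""
    pruned = []
    for row, score_row in zip(weights, scores):
        k = int(len(row) * sparsity)
        drop = set(sorted(range(len(row)), key=lambda j: score_row[j])[:k])
        pruned.append([0.0 if j in drop else w for j, w in enumerate(row)])
    return pruned


# Toy layer: 2 outputs, 4 inputs. Feature 3 has large activations, feature 1 tiny ones.
W = [[0.9, -0.1, 0.4, 0.1],
     [0.2, 0.8, -0.3, 0.6]]
X = [[1.0, 0.1, 2.0, 10.0],
     [1.0, 0.2, 2.0, 12.0]]

scores = wanda_style_scores(W, X)
W_pruned = prune_rows(W, scores)
```

In this toy layer, the small weight 0.1 on the high-activation feature survives, while the large weight 0.8 on the low-activation feature is removed. A weight is kept only when its size and its usage together are high.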

Striking a balance

Here’s where our philosophy presents a third path. After a model is fully trained, we scan it piece by piece, analyzing weights neither at the whole-model level nor at the layer level but at the level of decoding blocks: smaller, repeating building blocks that make up most of an LLM.

Within each decoding block, we feed in a small amount of data and collect the output to calibrate the weights, pruning the unimportant ones and updating the surviving ones for a few iterations. Since decoder blocks are small — a fraction of the size of the entire model — this approach requires only a single GPU, which can scan a block within minutes.
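The prune-then-update loop can be sketched on a toy scale. In the snippet below, each "block" is a single linear unit, the importance score is plain weight magnitude, and the update is a few steps of gradient descent that pull the pruned block's output back toward the dense block's output on a small calibration set. All of these are simplifying assumptions made for illustration; none of them is taken from the Wanda++ code.

```python
def block_output(weights, x):
    """Toy 'block': a single linear unit, output = weights . x."""
    return sum(w * xi for w, xi in zip(weights, x))


def mean_squared_error(weights, calib, targets):
    return sum((block_output(weights, x) - y) ** 2
               for x, y in zip(calib, targets)) / len(calib)


def prune_and_update(dense, calib, n_keep, steps=50, lr=0.05):
    """Prune one block, then nudge the survivors to mimic the dense block."""
    targets = [block_output(dense, x) for x in calib]
    # Stand-in importance score: weight magnitude (an assumption of this sketch).
    keep = sorted(range(len(dense)), key=lambda j: abs(dense[j]),
                  reverse=True)[:n_keep]
    weights = [w if j in keep else 0.0 for j, w in enumerate(dense)]
    error_after_pruning = mean_squared_error(weights, calib, targets)
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for x, y in zip(calib, targets):
            err = block_output(weights, x) - y
            for j in keep:
                grad[j] += 2.0 * err * x[j] / len(calib)
        for j in keep:  # pruned weights stay at zero
            weights[j] -= lr * grad[j]
    error_after_update = mean_squared_error(weights, calib, targets)
    return weights, error_after_pruning, error_after_update


calibration_inputs = [[1.0, 0.5, 0.2, 0.1],
                      [0.5, 1.0, 0.1, 0.3],
                      [0.8, 0.2, 0.4, 0.2],
                      [0.3, 0.7, 0.3, 0.4]]
blocks = [[0.8, -0.6, 0.1, 0.05],
          [0.3, 0.02, -0.7, 0.5]]

# Handle one block at a time, finishing each before moving to the next.
results = [prune_and_update(block, calibration_inputs, n_keep=2)
           for block in blocks]
```

For each toy block, the error against the dense output is lower after the update steps than immediately after pruning, while the pruned positions remain zero. The sketch treats the blocks independently and reuses the same calibration inputs, which a real multi-block model would not do.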

We liken our approach to the way an expert chef spices a complex dish. In cooking, spices are easy to overlook and hard to add at the right moment — and even risky, if handled poorly. One simply cannot add a heap of tarragon, pepper, and salt at the beginning (pruning-aware training) or at the end (layer-wide pruning) and expect to have the same results as if spices had been added carefully throughout. Similarly, our approach finds a balance between two extremes. Pruning block by block, as we do, is more like spicing a dish throughout the process. Hence the motto of our approach: Prune Gently, Taste Often.

From a technical perspective, the key is focusing on decoding blocks, which are composed of a few neural-network layers such as attention layers, multihead attention layers, and multilayer perceptrons. Even an LLM with seven billion parameters might have just 32 decoder blocks. Each block is small enough — say, 200 million parameters — to easily be scanned by a single GPU. Pruning a model at the block level saves resources by not consuming much GPU memory.
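A quick back-of-the-envelope check of those figures, as a sketch: dividing seven billion parameters evenly across 32 blocks gives roughly 219 million per block, consistent with the "say, 200 million" above. The 16-bit storage assumption is ours, and the estimate covers weights only, ignoring embeddings, activations, and any optimizer state.

```python
total_params = 7_000_000_000
num_blocks = 32

# Even split across blocks; embedding and output layers are ignored here.
params_per_block = total_params / num_blocks

# Assumption: weights stored in 16-bit floating point (2 bytes each).
bytes_per_param = 2
block_weight_gib = params_per_block * bytes_per_param / 2 ** 30
```

Under these assumptions the weights of one block take well under half a gibibyte, which is why working block by block leaves most of a single GPU's memory free.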

And while all pruning processes initially diminish performance, ours actually brings it back. Every time we scan a block, we balance pruning with performance until they’re optimized. Then we move on to the next block. This preserves both performance at the block level and overall model quality. With Wanda++, we’re offering a practical, scalable middle path for the LLM optimization process, especially for teams that don’t control the full training pipeline or budget.

What’s more, we believe our philosophy also helps address a pain point of LLM development at large companies. Before the era of LLMs, each team built its own models, with the services that a single LLM now provides achieved via orchestration of those models. Since none of the models was huge, each model development team received its own allocation of GPUs. Nowadays, however, computational resources tend to get soaked up by the teams actually training LLMs. With our philosophy, teams working on runtime performance optimization, for instance, could reclaim more GPUs, effectively expanding what they can explore.

Further implementations of Prune Gently, Taste Often could apply to other architectural optimizations. For instance, calibrating a model at the decoder-block level could convert a neural network with a dense structure, called a dense multilayer perceptron, to a less computationally intensive neural network known as a mixture of experts (MoE). In essence, per-decoder-block calibration can enable a surgical redesign of the model by replacing generic components with more efficient and better-performing alternatives such as Kolmogorov-Arnold Networks (KAN). While the Wanda++ philosophy isn’t a cure-all, we believe it opens up an exciting new path for re-thinking model compression and exploring future LLM architectures.

Three challenges in machine-based reasoning

Generative AI has made the past few years the most exhilarating time in my 30+-year career in the space of mechanized reasoning. Why? Because the computer industry and even the general public are now eager to talk about ideas that those of us working in logic have been passionate about for years. The challenges of language, syntax, semantics, validity, soundness, completeness, computational complexity, and even undecidability were previously too academic and obscure to be relevant to the masses. But all of that has changed. To those of you who are now discovering these topics: welcome! Step right in, we’re eager to work with you.

I thought it would be useful to share what I believe are the three most vexing aspects of making correct reasoning work in AI systems, e.g., generative-AI-based systems such as chatbots. The upcoming launch of the Automated-Reasoning-checks capability in Bedrock Guardrails was in fact motivated by these challenges. But we are far from done: due to the inherent difficulty of these problems, we as a community (and we on the Automated-Reasoning-checks team) will be working on these challenges for years to come.

Difficulty #1: Translating from natural to structured language

Humans usually communicate with imprecise and ambiguous language. Often, we are able to infer disambiguating detail from context. In some cases, when it really matters, we will try to clarify with each other (“did you mean to say… ?”). In other cases, even when we really should, we won’t.

This is often a source of confusion and conflict. Imagine that an employer defines eligibility for an employee HR benefit as “having a contract of employment of 0.2 full-time equivalent (FTE) or greater”. Suppose I tell you that I “spend 20% of my time at work, except when I took time off last year to help a family member recover from surgery”. Am I eligible for the benefit? When I said I “spend 20% of my time at work”, does that mean I am spending 20% of my working time, under the terms of a contract?

My statement has multiple reasonable interpretations, each with different outcomes for benefit eligibility. Something we do in Automated Reasoning checks is make multiple attempts to translate between the natural language and query predicates, using complementary approaches. This is a common interview technique: ask for the same information in different ways, and see if the facts stay consistent. In Automated Reasoning checks, we use solvers for formal logic systems to prove/disprove the equivalence of the different interpretations. If the translations differ at the semantic level, the application that uses Automated Reasoning checks can then ask for clarifications (e.g. “Can you confirm that you have a contract of employment for 20% of full time or greater?”).
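The idea of comparing translations can be illustrated with a toy check. The sketch below encodes two hypothetical translations of the eligibility statement as Boolean functions and searches every assignment for one on which they disagree. A production system would use a solver rather than enumeration, and the variable names here are invented for the example.

```python
from itertools import product

VARIABLES = ("has_contract",
             "contract_fte_at_least_0_2",
             "spends_20_percent_of_time_at_work")


def translation_a(v):
    """Reading 1: eligibility depends on the terms of the contract."""
    return v["has_contract"] and v["contract_fte_at_least_0_2"]


def translation_b(v):
    """Reading 2: eligibility depends on time actually spent at work."""
    return v["spends_20_percent_of_time_at_work"]


def find_disagreement(f, g, variables):
    """Return an assignment where f and g differ, or None if they are equivalent."""
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if f(assignment) != g(assignment):
            return assignment
    return None


witness = find_disagreement(translation_a, translation_b, VARIABLES)
needs_clarification = witness is not None
```

Here the two readings disagree (for example, for someone who spends 20% of their time at work without a contract), so an application would ask a clarifying question instead of picking one reading silently.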

Difficulty #2: Defining truth

Something that never fails to amaze me is how difficult it is for groups of people to agree on the meanings of rules. Complex rules and laws often have subtle contradictions that can go unnoticed until someone tries to reach consensus on their interpretation. The United Kingdom’s Copyright, Designs and Patents Act 1988, for example, contains an inherent contradiction: it defines copyrightable works as those stemming from an author’s original intellectual creation, while simultaneously offering protection to works that require no creative human input, an incoherence that is particularly glaring in this age of AI-generated works.

The second source of trouble is that we seem to always be changing our rules. The US federal government’s per-diem rates, for example, change annually, requiring constant maintenance of any system that depends on those values.

Finally, few people actually deeply understand all of the corner cases of the rules that they are supposed to abide by. Consider the question of wearing earphones while driving: In some US states (e.g., Alaska) it’s illegal; in some states (e.g., Florida) it’s legal to wear one earphone only; while in other states (e.g., Texas), it’s actually legal. In an informal poll, very few of my friends and colleagues were confident in their understanding of the legality of wearing headphones while driving in the place where they most recently drove a car.

Automated Reasoning checks address these challenges by helping customers define what the truth should be in their domains of interest — be they tax codes, HR policies, or other rule systems — and by providing mechanisms for refining those definitions over time, as the rules change. As generative-AI-based (GenAI-based) chatbots emerged, something that captured the imagination of many of us is the idea that complex rule systems could be made accessible to the general public through natural-language queries. Chatbots could in the future give direct and easy-to-understand answers to questions like “Can I make a U-turn when driving in Tokyo, Japan?”, and by addressing the challenge of defining truth, Automated Reasoning checks can help ensure that the answer is reliable.

Difficulty #3: Definitive reasoning

Imagine we have a set of rules (let’s call it R) and a statement (S) we want to verify. For example, R might be Singapore’s driving code, and S might be a question about U-turns at intersections in Singapore. We can encode R and S into Boolean logic, which computers understand, by combining Boolean variables in various ways.
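As a small illustration of what such an encoding can look like, the sketch below represents a made-up pair of U-turn rules as Boolean functions and checks whether a statement follows from them by trying every assignment. The rules are hypothetical and are not taken from any real driving code, and the exhaustive loop stands in for what a solver would do far more cleverly.

```python
from itertools import product

VARS = ("at_intersection", "no_u_turn_sign", "u_turn_allowed")


def implies(p, q):
    return (not p) or q


def rules(v):
    """R: a sign forbids U-turns; at an unsigned intersection they are allowed."""
    return (implies(v["no_u_turn_sign"], not v["u_turn_allowed"])
            and implies(v["at_intersection"] and not v["no_u_turn_sign"],
                        v["u_turn_allowed"]))


def entails(rule_set, statement, variables):
    """True if the statement holds in every assignment that satisfies the rules."""
    for values in product([False, True], repeat=len(variables)):
        v = dict(zip(variables, values))
        if rule_set(v) and not statement(v):
            return False  # found a scenario allowed by R where S fails
    return True


def s_signed(v):
    """S1: at a signed intersection, a U-turn is not allowed."""
    return implies(v["at_intersection"] and v["no_u_turn_sign"],
                   not v["u_turn_allowed"])


def s_always(v):
    """S2: at any intersection, a U-turn is allowed."""
    return implies(v["at_intersection"], v["u_turn_allowed"])


signed_follows = entails(rules, s_signed, VARS)
always_follows = entails(rules, s_always, VARS)
```

With three variables there are only eight assignments to try, so the loop finishes instantly: the first statement follows from the rules and the second does not.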

Let’s say that encoding R and S needs just 500 bits (about 63 characters). This is a tiny amount of information! But even when our encoding of the rule system is small enough to fit in a text message, the number of scenarios we’d need to check is astronomical. In principle, we must consider all $2^{500}$ possible combinations before we can authoritatively declare S to be a true statement. A powerful computer today can perform hundreds of millions of operations in the time it takes you to blink. But even if we had all the computers in the world running at this blazing speed since the beginning of time, we still wouldn’t be close to checking all $2^{500}$ possibilities today.
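The scale of that gap can be checked with a few lines of arithmetic. The machine count and per-machine speed below are deliberately generous assumptions picked for this sketch, and the age of the universe is rounded to about 13.8 billion years.

```python
combinations = 2 ** 500
digits = len(str(combinations))  # number of decimal digits in 2**500

# Generous, made-up assumptions for the sketch:
assumed_computers = 10 ** 10            # ten billion machines
assumed_checks_per_second = 10 ** 9     # one billion checks per second each
seconds_since_big_bang = 4.35e17        # roughly 13.8 billion years

total_checks = (assumed_computers * assumed_checks_per_second
                * seconds_since_big_bang)
fraction_covered = total_checks / combinations
```

Even under these assumptions, the fraction of combinations covered is vanishingly small, so exhaustive enumeration is not a realistic strategy at 500 bits.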

Thankfully, the automated-reasoning community has developed a class of sophisticated tools, called SAT solvers, that make this type of combinatorial checking possible and remarkably fast in many (but not all) cases. Automated Reasoning checks make use of these tools when checking the validity of statements.

Unfortunately, not all problems can be encoded in a way that plays to the strengths of SAT solvers. For example, imagine a rule system has the provision “if every even number greater than 2 is the sum of two prime numbers, then the tax withholding rate is 30%; otherwise it’s 40%”. The problem is that to know the tax withholding rate, you need to know whether every even number greater than 2 is the sum of two prime numbers, and no one currently knows whether this is true. This statement is called Goldbach’s conjecture and has been an open problem since 1742. Still, while we don’t know the answer to Goldbach’s conjecture, we do know that it is either true or false, so we can definitively say that the tax withholding rate must be either 30% or 40%.

It’s also fun to think about whether it’s possible for a customer of Automated Reasoning checks to define a policy that is contingent on the output of Automated Reasoning checks. For instance, could the policy encode the rule “access is allowed if and only if Automated Reasoning checks say it is not allowed”? Here, no correct answer is possible, because the rule has created a contradiction by referring recursively to its own checking procedure. The best we can possibly do is answer “Unknown” (which is, in fact, what Automated Reasoning checks will answer in this instance).

The fact that a tool such as Automated Reasoning checks can return neither “true” nor “false” to statements like this was first identified by Kurt Gödel in 1931. What we know from Gödel’s result is that systems like Automated Reasoning checks can’t be both consistent and complete, so they must choose one. We have chosen to be consistent.

These three difficulties — translating natural language into structured logic, defining truth in the context of ever changing and sometimes contradictory rules, and tackling the complexity of definitive reasoning — are more than mere technical hurdles we face when we try to build AI systems with sound reasoning. They are problems that are deeply rooted in both the limitations of our technology and the intricacies of human systems.

With the forthcoming launch of Automated Reasoning checks in Bedrock Guardrails, we are tackling these challenges through a combination of complementary approaches: applying cross-checking methods to translate from ambiguous natural language to logical predicates, providing flexible frameworks to help customers develop and maintain rule systems, and employing sophisticated SAT solvers while carefully handling cases where definitive answers are not possible. As we work to improve the performance of the product on these challenges, we are not only advancing technology but also deepening our understanding of the fundamental questions that have shaped reasoning itself, from Gödel’s incompleteness theorem to the evolving nature of legal and policy frameworks.

Given our commitment to providing sound reasoning, the road ahead in the AI space is challenging. Challenge accepted!

Building a human-computer interface for everyone

What if you could control any device using only subtle hand movements?

New research from Meta’s Reality Labs is pointing even more firmly toward wrist-worn devices using surface electromyography (sEMG) becoming the future of human-computer interaction.

But how do you develop a wrist-worn input device that works for everyone?

Generalization has been one of the most significant challenges in the field of human-computer interaction (HCI). The machine learning models that power a device can be trained to respond to an individual’s hand gestures, but they struggle to apply that same learning to someone else. Essentially, novel HCI devices are usually one-size-fits-one.

On the latest episode of the Meta Tech Podcast, Pascal Hartig sits down with Sean B., Lauren G., and Jesse M. — research scientists on Meta’s EMG engineering and research team — to discuss how their team is tackling the challenge of generalization and reimagining how we interact with technology.

They discuss the road to creating a first-of-its-kind, generic human-computer neuromotor interface, what happens when software and hardware engineering meet neuroscience, and more!

The Meta Tech Podcast is a podcast, brought to you by Meta, where we highlight the work Meta’s engineers are doing at every level – from low-level frameworks to end-user features.

Send us feedback on Instagram, Threads, or X.

And if you’re interested in learning more about career opportunities at Meta, visit the Meta Careers page.
