AI Research

LG enters global AI race with hybrid Exaone 4.0 model

Combining large language understanding with advanced reasoning, Korea’s first hybrid AI excels in coding, math, science and professional domains

LG AI Research, the artificial intelligence arm of LG Group, on Tuesday unveiled Exaone 4.0, Korea’s first “hybrid” AI model that combines the language fluency of large language models with the advanced problem-solving abilities of reasoning AI.

This breakthrough represents a major leap in Korea’s ambition to lead in next-generation AI, as the model can both understand human language and logically reason through complex tasks.

“We will continue to advance research and development so that Exaone can become Korea’s leading frontier AI model and prove its competitiveness in the global market,” said LG AI Research’s Exaone lab leader Lee Jin-sik.

Globally, only a few companies have announced similar hybrid AI architectures, including US-based Anthropic with its Claude model and China’s Alibaba with Qwen. OpenAI is reportedly working on GPT-5, which is expected to adopt a hybrid structure as well.

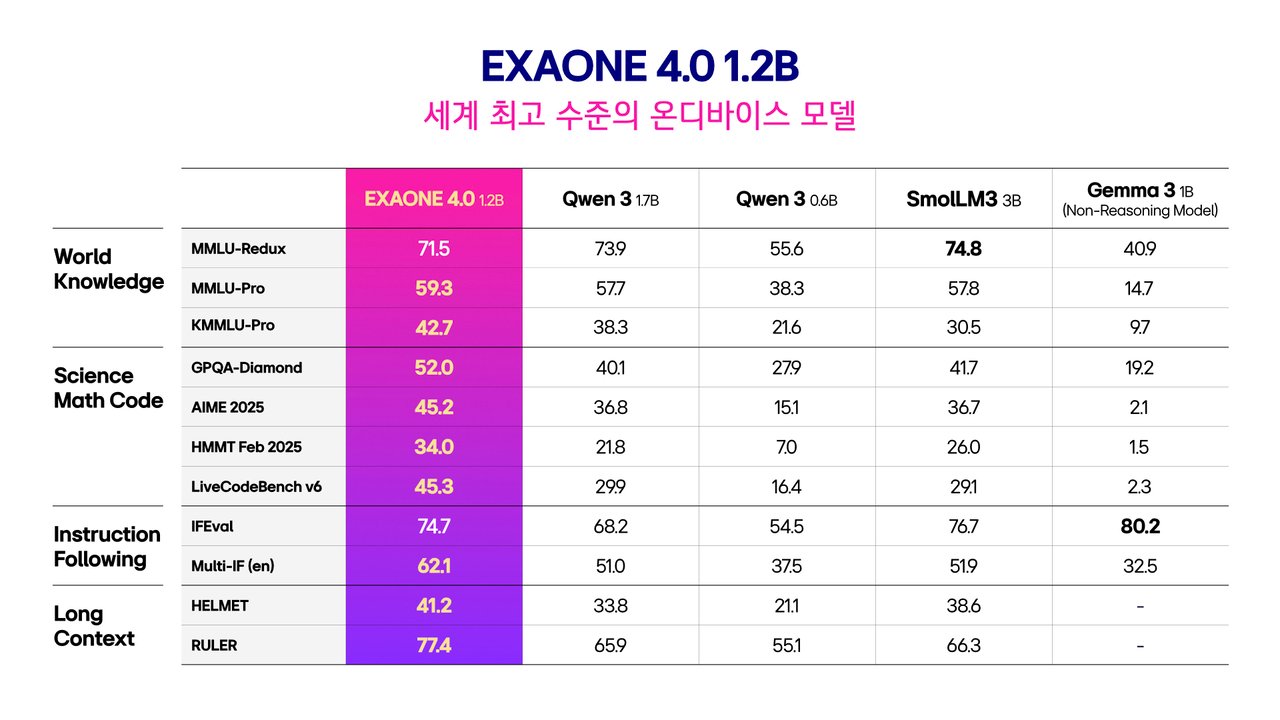

According to LG, Exaone 4.0 outperformed leading open-weight models from the US, China and France in benchmark evaluations across knowledge comprehension, problem-solving, coding, scientific reasoning and mathematics, placing it among the world’s most capable AI models.

LG introduced two versions of the model: a 32B expert model with 32 billion parameters and a lighter 1.2B on-device model with 1.2 billion parameters.

The expert model successfully passed written exams for six national professional licenses, including those for physicians, dentists, Korean medicine doctors, customs brokers, appraisers and insurance adjusters — underscoring its domain-level expertise.

The on-device model, designed for practical use in consumer electronics such as home appliances, smartphones, automotive infotainment systems and robots, runs independently on devices without connecting to external servers. It ensures faster processing, enhanced privacy, and robust security, which are key advantages in an increasingly AI-integrated world.

The new on-device model is half the size of its predecessor, the 2.4-billion-parameter Exaone 3.5 model released in December. Even so, it outperforms OpenAI’s GPT-4o mini on specialized evaluations in mathematics, coding and science, which LG says makes it the most powerful model in its weight class globally.

To accelerate open research and innovation, LG has released Exaone 4.0 as an open-weight model on Hugging Face, the leading global platform for open-source AI. Open-weight models do not necessarily disclose their training data or full training pipeline, but they make the trained weights publicly accessible, allowing developers to run, fine-tune and build on the models within the terms of their licenses.

Notable peers in the open-weight category include Google’s Gemma, Meta’s LLaMA, Microsoft’s Phi, Alibaba’s Qwen and Mistral AI’s Mistral.

Additionally, LG has partnered with FriendliAI, an official Hugging Face distribution partner, to launch a commercial API service for Exaone 4.0. It allows developers and enterprises to easily deploy the model without requiring high-end GPUs, thereby broadening accessibility across industries.

In his New Year’s address, LG Group Chairman Koo Kwang-mo underscored the group’s long-term vision for artificial intelligence, calling it a core driver of future innovation.

“LG has grown by constantly pioneering uncharted territory and creating new value,” he said. “Now, with AI, we aim to reshape everyday life by making advanced technologies more accessible and meaningful, helping people reclaim their time for what truly matters.”

He emphasized that LG’s AI efforts are not just about technological leadership, but about enabling a smarter, more human-centered lifestyle through seamless AI integration across products and services.

Meanwhile, LG hosted “Exaone Partners Day” on Tuesday, bringing together 22 domestic partner companies to discuss strategies for expanding the Exaone ecosystem. The group will further highlight its AI ambitions at the “LG AI Talk Concert 2025” in Seoul on July 22, where it plans to unveil its latest research breakthroughs and present a roadmap for future AI innovation.

yeeun@heraldcorp.com

How AI and Automation are Speeding Up Science and Discovery – Berkeley Lab News Center

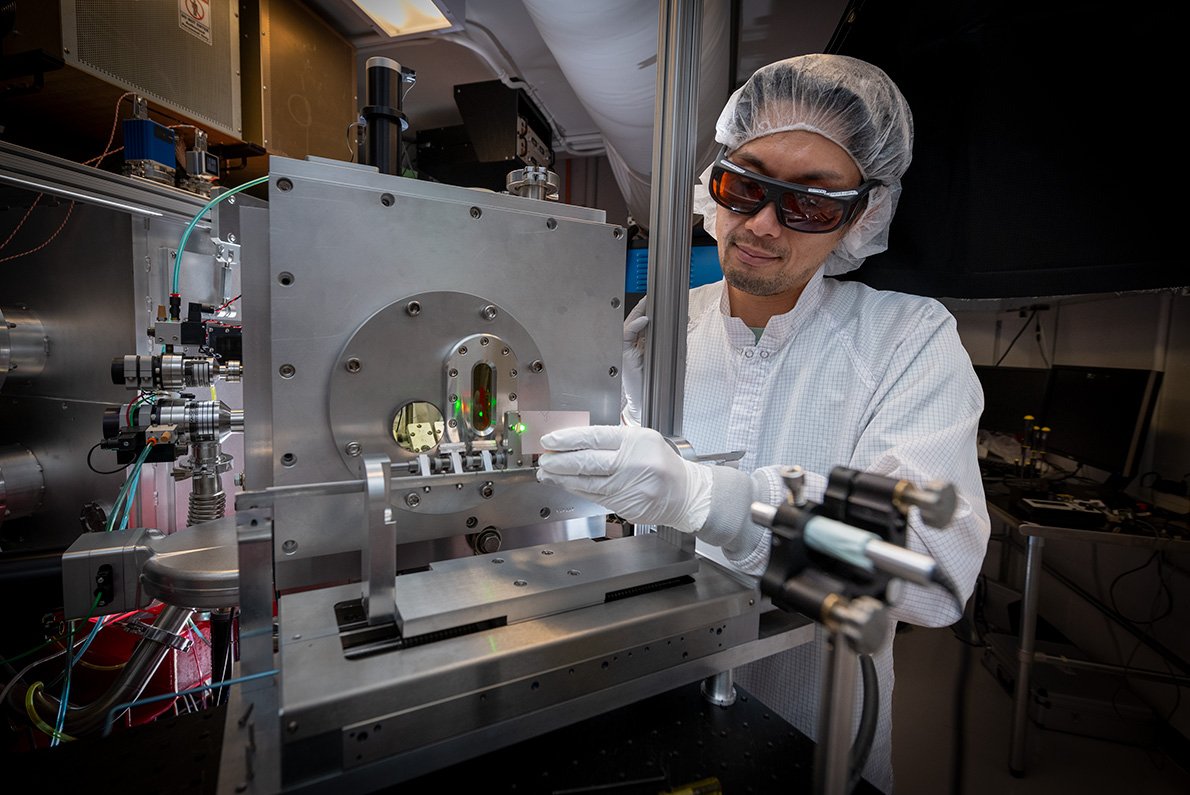

The Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) is at the forefront of a global shift in how science gets done—one driven by artificial intelligence, automation, and powerful data systems. By integrating these tools, researchers are transforming the speed and scale of discovery across disciplines, from energy to materials science to particle physics.

This integrated approach is not just advancing research at Berkeley Lab—it’s strengthening the nation’s scientific enterprise. By pioneering AI-enabled discovery platforms and sharing them across the research community, Berkeley Lab is helping the U.S. compete in the global race for innovation, delivering the tools and insights needed to solve some of the world’s most pressing challenges.

From accelerating materials discovery to optimizing beamlines and more, here are four ways Berkeley Lab is using AI to make research faster, smarter, and more impactful.

Automating Discovery: AI and Robotics for Materials Innovation

At the heart of materials science is a time-consuming process: formulating, synthesizing, and testing thousands of potential compounds. AI is helping Berkeley Lab speed that up—dramatically.

A-Lab

At Berkeley Lab’s automated materials facility, A-Lab, AI algorithms propose new compounds, and robots prepare and test them. This tight loop between machine intelligence and automation drastically shortens the time it takes to validate materials for use in technologies like batteries and electronics.

Autobot

Exploratory tools like Autobot, a robotic system at the Molecular Foundry, are being used to investigate new materials for applications ranging from energy to quantum computing, making lab work faster and more flexible.

Captions rebrands as Mirage, expands beyond creator tools to AI video research

Captions, an AI-powered video creation and editing app for content creators that has secured over $100 million in venture capital to date at a valuation of $500 million, is rebranding to Mirage, the company announced on Thursday.

The new name reflects the company’s broader ambition to become an AI research lab focused on multimodal foundation models designed specifically for short-form video on platforms like TikTok, Reels, and Shorts. The company believes this approach will set it apart from traditional AI models and from competitors such as D-ID, Synthesia, and Hour One.

The rebranding will also unify the company’s offerings under one umbrella, bringing together the flagship creator-focused AI video platform, Captions, and the recently launched Mirage Studio, which caters to brands and ad production.

“The way we see it, the real race for AI video hasn’t begun. Our new identity, Mirage, reflects our expanded vision and commitment to redefining the video category, starting with short-form video, through frontier AI research and models,” CEO Gaurav Misra told TechCrunch.

The sales pitch behind Mirage Studio, which launched in June, focuses on enabling brands to create short advertisements without relying on human talent or large budgets. By simply submitting an audio file, the AI generates video content from scratch, with an AI-generated background and custom AI avatars. Users can also upload selfies to create an avatar using their likeness.

What sets the platform apart, according to the company, is its ability to produce AI avatars that have natural-looking speech, movements, and facial expressions. Additionally, Mirage says it doesn’t rely on existing stock footage, voice cloning, or lip-syncing.

Mirage Studio is available under the business plan, which costs $399 per month for 8,000 credits. New users receive 50% off the first month.

While these tools will likely benefit brands wanting to streamline video production and save some money, they also spark concerns around the potential impact on the creative workforce. The growing use of AI in advertisements has prompted backlash, as seen in a recent Guess ad in Vogue’s July print edition that featured an AI-generated model.

Additionally, as this technology becomes more advanced, distinguishing between real and deepfake videos becomes increasingly difficult. That is a hard pill to swallow for many people, especially given how quickly misinformation can spread.

Mirage recently addressed its role in deepfake technology in a blog post. The company acknowledged the genuine risks of misinformation while also expressing optimism about the positive potential of AI video. It mentioned that it has put moderation measures in place to limit misuse, such as preventing impersonation and requiring consent for likeness use.

However, the company emphasized that “design isn’t a catch-all” and that the real solution lies in fostering a “new kind of media literacy” where people approach video content with the same critical eye as they do news headlines.

Head of UK’s Turing AI Institute resigns after funding threat

Graham Fraser, Technology reporter

The chief executive of the UK’s national institute for artificial intelligence (AI) has resigned following staff unrest and a warning that the charity was at risk of collapse.

Dr Jean Innes said she was stepping down from the Alan Turing Institute as it “completes the current transformation programme”.

Her position has come under pressure after the government demanded the centre change its focus to defence and threatened to pull its funding if it did not – leading to staff discontent and a whistleblowing complaint submitted to the Charity Commission.

Dr Innes, who was appointed chief executive in July 2023, said the time was right for “new leadership”.

The BBC has approached the government for comment.

The Turing Institute said its board was now looking to appoint a new CEO who will oversee “the next phase” to “step up its work on defence, national security and sovereign capabilities”.

Its work had once focused on AI and data science research in environmental sustainability, health and national security, but moved on to other areas such as responsible AI.

The government, however, wanted the Turing Institute to make defence its main priority, marking a significant pivot for the organisation.

“It has been a great honour to lead the UK’s national institute for data science and artificial intelligence, implementing a new strategy and overseeing significant organisational transformation,” Dr Innes said.

“With that work concluding, and a new chapter starting… now is the right time for new leadership and I am excited about what it will achieve.”

What happened at the Alan Turing Institute?

Founded in 2015 as the UK’s leading centre of AI research, the Turing Institute, which is headquartered at the British Library in London, has been rocked by internal discontent and criticism of its research activities.

A review last year by government funding body UK Research and Innovation found “a clear need for the governance and leadership structure of the Institute to evolve”.

At the end of 2024, 93 members of staff signed a letter expressing a lack of confidence in its leadership team.

In July, Technology Secretary Peter Kyle wrote to the Turing Institute to tell its bosses to focus on defence and security.

He said boosting the UK’s AI capabilities was “critical” to national security and should be at the core of the institute’s activities – and suggested it should overhaul its leadership team to reflect its “renewed purpose”.

He said further government investment would depend on the “delivery of the vision” he had outlined in the letter.

This followed Prime Minister Sir Keir Starmer’s commitment to increasing UK defence spending to 5% of national income by 2035, which would include investing more in military uses of AI.

A month after Kyle’s letter was sent, staff at the Turing Institute warned that the charity was at risk of collapse following the threat to withdraw its funding.

Workers raised a series of “serious and escalating concerns” in a whistleblowing complaint submitted to the Charity Commission.

Bosses at the Turing Institute then acknowledged recent months had been “challenging” for staff.
