Education

LATIMER.AI AND GRAMMARLY PARTNER TO DELIVER THE INDUSTRY’S FIRST INCLUSIVE AI WRITING ASSISTANT BUNDLE FOR HIGHER EDUCATION

Engagement Will Increase Access to AI Bias Mitigation for Thousands of Educational Institutions

NEW YORK, July 17, 2025 /PRNewswire/ — Latimer.AI, the first inclusive Large Language Model (LLM), and Grammarly, the trusted AI assistant for communication and productivity, announced today a partnership to provide Grammarly for Education customers with exclusive access to the two services, which together can improve writing quality and cultural awareness, helping institutions enhance student success and drive inclusive learning outcomes, without sacrificing usability or scale. The partnership will roll out in phases, with increasing collaboration across product, go-to-market, and educational impact initiatives over the coming year.

“At Grammarly, we’ve been committed to the responsible use of AI in education since our founding in 2009,” says Jenny Maxwell, Head of Education at Grammarly. “Our partnership with Latimer helps us further demonstrate this commitment by providing an inclusive LLM to the more than 3,000 educational institutions we work with, supporting equity efforts in higher education.”

Latimer utilizes a Retrieval Augmented Generation (“RAG”) model and is the premier online Artificial Intelligence resource for accurate historical information and bias-free interaction. Unlike other LLMs, Latimer uses licensed and exclusive content from esteemed sources, such as the New York Amsterdam News, which is the second-oldest Black newspaper published in the United States, to build its training platform.

“Our collaboration with Grammarly reflects a shared commitment to equipping the next generation with the tools they need to write with confidence, think critically, and share their voices with the world,” says Latimer CEO, John Pasmore. “Additionally, Grammarly for Education’s focus on providing writing support is complementary to what we’re building, and reinforces the idea that writers need not become overly reliant on AI, but rather that AI is a tool that augments without replacing the work of real people.”

As a customer of both Grammarly and Latimer, Dr. Robert MacAuslan, VP of AI at Southern New Hampshire University (SNHU) adds, “As the largest university in the U.S. [more than 200,000 learners] with an incredibly diverse student body, we recognize two essential truths about artificial intelligence: first, that AI literacy and exposure are critical to student success; and second, that these tools inherently reflect the biases of those who design and maintain them. Acknowledging this means that we have a responsibility to equip our learners with technologies that minimize bias and to teach them how to use these tools ethically and effectively. Our partnership with Latimer marks a meaningful step in that direction—providing students with a safe, trustworthy AI platform built on inclusive, ethically sourced training data. With Latimer, SNHU can confidently offer AI resources that better represent and support all our students.”

For Good or Ill (or Both), College Students See AI as the Future

(TNS) – When incoming freshman Matt Cooper first set his sights on a coveted sousaphone position in the L row of The Ohio State University Marching Band, he prepared for auditions like anyone else would: practicing, playing, asking for help.

Except help came not from a coach, but from ChatGPT.

For many college students like Cooper, artificial intelligence has become a part of daily life.

This widespread everyday adoption marks a stark contrast from even a couple years ago, though: When OpenAI first introduced its chatbot to the public in 2022, the idea of AI in school settings ignited a heated debate on how the technology belonged in the classroom, if at all.

Just three years later, its adoption has spread rapidly. A recent nationwide study by Grammarly found that 87 percent of higher-ed students use AI for school, and 90 percent use it in daily life — spending 10 hours on average each week using AI. (Another study by the Digital Education Council had similar insights, finding that 86 percent of students around the world use AI for their studies.)

Yet colleges still have a patchwork of standards for what constitutes acceptable AI use and what’s verboten. Across majors and universities in the U.S., Grammarly also found that while 78 percent of students say their schools have an AI policy, 32 percent say the policy is to not use AI. Nearly 46 percent of students said they worried about getting in trouble for using AI.

For instance, using AI to break down complex topics covered in class might be generally accepted, but using ChatGPT to edit an essay might raise some eyebrows.

Meanwhile, as students engage with the real world and consider their career options, they feel like they’re going to be left behind if they don’t develop AI expertise, especially as they complete internships, where they’re told as much to their faces. AI literacy has been called the most in-demand skill for workers in 2025.

That’s creating mixed emotions among college students, who are caught between two different sets of rules.

To understand just how much AI has transformed young people’s lives, Fast Company reached out to undergraduates nationwide to find out how they’re navigating these conflicting mandates. What we found is that as the new technology continues to evolve, it’s carving out a spot in the lives of college students, whether adults (or the students themselves) like it or not.

In this Premium story, you’ll learn:

- The creative ways Gen Z students are incorporating AI into their lives to become AI fluent, even if they can’t use it in their studies

- Why AI’s popularity as a coding assistant is starting to change how colleges think about AI in the classroom

- How current and recent students are striking a balance between “old school” and “new school” ways of learning

AN EVERYDAY COMPANION

As Ohio State’s Cooper practiced all summer for auditions, he found new ways to incorporate technology into his life. “AI has actually helped a fairly decent amount with it, in ways that people wouldn’t normally expect,” he says.

From generating sheet music to helping him memorize major scales and read key signatures, ChatGPT became Cooper’s trusted virtual coach. “In a matter of 20 seconds, it can come up with a full sheet of music to practice on any difficulty,” he says. (On top of that, the chatbot does it all for free.)

When Caitlin Conway, a senior at Loyola Marymount University in Los Angeles, returned to school after a full-time internship in marketing, she found university life to be a bit of reverse culture shock after being out in the workforce. But she’s found easy-to-use chatbots like ChatGPT useful for adding more structure to her days.

“I found that you have so much time that sometimes you don’t really know what to do with it,” Conway says. “I use ChatGPT to make a schedule. Like: ‘I want to have this amount of time to do studying, to do my homework, and do a yoga class,’ and it’ll come up with an easy schedule for me to follow.”

Maliha Mahmud, a rising senior in business and advertising at the University of Florida, uses AI to streamline daily tasks outside of class. She’ll ask ChatGPT to craft a series of recipes with leftover ingredients in her fridge (as opposed to relying on instant ramen like generations of college kids before her). For school, Mahmud relies on AI as a sort of private instructor, willing to answer questions at any time. “I’ll tell AI to break a concept down to me as if they’re talking to a middle schooler to understand it more,” she says.

Many students also mentioned Google’s NotebookLM, an AI tool that analyzes sources you upload rather than searching the web for answers. Students can upload their notes, required readings, and journals to the platform, and ask NotebookLM to make custom audio summaries with human-like voices.

Still, the value of AI was oftentimes taught outside the classroom, in the workforce. Many students said they were not only allowed but encouraged to use AI during their internships. At her first internship at a tech PR company, New York University senior Anyka Chakravarty says that she felt that “to be a successful person, you need to become AI fluent, so there’s a tension there as well.”

Mahmud echoes Chakravarty’s experience. “During my internship, it was encouraged to be utilizing AI,” she says. “At first I thought it was a replacement, or that it was not letting us critically think. [But] it has been such a time saver.” Mahmud used Microsoft’s Copilot to automatically transcribe meetings, take notes, and send them to participants — tasks an intern would have done manually in the past.

All this is a far cry from how college students have been conditioned to think about AI as potential grounds for expulsion.

A CHECKERED PAST (AND PRESENT)

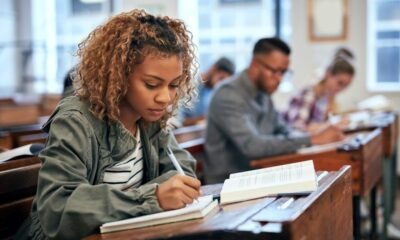

Today’s college generation was raised on plagiarism anxiety. Their pre-GPT world involved rechecking citations and resorting to online plagiarism checkers.

“I was just like, ‘I don’t want to touch this, because I don’t want to be ever accused of plagiarism.’ It definitely could be seen as very taboo,” says Grant Dutro, a recent economics and communications graduate from Wheaton College in Illinois.

Although more than half of students now use AI routinely, it wasn’t always welcomed with open arms, particularly by students who started college without it. Most students interviewed expressed an initial hesitation toward AI because of that all-too-familiar fear of getting flagged for plagiarism.

For decades, students were told that they could face severe repercussions for turning to the internet to download pre-written essays, copying material from books or blogs, and more. As technology advanced, so did the opportunities to plagiarize, along with services like Turnitin, which flags copy-pasted and uncited material in essays.

Although colleges have caught up by putting guidelines in place, the policies are oftentimes prohibitive, unclear, or left to the instructors. For many teachers, the AI policies in their classrooms are not universal, which is confusing for students and may even lead them to inadvertently get in trouble.

Where policy is set instructor by instructor, students taking the same course with different professors could have vastly different experiences with AI, at least in the classroom.

“It’s morally incomprehensible to me that a large institution would not put front and center defining what their policies are, making sure they are consistent within departments,” says Jenny Maxwell, Grammarly’s head of education. “Because of the institution not being clear on their policy, their own students are being harmed because of that lack of communication,” Maxwell added.

While AI use in school appears to be steadily losing its stigma among students, it certainly has in the workplace. Some students who recently completed internships said that not only were they allowed to use AI on the job, but they were encouraged to do so. (Sure enough, experts recommend recent grads upskill themselves in AI literacy, while one in three managers say they’ll refuse to hire candidates with no AI skills.)

A NEW WAY TO LEARN

The conflicting messages of “AI gets you in trouble” and “AI is the future” complicate the technology’s presence in college students’ lives, be it in class, on an internship, or in the dorm. But for many, it’s simply shifting what learning looks like.

For instance, the framework for evaluating students’ success might have depended on essays in the past. But today, it might be more suitable to judge both the essay and the process of writing with technology, Grammarly’s Maxwell says. Many students say that the standards used to measure their learning are already changing.

Claire Shaw, a former engineering student at the University of Toronto who graduated in 2024, explained that when she began college, she learned the basics of coding at the same time that AI piqued the interest of her instructors. She learned the “old school” way while being encouraged by some of her teachers to play with new technology. Still, Shaw did not start using AI for school until her fourth year. Now, she believes a balance between old school and new school can exist.

“You’re allowed to use AI tools, so the standard for those kinds of coding assignments were elevated,” Shaw says. It points to a big shift: In academia, where AI felt (and in many cases still feels) taboo, it’s also being embraced, even in class.

But now that AI is an expected tool, the difficulty of coding assignments has been elevated, she says, leading to more advanced projects at an earlier stage in a student’s career. And while this might be exciting, and great prep for the future, Shaw still highlights the need to understand fundamentals (skills you learn on your own without AI’s help) before jumping in head first.

“There are certain moments where we still need to test the raw skills of somebody by setting up environments that don’t have AI tool access,” she explained, referencing in-person examinations with no AI tools available.

Think of it as learning to drive stick while automatic cars exist: combining AI with traditional teaching methods may create a more holistic education. Similarly for humanities majors, some instructors are taking a page out of the old-school playbook to measure these “raw skills,” like debating, communication, and critical thinking. “We’ve turned to doing a lot more interactive stuff, like doing discussion circles, or handwriting pieces of writing,” says NYU’s Chakravarty, who’s also a mentor in the school’s writing center.

College students know AI isn’t going anywhere. Even though everyone (students, teachers, schools, first bosses) continues to stumble their way through adoption, there will be some aspects of the college experience that may never become obsolete.

“My professors brought out blue books again,” says Chakravarty. “Which I hadn’t had since, like, my first semester.”

Fast Company © 2025 Mansueto Ventures, LLC. Distributed by Tribune Content Agency, LLC.

‘Preposterous’ that AI created non-existent sources in Education Accord, says education minister

Newfoundland and Labrador Education Minister Bernie Davis admitted he did not know how the fake citations — which he referred to as errors — got into the Education Accord, but said they were not generated by artificial intelligence. The CBC’s Zach Goudie reports.

UK universities cut back on crucial research because of reduced funding

Universities are cutting back on vital research, with world-leading work on deadly conditions such as cancer and heart disease under threat from an erosion in funding from government and charities, according to a report.

The report, compiled by Universities UK, found that one in five UK universities have reduced their research activity, including cuts to life sciences, medicine and environmental sciences, and many said they were expecting to make steeper cuts in the future because of mounting financial pressures.

Health charities are major funders of high-value medical and life sciences research – including areas such as oncology and dementia – but the report said universities “are backing away from charity-funded research” because of the additional costs involved.

Dan Hurley, Universities UK’s deputy director of policy, said: “There is a real need for us to work with funders and government to address some of the risks here and get under the skin of what this might look like.

“What we do know is that most charity funding is in the medical and health space, so it is definitely having an impact in that area.

“We can’t pinpoint the degree to which this is impacting specific disciplines but the findings in this report are a warning that really difficult decisions are being taken on the ground and if we want to maintain the UK’s international competitiveness when it comes to research then we need to go further to support it.

“While these headline findings are a strong warning in themselves, this is going to require ongoing monitoring.”

The report, done in collaboration with the Campaign for Science and Engineering and the Association of Research Managers and Administrators using surveys of academic researchers, found that “sustained financial constraints” were likely to diminish the estimated £54bn annual contribution made by university research to the UK economy.

The study found there had been a 4% decrease in research staff in the biological, mathematical and physical sciences in the last three years, while staff in medicine, dentistry and health have dropped by 2%, mainly in highly expensive clinical medicine.

The funding difficulties were undermining university research culture. Managers reported an impact on morale and wellbeing and less participation in conferences and knowledge exchanges, and had particular concerns for early career researchers who were struggling to get the support they needed to establish networks.

One major reason was that UK government research funding awarded on the basis of departmental track records and quality has been severely eroded by inflation, while universities are less able to use international tuition fee income to subsidise research because of falling numbers of overseas students.

“Fluctuations in international recruitment and fees from international students will have an impact on research funding – because universities aren’t able to recoup the full economic cost of research, cross-subsidisation had become a feature of funding for many,” Hurley said.

The report concluded that the UK’s position as a global leader in research and innovation is under threat as research becomes too costly to sustain, and more universities are expected to make “tough decisions” on cuts in the future.

“Universities are doing everything they can to improve efficiencies and address those financial challenges. But what’s clear is that further efficiencies are not going to be enough on their own to address these broader risks to areas of research, with implications for our research system’s international competitiveness.

“So we also need action from the government on the future of quality-related funding, which hasn’t kept pace with inflation for a decade. That’s going to be critical to restoring stability to areas of research,” Hurley said.

The Department for Science, Innovation and Technology has been contacted for a response.
