By Amy Miller (September 13, 2025, 00:43 GMT). California Gov. Gavin Newsom is facing a balancing act as more than a dozen bills aimed at regulating artificial intelligence tools in a wide range of settings head to his desk for approval. He could approve bills to push back on the Trump administration's industry-friendly avoidance of AI regulation and make California a model for other states, or he could nix bills to please wealthy Silicon Valley companies and their lobbyists.

AI Insights

KentuckianaWorks addresses concerns about jobs and AI

LOUISVILLE, Ky. — While tech CEOs have made claims about the potential artificial intelligence has to wipe out parts of the workforce, Sarah Ehresman, director of labor market intelligence for KentuckianaWorks, said she thinks those concerns are overblown.

“We don’t have to fear this apocalypse of everyone losing their jobs,” Ehresman said. “It should not be something that we totally run away from.”

Workers have turned to generative AI more and more in recent years, many hoping to work faster and more efficiently.

Ehresman said she also uses AI in her daily work to write, edit and even code, and with its help she can finish some tasks in a matter of minutes.

“I mean, something like this could potentially take you a whole day to figure out, but still, definitely not two minutes,” she said. “I don’t have to spend much time doing it. But I am able to review the code and make sure it’s accurate and that I’m getting the results that I expect.”

As for fears of being replaced by technology when it comes to some jobs, Ehresman said a human element is still necessary because AI is imperfect.

“You know, artificial intelligence is known to hallucinate, produce bad results; it’s not perfect,” she said. “That’s where the human capabilities still matter a lot, to make sure that the results are what you would expect it to be.”

Whether people fear it or rely on it, Ehresman said AI is here to stay and should be embraced.

“The best thing that workers can do at this point is really figure out how to work with the technology, not run away from it because they fear that it might replace them, but figure out how to use it in an effective way to make them more productive,” Ehresman said.

Brookings data estimate that approximately 34% of Jefferson County's workers could see half or more of their tasks affected by artificial intelligence, a lower share than in coastal tech hubs.


Artificial intelligence helps break barriers for Hispanic homeownership | Business


AI Insights

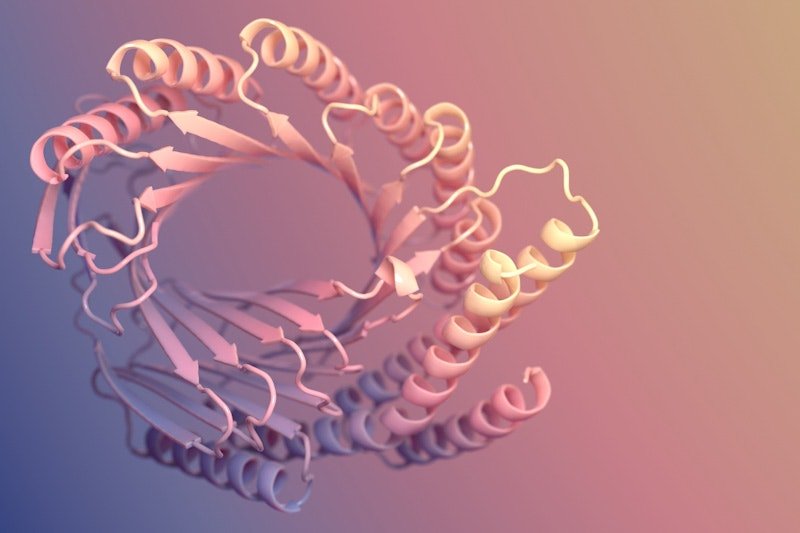

UW lab spinoff focused on AI-enabled protein design cancer treatments

A Seattle startup has inked a deal with Eli Lilly to develop AI-powered cancer treatments. The team at Lila Biologics says it is pioneering the translation of AI-designed proteins into therapeutic applications. Anindya Roy is the company's co-founder and chief scientist. He told KUOW's Paige Browning about their work.

This interview has been edited for clarity.

Paige Browning: Tell us about Lila Biologics. You spun out of UW Professor David Baker’s protein design lab. What’s Lila’s origin story?

Anindya Roy: I moved to David Baker’s group as a postdoctoral scientist, where I was working on some of the molecules that we are currently developing at Lila. It is an absolutely fantastic place to work. It was one of the coolest experiences of my career.

The Institute for Protein Design has a program called the Translational Investigator Program, which incubates promising technologies before it spins them out. I was part of that program for four or five years, during which I was generating some of the translational data. I met Jake Kraft, the CEO of Lila Biologics, at IPD, and we decided to team up in 2023 to spin out Lila.

You got a huge boost recently, a collaboration with Eli Lilly, one of the world’s largest pharmaceutical companies. What are you hoping to achieve together, and what’s your timeline?

The current collaboration is one year, and then there are other targets that we can work on. We are really excited to be partnering with Lilly, mainly because, as you mentioned, it is one of the top pharma companies in the US. We are excited to learn from each other, as well as to leverage their amazing clinical development team to actually develop medicines for patients who don't currently have many options.

You are using artificial intelligence and machine learning to create cancer treatments. What exactly are you developing?

Lila Biologics is a preclinical-stage company. We use machine learning to design novel drugs. We have two main interests. One is developing targeted radiotherapy to treat solid tumors, and the second is developing long-acting injectables for lung and heart diseases. By long-acting injectables, I mean something that you take every three or six months.

Tell me a little bit more about the type of tumors that you are focusing on.

We are going after a wide variety of solid tumors: lung cancer, ovarian cancer, and pancreatic cancer. That's something we are really focused on.

And tell me a little bit about the partnership you have with Eli Lilly. What are you creating there when it comes to cancers?

The collaboration is mainly centered on targeted radiotherapy for treating solid tumors, and it's a multi-target research collaboration. Lila Biologics is responsible for giving Lilly a development candidate, which is basically an optimized drug molecule that is ready for FDA filing. After we hand over the optimized molecule, Lilly will take over clinical development and take those molecules through clinical trials.

Why use AI for this? What edge is that giving you, or what opportunities does it have that human intelligence can’t accomplish?

In the last couple of years, artificial intelligence has fundamentally changed how we design proteins. For example, in the last five years, the success rate of designing proteins on the computer has gone from around 1% to 2% to 10% or more. With that unprecedented success rate, we believe we can bring patients many of the drugs they need, especially for cancer and cardiovascular diseases.
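The jump in computational design success rates Roy describes, from roughly 1% to 2% up to 10% or more, is easier to appreciate as arithmetic. This is a minimal sketch that assumes each design succeeds or fails independently with a fixed probability p, a simplification the interview does not claim. Under that assumption, the expected number of designs tested per success is 1/p, and the chance of at least one success among N designs is 1 - (1 - p)^N. The function names and the batch size of 20 are hypothetical, chosen only for illustration.

```python
def expected_designs_per_hit(success_rate: float) -> float:
    """Mean number of independent designs tested until the first success
    (the mean of a geometric distribution), assuming a fixed success rate."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


def prob_at_least_one_hit(success_rate: float, n_designs: int) -> float:
    """Chance that at least one of n independent designs succeeds."""
    return 1 - (1 - success_rate) ** n_designs


# Compare the older (~1-2%) and newer (~10%) success rates cited in the interview,
# using a hypothetical batch of 20 designs.
for rate in (0.01, 0.02, 0.10):
    print(
        f"{rate:.0%}: ~{expected_designs_per_hit(rate):.0f} designs per hit, "
        f"P(at least 1 hit in 20) = {prob_at_least_one_hit(rate, 20):.2f}"
    )
```

Under these assumptions, a 1% success rate means testing about 100 designs per success on average, while a 10% rate cuts that to about 10.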

In general, drug design is a very, very difficult problem, and it has really, really high failure rates. For example, 90% of the drugs that enter the clinic fail, mainly because they cannot be made at scale or because of toxicity issues. When we first started Lila, we thought we could take a holistic approach, building some of this downstream risk into the computational design step. So we asked: can machine learning help us design proteins that scale well? Meaning, can we make them at large scale, and are they stable on the benchtop for months, so we don't face those costly downstream failures? So far, it's looking really promising.

When did you realize you might be able to use machine learning and AI to treat cancer?

When we looked at this problem, we were asking whether we could increase the clinical success rate. That has been one of the main bottlenecks of drug design. As I mentioned before, 90% of the drugs that enter the clinic fail. So we are really hoping we can change that in the next five to 10 years, so that you can confidently predict the clinical properties of a molecule. In other words, can you predict how a molecule will behave in a living system? If we can do that confidently, that will increase the success rate of drug development. We are really optimistic, and we'll see how it turns out over the next five to 10 years.

Beyond treating hard-to-tackle tumors at Lila, are there other challenges you hope to take on in the future?

Yeah. It is a really difficult problem to predict how a molecule will behave in a living system. We are really good at designing molecules that behave in a certain way, or bind to a protein in a certain way, but the moment you put that molecule in a human, it's really hard to predict how it will behave, or whether it will reach the site of the disease or the diseased tissue. That is one of the main reasons for the 90% failure rate in drug development.

I think the whole field is moving toward predicting the biological properties of a molecule: how it will behave in a human system, or how long it will stay in the body. When the computational tools become good enough to predict these properties really well, I think that's where the fun begins, and we can generate molecules with a really high success rate in a really short period of time.



California governor facing balancing act as AI bills head to his desk | MLex

