Events & Conferences

Journey to 1000 models: Scaling Instagram’s recommendation system

- In this post, we explore how Instagram has successfully scaled its algorithm to include over 1000 ML models without sacrificing recommendation quality or reliability.

- We delve into the intricacies of managing such a vast array of models, each with its own performance characteristics and product goals.

- We share insights and lessons learned along the way—from the initial realization that our infrastructure maturity was lagging behind our ambitious scaling goals, to the innovative solutions we implemented to bridge these gaps.

In the ever-evolving landscape of social media, Instagram serves as a hub for creative expression and connection, continually adapting to meet the dynamic needs of its global community. At the heart of this adaptability lies a web of machine learning (ML) models, each playing a crucial role in personalizing experiences. As Instagram’s reach and influence have grown, so too has the complexity of its algorithmic infrastructure. This growth, while exciting, presents a unique set of challenges, particularly in terms of reliability and scalability.

Join us as we uncover the strategies and tools that have enabled Instagram to maintain its position at the forefront of social media innovation, ensuring a seamless and engaging experience for billions of users worldwide.

Are there really that many ML models in Instagram?

Though what shows up in Feed, Stories, and Reels is personally ranked, the number of ranked surfaces goes much deeper—to which comments surface in Feed, which notifications are “important,” or whom you might tag in a post. These are all driven by ML recommendations.

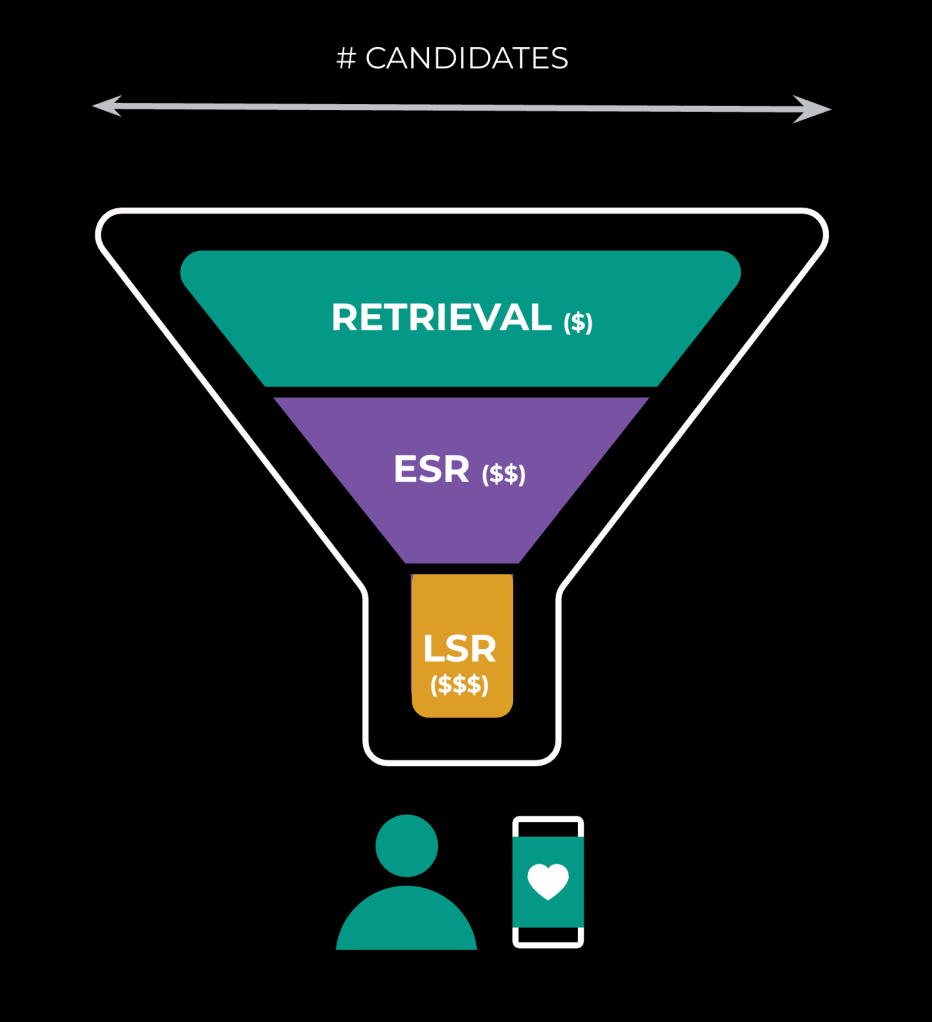

Within a given surface, we’ll have different layers of the ranking funnel: sourcing (retrieval), early-stage ranking (ESR), and late-stage ranking (LSR). We operate on fewer candidates as we progress through the funnel, as the underlying operations grow more expensive (see Figure 1).
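As a rough illustration of the funnel shape, the sketch below chains a cheap scorer and an expensive scorer so that the costly model only sees the survivors of the earlier stage. The function name `run_funnel`, the scoring lambdas, and the candidate counts are all hypothetical stand-ins, not Instagram’s actual ranking code.

```python
from typing import Callable, List

Candidate = int  # hypothetical: a candidate is just an ID here


def run_funnel(
    candidates: List[Candidate],
    cheap_score: Callable[[Candidate], float],
    expensive_score: Callable[[Candidate], float],
    esr_keep: int,
    lsr_keep: int,
) -> List[Candidate]:
    """Rank with a cheap model first, then re-rank the survivors with a costly one."""
    # Early-stage ranking: score every sourced candidate with the cheap model.
    esr_survivors = sorted(candidates, key=cheap_score, reverse=True)[:esr_keep]
    # Late-stage ranking: the expensive model only sees the ESR survivors.
    return sorted(esr_survivors, key=expensive_score, reverse=True)[:lsr_keep]


# Sourcing (retrieval) is stubbed as a fixed pool of 1,000 candidate IDs.
sourced = list(range(1000))
final = run_funnel(
    sourced,
    cheap_score=lambda c: c % 100,            # stand-in for an ESR model
    expensive_score=lambda c: -abs(c - 550),  # stand-in for an LSR model
    esr_keep=100,
    lsr_keep=10,
)
```

Here the expensive scorer runs on 100 candidates instead of 1,000, which is the cost trade-off the funnel is designed around.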

Within each surface and layer, there is constant experimentation, and these permutations create a severe infrastructure challenge. We need to allow room for our ML engineers to experiment with changes such as adjusting weights for a given prediction. The net result, depicted below in Figure 2, is a large number of models serving user traffic in production:

How did we realize infra maturity wasn’t going to catch up?

Identified risks

We identified several risks associated with scaling our algorithm, rooted in complaints about ML productivity and repeating patterns of issues:

- Discovery: Even as a team focused on one app — Instagram — we couldn’t stay on top of the growth, and product ML teams were maintaining separate sources of truth, if any, for their models in production.

- Release: We didn’t have a consistent way to launch new models safely, and the process was slow, impacting ML velocity and, therefore, product innovation.

- Health: We lacked a consistent definition of model prediction quality, and with the diversity of surfaces and subtlety of degraded ranking, quality issues went unnoticed.

Solution overview

To address these risks, we implemented several solutions:

- Model registry: We built a registry that serves as a ledger for production model importance and business function foremost, among other metadata. This registry serves as our foundational source of truth, upon which we can leverage automation to uplevel system-wide observability, change management, and model health.

- Model launch tooling: We developed a streamlined flow for launching new models that includes estimation, approval, prep, scale-up, and finalization. This process is now automated, and we’ve reduced the time it takes to launch a new model from days to hours.

- Model stability: We defined and operationalized model stability, a pioneering metric that measures the accuracy of our model predictions. We’ve leveraged model stability to produce SLOs for all models in the model registry, which enables simple understanding of the entire product surface’s ML health.

Model registry

What did model investigations look like prior to the registry?

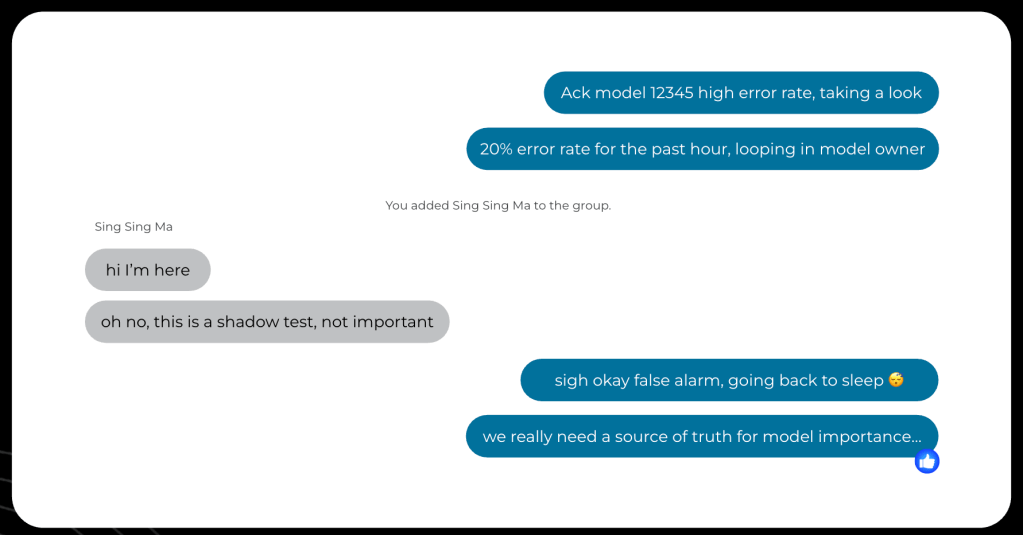

Before we created the model registry, the investigation process was a time-consuming and error-prone experience for on-call engineers and model owners. An on-call engineer had to ask model owners multiple questions, as depicted in Figure 3 below, to learn what the model does in the stack and how important it is to the business.

Understanding this context is extremely important to the operational response: Depending on the importance of the model and the criticality of the surface it’s supporting, the response is going to differ in kind. When a model is an experiment serving a small percentage of the traffic, an appropriate response can be to end the experiment and reroute the traffic back to the main model (the baseline). But if there’s a problem with the baseline model that needs to be handled with urgency, it’s not possible to “just turn it off.” The engineer on call has to loop in the model owner, defeating the purpose of having a dedicated on-call.

To avoid holding up an operational response on a single POC, we needed a central source of truth for model importance and business function. What if the model owner is not available? What if 10 of these issues happen concurrently?

With the development of the model registry, we standardized the collection of model importance and business function information, ensuring most of our operational resources were going towards the most important models.

What problems did the model registry solve?

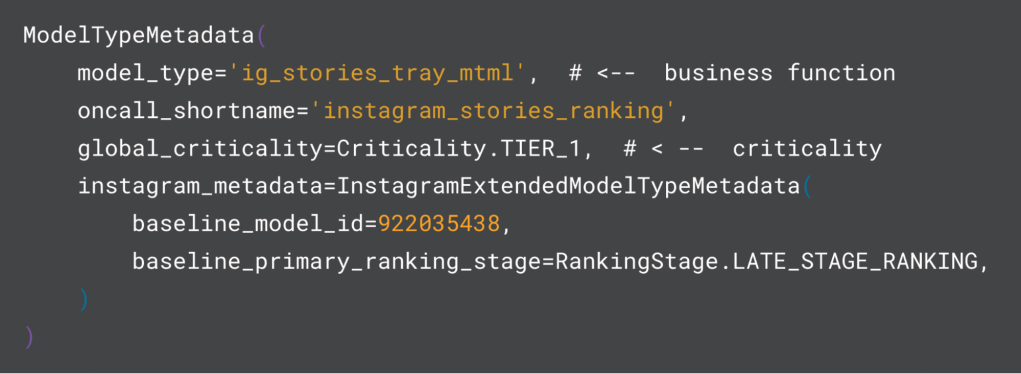

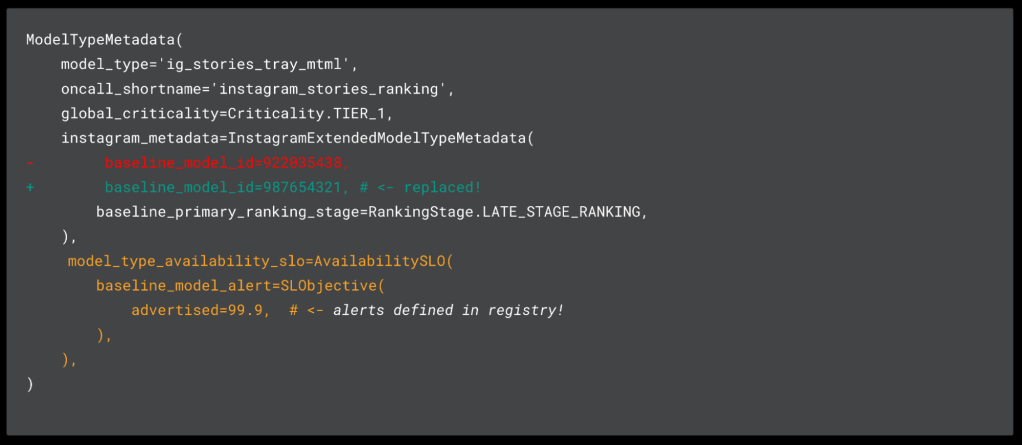

The model registry is a system of record built on top of Configerator, Meta’s distributed configuration suite. This schematized ledger (see the example in Figure 4, detailed further below) provides read-and-write access to operational data based on the inventory of production models. It’s a flexible and extensible foundation upon which one can build automation and tools to solve problems that are specific to individual organizations within Meta and are not served by the general tooling.

As Instagram scaled its investment in AI through rapid innovation in content recommendations, the number of models and AI assets grew; as a result, it has been increasingly important — but also increasingly difficult — to maintain a minimum standard for all of our models, as we lacked an authoritative source for the business context as well as for a model’s importance.

In creating the model registry, we set out to provide a structured interface for collecting business context via model types, importance via criticality, and additional metadata that would enable model understanding. Below, we’ll get into the model types, criticality, and automation we’ve built for this purpose.

Model types

At a high level, a model type describes the purpose of an ML workload: it represents a category or class of models that share a common purpose or are used in similar contexts. For example, we have “ig_stories_tray_mtml,” a string attached to training flows, model checkpoints, inference services, and more. Put simply, a model type tells the reader what a model’s purpose is in the ranking funnel.

Let’s break it down:

“ig_stories_tray_mtml” → “ig” “stories” “tray” “mtml”

- “ig”: This model is an “ig” model as opposed to “fb” or “whatsapp”.

- “stories”: This model serves IG Stories.

- “tray”: This model serves in the main IG Stories tray (as opposed to stories in some other surface).

- “mtml”: This model is a multi-task-multi-label model, commonly used in late-stage ranking.

We can then use these model type strings to tag AI assets, and since they serve as proxies for business context, we can use them also for asset management, policy enforcement, analytics, and more.
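To show how such a string could be consumed by tooling, here is a minimal sketch that splits a model type into named parts. It assumes every model type has exactly four underscore-separated segments, which is inferred from the single example above and may not hold for real model types; the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTypeParts:
    app: str           # e.g. "ig"
    product: str       # e.g. "stories"
    surface: str       # e.g. "tray"
    architecture: str  # e.g. "mtml"


def parse_model_type(model_type: str) -> ModelTypeParts:
    """Split a four-segment model type string into named parts."""
    parts = model_type.split("_")
    if len(parts) != 4:
        # Assumption: four segments, as in the "ig_stories_tray_mtml" example.
        raise ValueError(f"expected 4 underscore-separated segments, got {len(parts)}")
    return ModelTypeParts(*parts)


parsed = parse_model_type("ig_stories_tray_mtml")
```

With the parts separated, a policy or dashboard could group assets by `product` or `surface` without re-parsing strings in every tool.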

The metadata entries in the model registry are anchored on two main types that describe model instances (ModelMetadata) as well as model types (ModelTypeMetadata). These types are made up of “core” attributes that are universally applicable, as well as “extended” attributes that allow different teams to encode their opinions about how these entries will inform operations. For example, in Instagram our extended attributes encode “baseline” and “holdout” model IDs, which are used in our ranking infrastructure to orchestrate ranking funnel execution.
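The two entry types can be pictured with a small sketch. Only the names `ModelMetadata` and `ModelTypeMetadata` come from the text; the field names, the `extended` dictionary keys, the `role_of` helper, and the numeric IDs are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ModelTypeMetadata:
    model_type: str  # core attribute
    # Extended attributes: team-specific keys (names here are hypothetical).
    extended: Dict[str, int] = field(default_factory=dict)


@dataclass
class ModelMetadata:
    model_id: int    # core attribute
    model_type: str  # links the instance to its ModelTypeMetadata entry


def role_of(model: ModelMetadata, types: Dict[str, ModelTypeMetadata]) -> str:
    """Classify a model instance as baseline, holdout, or experiment."""
    ext = types[model.model_type].extended
    if model.model_id == ext.get("baseline_model_id"):
        return "baseline"
    if model.model_id == ext.get("holdout_model_id"):
        return "holdout"
    return "experiment"


types = {
    "ig_stories_tray_mtml": ModelTypeMetadata(
        model_type="ig_stories_tray_mtml",
        extended={"baseline_model_id": 101, "holdout_model_id": 102},  # made-up IDs
    )
}
roles = [role_of(ModelMetadata(i, "ig_stories_tray_mtml"), types) for i in (101, 102, 205)]
```

A lookup like this is what lets an on-call engineer tell, without paging the owner, whether a misbehaving model is an experiment that can be ended or a baseline that cannot simply be turned off.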

Criticality

In addition to defining business function, we had to establish clear guidelines for model importance. Within Meta, SEVs and services have a unified-importance tier system where the Global Service Index (GSI) records a criticality from TIER0 to TIER4 based on the maximum incident severity level the service can cause, from SEV0 as the most critical to SEV4 as simply a “heads up.” Since GSI criticality had social proof at the company, and infra engineers were familiar with this system, we adopted these criticalities for models and now annotate them at the model type and model level.

No longer would each team decide to raise their own model services to TIER1 for themselves, increasing the burden on all teams that support these models. Teams needed to provide an immediate response (available 24/7) on call and be able to prove that their models contributed meaningfully to critical business metrics to qualify for elevated monitoring.
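A tier annotation becomes useful once tooling can sort and filter on it. The sketch below assumes a one-to-one mapping between TIERn and a worst-case incident of SEVn, which is how the description above reads but is not spelled out; the model names and the `triage_order` helper are hypothetical.

```python
from enum import IntEnum
from typing import Dict, List


class Tier(IntEnum):
    """Criticality tiers; a lower number means a more critical model."""
    TIER0 = 0
    TIER1 = 1
    TIER2 = 2
    TIER3 = 3
    TIER4 = 4


def max_sev(tier: Tier) -> str:
    # Assumption: TIERn corresponds to a worst-case incident of SEVn.
    return f"SEV{int(tier)}"


def triage_order(model_tiers: Dict[str, Tier]) -> List[str]:
    """Order models so the most critical ones are handled first."""
    return sorted(model_tiers, key=lambda name: (model_tiers[name], name))


fleet = {"model_a": Tier.TIER3, "model_b": Tier.TIER0, "model_c": Tier.TIER1}
order = triage_order(fleet)
```

When several issues fire at once, an ordering like this gives the on-call a defensible place to start.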

Configuration structure as a foundation for automation

Once we had onboarded a critical mass of Instagram models to the model registry, we could begin to fully integrate with our monitoring and observability suite using our Meta-wide configuration solution, Configerator. With this, we could now have model performance monitoring and alerts that are fully automated and integrated with our tooling for SLIs called SLICK, dashboards that allow us to monitor models across many time series dimensions, and a suite of alerting specific to the model that is driven from the entries in the model registry.

This provided all our teams confidence that our monitoring coverage was complete and automated.

Launching

While a point-in-time snapshot of models in production is great for static systems, Instagram’s ML landscape is constantly shifting. With the rapid increase of iteration on the recommendation system driving an increased number of launches, it became clear our infrastructure support to make this happen was not adequate. Time-to-launch was a bottleneck in ML velocity, and we needed to drive it down.

What did the process look like?

Conventionally, services were long-standing systems with dedicated engineers supporting and tuning them. Even when new changes introduced capacity regression risks, we could gate them behind change safety mechanisms.

However, our modeling and experimentation structure was unique in that we were planning for more rapid iteration, and our options were insufficient. To safely test the extent of load a new service could support, we would clone the entire service, send shadow traffic (i.e., cloned traffic that isn’t processed by our clients), and run multiple overload tests until we found a consistent peak throughput. But this wasn’t a perfect science. Sometimes we didn’t send enough traffic, and sometimes we’d send too much, and the amount could change throughout the day due to variations in global user behavior.

This could easily take two days to get right, including actually debugging the performance itself when the results weren’t expected. Once we got the result, we’d then have to estimate the final cost. Below (in Figure 5) is the formula we landed on.

The actual traffic shifting portion was tedious as well. For example, when we managed to fully estimate that we needed 500 replicas to host the new service, we might not actually have 500 spares lying around to do a full replacement, so launching was a delicate process of partially sizing up by approximately 20%, sending 20% of traffic over, and then scaling down the old service by 20% to reclaim and recycle the capacity. Rinse, repeat. Inefficient!
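The incremental shift can be sketched as a simple plan generator. This is a simplified model under stated assumptions: replicas are interchangeable, each step scales the new service up before the old one is scaled down, and the function names are hypothetical rather than part of any Meta tooling.

```python
from typing import List, Tuple


def plan_traffic_shift(
    new_total: int, old_total: int, step_percent: int = 20
) -> List[Tuple[int, int, int]]:
    """Return (traffic % on new, new replicas, old replicas) after each step."""
    if step_percent <= 0 or 100 % step_percent != 0:
        raise ValueError("step_percent must evenly divide 100")
    plan = []
    for pct in range(step_percent, 101, step_percent):
        new_now = -(-new_total * pct // 100)          # ceiling division
        old_now = -(-old_total * (100 - pct) // 100)  # ceiling division
        plan.append((pct, new_now, old_now))
    return plan


def peak_spare_replicas(new_total: int, old_total: int, step_percent: int = 20) -> int:
    """Largest number of replicas needed beyond the old fleet at any point."""
    peak = old_total
    old_prev = old_total
    for _, new_now, old_now in plan_traffic_shift(new_total, old_total, step_percent):
        # The new service is sized up before the old one is sized down.
        peak = max(peak, new_now + old_prev)
        old_prev = old_now
    return peak - old_total


plan = plan_traffic_shift(500, 500)
spare = peak_spare_replicas(500, 500)
```

For a 500-replica replacement in 20% steps, this model needs 100 spare replicas at its peak rather than 500, at the price of five scale-up and scale-down rounds.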

And by the time we got to the end of this arduous process, the ordeal still wasn’t over. Each team was responsible for correctly setting up new alerts for their baseline in a timely fashion, or else their old models could and did trigger false alarms.

How does forcing virtual pools aid product growth?

One of the prerequisites for fixing competition for resources and unblocking productivity was to put up guardrails. Prior to this, it was “first come first served,” with no clear way to even “reserve” future freed capacity. It was also hard to reason about fairness from an infra perspective: Would it make sense to give each team equal pools, or give each individual person a maximum limit?

As it turned out, not all MLEs are experimenting at the same time, due to staggered progress on their work, so individual (per-engineer) limits were not ideal. One member might be in the experimentation stage and another might be training. So our solution was to provide bandwidth to each team.

Once each team — and therefore product — had quotas distributed, their launch policy became more clear cut. Some teams established free launching as long as the team was within quota. Others required no regressions in capacity usage. But mostly this unlocked our ability to run launches in parallel, since each one required much less red tape, and prioritization was no longer done at the org level.
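The two launch policies mentioned above can be expressed as small checks against a team’s pool. This is a sketch only: the `TeamPool` type, the capacity unit, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TeamPool:
    quota: int   # capacity units reserved for the team (unit is hypothetical)
    in_use: int  # capacity units currently serving the team's models


def within_quota(pool: TeamPool, new_cost: int, replaced_cost: int = 0) -> bool:
    """Policy A: launch freely as long as the team stays inside its quota."""
    return pool.in_use - replaced_cost + new_cost <= pool.quota


def no_capacity_regression(new_cost: int, replaced_cost: int) -> bool:
    """Policy B: the new model may not cost more than the one it replaces."""
    return new_cost <= replaced_cost


pool = TeamPool(quota=1000, in_use=900)
```

Because each check only looks at one team’s pool, launches from different teams can be evaluated independently, which is what allows them to proceed in parallel.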

What other tooling improved launching?

As mentioned earlier, preplanning with capacity estimations was critical to understanding cost and ensuring reliability. We were often asked, Why not let autoscaling take care of everything? The problem was that each service could be configured slightly differently than a previously optimized service, or some architectural change could have affected the performance of the model. We didn’t have an infinite amount of supply to work with, so by the time we fully traffic-shifted everything over, we might find that we didn’t have enough supply. Reverting is costly, taking hours to get through each stage.

Doing capacity estimations in advance also allowed us and each team to accurately weigh metric improvement against cost. It might be worthwhile to double our costs if something would increase time spent on the app by 1%, but likely not for a 0.05% improvement, where we could better spend that capacity funding another initiative.

With partners in AI Infra, we developed two major solutions to this process: offline performance evaluation and an automated launching platform.

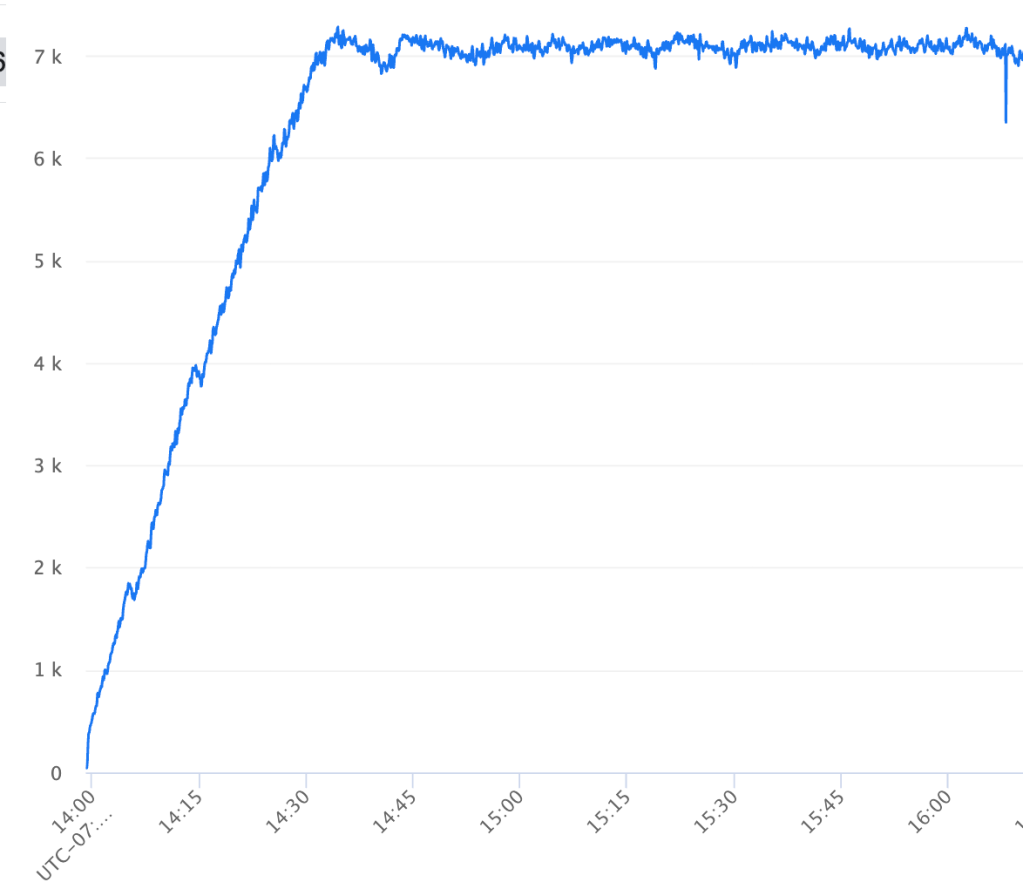

We simplified determining performance of a new service using recorded traffic. Pre-recorded traffic was continuously collected into a data warehouse that the benchmarker could read from, and we’d spin up temporary jobs with this automation. One job would replay different levels of traffic continuously and send it to another job that was a clone of the existing experiment. By putting stoppers on desired latency and error rates, the tooling would eventually output a converged stable number that we could understand as the max load (see Figure 6).
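One way to converge on a max load is a bisection over the replayed traffic level. The sketch below is an assumption about how such a search could work, not a description of the actual benchmarker: it presumes latency and error rate get worse as load rises, and `probe`, `fake_probe`, and all thresholds are hypothetical.

```python
from typing import Callable, Tuple


def find_max_load(
    probe: Callable[[int], Tuple[float, float]],
    latency_limit_ms: float,
    error_rate_limit: float,
    low_qps: int = 0,
    high_qps: int = 100_000,
    tolerance_qps: int = 10,
) -> int:
    """Bisect for the highest QPS at which probe() stays within both limits.

    Assumes low_qps is healthy, high_qps is not, and health degrades with load.
    """
    while high_qps - low_qps > tolerance_qps:
        mid = (low_qps + high_qps) // 2
        latency_ms, error_rate = probe(mid)
        if latency_ms <= latency_limit_ms and error_rate <= error_rate_limit:
            low_qps = mid   # still healthy: push the load higher
        else:
            high_qps = mid  # a stopper tripped: back off
    return low_qps


def fake_probe(qps: int) -> Tuple[float, float]:
    """Stand-in for replaying recorded traffic against a cloned service."""
    latency_ms = 20 + qps / 100
    error_rate = 0.0 if qps <= 6000 else 0.05
    return latency_ms, error_rate


max_load = find_max_load(fake_probe, latency_limit_ms=70, error_rate_limit=0.01)
```

In this toy setup the latency stopper trips first, so the search settles just under 5,000 QPS.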

The launch platform itself would input the numbers we captured from these tests, automatically collect demand data as defined, and run that same formula to calculate a cost. The platform would then perform the upscaling/downscaling cycle for teams as we shifted traffic.

And finally, by leveraging the model registry, we were able to land this model change in code (see example in Figure 6), to help us better maintain and understand the 1000+ models within our fleet. Likewise, this bolstered our trust in the model registry, which was now directly tied to the model launch lifecycle.

This suite of launch automation has dramatically reduced the class of SEVs related to model launches, improved our pace of innovation from a few to more than 10 launches per week, and reduced the amount of time engineers spend conducting a launch by more than two days.

Model stability

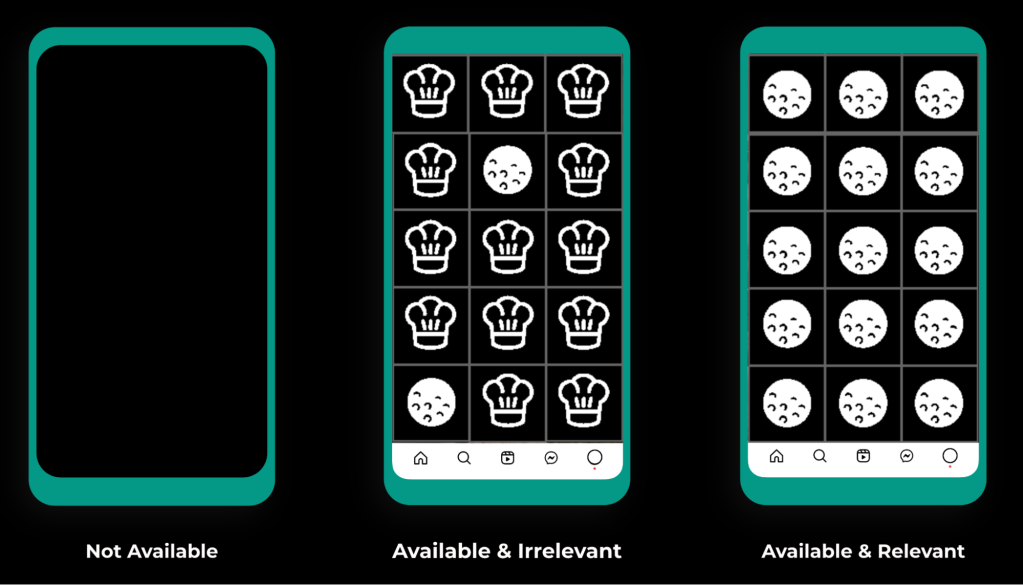

As the number of models in production increased, our organization started to feel the effects of an inconsistent measure of model health. Because ranking models are run like any other distributed backend system (receive a request, produce a response), one may think a universal SLO that measures request success rate would suffice to capture holistic health. This is not the case for ranking models, as the accuracy of the recommendations carries significant importance to the end-user experience. Consider a user who is a huge fan of golf but does not enjoy cooking content: if the model responds successfully but recommends cooking content (see the “available & irrelevant” case in Figure 8 below), the request succeeded yet the prediction was inaccurate. This is precisely what the model stability metric sought to capture.

Why is measuring ranking model reliability unique?

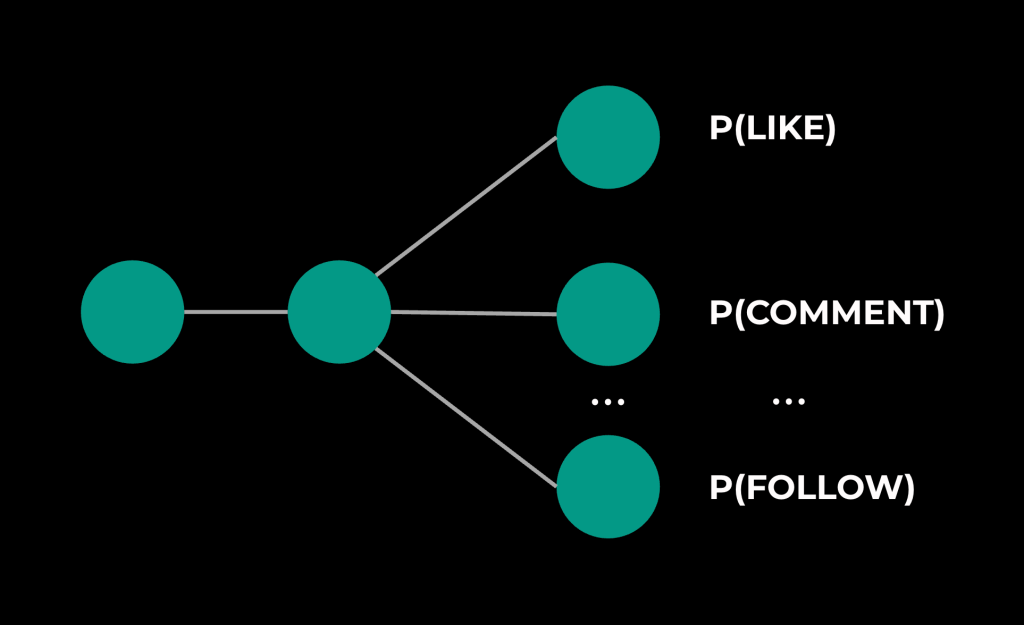

Ranking models, unlike traditional idempotent request/response backends, produce scores predicting user action given a set of candidates (PLIKE, PCOMMENT, PFOLLOW, etc.). These scores then combine and are used to determine which candidates are most relevant to an end user. It’s important that these scores accurately reflect user interest, as their accuracy is directly correlated to user engagement. If we recommend irrelevant content, user engagement suffers. The model stability metric was designed to make it easy to measure this accuracy and detect inaccuracy at our scale.

Let’s discuss how this works.

Defining model stability

Models are complex, and they produce multiple output predictions. Let’s take a simplified example (shown in Figure 9 below) of a multi-task-multi-label (MTML) model predicting three actions:

For us to claim this model is stable, we must also claim that each underlying prediction is stable.

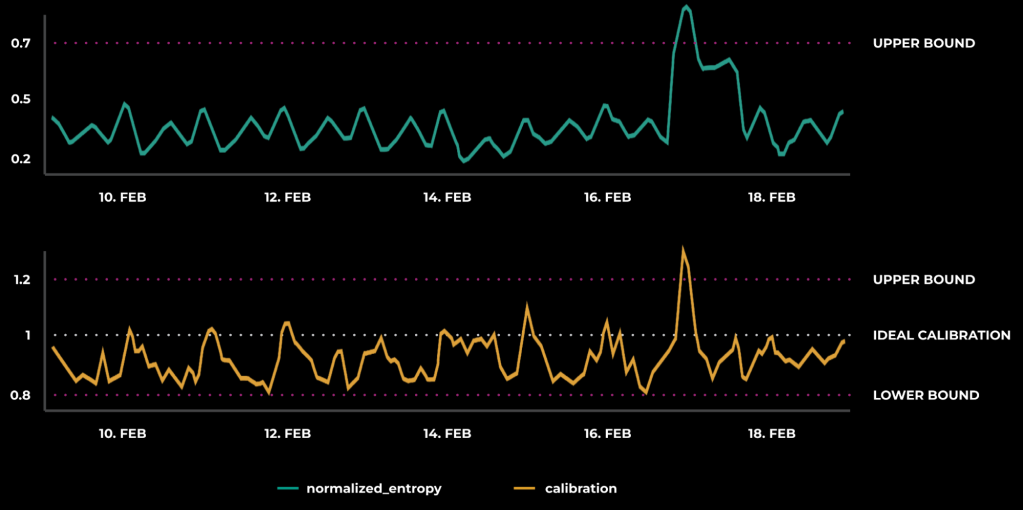

When evaluating the accuracy of a ranking model’s predictions, we typically look at two metrics:

- Model calibration, which is based on observed real-world outcomes and answers the question, “Are we over- or under-predicting user action?” It is calculated as a ratio of predicted click-through-rate (CTR) and empirical CTR. A perfect predictor will have calibration centered at 1.

- Model normalized entropy (NE), which measures the discriminative power of a predictor, and answers the question, “How well can this predictor separate action from inaction?” It is calculated as a ratio of the average log-loss per impression to what the average log-loss per impression would be if we always predicted the empirical CTR. With NE, lower values are better, and an NE of 1 is equivalent to random predictions.

(For more information regarding our choice of prediction evaluation metrics, please refer to the paper, “Practical Lessons from Predicting Clicks on Ads at Facebook.”)
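Both metrics follow directly from the definitions above. The sketch below computes them from a list of predicted probabilities and binary labels; it assumes labels are 0 or 1, predictions lie strictly between 0 and 1, and the sample contains both outcomes. The sample data is made up.

```python
import math
from typing import Sequence


def calibration(preds: Sequence[float], labels: Sequence[int]) -> float:
    """Average predicted rate divided by the empirical rate."""
    return (sum(preds) / len(preds)) / (sum(labels) / len(labels))


def normalized_entropy(preds: Sequence[float], labels: Sequence[int]) -> float:
    """Average log-loss divided by the log-loss of always predicting the empirical rate."""
    n = len(labels)
    rate = sum(labels) / n
    log_loss = -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p) for p, y in zip(preds, labels)
    ) / n
    baseline = -(rate * math.log(rate) + (1 - rate) * math.log(1 - rate))
    return log_loss / baseline


labels = [1, 0, 0, 0]
informed = [0.7, 0.1, 0.1, 0.1]      # separates the positive from the negatives
constant = [0.25, 0.25, 0.25, 0.25]  # always predicts the empirical rate

cal_informed = calibration(informed, labels)
ne_informed = normalized_entropy(informed, labels)
ne_constant = normalized_entropy(constant, labels)
```

Both predictors are well calibrated on average, but only the informed one has an NE below 1; the constant predictor lands at an NE of 1 by construction, which is why the two metrics are tracked together.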

A model’s predictions are unstable when either calibration or NE is outside its expected healthy range. To determine what a healthy range is, we must look at each metric in real time, and Figure 10 below shows what these time series can look like:

By observing the trend of a healthy prediction, we can apply thresholds for our evaluation metrics. When these thresholds are breached, the underlying prediction is considered unstable.

From here, we can define model stability as a binary indicator across a model’s predictions. It is 1 if all underlying predictions are stable, and 0 if any prediction is unstable. This is an extremely powerful method of reacting to real-time prediction instability as well as a tool for understanding trends in predictive health per model or across distinct products’ ranking funnels.
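The binary indicator can be sketched in a few lines. The prediction names are borrowed from the examples earlier in this post; the `HealthyRange` type and every threshold value are hypothetical, since real ranges are derived per prediction from observed trends.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class HealthyRange:
    calibration: Tuple[float, float]  # (low, high), inclusive
    ne: Tuple[float, float]           # (low, high), inclusive


def prediction_is_stable(cal: float, ne: float, rng: HealthyRange) -> bool:
    """A prediction is stable only if both metrics sit inside their ranges."""
    return (
        rng.calibration[0] <= cal <= rng.calibration[1]
        and rng.ne[0] <= ne <= rng.ne[1]
    )


def model_stability(
    observed: Dict[str, Tuple[float, float]], ranges: Dict[str, HealthyRange]
) -> int:
    """1 if every prediction is stable, 0 if any prediction is unstable."""
    return int(
        all(prediction_is_stable(*observed[name], ranges[name]) for name in ranges)
    )


# Made-up thresholds and observations as (calibration, NE) pairs.
ranges = {
    "PLIKE": HealthyRange((0.9, 1.1), (0.0, 0.8)),
    "PCOMMENT": HealthyRange((0.9, 1.1), (0.0, 0.9)),
    "PFOLLOW": HealthyRange((0.8, 1.2), (0.0, 0.9)),
}
healthy = {"PLIKE": (1.02, 0.70), "PCOMMENT": (0.97, 0.85), "PFOLLOW": (1.10, 0.88)}
degraded = {**healthy, "PCOMMENT": (1.40, 0.85)}  # over-predicting comments
```

Averaging this 0/1 value over time windows is one natural way to turn it into an SLO-style percentage, though the exact aggregation used in production is not described here.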

Operationalizing model stability

With a real-time view on model predictive health, we can leverage this unified definition of model stability and apply it to all of our models in production, once again leveraging the model registry as a ledger to hold this important data. In Figure 11 below, we can see the addition of model stability metric metadata after we determined the expected thresholds.

Given the large number of models in production, each producing many predictions, building a portable definition of model health applicable to all of our ranking models represented an important milestone toward upleveling Instagram’s ML infrastructure maturity. This has unlocked our ability to build generic alerting to guarantee detection of our most important models becoming unstable, thereby moving us closer to mitigation when our recommendation system is at risk.

Since the addition of these metrics and alerting, ML teams have discovered previously hidden issues within their models and addressed them faster than before, leading to higher-quality recommendations.

Key takeaways

In our journey to scale Instagram’s algorithm to manage over 1000 models, we have learned several critical lessons that have shaped our approach and infrastructure. These takeaways not only highlight the challenges we faced but also underscore the strategies that led to our success.

Infra understanding is the foundation to building the right tools

A unified understanding of our infrastructure footprint was essential in developing the right tools to support our scaling efforts. By identifying the gaps and potential risks in our existing systems, we were able to implement solutions such as the model registry that significantly improved our operational efficiency and reliability posture.

Helping colleagues move fast means we all move faster

By addressing the model iteration bottleneck, we enabled our teams to innovate more rapidly. Our focus on creating a seamless, self-service process for model iteration empowered client teams to take ownership of their workflows. This not only accelerated their progress but also reduced the operational burden on our infrastructure team. As a result, the entire organization benefited from increased agility and productivity.

Reliability must consider quality

Ensuring the reliability of our models required us to redefine how we measure and maintain model quality. By operationalizing model stability and establishing clear metrics for model health, we were able to proactively manage the performance of our models. This approach enables us to maintain high standards of quality across our recommendation systems, ultimately enhancing user engagement and satisfaction.

Our experience in scaling Instagram’s recommendation system has reinforced the importance of infrastructure understanding, collaboration, and a focus on quality. By building robust tools and processes, we have not only improved our own operations but also empowered our colleagues to drive innovation and growth across the platform.

Revolutionizing warehouse automation with scientific simulation

Modern warehouses rely on complex networks of sensors to enable safe and efficient operations. These sensors must detect everything from packages and containers to robots and vehicles, often in changing environments with varying lighting conditions. More importantly for Amazon, we need to detect barcodes efficiently.

The Amazon Robotics ID (ARID) team focuses on solving this problem. When we first started working on it, we faced a significant bottleneck: optimizing sensor placement required weeks or months of physical prototyping and real-world testing, severely limiting our ability to explore innovative solutions.

To transform this process, we developed Sensor Workbench (SWB), a sensor simulation platform built on NVIDIA’s Isaac Sim that combines parallel processing, physics-based sensor modeling, and high-fidelity 3-D environments. By providing virtual testing environments that mirror real-world conditions with unprecedented accuracy, SWB allows our teams to explore hundreds of configurations in the same amount of time it previously took to test just a few physical setups.

Camera and target selection/positioning

Sensor Workbench users can select different cameras and targets and position them in 3-D space to receive real-time feedback on barcode decodability.

Three key innovations enabled SWB: a specialized parallel-computing architecture that performs simulation tasks across the GPU; a custom CAD-to-OpenUSD (Universal Scene Description) pipeline; and the use of OpenUSD as the ground truth throughout the simulation process.

Parallel-computing architecture

Our parallel-processing pipeline leverages NVIDIA’s Warp library with custom computation kernels to maximize GPU utilization. By maintaining 3-D objects persistently in GPU memory and updating transforms only when objects move, we eliminate redundant data transfers. We also perform computations only when needed — when, for instance, a sensor parameter changes, or something moves. By these means, we achieve real-time performance.
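The “compute only when needed” idea is essentially a dirty-flag cache. The sketch below shows that pattern in plain Python; it does not use Warp or the GPU, and the `SceneCache` class and its methods are hypothetical names chosen for illustration.

```python
from typing import Any, Callable, Dict, Tuple

Transform = Tuple[float, float, float]  # simplified: position only


class SceneCache:
    """Keep object transforms resident and recompute only after a real change."""

    def __init__(self, compute: Callable[[Dict[str, Transform]], Any]) -> None:
        self._compute = compute
        self._transforms: Dict[str, Transform] = {}
        self._dirty = True
        self._result: Any = None
        self.compute_calls = 0

    def set_transform(self, name: str, transform: Transform) -> None:
        # Only mark the scene dirty when the object actually moved.
        if self._transforms.get(name) != transform:
            self._transforms[name] = transform
            self._dirty = True

    def result(self) -> Any:
        if self._dirty:
            self._result = self._compute(dict(self._transforms))
            self.compute_calls += 1
            self._dirty = False
        return self._result


cache = SceneCache(compute=lambda transforms: len(transforms))
cache.set_transform("camera", (0.0, 1.0, 2.0))
cache.result()
cache.result()                                  # served from cache
cache.set_transform("camera", (0.0, 1.0, 2.0))  # unchanged: no recompute
cache.result()
calls_before_move = cache.compute_calls
cache.set_transform("camera", (0.5, 1.0, 2.0))  # moved: recompute once
cache.result()
```

In a GPU setting the same flag would also gate host-to-device transfers, which is where most of the savings described above would come from.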

Visualization methods

Sensor Workbench users can pick sphere- or plane-based visualizations, to see how the positions and rotations of individual barcodes affect performance.

This architecture allows us to perform complex calculations for multiple sensors simultaneously, enabling instant feedback in the form of immersive 3-D visuals. Those visuals represent metrics that barcode-detection machine-learning models need to work, as teams adjust sensor positions and parameters in the environment.

CAD to USD

Our second innovation involved developing a custom CAD-to-OpenUSD pipeline that automatically converts detailed warehouse models into optimized 3-D assets. Our CAD-to-USD conversion pipeline replicates the structure and content of models created in the modeling program SolidWorks with a 1:1 mapping. We start by extracting essential data — including world transforms, mesh geometry, material properties, and joint information — from the CAD file. The full assembly-and-part hierarchy is preserved so that the resulting USD stage mirrors the CAD tree structure exactly.

To ensure modularity and maintainability, we organize the data into separate USD layers covering mesh, materials, joints, and transforms. This layered approach ensures that the converted USD file faithfully retains the asset structure, geometry, and visual fidelity of the original CAD model, enabling accurate and scalable integration for real-time visualization, simulation, and collaboration.

OpenUSD as ground truth

The third important factor was our novel approach to using OpenUSD as the ground truth throughout the entire simulation process. We developed custom schemas that extend beyond basic 3-D-asset information to include enriched environment descriptions and simulation parameters. Our system continuously records all scene activities — from sensor positions and orientations to object movements and parameter changes — directly into the USD stage in real time. We even maintain user interface elements and their states within USD, enabling us to restore not just the simulation configuration but the complete user interface state as well.

This architecture ensures that when USD initial configurations change, the simulation automatically adapts without requiring modifications to the core software. By maintaining this live synchronization between the simulation state and the USD representation, we create a reliable source of truth that captures the complete state of the simulation environment, allowing users to save and re-create simulation configurations exactly as needed. The interfaces simply reflect the state of the world, creating a flexible and maintainable system that can evolve with our needs.

Application

With SWB, our teams can now rapidly evaluate sensor mounting positions and verify overall concepts in a fraction of the time previously required. More importantly, SWB has become a powerful platform for cross-functional collaboration, allowing engineers, scientists, and operational teams to work together in real time, visualizing and adjusting sensor configurations while immediately seeing the impact of their changes and sharing their results with each other.

New perspectives

In projection mode, an explicit target is not needed. Instead, Sensor Workbench uses the whole environment as a target, projecting rays from the camera to identify locations for barcode placement. Users can also switch between a comprehensive three-quarters view and the perspectives of individual cameras.

Due to the initial success in simulating barcode-reading scenarios, we have expanded SWB’s capabilities to incorporate high-fidelity lighting simulations. This allows teams to iterate on new baffle and light designs, further optimizing the conditions for reliable barcode detection, while ensuring that lighting conditions are safe for human eyes, too. Teams can now explore various lighting conditions, target positions, and sensor configurations simultaneously, gleaning insights that would take months to accumulate through traditional testing methods.

Looking ahead, we are working on several exciting enhancements to the system. Our current focus is on integrating more-advanced sensor simulations that combine analytical models with real-world measurement feedback from the ARID team, further increasing the system’s accuracy and practical utility. We are also exploring the use of AI to suggest optimal sensor placements for new station designs, which could potentially identify novel configurations that users of the tool might not consider.

Additionally, we are looking to expand the system to serve as a comprehensive synthetic-data generation platform. This will go beyond just simulating barcode-detection scenarios, providing a full digital environment for testing sensors and algorithms. This capability will let teams validate and train their systems using diverse, automatically generated datasets that capture the full range of conditions they might encounter in real-world operations.

By combining advanced scientific computing with practical industrial applications, SWB represents a significant step forward in warehouse automation development. The platform demonstrates how sophisticated simulation tools can dramatically accelerate innovation in complex industrial systems. As we continue to enhance the system with new capabilities, we are excited about its potential to further transform and set new standards for warehouse automation.

Enabling Kotlin Incremental Compilation on Buck2

The Kotlin incremental compiler has been a true gem for developers chasing faster compilation since its introduction in build tools. Now, we’re excited to bring its benefits to Buck2 – Meta’s build system – to unlock even more speed and efficiency for Kotlin developers.

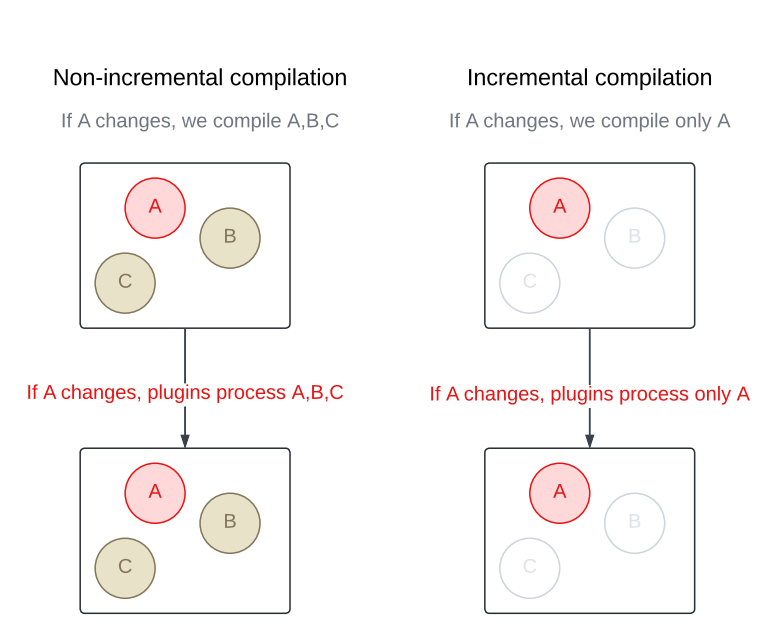

Unlike a traditional compiler that recompiles an entire module every time, an incremental compiler focuses only on what was changed. This cuts down compilation time in a big way, especially when modules contain a large number of source files.
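The change-detection step behind this idea can be sketched with content hashes. This is a deliberately simplified illustration in Python, not how the Kotlin incremental compiler is implemented: a real incremental compiler also has to recompile files that depend on changed declarations and handle deleted sources. The helper names and file contents are hypothetical.

```python
import hashlib
from typing import Dict, Set


def fingerprint(sources: Dict[str, str]) -> Dict[str, str]:
    """Map each source path to a hash of its contents."""
    return {
        path: hashlib.sha256(text.encode("utf-8")).hexdigest()
        for path, text in sources.items()
    }


def files_to_recompile(previous: Dict[str, str], current: Dict[str, str]) -> Set[str]:
    """Paths that are new or whose content hash changed since the last build."""
    return {path for path, digest in current.items() if previous.get(path) != digest}


last_build = fingerprint({"A.kt": "class A", "B.kt": "class B", "C.kt": "class C"})
this_build = fingerprint(
    {"A.kt": "class A", "B.kt": "class B { fun f() {} }", "C.kt": "class C", "D.kt": "class D"}
)
changed = files_to_recompile(last_build, this_build)
```

Only the edited and newly added files are selected here, which is why the savings grow with the number of untouched files in a module.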

Buck2 promotes small modules as a key strategy for achieving fast build times. Our codebase followed that principle closely, and for a long time, it worked well. With only a handful of files in each module, and Buck2’s support for fast incremental builds and parallel execution, incremental compilation didn’t seem like something we needed.

But, let’s be real: Codebases grow, teams change, and reality sometimes drifts away from the original plan. Over time, some modules started getting bigger – either from legacy or just organic growth. And while big modules were still the exception, they started having quite an impact on build times.

So we gave the Kotlin incremental compiler a closer look – and we’re glad we did. The results? Some critical modules now build up to 3x faster. That’s a big win for developer productivity and overall build happiness.

Curious about how we made it all work in Buck2? Keep reading. We’ll walk you through the steps we took to bring the Kotlin incremental compiler to life in our Android toolchain.

Step 1: Integrating Kotlin’s Build Tools API

As of Kotlin 2.2.0, the only guaranteed public contract for using the compiler is the command-line interface (CLI). But since the CLI doesn’t support incremental compilation (at least for now), it didn’t meet our needs. Alternatively, we could integrate the Kotlin incremental compiler directly via the compiler’s internal components – APIs that are technically accessible but not intended for public use. However, relying on them would’ve made our toolchain fragile and likely to break with every Kotlin update, since there’s no guarantee of backward compatibility. That didn’t seem like the right path either.

Then we came across the Build Tools API (KEEP), introduced in Kotlin 1.9.20 as the official integration point for the compiler – including support for incremental compilation. Although the API was still marked as experimental, we decided to give it a try. We knew it would eventually stabilize, and saw it as a great opportunity to get in early, provide feedback, and help shape its direction. Compared to using internal components, it offered a far more sustainable and future-proof approach to integration.

⚠️ Depending on kotlin-compiler? Watch out!

In the Java world, a shaded library is a modified version of the library where the class and package names are changed. This process – called shading – is a handy way to avoid classpath conflicts, prevent version clashes between libraries, and keep internal details from leaking out.

Here’s a quick example:

- Unshaded (original) class: com.intellij.util.io.DataExternalizer

- Shaded class: org.jetbrains.kotlin.com.intellij.util.io.DataExternalizer

The Build Tools API depends on the shaded version of the Kotlin compiler (kotlin-compiler-embeddable). But our Android toolchain was historically built with the unshaded one (kotlin-compiler). That mismatch led to java.lang.NoClassDefFoundError crashes when testing the integration because the shaded classes simply weren’t on the classpath.

Replacing the unshaded compiler across the entire Android toolchain would’ve been a big effort. So to keep moving forward, we went with a quick workaround: We unshaded the Build Tools API instead. 🙈 Using the jarjar library, we stripped the org.jetbrains.kotlin prefix from class names and rebuilt the library.

Don’t worry, once we had a working prototype and confirmed everything behaved as expected, we circled back and did it right – fully migrating our toolchain to use the shaded Kotlin compiler. That brought us back in line with the API’s expectations and gave us a more stable setup for the future.
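To make the renaming concrete, here is a minimal sketch of shading and unshading applied to class names. It only models the string transformation; it is not how jarjar is configured or invoked. The `BUNDLED_ROOTS` list and the choice to touch only relocated third-party packages are assumptions made for this example.

```python
# Prefix under which the shaded compiler relocates bundled classes.
SHADE_PREFIX = "org.jetbrains.kotlin."

# Assumption for this example: a single bundled third-party package root.
BUNDLED_ROOTS = ("com.intellij.",)


def shade(class_name: str) -> str:
    """Relocate a bundled class under the shading prefix."""
    if class_name.startswith(BUNDLED_ROOTS):
        return SHADE_PREFIX + class_name
    return class_name


def unshade(class_name: str) -> str:
    """Reverse the relocation, leaving genuine compiler classes untouched."""
    if class_name.startswith(SHADE_PREFIX):
        stripped = class_name[len(SHADE_PREFIX):]
        if stripped.startswith(BUNDLED_ROOTS):
            return stripped
    return class_name
```

Code compiled against one naming scheme will not find classes published under the other, which is why the two compiler artifacts cannot be mixed freely on one classpath.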

Step 2: Keeping previous output around for the incremental compiler

To compile incrementally, the Kotlin compiler needs access to the output from the previous build. Simple enough, but Buck2 deletes that output by default before rebuilding a module.

With incremental actions, you can configure Buck2 to skip the automatic cleanup of previous outputs. This gives your build actions access to everything from the last run. The tradeoff is that it’s now up to you to figure out what’s still useful and manually clean up the rest. It’s a bit more work, but it’s exactly what we needed to make incremental compilation possible.
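The manual cleanup could look roughly like the sketch below. The function name and the `still_valid` set are hypothetical; the point is simply that once automatic cleanup is off, the action itself has to decide which leftovers to keep.

```python
from pathlib import Path


def prune_stale_outputs(output_dir: Path, still_valid: set[str]) -> list[str]:
    """Delete files from the previous run that the current run will not reuse.

    `still_valid` holds paths relative to `output_dir`. Returns the relative
    paths that were removed, in sorted order.
    """
    removed = []
    # Materialize the listing first so deletions do not disturb iteration.
    for path in sorted(output_dir.rglob("*")):
        if path.is_file():
            rel = path.relative_to(output_dir).as_posix()
            if rel not in still_valid:
                path.unlink()
                removed.append(rel)
    return removed
```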

Step 3: Making the incremental compiler cache relocatable

At first, this might not seem like a big deal. You’re not planning to move your codebase around, so why worry about making the cache relocatable, right?

Well… that’s until you realize you’re no longer in a tiny team, and you’re definitely not the only one building the project. Suddenly, it does matter.

Buck2 supports distributed builds, which means your builds don’t have to run only on your local machine. They can be executed elsewhere, with the results sent back to you. And if your compiler cache isn’t relocatable, this setup can quickly lead to trouble – from conflicting overloads to strange ambiguity errors caused by mismatched paths in cached data.

So we made sure to configure the root project directory and the build directory explicitly in the incremental compilation settings. This keeps the compiler cache stable and reliable, no matter who runs the build or where it happens.
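The idea behind a relocatable cache can be illustrated with a small sketch: absolute paths are rewritten relative to the configured roots before they are stored, so two machines with different checkout locations produce identical entries. The `$ROOT` and `$BUILD` placeholders are made up for this example and do not reflect the compiler's actual cache format.

```python
from pathlib import PurePosixPath


def to_relocatable(path: str, project_root: str, build_dir: str) -> str:
    """Rewrite an absolute path relative to the project root or build dir."""
    p = PurePosixPath(path)
    # Try the build dir first, since it may live inside the project root.
    for label, base in (("$BUILD", build_dir), ("$ROOT", project_root)):
        try:
            return f"{label}/{p.relative_to(base).as_posix()}"
        except ValueError:
            continue  # Not under this base; try the next one.
    return path  # Outside both roots: leave unchanged.
```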

Step 4: Configuring the incremental compiler

In a nutshell, to decide what needs to be recompiled, the Kotlin incremental compiler looks for changes in two places:

- Files within the module being rebuilt.

- The module’s dependencies.

Once the changes are found, the compiler figures out which files in the module are affected – whether by direct edits or through updated dependencies – and recompiles only those.

To get this process rolling, the compiler needs just a little nudge to understand how much work it really has to do.

So let’s give it that nudge!

Tracking changes inside the module

When it comes to tracking changes, you’ve got two options: You can either let the compiler do its magic and detect changes automatically, or you can give it a hand by passing a list of modified files yourself. The first option is great if you don’t know which files have changed or if you just want to get something working quickly (like we did during prototyping). However, if you’re on a Kotlin version earlier than 2.1.20, you have to provide this information yourself. Automatic source change detection via the Build Tools API isn’t available prior to that. Even with newer versions, if the build tool already has the change list before compilation, it’s still worth using it to optimize the process.

This is where Buck’s incremental actions come in handy again! Not only can we preserve the output from the previous run, but we also get hash digests for every action input. By comparing those hashes with the ones from the last build, we can generate a list of changed files. From there, we pass that list to the compiler to kick off incremental compilation right away – no need for the compiler to do any change detection on its own.
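As a rough sketch of that comparison, assume each build records a mapping from input path to content digest. The `InputDiff` type and `diff_inputs` function below are illustrative names, not part of Buck2 or the Build Tools API.

```python
from typing import NamedTuple


class InputDiff(NamedTuple):
    added: list[str]
    modified: list[str]
    removed: list[str]


def diff_inputs(previous: dict[str, str], current: dict[str, str]) -> InputDiff:
    """Compare path -> digest maps from the last build and the current one."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    modified = sorted(
        path
        for path in current.keys() & previous.keys()
        if current[path] != previous[path]
    )
    return InputDiff(added, modified, removed)
```

The three lists together form the change set that gets handed to the compiler in place of its own change detection.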

Tracking changes in dependencies

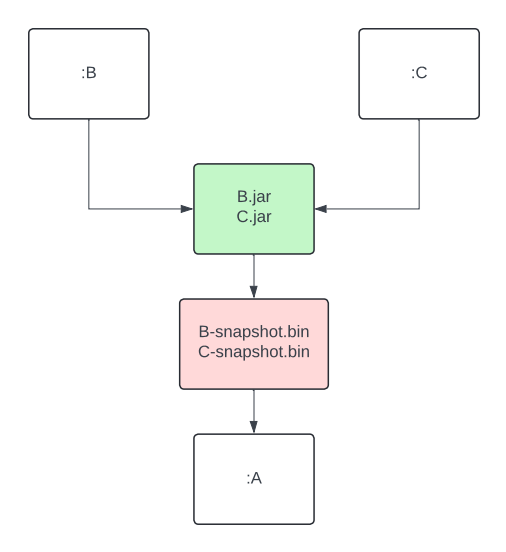

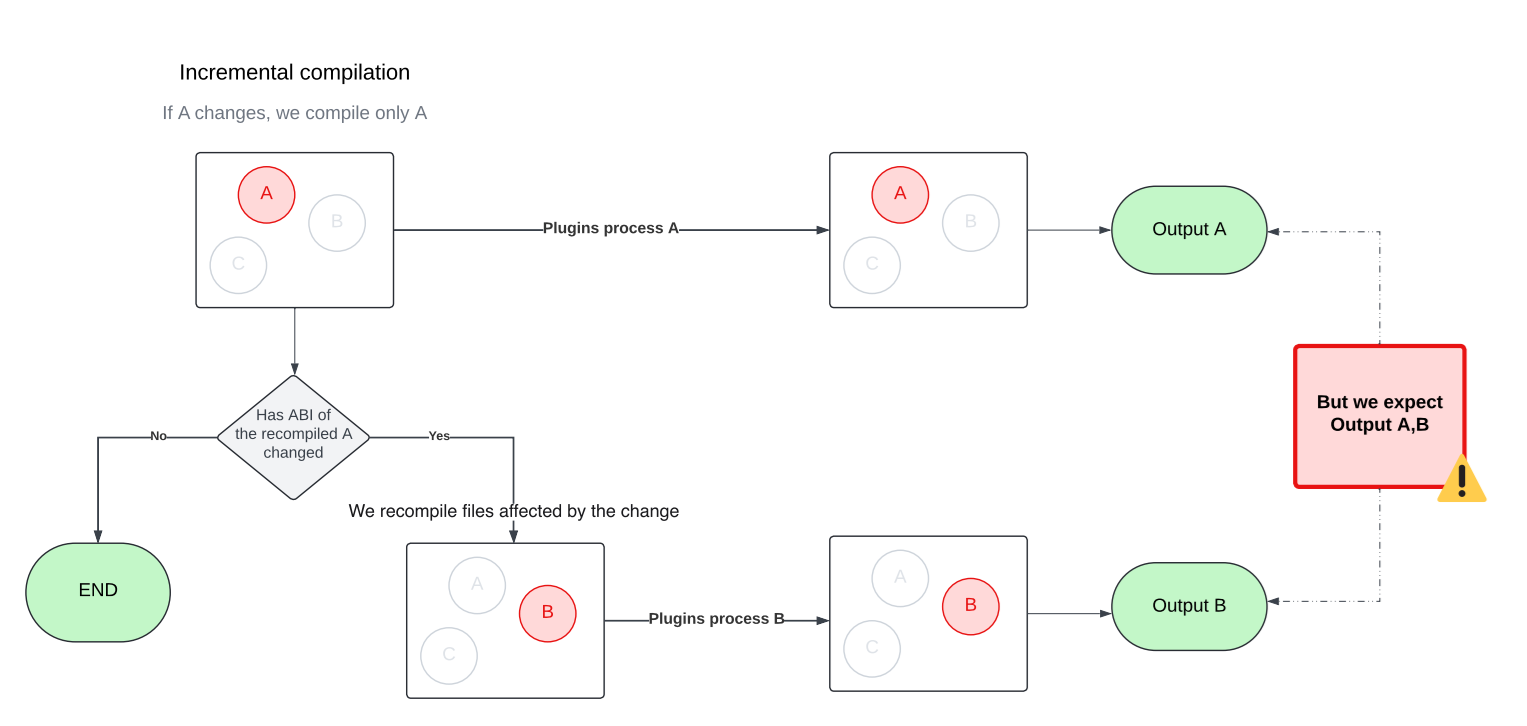

Sometimes it’s not the module itself that changes, it’s something the module depends on. In these cases, the compiler relies on classpath snapshots. These snapshots capture the Application Binary Interface (ABI) of a library. By comparing the current snapshot to the previous one, the compiler can detect changes in dependencies and figure out which files in your module are affected. This adds an extra layer of filtering on top of standard compilation avoidance.

In Buck2, we added a dedicated action to generate classpath snapshots from library outputs. This artifact is then passed as an input to the consuming module, right alongside the library’s compiled output. The best part? Since it’s a separate action, it can be run remotely or be pulled from cache, so your machine doesn’t have to do the heavy lifting of extracting ABI at this step.

And if only your module has changed while its dependencies have not, the API lets you skip the snapshot comparison entirely, provided your build tool handles the dependency analysis on its own. Since we already had the necessary data from Buck2’s incremental actions, adding this optimization was almost free.
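The snapshot comparison described in this section can be modeled in a few lines. This is a simplified illustration, not the compiler's snapshot format: it assumes each class's ABI is a list of public signature strings, and that we know which dependency classes each source file references.

```python
import hashlib


def snapshot(library_abi: dict[str, list[str]]) -> dict[str, str]:
    """Map each class name to a digest of its sorted public signatures."""
    return {
        cls: hashlib.sha256("\n".join(sorted(sigs)).encode()).hexdigest()
        for cls, sigs in library_abi.items()
    }


def changed_classes(prev: dict[str, str], curr: dict[str, str]) -> set[str]:
    """Classes that were added, removed, or whose ABI digest differs."""
    return {c for c in prev.keys() | curr.keys() if prev.get(c) != curr.get(c)}


def affected_sources(changed: set[str], usages: dict[str, set[str]]) -> set[str]:
    """Source files that reference at least one changed dependency class."""
    return {src for src, deps in usages.items() if deps & changed}
```

Because only public signatures feed the digest, a change to a method body in a dependency leaves the snapshot untouched and triggers no recompilation in this model.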

Step 5: Making compiler plugins work with the incremental compiler

One of the biggest challenges we faced when integrating the incremental compiler was making it play nicely with our custom compiler plugins, many of which are important to our build optimization strategy. This step was necessary for unlocking the full performance benefits of incremental compilation, but it came with two major issues we needed to solve.

🚨 Problem 1: Incomplete results

As we already know, the input to the incremental compiler does not have to include all Kotlin source files. Our plugins weren’t designed for this and ended up producing incomplete results when run on just a subset of files. We had to make them incremental as well so they could handle partial inputs correctly.

🚨 Problem 2: Multiple rounds of compilation

The Kotlin incremental compiler doesn’t just recompile the files that changed in a module. It may also need to recompile other files in the same module that are affected by those changes. Figuring out the exact set of affected files is tricky, especially when circular dependencies come into play. To handle this, the incremental compiler approximates the affected set by compiling in multiple rounds within a single build.
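A toy model of the round-based approach is sketched below. It is deliberately simplified and is not the Kotlin compiler's algorithm: it assumes we already know which symbols change when a file is recompiled (`changed_api`) and which symbols each file references (`references`), whereas the real compiler discovers this as it goes.

```python
def compile_rounds(
    initial: set[str],
    changed_api: dict[str, set[str]],
    references: dict[str, set[str]],
) -> list[list[str]]:
    """Return the files compiled in each round until nothing new is affected."""
    compiled: set[str] = set()
    dirty = set(initial)
    rounds = []
    while dirty:
        rounds.append(sorted(dirty))
        compiled |= dirty
        # Symbols whose signatures changed as a result of this round.
        changed_symbols: set[str] = set()
        for source in dirty:
            changed_symbols |= changed_api.get(source, set())
        # Next round: files that use those symbols and have not been compiled.
        dirty = {
            source
            for source, refs in references.items()
            if refs & changed_symbols
        } - compiled
    return rounds
```

Tracking the already-compiled set is what keeps circular references from looping forever in this sketch.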

💡 Curious how that works under the hood? The Kotlin blog on fast compilation has a great deep dive that’s worth checking out.

This behavior comes with a side effect, though. Since the compiler may run in multiple rounds with different sets of files, compiler plugins can also be triggered multiple times, each time with a different input. That can be problematic, as later plugin runs may override outputs produced by earlier ones. To avoid this, we updated our plugins to accumulate their results across rounds rather than replacing them.

Step 6: Verifying the functionality of annotation processors

Most of our annotation processors use Kotlin Symbol Processing (KSP2), which made this step pretty smooth. KSP2 is designed as a standalone tool that uses the Kotlin Analysis API to analyze source code. Unlike compiler plugins, it runs independently from the standard compilation flow. Thanks to this setup, we were able to continue using KSP2 without any changes.

💡 Bonus: KSP2 comes with its own built-in incremental processing support. It’s fully self-contained and doesn’t depend on the incremental compiler at all.

Before we adopted KSP2 (or when we were using an older version of the Kotlin Annotation Processing Tool (KAPT), which operates as a plugin), our annotation processors ran in a separate step dedicated solely to annotation processing. That step ran before the main compilation and was always non-incremental.

Step 7: Enabling compilation against ABI

To maximize cache hits, Buck2 builds Android modules against the class ABI instead of the full JAR. For Kotlin targets, we use the jvm-abi-gen compiler plugin to generate class ABI during compilation.

But once we turned on incremental compilation, a couple of new challenges popped up:

- The jvm-abi-gen plugin currently lacks direct support for incremental compilation, which ties back to the issues we mentioned earlier with compiler plugins.

- ABI extraction now happens twice – once during compilation via jvm-abi-gen, and again when the incremental compiler creates classpath snapshots.

In theory, both problems could be solved by switching to full JAR compilation and relying on classpath snapshots to maintain cache hits. While that could work in principle, it would mean giving up some of the build optimizations we’ve already got in place – a trade-off that needs careful evaluation before making any changes.

For now, we’ve implemented a custom (yet suboptimal) solution that merges the newly generated ABI with the previous result. It gets the job done, but we’re still actively exploring better long-term alternatives.

Ideally, we’d be able to reuse the information already collected for classpath snapshots or, even better, have this kind of support built directly into the Kotlin compiler. There’s an open ticket for that: KT-62881. Fingers crossed!

Step 8: Testing

Measuring the impact of build changes is not an easy task. Benchmarking is great for getting a sense of a feature’s potential, but it doesn’t always reflect how things perform in “the real world.” Pre/post testing can help with that, but it’s tough to isolate the impact of a single change, especially when you’re not the only one pushing code.

We set up A/B testing to overcome these obstacles and measure the true impact of the Kotlin incremental compiler on Meta’s codebase with high confidence. It took a bit of extra work to keep the cache healthy across variants, but it gave us a clean, isolated view of how much difference the incremental compiler really made at scale.

We started with the largest modules – the ones we already knew were slowing builds the most. Given their size and known impact, we expected to see benefits quickly. And sure enough, we did.

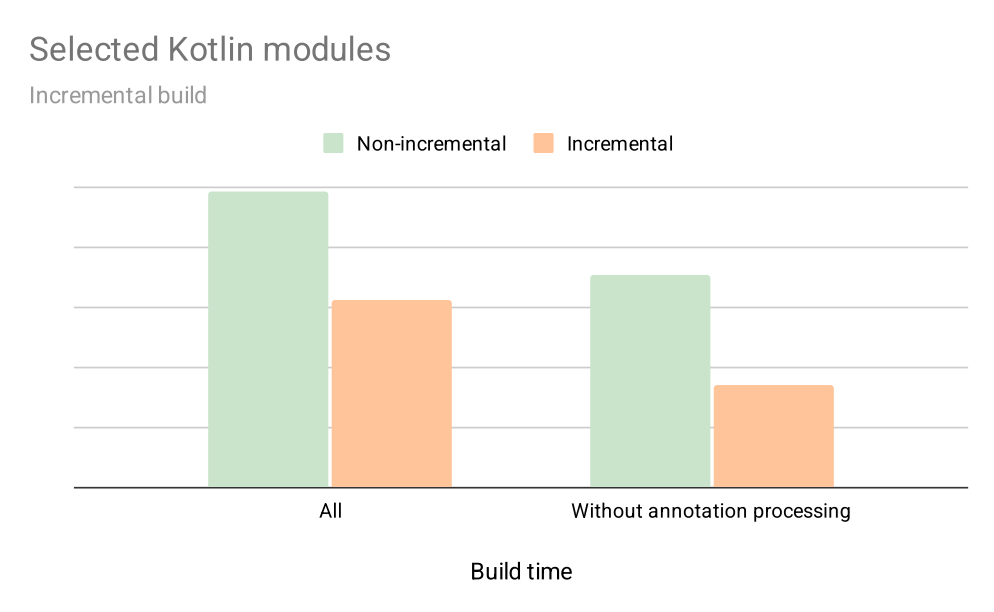

The impact of incremental compilation

The graph below shows early results on how enabling incremental compilation for selected targets impacts their local build times during incremental builds over a 4-week period. This includes not just compilation, but also annotation processing and a few other optimizations we’ve added along the way.

With incremental compilation, we’ve seen about a 30% improvement for the average developer. And for modules without annotation processing, the speed nearly doubled. That was more than enough to convince us that the incremental compiler is here to stay.

What’s next

Kotlin incremental compilation is now supported in Buck2, and we’re actively rolling it out across our codebase! For now, it’s available for internal use only, but we’re working on bringing it to the recently introduced open source toolchain as well.

But that’s not all! We’re also exploring ways to expand incrementality across the entire Android toolchain, including tools like Kosabi (the Kotlin counterpart to Jasabi), to deliver even faster build times and even better developer experience.

To learn more about Meta Open Source, visit our open source site, subscribe to our YouTube channel, or follow us on Facebook, Threads, X and LinkedIn.

A decade of database innovation: The Amazon Aurora story

When Andy Jassy, then head of Amazon Web Services, announced Amazon Aurora in 2014, the pitch was bold but metered: Aurora would be a relational database built for the cloud. As such, it would provide access to cost-effective, fast, and scalable computing infrastructure.

In essence, he explained, Aurora would combine the cost effectiveness and simplicity of MySQL with the speed and availability of high-end commercial databases, the kind that firms typically managed on their own. In numbers, Aurora promised five times the throughput (e.g., the number of transactions, queries, read/write operations) of MySQL at one-tenth the price of commercial database solutions, all while offloading costly management challenges and maintaining performance and availability.

AWS re:Invent 2014 | Announcing Amazon Aurora for RDS

Aurora launched a year later, in 2015. Significantly, it decoupled computation from storage, a distinct contrast to traditional database architectures where the two are entwined. This fundamental innovation, along with automated backups and replication and other improvements, enabled easy scaling for both computational tasks and storage, while meeting reliability demands.

“Aurora’s design preserves the core transactional consistency strengths of relational databases. It innovates at the storage layer to create a database built for the cloud that can support modern workloads without sacrificing performance,” explained Werner Vogels, Amazon’s CTO, in 2019.

“To start addressing the limitations of relational databases, we reconceptualized the stack by decomposing the system into its fundamental building blocks,” Vogels said. “We recognized that the caching and logging layers were ripe for innovation. We could move these layers into a purpose-built, scale-out, self-healing, multitenant, database-optimized storage service. When we began building the distributed storage system, Amazon Aurora was born.”

Within two years, Aurora became the fastest-growing service in AWS history. Tens of thousands of customers — including financial-services companies, gaming companies, healthcare providers, educational institutions, and startups — turned to Aurora to help carry their workloads.

In the intervening years, Aurora has continued to evolve to suit the needs of a changing digital landscape. Most recently, in 2024, Amazon announced Aurora DSQL. A major step forward, Aurora DSQL is a serverless approach designed for global scale and enhanced adaptability to variable workloads.

Today, International Data Corporation (IDC) research estimates that firms using Aurora see a three-year return on investment of 434 percent and an operational cost reduction of 42 percent compared to other database solutions.

But what lies behind those figures? How did Aurora become so valuable to its users? To understand that, it’s useful to consider what came before.

A time for reinvention

In 2015, as cloud computing was gaining popularity, legacy firms began migrating workloads away from on-premises data centers to save money on capital investments and in-house maintenance. At the same time, mobile and web app startups were calling for remote, highly reliable databases that could scale in an instant. The theme was clear: computing and storage needed to be elastic and reliable. The reality was that, at the time, most databases simply hadn’t adapted to those needs.

That rigidity makes sense considering the origin of databases and the problems they were invented to solve. The 1960s saw one of their earliest uses: NASA engineers had to navigate a complex list of parts, components, and systems as they built spacecraft for moon exploration. That need inspired the creation of the Information Management System, or IMS, a hierarchically structured solution that allowed engineers to more easily locate relevant information, such as the sizes or compatibilities of various parts and components. While IMS was a boon at the time, it was also limited. Finding parts meant engineers had to write batches of specially coded queries that would then move through a tree-like data structure, a relatively slow and specialized process.

In 1970, the idea of relational databases made its public debut when E. F. Codd coined the term. Relational databases organized data according to how it was related: customers and their purchases, for instance, or students in a class. Relational databases meant faster search, since data was stored in structured tables, and queries didn’t require special coding knowledge. With programming languages like SQL, relational databases became a dominant model for storing and retrieving structured data.

By the 1990s, however, that approach began to show its limits. Firms that needed more computing capabilities typically had to buy and physically install more on-premises servers. They also needed specialists to manage new capabilities, such as the influx of transactional workloads — as, for instance, when increasing numbers of customers added more and more pet supplies to virtual shopping carts. By the time AWS arrived in 2006, these legacy databases were the most brittle, least elastic component of a company’s IT stack.

The emergence of cloud computing promised a better way forward with more flexibility and remotely managed solutions. Amazon engineers recognized that the cloud could enable virtually unlimited, networked storage and, separately, computation.

The Amazon Relational Database Service (Amazon RDS) debuted in 2009 to help customers set up, operate, and scale a MySQL database in the cloud. And while that service expanded to include Oracle, SQL Server, and PostgreSQL, as Jeff Barr noted in a 2014 blog post, those database engines “were designed to function in a constrained and somewhat simplistic hardware environment.”

AWS researchers challenged themselves to examine those constraints and “quickly realized that they had a unique opportunity to create an efficient, integrated design that encompassed the storage, network, compute, system software, and database software”.

“The central constraint in high-throughput data processing has moved from compute and storage to the network,” wrote the authors of a SIGMOD 2017 paper describing Aurora’s architecture. Aurora researchers addressed that constraint via “a novel, service-oriented architecture”, one that offered significant advantages over traditional approaches. These included “building storage as an independent fault-tolerant and self-healing service across multiple data centers … protecting databases from performance variance and transient or permanent failures at either the networking or storage tiers.”

The serverless era is now

In the years since its debut, Amazon engineers and researchers have ensured Aurora has kept pace with customer needs. In 2018, Aurora Serverless provided an on-demand autoscaling configuration that allowed customers to adjust computational capacity up and down based on their needs. Later versions further optimized that process by automatically scaling based on customer needs. That approach relieves the customer of the need to explicitly manage database capacity; customers need to specify only minimum and maximum levels.

Achieving that sort of “resource elasticity at high levels of efficiency” meant Aurora Serverless had to address several challenges, wrote the authors of a VLDB 2024 paper. “These included policy issues such as how to define ‘heat’ (i.e., resource usage features on which to base decision making)” and how to determine whether remedial action may be required. Aurora Serverless meets those challenges, the authors noted, by adapting and modifying “well-established ideas related to resource oversubscription; reactive control informed by recent measurements; distributed and hierarchical decision making; and innovations in the DB engine, OS, and hypervisor for efficiency.”
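To give a feel for "reactive control informed by recent measurements" within customer-specified limits, here is a generic sketch of a proportional scaling step. It is not Aurora's actual policy; the function name, the 0.7 target utilization, and the capacity units are all assumptions made for illustration.

```python
def next_capacity(
    current: float,
    recent_utilization: list[float],
    min_capacity: float,
    max_capacity: float,
    target: float = 0.7,
) -> float:
    """Pick the next capacity from recent utilization, clamped to the limits.

    `recent_utilization` holds fractions of current capacity in use
    (for example 0.9 for 90 percent) over a recent window.
    """
    average = sum(recent_utilization) / len(recent_utilization)
    # Scale so that the observed load would sit at the target utilization.
    desired = current * average / target
    return max(min_capacity, min(max_capacity, desired))
```

The clamp at the end reflects the only knobs the customer sets: the minimum and maximum levels.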

As of May 2025, all of Aurora’s offerings are now serverless. Customers no longer need to choose a specific server type or size or worry about the underlying hardware or operating system, patching, or backups; all that is completely managed by AWS. “One of the things that we’ve tried to design from the beginning is a database where you don’t have to worry about the internals,” Marc Brooker, AWS vice president and Distinguished Engineer, said at AWS re:Invent in 2024.

These are exactly the capabilities that Arizona State University needs, says John Rome, deputy chief information officer at ASU. Each fall, the university’s data needs explode when classes for its more than 73,000 students are in session across multiple campuses. Aurora lets ASU pay for the computation and storage it uses and helps it to adapt on the fly.

“We see Amazon Aurora Serverless as a next step in our cloud maturity,” Rome says, “to help us improve development agility while reducing costs on infrequently used systems, to further optimize our overall infrastructure operations.”

And what might the next step in maturity look like for the now 10-year-old Aurora service? The authors of that 2024 paper outlined several potential paths. Those include “introducing predictive techniques for live migration”; “exploiting statistical multiplexing opportunities stemming from complementary resource needs”; and “using sophisticated ML/RL-based techniques for workload prediction and decision making.”
