AI Insights

Jim Cramer reflects on Nvidia’s impact after record-breaking market cap

CNBC’s Jim Cramer on Wednesday reflected on the significance of one of his longtime favorite stocks, Nvidia, just after the artificial intelligence powerhouse became the first company to reach a $4 trillion market cap during trading.

“The fact is, neither Microsoft nor Apple can claim that they’re currently creating a new industrial revolution, like Nvidia can,” he said. “In fairness, they did create the last industrial revolution, the rise of the personal computer, although that was a long time ago.”

Nvidia topped $4 trillion for the first time during the day’s session before closing up 1.8% at a market cap of $3.97 trillion. The chipmaker is the world’s most valuable company, larger than competitors Microsoft and Apple, both of which have previously held that title. Nvidia surpassed $2 trillion in February of last year and climbed above $3 trillion four months later.

The tech giant has exploded over the past few years as Wall Street and the enterprise fixate on generative AI. Nvidia’s products are broadly seen as best in class, and Big Tech hyperscalers have been clamoring for them as they compete in the AI arms race and seek to benefit from the most advanced AI models.

Cramer remarked on AI’s transformative potential, suggesting that “every single computer with a GPU that’s not as good as Nvidia’s is obsolete.” He also said Nvidia’s technology will change the way businesses operate because it enables humanoid robots and self-driving cars. These robots can perform mundane or dangerous jobs, and they could even replace a number of white-collar workers, Cramer continued.

Nvidia is also a valuable player for the U.S. in its trade relationship with China, Cramer added. The company sometimes seems to be the U.S.’s “only bargaining chip” with China, he said, because China wants Nvidia’s products. Even though China is one of the U.S.’s top manufacturing suppliers, Cramer thinks Nvidia is a bigger bargaining chip than anything China has.

“Bottom line? Nvidia, own it, don’t trade it,” he said. “Oh, and see you at $5 trillion.”

Nvidia declined to comment.

Disclaimer: The CNBC Investing Club Charitable Trust owns shares of Nvidia.


BCCC integrates artificial intelligence lessons into workforce programs – The Coastland Times


Published 2:32 pm Wednesday, September 3, 2025

[AI-generated image, courtesy BCCC] Understanding the impacts and potential of artificial intelligence (AI) will help students enter the workforce with relevant skills that keep pace with the industry, states Beaufort County Community College.

Beaufort County Community College (Beaufort CCC) is introducing artificial intelligence (AI) lessons into its heating and air technician and construction and building maintenance courses, making it one of the first community colleges in North Carolina to embed this technology into short-term workforce training, according to a BCCC news release.

The initiative is starting with courses in its heating and air technician program (HVAC I, II, and III) and construction and building maintenance program (Apartment Maintenance and Electrical Fundamentals). By weaving AI-related topics into these fields, Beaufort CCC is preparing students to work in an industry landscape where smart technologies and automated systems are becoming increasingly standard. Over the next two years, the college will integrate AI lessons into all its industry training and workforce initiatives in the Division of Continuing Education.

“AI can not only augment our abilities and professionals but inspire in our students the hope to achieve greater things both using AI as an advanced tool and a way to continually develop their skills to the next level,” said Justin Rose, director of industry training and workforce initiatives. “We want our students to excel in their trade and be competitive in emerging technology so they can be a leader in their field.”

The lessons cover practical applications such as AI-assisted fault detection in HVAC systems, predictive maintenance in electrical networks, and smart building management technologies. These additions align with growing industry demand for technicians who can work confidently with both traditional systems and emerging digital tools.

Workforce programs at Beaufort CCC are designed to meet immediate regional employment needs, and adding AI awareness ensures graduates can step into careers with relevant, forward-looking skills. Students will earn Microsoft badges and trophies alongside their industry-recognized credentials and a Beaufort CCC certificate of completion.

“This approach keeps our programs current and ensures that our graduates are employable and adaptable,” said Dr. Stacey Gerard, vice president of continuing education. “Employers want workers who can think ahead, and AI knowledge helps them do exactly that.”

The addition of AI lessons underscores Beaufort CCC’s commitment to innovation and its role as a regional leader in workforce education. The Division of Continuing Education also offers introductory workshops including AI – Introduction to Artificial Intelligence, AI – Google Workspace: Resources for your Workplace, and Harnessing Canva – Advanced AI Features.

SUBSCRIBE TO THE COASTLAND TIMES TODAY!

AI Insights

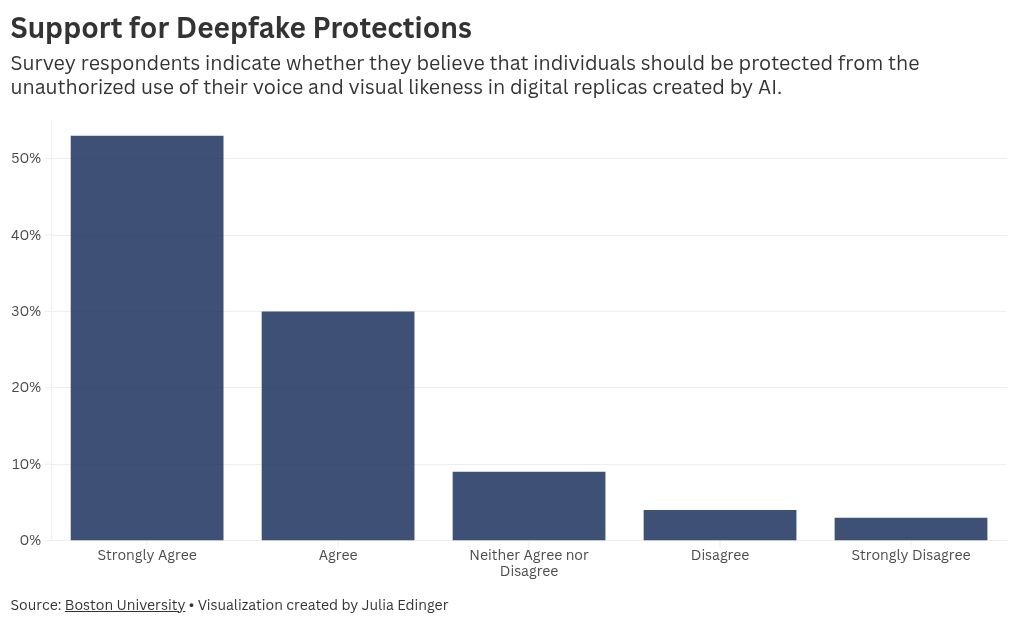

4 in 5 People Want Protection Against AI Deepfakes

A significant and bipartisan majority of people in the U.S. support protections against deepfakes, according to a survey out of Boston University.

Deepfakes, or AI-generated images that misrepresent reality, are becoming increasingly common, posing new security and fraud risks. Many state lawmakers have explored measures to protect against these and other risks. In May, the TAKE IT DOWN Act became federal law, making it a crime to share nonconsensual sexual deepfake images, but experts say it addresses only a “drop in the bucket” of the risks.

More than 4 in 5 respondents (84 percent) agreed or strongly agreed that individuals should be protected from unauthorized use of their voice and likeness, according to the survey. That support is bipartisan: 84 percent of Republicans and 90 percent of Democrats back such protections.

“In this confusing environment, one principle has strong bipartisan support: the public overwhelmingly agrees that everyone’s voice and image should be protected from unauthorized AI-generated recreations,” Michelle Amazeen, associate dean of research at Boston University’s College of Communication and director of its Communication Research Center (CRC), said in a statement. She pointed to the rapid spread of disinformation fueled by social media platforms scaling back content moderation.

The survey also revealed significant support for labeling and watermarking AI-generated content on social platforms, with 84 percent of people in favor. A majority of respondents also support social media platforms removing unauthorized deepfakes and providing a transparent appeals process for contesting them. Three in four respondents support giving individuals the right to license their voice and visual likeness, enhancing control over the use of a person’s digital identity.

The survey was designed by the CRC at Boston University’s College of Communication and conducted by Ipsos.

The TAKE IT DOWN Act received bipartisan support, but some lawmakers want to enact further protections, as demonstrated by the introduction of the NO FAKES Act this spring to protect individuals’ digital identity against the unauthorized use of their likeness.

However, while the U.S. Congress has been slow to act on AI-related safeguards for individuals, President Donald Trump’s AI Action Plan takes aim at states’ autonomy to enact their own regulations protecting people from AI risks: states that do so may risk losing federal funding.

This is not the first time the public has shown significant bipartisan support for specific policies to safeguard people from the risks of AI technologies, ranging from deepfake regulation to a ban on autonomous weapons.

Carnival Cruise Line CIO’s measured approach to navigating Gen AI

Carnival Cruise Line has piloted over 100 different generative artificial intelligence projects. And while only six are in full production today, the cruise line operator’s chief information officer is pleased with the company’s measured progress.

“We have limited, for the time being, the number of tools that are available,” says Sean Kenny, who has served as CIO since 2017. “What we wanted to do was not have science experiments everywhere.”

Kenny says that Carnival Cruise Line established an AI governing body that meets monthly to determine which use cases should get capital funding, track progress during the piloting phase, and sign off on when an application of generative AI is ready for broader adoption.

Carnival Cruise Line’s generative AI adoption strategy is run independently of the parent company’s other brands, which include Holland America and Costa Cruises. Each brand has been empowered to chart its own technology roadmap.

In his role as CIO, Kenny says part of his job is to bring employees along on the adoption journey, promoting training courses offered by AI providers such as Microsoft, as well as corporate hackathons. He says one-on-one coaching is more effective than group sessions.

“Nobody wants to look dumb, especially if your boss is sitting at the table with you,” says Kenny, of the hesitancy he’s observed in AI group training sessions. Carnival’s employees can also reach out to a centralized AI team to ask questions if they get stuck while trying to use these relatively new AI tools.

One generative AI use case that Carnival has deployed is a tool that helps personal vacation planners, the company’s travel agents, answer guest questions. Another application that Kenny is excited about is a tool that helps servers recommend the perfect red wine to sip with steak. With the latter, Carnival hopes to see an increase in its net promoter score, a closely watched customer satisfaction metric that cruise lines, restaurants, retailers, and other consumer-focused companies track.

Kenny says he’s tapped the expertise of big tech giants like Google, Microsoft, and IBM for Carnival’s AI journey, but is also keeping a close eye on emerging players, especially in the cybersecurity arena. “We’re trying to be open minded to the startups,” he says. “I think it’s important for business and IT leaders to be open to the new players.”

Cruise line operators are enjoying a surge in popularity after the pandemic badly battered the industry (Carnival itself reported an all-time high of $25 billion in annual revenue in 2024), and investments in AI and other technologies could make travel by ship even more desirable.

Kenny says the technology will be especially powerful when it comes to personalization. A guest that books a Jamaican jerk chicken cooking excursion class may also be interested in a wine tasting on the boat. “In terms of the guest experience, it shows us trying to connect the dots,” says Kenny, who also cautions that Carnival doesn’t want to go overboard and be too pushy.

Kenny is closely monitoring other emerging technologies, including an ongoing test of a robotic tool that can comb through leftover food and remove foreign materials like plastic, glass, and wood. This allows Carnival to crush leftovers and pulverize them into literal fish food that can be dumped into the sea. Previously, this work was done entirely by hand.

Kenny is also excited about the prospect of using augmented reality and virtual reality technologies to improve training and to monitor and adjust the settings of the large diesel engines, which are so loud that they make in-person work extremely unpleasant. There aren’t any solutions available on the market that Carnival thinks are ready for adoption, but the company is keeping a close eye on progress from vendors.

Further along are the company’s WiFi capabilities. A decade ago, Kenny says, most travelers expected any hotel they checked into to offer internet access, even if they had to pay extra for it, but that expectation of connectivity wasn’t always met on a cruise ship. That has been changing, and Kenny has largely solved the issue by using SpaceX’s Starlink and other providers to deliver far steadier internet access at sea.

Better WiFi has helped Carnival upsell, giving travelers more access to an app where they can book excursions such as snorkeling or visiting a historic cultural site while the ship is in port. Excursions are an extra revenue source for cruise operators like Carnival, and AI-enabled travel recommendations, Kenny says, could make these excursions even more alluring.

“I don’t need my tool to go into the world wide web to pick out data and present it to you,” he says. “We can rely on our own terabytes of data.”

John Kell

Send thoughts or suggestions to CIO Intelligence.

NEWS PACKETS

The AI PC market is rattled by tariffs. Demand for AI PCs in 2025 is expected to be softer than initially projected: Gartner now says 78 million devices will ship this year, a more conservative estimate than the 114 million units the research firm forecast last year, according to CIO Dive. Tariffs and slower economic growth have reportedly weighed on AI PC adoption, though Gartner says that by 2029 these devices will be the norm among hardware providers including HP and Dell. Gartner says AI PCs will also reshape the software landscape, with 40% of software vendors investing in AI features that run directly on PCs by the end of 2026, up from just 2% in 2024.

Anthropic’s new funding round nearly triples AI startup’s valuation. Bloomberg reports that Anthropic has closed a deal to raise an additional $13 billion from investors, one of the largest ever for an AI company, at a valuation of $183 billion, up from the $61.5 billion valuation it fetched in March. The latest financing was led by investment firm Iconiq Capital, with other participants including Fidelity Management and Research Co., Lightspeed Venture Partners, and Qatar Investment Authority. Bloomberg says the total was higher than initially expected, due to strong investor demand. Anthropic, which was founded in 2021 by former employees of rival OpenAI, said that the latest funding round reflected the company’s “unprecedented velocity and reinforces our position as the leading intelligence platform for enterprises, developers, and power users.”

Nvidia’s monster 2Q comes with some concerns. Fortune reports that Nvidia’s quarterly results were “by far the biggest event of this earnings season,” as the AI chip maker posted second-quarter revenue and profits that bested Wall Street’s sky-high expectations. But some analysts are worried by Nvidia’s disclosure that 44% of revenue from chip sales to data centers came from just two customers, presumed to be Microsoft and Meta Platforms. There are also growing fears of rising domestic competition in China, where one rival, semiconductor firm Cambricon, reported that revenue surged 4,300% in the first half of the year. Nvidia CEO Jensen Huang touted a $50 billion AI market opportunity in China, but also warned that getting approval to sell its newest GPU there will take time.

ADOPTION CURVE

AI coding tools proliferate, but over-reliance is a big worry. AI coding assistants like GitHub Copilot, Cursor, and Windsurf continue to see strong adoption among developers, with a recent survey finding that 78% of developers rely on these tools every day and that two-thirds of respondents expect their organization’s use of AI coding to “increase significantly” over the next 12 months. The survey of 300 technology decision-makers across North America, Europe, and Asia-Pacific, which was backed by Australian software maker Canva, also found that 95% of technologists were comfortable with candidates using AI during technical interviews.

The study did find some lingering concerns. One in three respondents said over-reliance on AI without developer accountability was their top worry, while one in five feared that junior engineers’ development may be stunted. CIOs and CTOs generally agree that AI coding tools will dramatically change developer workflows, tilting them toward editing code rather than writing it. In line with that, Canva’s survey found that 93% of respondents say AI-generated code is “always or often” reviewed.

JOBS RADAR

Hiring:

– State Street is seeking a chief data and AI officer, based in Boston. Posted salary range: $300K-$412.5K/year.

– AbsenceSoft is seeking a chief technical officer, a remote-based role. Posted salary range: $275K-$390K/year.

– Chanel is seeking a head of technology, based in New York City. Posted salary range: $248.6K-$300K/year.

– Starburst is seeking a chief information security officer, a remote-based role. Posted salary range: $250K-$300K/year.

Hired:

– Lululemon (No. 401 on the Fortune 500) announced the appointment of Ranju Das as chief AI and technology officer, effective September 2, and also disclosed that CIO Julie Averill will depart the apparel maker to pursue other opportunities. Das will report to CEO Calvin McDonald and joins Lululemon after previously serving as CEO and founder of Seattle-based startup Swan AI Studios. He also served as CEO of OptumLabs, the research and development arm of UnitedHealth Group.

– Mandarin Oriental Hotel Group appointed Raphael Bick as CIO, joining the luxury hotel operator to oversee technology and AI. Bick joins from McKinsey, where he spent nearly 15 years in leadership roles, including most recently leading the consulting firm’s technology practice in Asia.

– Renault Group promoted Philippe Brunet to the role of CTO, overseeing engineering for both the French auto manufacturer and its electric vehicle arm Ampere. Brunet has worked at Renault for his entire career and has held a wide variety of leadership roles, including as SVP of powertrain engineering and as VP of software and tuning powertrain engineering.

– ArkeaBio announced the appointment of Zach Serber as CTO, joining the agricultural bioscience company to oversee the company’s scientific efforts to develop a vaccine to reduce methane emissions from cattle. Serber previously served as a founder and chief science officer at biotechnology company Zymergen and was chief operating officer at biotech startup Evozyne.

– Fetch has appointed Ori Schnaps as CTO, joining the mobile shopping rewards app to oversee product development and the application of AI to increase personalization. Schnaps most recently served as head of core product engineering at social media site Reddit. Before that, he held leadership roles at Meta and served as a co-founder of Thrive, a personal finance startup that was acquired in 2016.

– AI Proteins promoted James Bowman to serve as the biotech company’s first-ever CTO, where he will integrate machine learning, robotics, and large-scale data analysis to create novel protein therapeutics. Bowman previously served as director of protein engineering and initially joined AI Proteins in 2021. Prior to that, he was a postdoctoral fellow at the Institute for Protein Innovation, Boston Children’s Hospital and Harvard Medical School.

– Ardent Mills appointed Ryan Kelley as CIO, joining the flour-milling and ingredient company after most recently serving as CIO at oil-and-gas exploration and production company Par Pacific Holdings. Kelley previously served as CIO and chief procurement officer at oil refinery operator Motiva Enterprises, and as director of supply chain management at energy company Andeavor.

– Amyris announced that Sunil Chandran has returned to the biotechnology company as CTO following a two-year tenure as chief science officer at plant-based meat producer Impossible Foods. Chandran previously spent 17 years at Amyris, rising through the ranks from scientist to head of research and development.
