In the mid-20th century, the Space Race kicked off; in the mid-2020s, we’re very much in the middle of the AI race. Nobody is sitting still, with parties all around the globe pushing for the next big advancement.

Chinese scientists now claim to have made one of their own. As reported by The Independent, SpikingBrain1.0 is a new large language model (LLM) out of China, which ordinarily might not be so exciting. But this isn’t supposed to be an ordinary LLM: SpikingBrain1.0 is reported to be as much as 100x faster than current models such as those behind ChatGPT and Copilot.

That is all down to the way the model operates, which is something completely new. It’s being touted as the first “brain-like” LLM, but what does that actually mean? First, a little background on how current LLMs work.

Bear with me on this one; hopefully I can explain it as simply as possible. Essentially, current LLMs look at all of the words in a sentence at once. They look for patterns and relationships between words, whatever their position in the sentence.

They do this with a mechanism known as attention. Take a sentence such as this:

“The baseball player swung the bat and hit a home run.”

You, as a human, read that sentence and instantly know what it means, because your brain immediately associates “baseball” with the words that come after it. But to an LLM, the word “bat” on its own could mean either a baseball bat or the animal. Without examining the rest of the sentence, it wouldn’t be able to tell the two apart.

Attention in an LLM looks at the whole sentence and maps out the relationships between its words. It uses other terms, such as “swung” and “baseball player,” to work out the correct meaning of “bat” and make better predictions.
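To make the idea concrete, here is a minimal sketch of the scoring step in scaled dot-product attention, the standard form of attention in transformer models, written in plain Python. The three-number word vectors are made up purely for illustration (real models learn far larger ones), and the learned query, key, and value projections a real model applies are left out, so each word’s vector is used directly. It scores “bat” against every word in the sentence and turns those scores into weights that sum to 1.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product scoring: compare one query vector with every key."""
    dim = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in keys
    ]
    return softmax(scores)


# Hypothetical 3-dimensional "embeddings", invented for illustration only.
# Real models learn vectors with thousands of dimensions.
embeddings = {
    "the":      [0.1, 0.1, 0.1],
    "baseball": [0.9, 0.1, 0.0],
    "player":   [0.7, 0.2, 0.1],
    "swung":    [0.8, 0.0, 0.2],
    "bat":      [0.8, 0.1, 0.1],
}

sentence = ["the", "baseball", "player", "swung", "the", "bat"]
keys = [embeddings[w] for w in sentence]

# "bat" is compared with every word in the sentence, including itself.
weights = attention_weights(embeddings["bat"], keys)
for word, w in zip(sentence, weights):
    print(f"{word:>8}: {w:.3f}")
```

With these toy vectors, “baseball” gets the largest weight and “the” the smallest, which is the kind of signal a model would use to decide which “bat” is meant.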

This ties back to the LLM’s training data, from which it will have learned that “baseball” and “bat” often go together.

Examining whole sentences at once takes resources, though, and the larger the input, the more resources it takes. Every word is compared to every other word, so doubling the length of the input quadruples the number of comparisons. This, in part, is why current LLMs generally need massive amounts of computing power.

SpikingBrain1.0 claims to mimic the human brain’s approach, focusing only on nearby words, much as we do when working out a sentence’s context. A brain fires only the nerve cells it needs; it doesn’t run at full power all of the time.
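A rough way to see why looking only at nearby words saves work is to count comparisons. This sketch contrasts full attention, where each of n words is scored against all n words, with a simple local (sliding-window) scheme in which each word only looks at neighbors within a fixed radius. The radius of 64 is an arbitrary assumption, and this shows the general local-attention idea, not SpikingBrain1.0’s actual architecture.

```python
def full_attention_pairs(n):
    """Full attention: every word is scored against every word, n * n pairs."""
    return n * n


def local_attention_pairs(n, radius):
    """Local attention: each word only looks at words within `radius` positions
    on either side (itself included), clipped at the ends of the input."""
    total = 0
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n - 1, i + radius)
        total += hi - lo + 1
    return total


for n in (1_000, 10_000, 100_000):
    full = full_attention_pairs(n)
    local = local_attention_pairs(n, radius=64)
    print(f"{n:>7} words: full={full:>14,} local={local:>11,} "
          f"ratio={full / local:,.0f}x")
```

The full count grows with the square of the input length, while the local count grows roughly in proportion to it, so the gap widens as inputs get longer.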

The net result is a more efficient process, with its creators claiming performance gains of between 25x and 100x over current LLMs. Unlike models such as ChatGPT, this model is supposed to respond selectively to inputs, reducing the resources it needs to run.
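The name SpikingBrain points to spiking neural networks, where a neuron stays silent until enough input has built up and then fires a single spike. Below is a minimal sketch of a textbook leaky integrate-and-fire neuron in plain Python. The threshold and leak values are arbitrary assumptions, and the article doesn’t describe SpikingBrain1.0’s actual spiking scheme, which may well differ.

```python
def integrate_and_fire(inputs, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron: returns 1 (spike) or 0 (silent) per step."""
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = potential * leak + x  # charge builds up, slowly leaking away
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


signal = [0.2, 0.1, 0.0, 0.9, 0.3, 0.0, 0.0, 1.2, 0.1, 0.0]
spikes = integrate_and_fire(signal)
print(spikes)
print(f"fired on {sum(spikes)} of {len(spikes)} steps")
```

For this input the neuron fires on only 2 of the 10 steps; in a spiking system, the silent steps are where downstream computation can be skipped.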

As written in the research paper:

“This enables continual pre-training with less than 2 percent of the data while achieving performance comparable to mainstream open-source models.”

Perhaps equally interesting, at least for China, is that the model has been built not to rely on GPU compute from NVIDIA hardware. It has been tested on a locally produced chip from a Chinese company, MetaX.

There is, of course, much to be considered, but on paper at least, SpikingBrain1.0 could be a logical next evolution of LLMs. Much has been made of the impact AI will have on the environment, with vast energy requirements and equally vast requirements to cool these massive data centers.

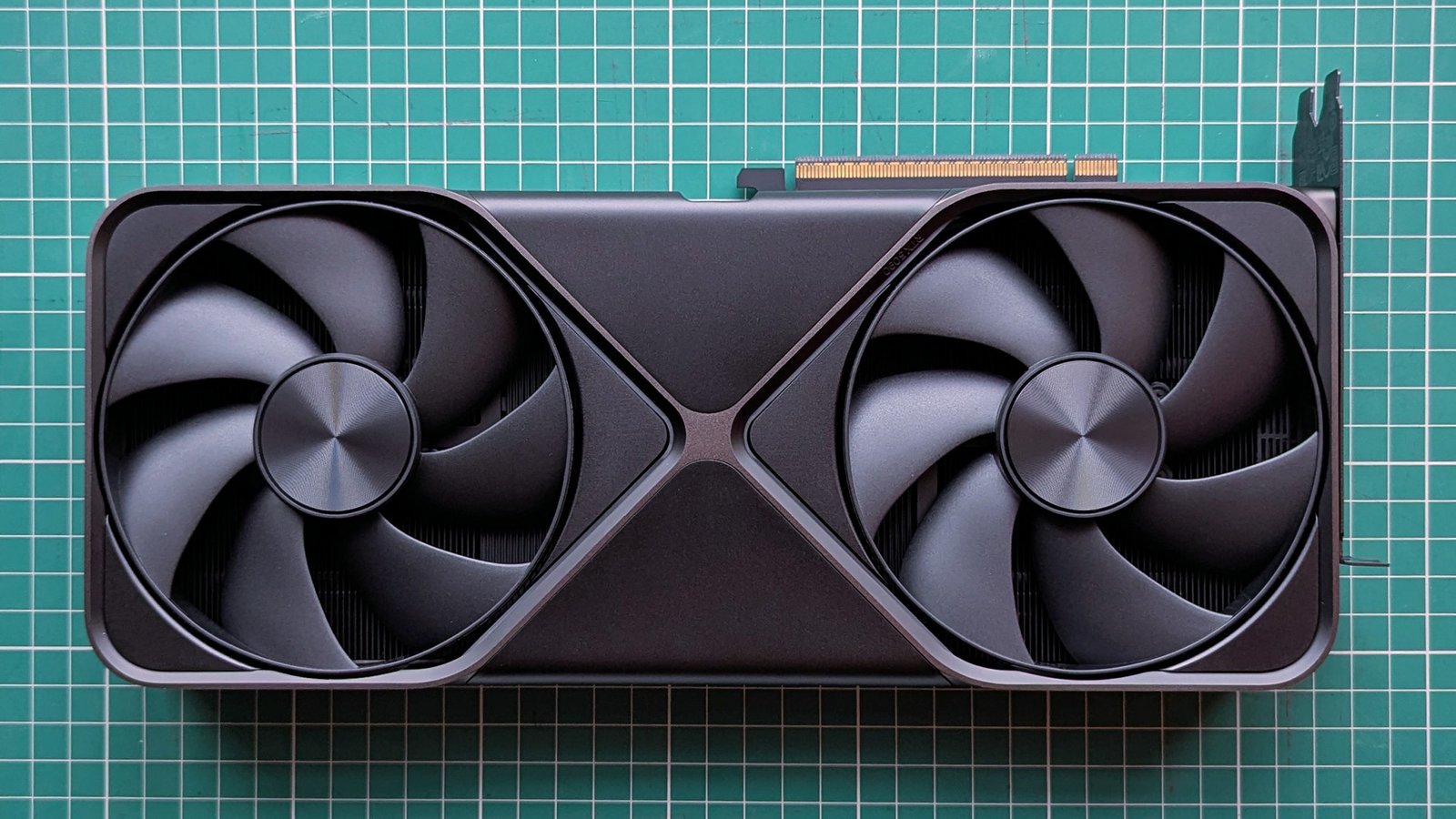

It’s bad enough running LLMs in Ollama at home with an RTX 5090. My office gets hot, and with a graphics card that can draw close to 600W, it’s hardly efficient. Scale that thought up to a data center full of GPUs.

This is an interesting development if the claims hold up. It could be the next leap forward, but only if the balance of accuracy and efficiency is there. Exciting times for sure, though.