As the world rushes to apply AI to its work practices, the use of AI is becoming apparent in both the production of research “products” for assessment (outputs, proposals, CVs) and the actual assessment of those products and their producers. This all comes at a time when the research sector is seeking to reform the way it assesses research, both to mitigate some of the problematic outcomes of publication-dominant forms of assessment (such as the rise in paper mills, authorship sales, citation cartels, and a lack of incentives to engage with open research practices) and to prioritise peer review over solely quantitative forms of assessment.

AI Research

Is there a place for AI in research assessment?

AI is reshaping research, from drafting proposals and academic CVs to automating parts of peer review and assessment. With efforts to reform research assessment in motion, Elizabeth Gadd and Nick Jennings explore how AI is both exacerbating the need for reform and offering potential for delivering reformed assessment mechanisms. They suggest that AI-augmented assessment models, where technology supports – but never replaces – human judgement, might offer a way forward.

Where assessment reform and AI tools meet

There are two main issues that arise at the intersection of assessment reform and AI. The first is the extent to which our current assessment regime is driving the misuse of Generative AI to produce highly prized outputs that look scholarly but aren’t. And the second is the extent to which AI might legitimately be used in research assessment going forward.

On the first issue, we are on well-trodden ground. The narrow, publication-dominant methods of assessment used to evaluate research and researchers are driving many poor behaviours. One such behaviour is the pursuit of questionable research practices – such as publication and citation bias. Worse again is research misconduct – such as fabrication, falsification and plagiarism. The system rewards publication in and of itself above the content and quality of the research, to the point that it is now rewarding mere approximations of publications. It should therefore come as no surprise that bad actors will be financially motivated to use any means at their disposal to produce publications, including AI.

In this case, our main problem is not AI, but rather publication-dominant research assessment. We can address this problem by broadening the range of contributions we value and taking a more qualitative approach to assessment. By doing this, we will at least disincentivise polluting the so-called “scholarly record” (curated, peer-reviewed content) with fakes and frauds.

AI in research outputs versus assessment

Assuming we were successful in disincentivising the use of AI in generating value-less publications in any reformed assessment regime, the question remains as to whether it may be incentivised for other aspects. This is because broadening how we value research and moving to more qualitative (read “narrative”) forms of assessment will lead to more work, not less, for both assessors and the assessed. And if there is one thing we know GenAI is good at, it’s generating narratives at speed. GenAI might even help to level the playing field for those for whom the assessment language is not their first, making papers clearer and easier to read. Most guidelines state that if the right safety precautions are followed – if the human retains editorial control, is transparent about their use of AI, and doesn’t enter sensitive information into a Large Language Model – it’s perfectly legitimate to submit the resulting content for assessment.

Where the guidelines are more cautious is around the use of AI to do the assessing. The European Research Area guidelines on the responsible use of AI in research are clear that we should “refrain from using GenAI tools in peer reviews and evaluations”. But that’s not to say that researchers aren’t experimenting. Mike Thelwall’s team has shown weak success in using ChatGPT to replicate human peer review scores, and many researchers believe they’ve been on the receiving end of a new, over-thorough, less aggressive Reviewer Two, which is probably an AI.

But given human peer review is already a highly contested exercise (when does Reviewer One agree with Reviewer Two?) we must ask the question: if ChatGPT can’t replicate human peer review scores, does it say more about the AI or the human? We have to question whether the human scores are the correct ones and whether we are doing machine learning a disservice by expecting it simply to replicate human scores, only faster. One might argue that the real power of AI is in seeing what we can’t see; finding patterns we cannot; and identifying potential that we cannot.

The dual value of peer review

Perhaps we must first ask, is the scholarly process itself purely about generating and (through research assessment) verifying new discoveries? Or is there something valuable in the act of discovery and verification: the acquisition and deployment of skills, knowledge, and understanding, which is fundamental to being human?

We have to ask if the process of collaborating with other humans in the pursuit of new knowledge is just about this new knowledge, or whether the business of building connections and interfacing with others is essential to human wellbeing, to civil society, and to geopolitical security.

The recognition of fellow humans – through peer review and assessment – is more than just a verification of our results and our contributions; it is something critical to our welfare and motivation: an acknowledgement that, human-to-human, I see you and I value you. Would any researcher be happy knowing their contribution had been assessed by automation alone?

It comes down to whether we value only the outcome or the process. And if we continuously outsource that process to technology, and generate outcomes that might provide answers, but that we don’t actually understand or trust, we risk losing all human connection to the research process. The skills, knowledge, and understanding we accumulate through performing assessments are surely critical to research and researcher development.

Proceeding with the right amount of caution

There is no justification for condemning AI outright. It is being used (and its accuracy then verified by humans) to solve many of society’s previously unsolved problems. However, when it comes to matters of judgement, where humans may not agree on the “right answer” – or even that there is a right answer – we need to be far more cautious about the role of AI. Research assessment is in this category.

There are many parallels between the role of metrics and the role of AI in research assessment. There is significant agreement that metrics shouldn’t be making our assessments for us without human oversight. And assessment reformers are clear that referring to appropriate indicators can often lead to a better assessment, but human judgement should take priority. This logic offers us a blueprint for approaching AI: human judgement first, and technology in support; or AI-augmented human assessment.

By forbidding the use of AI in assessment altogether, the ERA guidelines took an understandably cautious initial response. However, properly contained, the judicious involvement of AI in assessment can be our friend, not our enemy. It largely comes down to the type of research assessment we are talking about, and the role we allow AI to play. The use of AI to provide a first draft of written submissions, or to summarise, identify inconsistencies, or provide a view on the content of those submissions could lead to fairer, more robust, qualitative evaluations. However, we should not rely on AI to do the imaginative work of assessment reform and rethink what “quality” looks like, nor should we outsource human decision-making to AI altogether. As we look to reform research assessment, we should simply be open to the possibilities offered by new technologies to support human judgements.

The content generated on this blog is for information purposes only. This article gives the views and opinions of the authors and does not reflect the views and opinions of the Impact of Social Science blog (the blog), nor of the London School of Economics and Political Science.

Image Credit: Roman Samborskyi on Shutterstock

Should AI Get Legal Rights?

In one paper Eleos AI published, the nonprofit argues for evaluating AI consciousness using a “computational functionalism” approach. A similar idea was once championed by none other than Hilary Putnam, though he criticized it later in his career. The theory suggests that human minds can be thought of as specific kinds of computational systems. From there, you can then figure out if other computational systems, such as a chatbot, have indicators of sentience similar to those of a human.

Eleos AI said in the paper that “a major challenge in applying” this approach “is that it involves significant judgment calls, both in formulating the indicators and in evaluating their presence or absence in AI systems.”

Model welfare is, of course, a nascent and still evolving field. It’s got plenty of critics, including Mustafa Suleyman, the CEO of Microsoft AI, who recently published a blog about “seemingly conscious AI.”

“This is both premature, and frankly dangerous,” Suleyman wrote, referring generally to the field of model welfare research. “All of this will exacerbate delusions, create yet more dependence-related problems, prey on our psychological vulnerabilities, introduce new dimensions of polarization, complicate existing struggles for rights, and create a huge new category error for society.”

Suleyman wrote that “there is zero evidence” today that conscious AI exists. He included a link to a paper that Long coauthored in 2023 that proposed a new framework for evaluating whether an AI system has “indicator properties” of consciousness. (Suleyman did not respond to a request for comment from WIRED.)

I chatted with Long and Campbell shortly after Suleyman published his blog. They told me that, while they agreed with much of what he said, they don’t believe model welfare research should cease to exist. Rather, they argue that the harms Suleyman referenced are the exact reasons why they want to study the topic in the first place.

“When you have a big, confusing problem or question, the one way to guarantee you’re not going to solve it is to throw your hands up and be like ‘Oh wow, this is too complicated,’” Campbell says. “I think we should at least try.”

Testing Consciousness

Model welfare researchers primarily concern themselves with questions of consciousness. If we can prove that you and I are conscious, they argue, then the same logic could be applied to large language models. To be clear, neither Long nor Campbell thinks that AI is conscious today, and they also aren’t sure it ever will be. But they want to develop tests that would allow us to prove it.

“The delusions are from people who are concerned with the actual question, ‘Is this AI conscious?’ and having a scientific framework for thinking about that, I think, is just robustly good,” Long says.

But in a world where AI research can be packaged into sensational headlines and social media videos, heady philosophical questions and mind-bending experiments can easily be misconstrued. Take what happened when Anthropic published a safety report that showed Claude Opus 4 may take “harmful actions” in extreme circumstances, like blackmailing a fictional engineer to prevent it from being shut off.

Trends in patent filing for artificial intelligence-assisted medical technologies | Smart & Biggar

[co-authors: Jessica Lee, Noam Amitay and Sarah McLaughlin]

Medical technologies incorporating artificial intelligence (AI) are an emerging area of innovation with the potential to transform healthcare. Employing techniques such as machine learning, deep learning and natural language processing,[1] AI enables machine-based systems that can make predictions, recommendations or decisions that influence real or virtual environments based on a given set of objectives.[2] For example, AI-based medical systems can collect medical data, analyze medical data and assist in medical treatment, or provide informed recommendations or decisions.[3] According to the U.S. Food and Drug Administration (FDA), some key areas in which AI is applied in medical devices include:[4]

- Image acquisition and processing

- Diagnosis, prognosis, and risk assessment

- Early disease detection

- Identification of new patterns in human physiology and disease progression

- Development of personalized diagnostics

- Therapeutic treatment response monitoring

Patent filing data related to these application areas can help us see emerging trends.

Analysis strategy

We identified nine subcategories of interest:

- Image acquisition and processing

  - Medical image acquisition

  - Pre-processing of medical imaging

  - Pattern recognition and classification for image-based diagnosis

- Diagnosis, prognosis and risk management

  - Early disease detection

  - Identification of new patterns in physiology and disease

  - Development of personalized diagnostics and medicine

- Therapeutic treatment response monitoring

- Clinical workflow management

- Surgical planning/implants

We searched patent filings in each subcategory from 2001 to 2023. In the results below, the number of patent filings is based on patent families, each patent family being a collection of patent documents that cover the same technology and have at least one priority document in common.[5]
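To illustrate what counting by patent family means, here is a minimal sketch in Python. It is not the authors' search method: the document identifiers and priority numbers are hypothetical, and the function name `group_into_families` is an assumption made for illustration. It also assumes that documents linked through a chain of shared priorities belong to one family, which is how an extended family is usually understood.

```python
from collections import defaultdict


def group_into_families(priorities_by_doc):
    """Group patent documents that share at least one priority document.

    Assumption: sharing is treated transitively, so A and C fall in the same
    family if A shares a priority with B and B shares one with C.
    """
    parent = {doc: doc for doc in priorities_by_doc}

    def find(doc):
        # Follow parent links to the family representative (with path halving).
        while parent[doc] != doc:
            parent[doc] = parent[parent[doc]]
            doc = parent[doc]
        return doc

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Link every document to the first document seen with the same priority.
    first_doc_for_priority = {}
    for doc, priorities in priorities_by_doc.items():
        for priority in priorities:
            if priority in first_doc_for_priority:
                union(first_doc_for_priority[priority], doc)
            else:
                first_doc_for_priority[priority] = doc

    families = defaultdict(set)
    for doc in priorities_by_doc:
        families[find(doc)].add(doc)
    return sorted(sorted(members) for members in families.values())


# Hypothetical filings: document id -> set of priority document ids.
filings = {
    "US-A": {"P1"},
    "EP-B": {"P1", "P2"},
    "CN-C": {"P2"},
    "CA-D": {"P3"},
}
families = group_into_families(filings)
print(len(families), "families:", families)
```

Under these assumptions, four documents collapse into two families, so a filing that is pursued in several jurisdictions is counted once rather than once per office.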

What has been filed over the years?

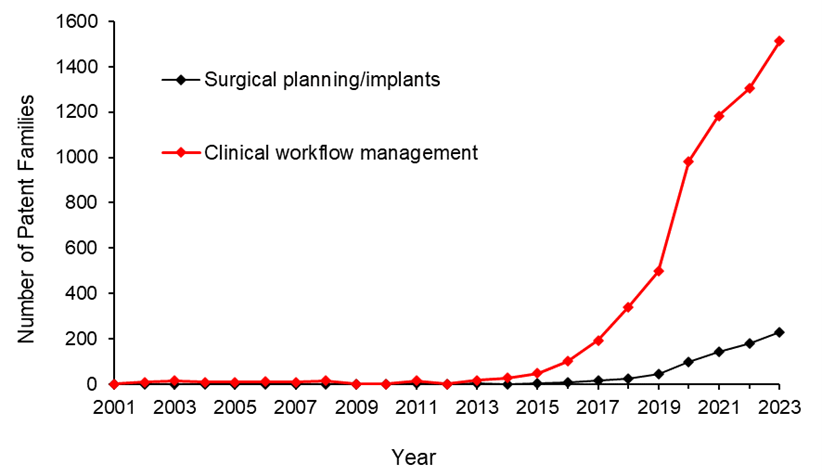

The number of patents filed in each subcategory of AI-assisted applications for medical technologies from 2001 to 2023 is shown below.

We see that patenting activities are concentrating in the areas of treatment response monitoring, identification of new patterns in physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis, and development of personalized diagnostics and medicine. This suggests that research and development efforts are focused on these areas.

What do the annual numbers tell us?

Let’s look at the annual number of patent filings for the categories and subcategories listed above. The following four graphs show the global patent filing trends over time for the categories of AI-assisted medical technologies related to: image acquisition and processing; diagnosis, prognosis and risk management; treatment response monitoring; and workflow management.

When looking at the patent filings on an annual basis, the numbers confirm the expected significant uptick in patenting activities in recent years for all categories searched. They also show that, within the four categories, the subcategories showing the fastest rate of growth were: pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to image acquisition and processing.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to more accurate diagnosis, prognosis and risk management.

Above: Global patent filing trends over time for AI-assisted medical technologies related to treatment response monitoring.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to workflow management.

Where is R&D happening?

By looking at where the inventors are located, we can see where R&D activities are occurring. We found that the two most frequent inventor locations are the United States (50.3%) and China (26.2%). Both Australia and Canada are amongst the ten most frequent inventor locations, with Canada ranking seventh and Australia ranking ninth in the five subcategories that have the highest patenting activities from 2001-2023.

Where are the destination markets?

The filing destinations provide a clue as to the intended markets or locations of commercial partnerships. The United States (30.6%) and China (29.4%) again are the pace leaders. Canada is the seventh most frequent destination jurisdiction with 3.2% of patent filings. Australia is the eighth most frequent destination jurisdiction with 3.1% of patent filings.

Takeaways

Our analysis found that the leading subcategories of AI-assisted medical technology patent applications from 2001 to 2023 include treatment response monitoring, identification of new patterns in human physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis as well as development of personalized diagnostics and medicine.

In more recent years, we found the fastest growth in the areas of pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management, suggesting that R&D efforts are being concentrated in these areas.

We saw that patent filings in the areas of early disease detection and surgical/implant monitoring increased later than the other categories, suggesting these may be emerging areas of growth.

Although, as expected, the United States and China are consistently the leading jurisdictions in both inventor location and destination patent offices, Canada and Australia are frequently in the top ten.

Patent intelligence provides powerful tools for decision makers in looking at what might be shaping our future. With recent geopolitical changes and policy updates in key primary markets, as well as shifts in trade relationships, patent filings give us insight into how these aspects impact innovation. For everyone, it provides exciting clues as to what emerging technologies may shape our lives.

References

1. Alowais et al., Revolutionizing healthcare: the role of artificial intelligence in clinical practice (2023), BMC Medical Education, 23:689.

2. U.S. Food and Drug Administration (FDA), Artificial Intelligence and Machine Learning in Software as a Medical Device.

3. Bitkina et al., Application of artificial intelligence in medical technologies: a systematic review of main trends (2023), Digital Health, 9:1-15.

4. Artificial Intelligence Program: Research on AI/ML-Based Medical Devices | FDA.

5. INPADOC extended patent family.

Research Reveals Human-AI Learning Parallels

PROVIDENCE, R.I. [Brown University] – New research found similarities in how humans and artificial intelligence integrate two types of learning, offering new insights about how people learn as well as how to develop more intuitive AI tools.

Led by Jake Russin, a postdoctoral research associate in computer science at Brown University, the study found, by training an AI system, that flexible and incremental learning modes interact in much the same way as working memory and long-term memory do in humans.

“These results help explain why a human looks like a rule-based learner in some circumstances and an incremental learner in others,” Russin said. “They also suggest something about what the newest AI systems have in common with the human brain.”

Russin holds a joint appointment in the laboratories of Michael Frank, director of the Center for Computational Brain Science at Brown’s Carney Institute for Brain Science, and Ellie Pavlick, an associate professor of computer science who leads the AI Research Institute on Interaction for AI Assistants at Brown. The study was published in the Proceedings of the National Academy of Sciences.

Depending on the task, humans acquire new information in one of two ways. For some tasks, such as learning the rules of tic-tac-toe, “in-context” learning allows people to figure out the rules quickly after a few examples. In other instances, incremental learning builds on information to improve understanding over time – such as the slow, sustained practice involved in learning to play a song on the piano.

While researchers knew that humans and AI integrate both forms of learning, it wasn’t clear how the two learning types work together. Over the course of the research team’s ongoing collaboration, Russin – whose work bridges machine learning and computational neuroscience – developed a theory that the dynamic might be similar to the interplay of human working memory and long-term memory.

To test this theory, Russin used “meta-learning” – a type of training that helps AI systems learn about the act of learning itself – to tease out key properties of the two learning types. The experiments revealed that the AI system’s ability to perform in-context learning emerged after it meta-learned through multiple examples.

One experiment, adapted from an experiment in humans, tested for in-context learning by challenging the AI to recombine similar ideas to deal with new situations: if taught about a list of colors and a list of animals, could the AI correctly identify a combination of color and animal (e.g. a green giraffe) it had not seen together previously? After the AI meta-learned by being challenged with 12,000 similar tasks, it gained the ability to successfully identify new combinations of colors and animals.
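The idea of testing on combinations never seen together can be sketched as a simple data split. This is only an illustration, not the study's task or code: the word lists, the function name `split_combinations`, and the seed are all hypothetical. The sketch holds out a few color-animal pairs while making sure each held-out color and each held-out animal still appears in some training pair, so that only the combination is new.

```python
import itertools
import random


def split_combinations(colors, animals, n_held_out, seed=0):
    """Split color-animal pairs into training pairs and held-out pairs.

    A pair is held out only if its color and its animal each still occur in
    at least one remaining training pair, so the test probes recombination
    of familiar parts rather than unfamiliar words.
    """
    rng = random.Random(seed)
    pairs = list(itertools.product(colors, animals))
    rng.shuffle(pairs)

    train = list(pairs)
    held_out = []
    for pair in pairs:
        if len(held_out) == n_held_out:
            break
        color, animal = pair
        remaining = [p for p in train if p != pair]
        color_seen = any(c == color for c, _ in remaining)
        animal_seen = any(a == animal for _, a in remaining)
        if color_seen and animal_seen:
            held_out.append(pair)
            train = remaining
    return train, held_out


# Hypothetical vocabulary, chosen only for illustration.
colors = ["green", "red", "blue"]
animals = ["giraffe", "zebra", "lion"]
train_pairs, test_pairs = split_combinations(colors, animals, n_held_out=2)
print("train:", train_pairs)
print("held out:", test_pairs)
```

A model evaluated this way is scored only on `test_pairs`; the 12,000 meta-learning tasks mentioned above would correspond to repeating a split like this many times with different items, though the details of the actual setup are not given here.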

The results suggest that for both humans and AI, quicker, flexible in-context learning arises after a certain amount of incremental learning has taken place.

“At the first board game, it takes you a while to figure out how to play,” Pavlick said. “By the time you learn your hundredth board game, you can pick up the rules of play quickly, even if you’ve never seen that particular game before.”

The team also found trade-offs, including between learning retention and flexibility: Similar to humans, the harder it is for AI to correctly complete a task, the more likely it will remember how to perform it in the future. According to Frank, who has studied this paradox in humans, this is because errors cue the brain to update information stored in long-term memory, whereas error-free actions learned in context increase flexibility but don’t engage long-term memory in the same way.

For Frank, who specializes in building biologically inspired computational models to understand human learning and decision-making, the team’s work showed how analyzing strengths and weaknesses of different learning strategies in an artificial neural network can offer new insights about the human brain.

“Our results hold reliably across multiple tasks and bring together disparate aspects of human learning that neuroscientists hadn’t grouped together until now,” Frank said.

The work also suggests important considerations for developing intuitive and trustworthy AI tools, particularly in sensitive domains such as mental health.

“To have helpful and trustworthy AI assistants, human and AI cognition need to be aware of how each works and the extent that they are different and the same,” Pavlick said. “These findings are a great first step.”

The research was supported by the Office of Naval Research and the National Institute of General Medical Sciences Centers of Biomedical Research Excellence.
