AI Research

Is generative AI a job killer? Evidence from the freelance market

Over the past few years, generative artificial intelligence (AI) and large language models (LLMs) have become some of the most rapidly adopted technologies in history. Tools such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude now support a wide range of tasks and have been integrated across sectors, from education and media to law, marketing, and customer service. According to McKinsey’s 2024 report, 71% of organizations now regularly use generative AI in at least one business function. This rapid adoption has sparked a vibrant public debate among business leaders and policymakers about how to harness these tools while mitigating their risks.

Perhaps the most alarming feature of generative AI is its potential to disrupt the labor market. Eloundou et al. (2024) estimate that around 80% of the U.S. workforce could see at least 10% of their tasks affected by LLMs, while approximately 19% of workers may have over half of their tasks impacted.
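Exposure figures like these are, roughly speaking, employment-weighted shares: each occupation gets an estimated fraction of tasks that LLMs could affect, and the statistic counts the workers in occupations at or above a threshold. The sketch below shows only that final aggregation step. The occupation figures are invented, the function name is hypothetical, and Eloundou et al.'s actual task-level rating and weighting procedure is more involved. For simplicity it uses a single "at least" comparison for both cut-offs.

```python
# Employment-weighted share of workers whose occupation has at least a given
# fraction of tasks exposed to LLMs. Simplified sketch: task-level exposure
# ratings are assumed to be already aggregated to the occupation level.

def share_of_workers_exposed(occupations, task_threshold):
    """occupations: list of (employment, exposed_task_fraction) pairs."""
    total = sum(emp for emp, _ in occupations)
    exposed = sum(emp for emp, frac in occupations if frac >= task_threshold)
    return exposed / total

# Hypothetical occupations: (number of workers, fraction of tasks exposed)
occupations = [(50, 0.05), (30, 0.30), (20, 0.70)]
print(share_of_workers_exposed(occupations, 0.10))  # 0.5
print(share_of_workers_exposed(occupations, 0.50))  # 0.2
```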

To better understand the impact of generative AI on employment, we examined its effect on freelance workers on a popular online platform (Hui et al. 2024). We found that freelancers in occupations more exposed to generative AI have experienced a 2% decline in the number of contracts and a 5% drop in earnings following the release of new generative AI tools in 2022. These negative effects were especially pronounced among experienced freelancers who offered higher-priced, higher-quality services. Our findings suggest that existing labor policies may not be fully equipped to support workers, particularly freelancers and other nontraditional workers, in adapting to the disruptions posed by generative AI. To ensure long-term, inclusive benefits from AI adoption, policymakers should invest in workforce reskilling, modernize labor protections, and develop institutions that support human-AI complementarity across a rapidly evolving labor market.

How might AI affect employment?

The effect of AI on employment remains theoretically ambiguous. As with past general-purpose technologies, such as the steam engine, the personal computer, or the internet, AI may fundamentally reshape employment structures, though it remains unclear whether AI will ultimately harm or improve worker outcomes (Agrawal et al. 2022). Much depends on whether AI complements or substitutes for human labor. On the one hand, AI may improve worker outcomes by boosting productivity, work quality, and efficiency. It can take over routine or repetitive tasks, allowing humans to focus on strategic thinking, creativity, or interpersonal interactions. This optimistic view has been championed by scholars such as Brynjolfsson and McAfee (2014), who argue that technology can augment productivity and increase the value of human capital when paired with the right skills. Consistent with this view, Brynjolfsson et al. (2025) and Noy and Zhang (2023) find that access to generative AI tools increased productivity in customer support and professional writing tasks, respectively.

Nevertheless, substitution remains a real risk. When AI can perform a particular set of tasks at equal quality and lower cost than a human employee, the demand for human labor in those areas may decline. Acemoglu and Restrepo (2020) argue that automation may reduce labor demand unless it is accompanied by the creation of new tasks in which humans maintain a comparative advantage. Full substitution may be cost-effective for firms but could lead to severe economic and social consequences such as widespread layoffs and unemployment.

In contrast to past technologies, where the types of workers affected were relatively predictable, the impact of AI is harder to anticipate. As a general-purpose technology, AI may disrupt a broad range of occupations in varied and uneven ways. These dynamics are unlikely to affect all workers equally. High-skill workers with access to complementary tools may benefit, while mid-skill workers, whose tasks are more easily replicated by AI, may be displaced or pushed into lower-paying jobs. Conversely, if AI democratizes access to services and information and reduces the returns to specialized human capital, it could undermine the economic position of those previously seen as secure in creative or professional roles, potentially reducing inequality.

Empirically evaluating the short-run effect of AI on employment is challenging. To begin with, it is often difficult to determine whether changes in hiring or separations are driven by AI or by other unobserved industry-, organization-, or employee-level factors. In addition, traditional employment contracts tend to be rigid and cannot quickly adjust to technological change. They also tend to bundle varied tasks, such as responding to emails, attending meetings, managing subordinates, and interacting with clients. In its current form, AI may be effective at automating some of these tasks but is not yet advanced enough to fully replace a human worker. As a result, the early effects of AI adoption might not be reflected in conventional employment statistics.

AI in online labor markets

To overcome these limitations, our recent paper, published in Organization Science (Hui et al. 2024), adopts a different empirical strategy: We focus on online labor markets, namely Upwork, one of the world’s largest online freelancing platforms. The platform operates as a spot market for short-term, usually remote, projects. Prospective employers on the platform can post jobs offering either fixed or hourly compensation. Jobs span a range of categories, including web development, graphic design, administrative support, digital marketing, and legal assistance. They usually have a clear timeline, well-defined deliverables, or both. Once a job is posted, freelancers may submit bids offering their services, and, after a negotiation process, one or more freelancers are hired to complete the job.

This setting offers several advantages: Job postings are typically short-term, contracts are flexible, and the platform provides detailed, transparent data on employment history and freelancer earnings. Freelancers often take on and complete multiple projects per month, generating high-frequency data ideal for short-term analysis.
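Because freelancers complete many short contracts, their activity can be aggregated into a freelancer-by-month panel, the unit on which outcomes such as new monthly contracts and monthly earnings are measured. The minimal sketch below illustrates that step. The record fields are hypothetical rather than Upwork's actual schema, and it assumes, for simplicity, that all of a contract's earnings are booked to the month the contract started.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Contract:
    # Hypothetical record layout; not the platform's real data schema.
    freelancer_id: str
    month: str       # month the contract started, e.g. "2022-11"
    earnings: float  # amount earned on the contract

def monthly_panel(contracts):
    """Aggregate contracts into {(freelancer_id, month): (new_contracts, earnings)}."""
    cells = defaultdict(lambda: [0, 0.0])
    for c in contracts:
        cell = cells[(c.freelancer_id, c.month)]
        cell[0] += 1           # one more new contract in this month
        cell[1] += c.earnings  # simplification: earnings booked to the start month
    return {key: (n, total) for key, (n, total) in cells.items()}

contracts = [
    Contract("a", "2022-10", 100.0),
    Contract("a", "2022-10", 50.0),
    Contract("b", "2022-11", 80.0),
]
print(monthly_panel(contracts))
# {('a', '2022-10'): (2, 150.0), ('b', '2022-11'): (1, 80.0)}
```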

To examine how these interactions are affected by the release of generative AI, we focus on two types of AI models. The first type comprises image-based models, specifically DALL-E2 and Midjourney, which were launched within a month of each other in early 2022. These tools marked a major breakthrough in image generation, offering the public unprecedented access to AI tools that could produce high-quality visuals from text prompts. The second type comprises text-based models, specifically ChatGPT, launched in November 2022. ChatGPT was the first commercial-grade text-based AI model made broadly available. Its release was a watershed moment, attracting over 100 million active users within a couple of months and marking the beginning of mass adoption of generative AI.

Using these model launches as natural experiments, we compare the change in freelancer outcomes in AI-affected and less-affected occupations before and after the launch of the AI tools. Building on previous research as well as exploratory data analysis, we identified freelancers offering services in domains more likely to be affected by each type of AI. For example, copyeditors and proofreaders are likely to be affected by text-based AI models like ChatGPT, while graphic designers are more likely to be affected by image-based models like DALL-E2. Other categories, such as administrative services, video editing, and data entry, are expected to experience little or no direct impact from these early AI tools.
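This comparison is a difference-in-differences design. The sketch below shows its simplest two-group, two-period form: the change in outcomes for exposed freelancers minus the change for less-exposed ones. The function name and numbers are illustrative assumptions, and the study's regression-based specification may include fixed effects and controls that this sketch omits.

```python
from statistics import mean

def did_estimate(rows):
    """Two-group, two-period difference-in-differences.

    rows: iterable of (exposed, post, outcome), where `exposed` marks
    AI-exposed occupations and `post` marks observations after the launch.
    Each of the four (exposed, post) cells must contain at least one row.
    """
    cells = {(e, p): [] for e in (True, False) for p in (True, False)}
    for exposed, post, outcome in rows:
        cells[(exposed, post)].append(outcome)
    m = {key: mean(values) for key, values in cells.items()}
    change_exposed = m[(True, True)] - m[(True, False)]
    change_control = m[(False, True)] - m[(False, False)]
    # The less-exposed group's change stands in for what would have happened
    # to exposed freelancers had the AI tools not been released.
    return change_exposed - change_control

# Made-up outcomes (e.g., monthly contracts), for illustration only.
rows = [
    (True, False, 10.0), (True, False, 10.0), (True, True, 9.0),
    (False, False, 8.0), (False, True, 8.5),
]
print(did_estimate(rows))  # -1.5
```

The estimate has a causal reading only under the parallel-trends assumption: absent the launch, exposed and less-exposed occupations would have followed the same trajectory.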

Our analysis reveals that freelancers operating in domains more exposed to generative AI were disproportionately affected by the release of ChatGPT. Specifically, freelancers providing services such as copyediting, proofreading, and other text-heavy tasks experienced a decline of approximately 2% in the number of new monthly contracts. In addition to reduced job flow, these freelancers also saw a roughly 5% decrease in their total monthly earnings on the platform. These effects suggest a significant disruption in the demand for services that AI can replicate. Importantly, we observe similar patterns following the release of image-based models such as DALL-E2 and Midjourney. Although these tools were released at different times and affected a distinct set of services, the magnitude of the impact was identical to what we observe for text-based models.
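Read together, the two estimates suggest that earnings fell by more than contract volume alone would explain. A back-of-envelope check, assuming total earnings equal the number of contracts times average earnings per contract and that the two average effects compose multiplicatively (average effects across freelancers need not do so exactly), implies roughly a 3% decline in earnings per contract. This decomposition is only an illustration and is not a result reported in the study; the function name is hypothetical.

```python
def implied_change_per_contract(contracts_change, earnings_change):
    """Proportional change in earnings per contract implied by proportional
    changes in contract count and total earnings, assuming
    earnings = contracts * earnings_per_contract."""
    return (1 + earnings_change) / (1 + contracts_change) - 1

change = implied_change_per_contract(-0.02, -0.05)
print(f"{change:.1%}")  # -3.1%
```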

These are sizable effects, especially considering how recently these technologies became available. To put these changes in perspective, the observed declines are comparable in magnitude to those estimated in studies of other major automation technologies, such as industrial robots and task automation (Acemoglu and Restrepo 2020). They are also similar to the labor market impacts of large-scale policy interventions, including changes in the minimum wage and access to unionization. Moreover, while our data cover only the first six to eight months following the release of these AI models, the negative trend persisted over that period. In fact, rather than fading after the initial release, the declines in both employment and compensation continued to grow, suggesting that our findings reflect more than short-term shocks or transitional responses. Instead, they likely reflect shifts in how certain services are valued and delivered in an AI-augmented economy. We conjecture that as AI capabilities improve and adoption expands, these trends will not only persist but may accelerate, potentially leading to broader reductions in employment and earnings across occupations.

The role of worker experience

Having documented the negative average effect of generative AI on employment outcomes on the platform, we next evaluate whether certain freelancer characteristics mitigate, or potentially exacerbate, these effects. One dimension of particular interest is worker quality and experience. Prior research on technological change suggests that high-skill workers, particularly those engaged in cognitively demanding or creative tasks, tend to be more resilient to adverse technology shocks. The conventional wisdom holds that providing higher-quality services should, to some extent, shield freelancers from displacement, as their work may be harder to automate or replicate (Acemoglu and Autor 2011; Autor et al. 2003).

Examining the impact of AI across the distribution of worker quality reveals a somewhat surprising pattern: Not only are high-skill freelancers not insulated from the adverse effects, but they are, in fact, disproportionately affected. Among workers within the same occupation, those with stronger past performance (as measured by client feedback, contract history, and other platform-based reputational metrics) experience larger declines in both the number of new contracts and total monthly earnings.
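One way to formalize a heterogeneous effect like this is a triple difference: compute the difference-in-differences separately for higher- and lower-reputation freelancers, then subtract. The sketch below splits freelancers into two hypothetical reputation tiers for simplicity; the study's reputation measure may enter its analysis differently, for example as a continuous interaction. Outcomes would typically be expressed in logs so that effects are proportional; in dollar levels, high earners would show larger declines mechanically.

```python
from statistics import mean

def did(pre_exposed, post_exposed, pre_control, post_control):
    """Difference-in-differences from four lists of outcomes."""
    return (mean(post_exposed) - mean(pre_exposed)) - (mean(post_control) - mean(pre_control))

def triple_difference(high, low):
    """DiD for high-reputation freelancers minus DiD for low-reputation ones.
    A negative value means the high-reputation tier was hit harder."""
    return did(**high) - did(**low)

# Hypothetical outcome values for each reputation tier, for illustration only.
high = {"pre_exposed": [10.0], "post_exposed": [8.0],
        "pre_control": [10.0], "post_control": [10.0]}
low = {"pre_exposed": [5.0], "post_exposed": [4.5],
       "pre_control": [5.0], "post_control": [5.0]}
print(triple_difference(high, low))  # -1.5
```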

This finding highlights a critical and somewhat counterintuitive interaction between artificial and human expertise. Generative AI appears to be “leveling the playing field” by compressing performance differences across the skill spectrum. One potential explanation is that, with tools like ChatGPT and DALL-E2, less experienced or lower-rated freelancers can now produce outputs that in many cases approximate the quality previously associated only with top-tier talent. As a result, clients may no longer see as much value in paying a premium for high-reputation workers, particularly when lower-cost alternatives can generate comparable results.

This dynamic stands in contrast to prior waves of technological change, where advanced tools often complemented highly skilled labor and widened the productivity gap between top and bottom performers (Krusell et al. 2000). Thus, as discussed earlier, generative AI represents a fundamentally different kind of technological advance: its disruptive potential extends across the entire skill distribution, including those at the very top. The early effects of generative AI suggest that it may reduce the dispersion of earnings and opportunities. This interpretation is consistent with earlier findings that the marginal returns to technology adoption are often highest for those with lower initial productivity, who gain more from the new technology.

Implications for policy

Our study provides some of the earliest empirical evidence on the labor market effects of generative AI, but it is also important to recognize its limitations. Examining freelancers is appealing for the reasons stated above but may not fully capture the dynamics of traditional employment arrangements or long-term contractual relationships. Still, the findings highlight that certain worker groups, such as freelancers, who often lack formal labor protections, social safety nets, benefits, and bargaining power, are uniquely exposed to technological disruptions. For example, workers in more flexible work arrangements often lack access to employer-sponsored retirement savings and unemployment insurance and have faced legal challenges in forming labor unions. Existing labor relations and regulations may thus not be well equipped to address the challenges posed by emerging technologies. As the nature of work continues to evolve, policies may need to be rethought to account for faster-moving, AI-enhanced freelance markets, especially in sectors highly vulnerable to automation.

While our analysis focuses on well-defined, task-oriented freelance jobs, which are arguably more amenable to AI substitution, recent research finds that generative AI may also affect more complex, collaborative work. Dell’Acqua et al. (2025), for example, show that AI can even substitute for team-based professional problem-solving and contribute meaningfully to real-world business decisions. This suggests that the impact of AI may extend beyond routine or isolated tasks and begin to reshape how high-skilled, interdependent work is performed. Predicting the future trajectory of AI remains difficult, as the technology continues to evolve rapidly. As its capabilities grow, AI is likely to be adopted across a wider range of industries, including those once thought resistant to automation, further reshaping the relationship between labor and technology. Closely tracking these developments through initiatives like the Workforce Innovation and Opportunity Act (WIOA) and other federal labor data programs is essential for informing timely and effective policy.

Historical evidence from past general-purpose technologies suggests that while short-term substitution effects can displace workers, longer-term gains often emerge through task reorganization, workforce reskilling, and the creation of entirely new roles. In the case of generative AI, true progress may come not just from automating existing tasks, but from fundamentally reshaping how organizations operate and the types of goods and services they offer. At the same time, reductions in task costs in one sector can spur innovation and economic activity in others. For example, Brynjolfsson et al. (2019) show that AI-driven machine translation at eBay significantly increased cross-border trade and improved consumer outcomes. Similarly, as generative AI continues to evolve, it may enable the emergence of new occupations, business models, and collaborative structures.

Realizing these long-term benefits will require sustained investment in education, training, and institutional reform that promotes human-AI complementarity. Policymakers should not only help workers adapt to near-term disruptions but also foster an environment in which AI enhances, rather than replaces, human capabilities. Realizing these benefits will also require creating conditions that incentivize firms to reorganize workflows and adopt AI in ways that amplify, rather than erode, the value of human labor. In addition, labor market institutions must evolve to keep pace with the new realities of work. This involves not only rethinking social safety nets but also promoting inclusive access to AI tools and training opportunities. If designed thoughtfully, policy can ensure that the next wave of AI adoption delivers broad-based benefits rather than deepening existing disparities.

-

References

Acemoglu, Daron, and David Autor. 2011. “Skills, Tasks and Technologies: Implications for Employment and Earnings.” In Handbook of Labor Economics, 4:1043–1171. Elsevier. https://doi.org/10.1016/S0169-7218(11)02410-5.

Acemoglu, Daron, and Pascual Restrepo. 2020. “Robots and Jobs: Evidence from US Labor Markets.” Journal of Political Economy 128 (6): 2188–2244. https://doi.org/10.1086/705716.

Agrawal, Ajay B., Joshua S. Gans, and Avi Goldfarb. 2022. Power and Prediction: The Disruptive Economics of Artificial Intelligence. Boston, MA: Harvard Business Review Press.

Autor, David H., Frank Levy, and Richard J. Murnane. 2003. “The Skill Content of Recent Technological Change: An Empirical Exploration.” The Quarterly Journal of Economics 118 (4): 1279–1333. https://doi.org/10.1162/003355303322552801.

Brynjolfsson, Erik, Xiang Hui, and Meng Liu. 2019. “Does Machine Translation Affect International Trade? Evidence from a Large Digital Platform.” Management Science 65 (12): 5449–60. https://doi.org/10.1287/mnsc.2019.3388.

Brynjolfsson, Erik, Danielle Li, and Lindsey Raymond. 2025. “Generative AI at Work.” The Quarterly Journal of Economics 140 (2): 889–942. https://doi.org/10.1093/qje/qjae044.

Brynjolfsson, Erik, and Andrew McAfee. 2014. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. New York: W. W. Norton & Company.

Dell’Acqua, Fabrizio, Charles Ayoubi, Hila Lifshitz-Assaf, Raffaella Sadun, Ethan R. Mollick, Lilach Mollick, Yi Han, et al. 2025. “The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise.” Preprint. SSRN. https://doi.org/10.2139/ssrn.5188231.

Eloundou, Tyna, Sam Manning, Pamela Mishkin, and Daniel Rock. 2024. “GPTs Are GPTs: Labor Market Impact Potential of LLMs.” Science 384 (6702): 1306–8. https://doi.org/10.1126/science.adj0998.

Hui, Xiang, Oren Reshef, and Luofeng Zhou. 2024. “The Short-Term Effects of Generative Artificial Intelligence on Employment: Evidence from an Online Labor Market.” Organization Science 35 (6): 1977–89. https://doi.org/10.1287/orsc.2023.18441.

Krusell, Per, Lee E. Ohanian, Jose-Victor Rios-Rull, and Giovanni L. Violante. 2000. “Capital-Skill Complementarity and Inequality: A Macroeconomic Analysis.” Econometrica 68 (5): 1029–53. https://doi.org/10.1111/1468-0262.00150.

Noy, Shakked, and Whitney Zhang. 2023. “Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence.” Science 381 (6654): 187–92. https://doi.org/10.1126/science.adh2586.

The Brookings Institution is committed to quality, independence, and impact.

We are supported by a diverse array of funders. In line with our values and policies, each Brookings publication represents the sole views of its author(s).

AI Research

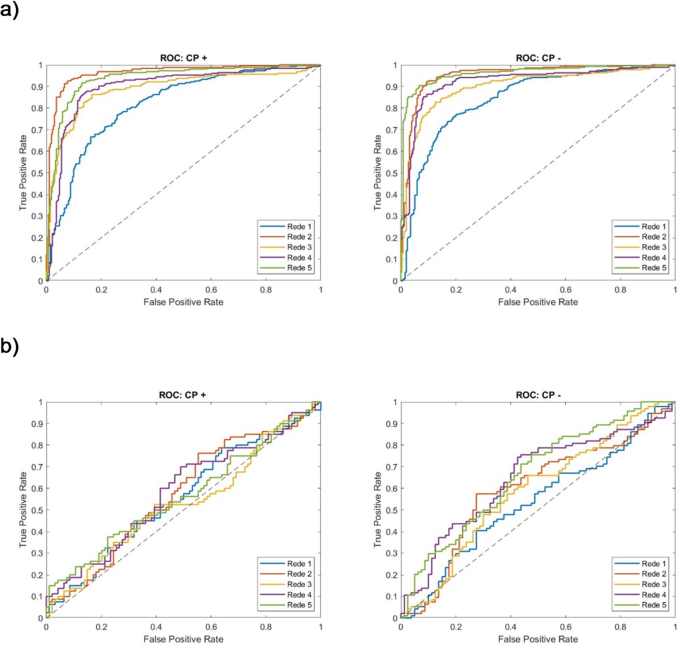

MAIA platform for routine clinical testing: an artificial intelligence embryo selection tool developed to assist embryologists

Graham, M. E. et al. Assisted reproductive technology: Short- and long-term outcomes. Dev. Med. Child. Neurol. 65, 38–49 (2023).

Jiang, V. S. & Bormann, C. L. Artificial intelligence in the in vitro fertilization laboratory: a review of advancements over the last decade. Fertil. Steril. 120, 17–23 (2023).

Devine, K. et al. Single vitrified blastocyst transfer maximizes liveborn children per embryo while minimizing preterm birth. Fertil. Steril. 103, 1454–1460 (2015).

Tiitinen, A. Single embryo transfer: why and how to identify the embryo with the best developmental potential. Best Pract. Res. Clin. Endocrinol. Metab. 33, 77–88 (2019).

Glatstein, I., Chavez-Badiola, A. & Curchoe, C. L. New frontiers in embryo selection. J. Assist. Reprod. Genet. 40, 223–234 (2023).

Gardner, D. K. & Schoolcraft, W. B. Culture and transfer of human blastocysts. Curr. Opin. Obstet. Gynaecol. 11, 307–311 (1999).

Sciorio, R. & Meseguer, M. Focus on time-lapse analysis: blastocyst collapse and morphometric assessment as new features of embryo viability. Reprod. BioMed. Online. 43, 821–832 (2021).

Sundvall, L., Ingerslev, H. J., Knudsen, U. B. & Kirkegaard, K. Inter- and intra-observer variability of time-lapse annotations. Hum. Reprod. 28, 3215–3221 (2013).

Gallego, R. D., Remohí, J. & Meseguer, M. Time-lapse imaging: the state of the Art. Biol. Reprod. 101, 1146–1154 (2019).

VerMilyea, M. D. et al. Computer-automated time-lapse analysis results correlate with embryo implantation and clinical pregnancy: a blinded, multi-centre study. Reprod. Biomed. Online. 29, 729–736 (2014).

Chéles, D. S., Molin, E. A. D., Rocha, J. C. & Nogueira, M. F. G. Mining of variables from embryo morphokinetics, blastocyst’s morphology and patient parameters: an approach to predict the live birth in the assisted reproduction service. JBRA Assist. Reprod. 24, 470–479 (2020).

Rocha, C., Nogueira, M. G., Zaninovic, N. & Hickman, C. Is AI assessment of morphokinetic data and digital image analysis from time-lapse culture predictive of implantation potential of human embryos? Fertil. Steril. 110, e373 (2018).

Zaninovic, N. et al. Application of artificial intelligence technology to increase the efficacy of embryo selection and prediction of live birth using human blastocysts cultured in a time-lapse incubator. Fertil. Steril. 110, e372–e373 (2018).

Alegre, L. et al. First application of artificial neuronal networks for human live birth prediction on Geri time-lapse monitoring system blastocyst images. Fertil. Steril. 114, e140 (2020).

Bori, L. et al. An artificial intelligence model based on the proteomic profile of euploid embryos and blastocyst morphology: a preliminary study. Reprod. BioMed. Online. 42, 340–350 (2021).

Chéles, D. S. et al. An image processing protocol to extract variables predictive of human embryo fitness for assisted reproduction. Appl. Sci. 12, 3531 (2022).

Jacobs, C. K. et al. Embryologists versus artificial intelligence: predicting clinical pregnancy out of a transferred embryo who performs it better? Fertil. Steril. 118, e81–e82 (2022).

Lorenzon, A. et al. P-211 development of an artificial intelligence software with consistent laboratory data from a single IVF center: performance of a new interface to predict clinical pregnancy. Hum. Reprod. 39, deae108.581 (2024).

Fernandez, E. I. et al. Artificial intelligence in the IVF laboratory: overview through the application of different types of algorithms for the classification of reproductive data. J. Assist. Reprod. Genet. 37, 2359–2376 (2020).

Mendizabal-Ruiz, G. et al. Computer software (SiD) assisted real-time single sperm selection associated with fertilization and blastocyst formation. Reprod. BioMed. Online. 45, 703–711 (2022).

Fjeldstad, J. et al. Segmentation of mature human oocytes provides interpretable and improved blastocyst outcome predictions by a machine learning model. Sci. Rep. 14, 10569 (2024).

Khosravi, P. et al. Deep learning enables robust assessment and selection of human blastocysts after in vitro fertilization. NPJ Digit. Med. 2, 21 (2019).

Hickman, C. et al. Inner cell mass surface area automatically detected using Chloe eq™(fairtility), an ai-based embryology support tool, is associated with embryo grading, embryo ranking, ploidy and live birth outcome. Fertil. Steril. 118, e79 (2022).

Tran, D., Cooke, S., Illingworth, P. J. & Gardner, D. K. Deep learning as a predictive tool for fetal heart pregnancy following time-lapse incubation and blastocyst transfer. Hum. Reprod. 34, 1011–1018 (2019).

Rajendran, S. et al. Automatic ploidy prediction and quality assessment of human blastocysts using time-lapse imaging. Nat. Commun. 15, 7756 (2024).

Bormann, C. L. et al. Consistency and objectivity of automated embryo assessments using deep neural networks. Fertil. Steril. 113, 781–787e1 (2020).

Kragh, M. F. & Karstoft, H. Embryo selection with artificial intelligence: how to evaluate and compare methods? J. Assist. Reprod. Genet. 38, 1675–1689 (2021).

Cromack, S. C., Lew, A. M., Bazzetta, S. E., Xu, S. & Walter, J. R. The perception of artificial intelligence and infertility care among patients undergoing fertility treatment. J. Assist. Reprod. Genet. https://doi.org/10.1007/s10815-024-03382-5 (2025).

Fröhlich, H. et al. From hype to reality: data science enabling personalized medicine. BMC Med. 16, 150 (2018).

Zhu, J. et al. External validation of a model for selecting day 3 embryos for transfer based upon deep learning and time-lapse imaging. Reprod. BioMed. Online. 47, 103242 (2023).

Yelke, H. K. et al. O-007 Simplifying the complexity of time-lapse decisions with AI: CHLOE (Fairtility) can automatically annotate morphokinetics and predict blastulation (at 30hpi), pregnancy and ongoing clinical pregnancy. Hum. Reprod. 37, deac104.007 (2022).

Papatheodorou, A. et al. Clinical and practical validation of an end-to-end artificial intelligence (AI)-driven fertility management platform in a real-world clinical setting. Reprod. BioMed. Online. 45, e44–e45 (2022).

Salih, M. et al. Embryo selection through artificial intelligence versus embryologists: a systematic review. Hum. Reprod. Open hoad031 (2023).

Nunes, K. et al. Admixture’s impact on Brazilian population evolution and health. Science. 388(6748), eadl3564 (2025).

Jackson-Bey, T. et al. Systematic review of Racial and ethnic disparities in reproductive endocrinology and infertility: where do we stand today? F&S Reviews. 2, 169–188 (2021).

Kassi, L. A. et al. Body mass index, not race, May be associated with an alteration in early embryo morphokinetics during in vitro fertilization. J. Assist. Reprod. Genet. 38, 3091–3098 (2021).

Pena, S. D. J., Bastos-Rodrigues, L., Pimenta, J. R. & Bydlowski, S. P. DNA tests probe the genomic ancestry of Brazilians. Braz J. Med. Biol. Res. 42, 870–876 (2009).

Fraga, A. M. et al. Establishment of a Brazilian line of human embryonic stem cells in defined medium: implications for cell therapy in an ethnically diverse population. Cell. Transpl. 20, 431–440 (2011).

Amin, F. & Mahmoud, M. Confusion matrix in binary classification problems: a step-by-step tutorial. J. Eng. Res. 6, 0–0 (2022).

Magdi, Y. et al. Effect of embryo selection based morphokinetics on IVF/ICSI outcomes: evidence from a systematic review and meta-analysis of randomized controlled trials. Arch. Gynecol. Obstet. 300, 1479–1490 (2019).

Guo, Y. H., Liu, Y., Qi, L., Song, W. Y. & Jin, H. X. Can time-lapse incubation and monitoring be beneficial to assisted reproduction technology outcomes? A randomized controlled trial using day 3 double embryo transfer. Front. Physiol. 12, 794601 (2022).

Giménez, C., Conversa, L., Murria, L. & Meseguer, M. Time-lapse imaging: morphokinetic analysis of in vitro fertilization outcomes. Fertil. Steril. 120, 228–227 (2023).

Vitrolife EmbryoScope + time-lapse system. (2023). https://www.vitrolife.com/products/time-lapse-systems/embryoscopeplus-time-lapse-system/.

Lagalla, C. et al. A quantitative approach to blastocyst quality evaluation: morphometric analysis and related IVF outcomes. J. Assist. Reprod. Genet. 32, 705–712 (2015).

Rocha, J. C. et al. A method based on artificial intelligence to fully automatize the evaluation of bovine blastocyst images. Sci. Rep. 7, 7659 (2017).

Chavez-Badiola, A. et al. Predicting pregnancy test results after embryo transfer by image feature extraction and analysis using machine learning. Sci. Rep. 10, 4394 (2020).

Matos, F. D., Rocha, J. C. & Nogueira, M. F. G. A method using artificial neural networks to morphologically assess mouse blastocyst quality. J. Anim. Sci. Technol. 56, 15 (2014).

Wang, S., Zhou, C., Zhang, D., Chen, L. & Sun, H. A deep learning framework design for automatic blastocyst evaluation with multifocal images. IEEE Access. 9, 18927–18934 (2021).

Berntsen, J., Rimestad, J., Lassen, J. T., Tran, D. & Kragh, M. F. Robust and generalizable embryo selection based on artificial intelligence and time-lapse image sequences. PLoS One. 17, e0262661 (2022).

Fruchter-Goldmeier, Y. et al. An artificial intelligence algorithm for automated blastocyst morphometric parameters demonstrates a positive association with implantation potential. Sci. Rep. 13, 14617 (2023).

Illingworth, P. J. et al. Deep learning versus manual morphology-based embryo selection in IVF: a randomized, double-blind noninferiority trial. Nat. Med. 30, 3114–3120 (2024).

Kanakasabapathy, M. K. et al. Development and evaluation of inexpensive automated deep learning-based imaging systems for embryology. Lab. Chip. 19, 4139–4145 (2019).

Loewke, K. et al. Characterization of an artificial intelligence model for ranking static images of blastocyst stage embryos. Fertil. Steril. 117, 528–535 (2022).

Hengstschläger, M. Artificial intelligence as a door opener for a new era of human reproduction. Hum. Reprod. Open hoad043 (2023).

Lassen Theilgaard, J., Fly Kragh, M., Rimestad, J., Nygård Johansen, M. & Berntsen, J. Development and validation of deep learning based embryo selection across multiple days of transfer. Sci. Rep. 13 (1), 4235 (2023).

Lozano, M. et al. P-301 Assessment of ongoing clinical outcomes prediction of an AI system on retrospective SET data, Human Reprod. 38(Issue Supplement_1), dead093.659. (2023).

Collins, G. S. et al. TRIPOD + AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 385, e078378 (2024).

Abdolrasol, M. G. M. et al. Artificial neural networks based optimization techniques: a review. Electronics 10, 2689 (2021).

Yuzer, E. O. & Bozkurt, A. Instant solar irradiation forecasting for solar power plants using different ANN algorithms and network models. Electr. Eng. 106, 3671–3689 (2024).

Guariso, G. & Sangiorgio, M. Improving the performance of multiobjective genetic algorithms: an elitism-based approach. Information 11, 587 (2020).

García-Pascual, C. M. et al. Optimized NGS approach for detection of aneuploidies and mosaicism in PGT-A and imbalances in PGT-SR. Genes 11, 724 (2020).

AI Research

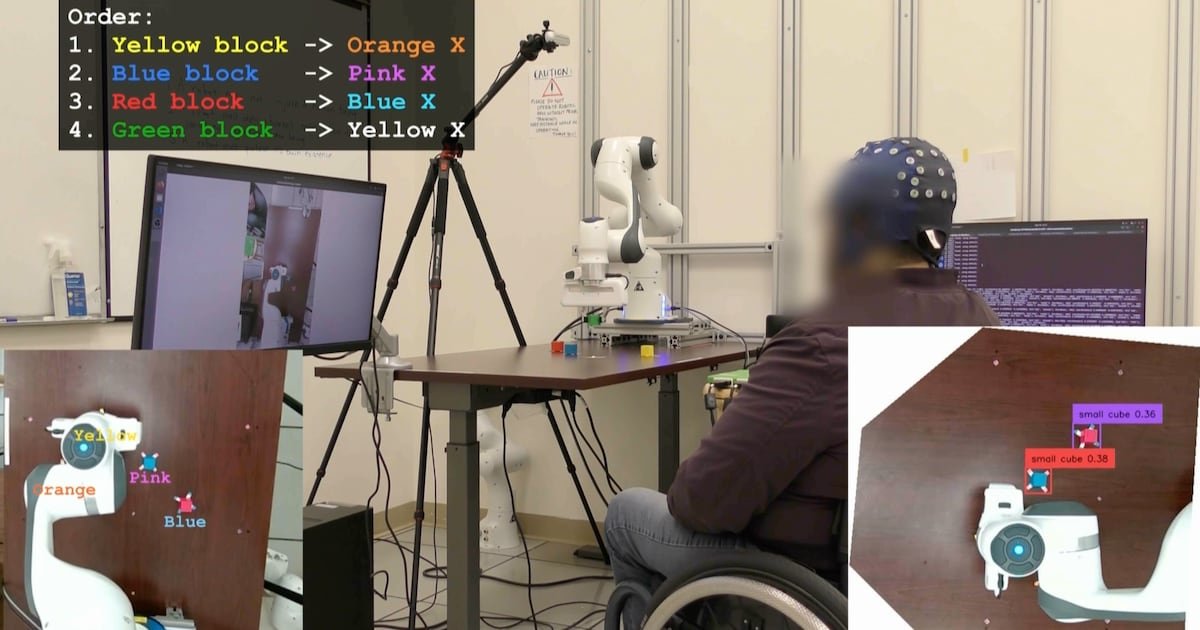

UCLA Researchers Enable Paralyzed Patients to Control Robots with Thoughts Using AI – CHOSUNBIZ – Chosun Biz

Hackers exploit hidden prompts in AI images, researchers warn

Cybersecurity firm Trail of Bits has revealed a technique that embeds malicious prompts into images processed by large language models (LLMs). The method exploits how AI platforms compress and downscale images for efficiency. While the original files appear harmless, the resizing process introduces visual artifacts that expose concealed instructions, which the model interprets as legitimate user input.

In tests, the researchers demonstrated that such manipulated images could direct AI systems to perform unauthorized actions. One example showed Google Calendar data being siphoned to an external email address without the user’s knowledge. Platforms affected in the trials included Google’s Gemini CLI, Vertex AI Studio, Google Assistant on Android, and Gemini’s web interface.

The approach builds on earlier academic work from TU Braunschweig in Germany, which identified image scaling as a potential attack surface in machine learning. Trail of Bits expanded on this research by creating “Anamorpher,” an open-source tool that crafts malicious images tailored to common downscaling methods such as nearest-neighbor, bilinear, and bicubic interpolation.
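The core trick can be illustrated with the simplest resampling method, nearest-neighbor downscaling, which keeps only one source pixel from each block. An attacker who knows which pixels will be kept can write a hidden pattern into just those pixels: the full-size image looks almost unchanged, but the downscaled copy the model receives shows the pattern. This sketch assumes a downscaler that keeps the top-left pixel of each k×k block. Real libraries use different sampling offsets, and bilinear or bicubic filters blend neighboring pixels, so practical attacks must be tailored to a specific implementation. It is an illustration of the principle, not Anamorpher's code.

```python
# Minimal illustration of an image-scaling attack on nearest-neighbor
# downscaling. Images are 2-D lists of grayscale values (0-255).
# ASSUMPTION: the downscaler keeps the top-left pixel of each k x k block.

def downscale_nearest(img, k):
    """Keep one pixel (the top-left) from every k x k block."""
    return [row[::k] for row in img[::k]]

def embed_payload(cover, payload, k):
    """Overwrite only the pixels the downscaler will sample with the payload."""
    out = [row[:] for row in cover]
    for i, prow in enumerate(payload):
        for j, value in enumerate(prow):
            out[i * k][j * k] = value
    return out

if __name__ == "__main__":
    k = 4
    cover = [[255] * 16 for _ in range(16)]  # plain white 16x16 "image"
    payload = [[0, 255, 255, 0]] * 4         # hypothetical 4x4 hidden pattern
    crafted = embed_payload(cover, payload, k)

    changed = sum(c != o for crow, orow in zip(crafted, cover) for c, o in zip(crow, orow))
    print(f"{changed} of {16 * 16} pixels altered")  # 8 of 256 pixels altered
    print(downscale_nearest(crafted, k) == payload)   # True
```

In a real attack the payload would be rendered text, such as an instruction, rather than a simple pattern.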

From the user’s perspective, nothing unusual occurs when such an image is uploaded. Yet behind the scenes, the AI system executes hidden commands alongside normal prompts, raising serious concerns about data security and identity theft. Because multimodal models often integrate with calendars, messaging, and workflow tools, the risks extend into sensitive personal and professional domains.

Traditional defenses such as firewalls cannot easily detect this type of manipulation. The researchers recommend a combination of layered security, previewing downscaled images, restricting input dimensions, and requiring explicit confirmation for sensitive operations.
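Two of these recommendations, restricting input dimensions and requiring explicit confirmation for sensitive operations, translate directly into application logic. The sketch below shows one way to apply them: it rejects images that would have to be downscaled, so the model processes the same pixels the user uploaded, and it gates sensitive tool calls behind user confirmation. The size limit and action names are hypothetical, and checks like these would sit alongside the other layered defenses the researchers describe, not replace them.

```python
# Hypothetical guardrails for a multimodal assistant; names and limits are
# illustrative assumptions, not any platform's real configuration.
MAX_SIDE = 1024  # largest width/height accepted without server-side downscaling
SENSITIVE_ACTIONS = {"send_email", "share_calendar", "delete_file"}

def accept_image(width, height, max_side=MAX_SIDE):
    """Accept only images small enough to reach the model unscaled,
    so the model sees the same pixels the user saw."""
    return width <= max_side and height <= max_side

def authorize(action, user_confirmed):
    """Require explicit user confirmation for sensitive actions,
    whether the request came from typed text or from an image."""
    if action in SENSITIVE_ACTIONS:
        return user_confirmed
    return True

print(accept_image(800, 600))              # True
print(accept_image(4096, 3072))            # False
print(authorize("send_email", False))      # False
print(authorize("summarize_text", False))  # True
```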

“The strongest defense is to implement secure design patterns and systematic safeguards that limit prompt injection, including multimodal attacks,” the Trail of Bits team concluded.
