AI Research

Is deregulation the new AI gold rush? Inside Trump’s 90-point action plan

The EU’s sweeping risk-based rules will cover all types of artificial intelligence – Copyright AFP JADE GAO

In July 2025, the Trump administration released a 28-page blueprint, “Winning the Race: America’s AI Action Plan,” which reads like a modern-day gold-rush map. It outlines over 90 policy positions across multiple agencies, all with a single goal: to remove barriers to AI innovation. This deregulatory approach is the heart of the plan.

Why It Matters Now

With China, the EU, and private rivals all racing to lead in AI, the Trump administration argues that streamlined approvals and clearer guidelines will help U.S. firms innovate faster. Critics counter that speed may come at the expense of environmental safeguards, worker training, and protections against bias.

Staking the Claims: Anatomy of a Deregulatory Plan

The AI Action Plan is not a single law. It’s a series of executive orders and policy mandates designed to remove regulations and accelerate AI deployment. Key elements include:

- Fast-Tracked Permitting: An executive order specifically expedites federal permits for data centers and semiconductor manufacturing under existing NEPA and FAST-41 processes. This is a direct response to a major industry complaint about infrastructure build-out delays.

- AI Export Promotion: The Commerce and State Departments will partner with industry to export “secure, full-stack AI packages” to U.S. allies. This policy aims to build an American-led AI ecosystem abroad, free from foreign regulatory influence.

- “Woke” AI Guardrails Removed: New procurement rules will expunge DEI language from federal contracts, insisting that federally contracted AI must reflect “objective truth” free of ideological bias. This is a clear move to strip away the ethical and social guardrails placed on AI development.

Prospecting for Performance: Technical Leaps & Public Pulse

The administration’s deregulatory push coincides with rapid technological advancements. The plan aims to build on these successes by removing what it sees as unnecessary red tape.

- Medical Device Claims: The FDA cleared 221 AI-enabled medical devices in 2023, up from just 6 in 2015. This surge reflects growing regulatory confidence and policies that let companies test and deploy AI tools more quickly.

- Benchmark Breakthroughs: AI performance on major benchmarks saw dramatic leaps in 2024. Scores on the MMMU, GPQA, and SWE-bench tests rose by 18.8, 48.9, and 71.7 percentage points, respectively. The plan argues that removing bureaucratic friction will accelerate this progress even further.

- Public Sentiment: Yet this progress has been met with public skepticism. A 2025 AI Index report found that only 38% of Americans believe AI will improve health and only 31% expect net job gains, a wariness that echoes a miner on guard against fool’s gold.

The benchmark gains suggest that models are improving faster than before. But breakthroughs on test benchmarks don’t always translate into real-world reliability.

Data Centers: Growth & Impact

The new permit rules have unleashed a wave of data-center proposals:

- Energy Use: U.S. facilities consumed 176 terawatt-hours in 2023 (about 4.4% of national electricity), and their share could reach 12% by 2028.

- Emissions Toll: A Department of Energy survey of 2,100 centers found that they emitted 105 million tonnes of CO₂ last year, more than half of it from fossil-fuel backup generators.

Faster approvals mean new investment dollars, but also sharper debates over rising energy demand and the environmental footprint of an AI boom.

Chips & Open Source: Who Benefits

Hardware and community code are twin engines of the AI economy:

- Semiconductor Exports: American chip sales hit $70.1 billion in 2024 (up 6.3%), driven by fabs in Texas and Oregon.

- Model Scans: Open-source security tools have analyzed 4.5 million AI models and flagged 350,000 potential biases or safety issues, proof that not every discovery is pure gold.

Eased export rules give chipmakers new markets, while more open model sharing lets small labs, from university groups to bootstrapped startups, compete on the same playing field as hyperscale giants.

Jobs at Risk & Opportunity

No gold rush is without its claim jumpers and ghost towns:

- Automation Risk: A McKinsey study warns that 30% of U.S. work hours could be automated by 2030, triggering 12 million occupational shifts.

Commenting on the human cost of these changes, Anirudh Agarwal, Director at OutreachX, cautions, “Accelerating permits without investing in people is like staking gold claims with no plan to refine the ore.”

Claim Holders and Ghost Towns: Potential Winners & Losers

The deregulatory “gold rush” is creating clear winners and losers.

- Winners:
  - Chip Makers & Fab Operators: Can build new semiconductor “mines” under eased zoning regulations.
  - Cloud Giants: Can erect hyperscale campuses with fewer permit delays.
  - Open-Source Labs: Are designated as official prospectors, free to pan for new open-source models.

- Losers:
  - Front-Line Workers: Face shuttered roles without guaranteed retraining.
  - Civil Rights Advocates: Warn that removing DEI guardrails may lead to biased or unsafe AI in critical services.

Civil Rights & Accountability Concerns

Several advocacy organizations have raised alarms about the broader impact of unfettered deregulation:

- ACLU: “The plan undermines state authority by directing the Federal Communications Commission to review and potentially override state AI laws, while cutting off ‘AI-related’ federal funding to states that adopt robust protections,” said Cody Venzke, senior policy counsel with the American Civil Liberties Union.

- People’s AI Action Plan: Over 80 labor, civil-rights, and environmental groups released a rival blueprint, warning that unfettered deregulation caters to Big Tech, sidelines public interest, and undermines worker protections.

- State Protections: Critics note the federal plan overrides thoughtful local safeguards, stripping states of the right to prevent AI-driven bias in housing, healthcare, and law enforcement, and risks “unfettered abuse” of AI systems.

Mapping the Aftermath

Deregulation has opened the sluices for an AI gold rush, fueling boomtowns in tech hubs and reshaping local economies. Yet, as with every frontier rush, the real test comes when the veins run dry. Will communities that staked their claims emerge wealthier, or face the ghost-town fate of those left sifting yesterday’s tailings? As Congress, courts, and citizens weigh in, the question remains: in this 90-point gold rush, who finds riches, and who pays the toll?

AI Research

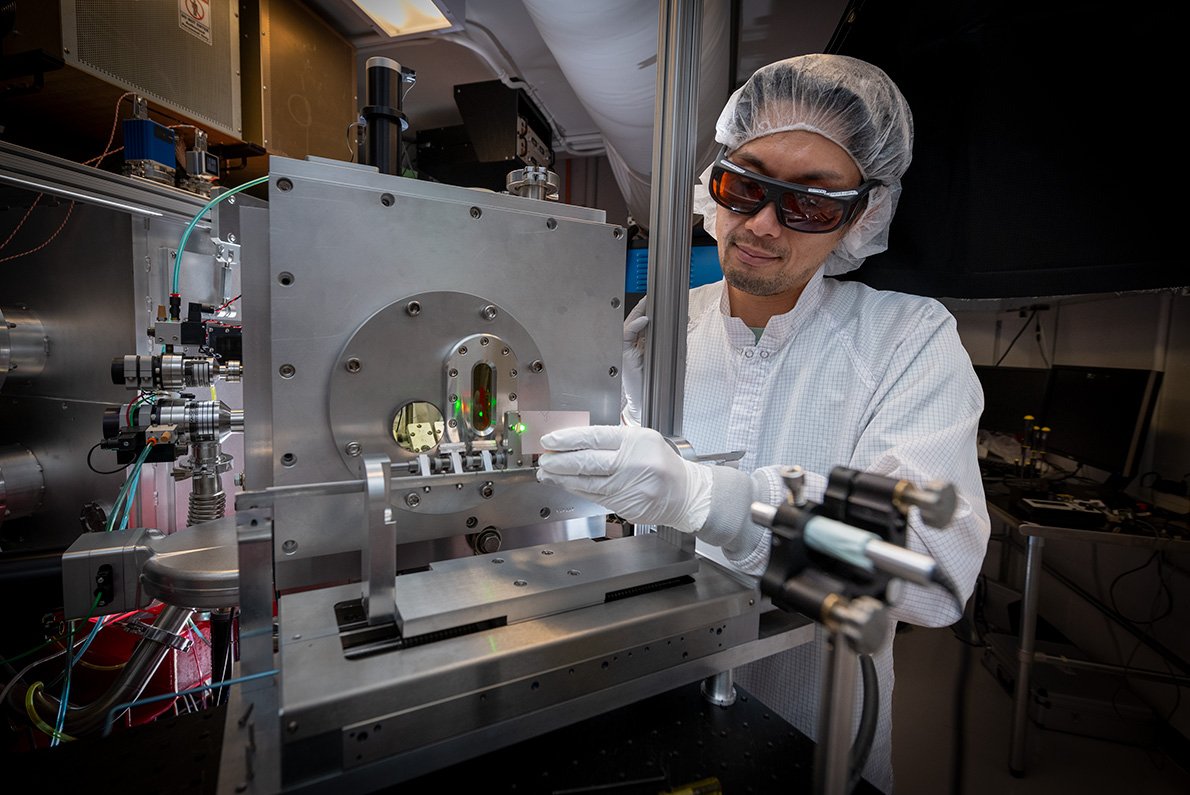

How AI and Automation are Speeding Up Science and Discovery – Berkeley Lab News Center

The Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) is at the forefront of a global shift in how science gets done—one driven by artificial intelligence, automation, and powerful data systems. By integrating these tools, researchers are transforming the speed and scale of discovery across disciplines, from energy to materials science to particle physics.

This integrated approach is not just advancing research at Berkeley Lab—it’s strengthening the nation’s scientific enterprise. By pioneering AI-enabled discovery platforms and sharing them across the research community, Berkeley Lab is helping the U.S. compete in the global race for innovation, delivering the tools and insights needed to solve some of the world’s most pressing challenges.

From accelerating materials discovery to optimizing beamlines and more, here are four ways Berkeley Lab is using AI to make research faster, smarter, and more impactful.

Automating Discovery: AI and Robotics for Materials Innovation

At the heart of materials science is a time-consuming process: formulating, synthesizing, and testing thousands of potential compounds. AI is helping Berkeley Lab speed that up—dramatically.

A-Lab

At Berkeley Lab’s automated materials facility, A-Lab, AI algorithms propose new compounds, and robots prepare and test them. This tight loop between machine intelligence and automation drastically shortens the time it takes to validate materials for use in technologies like batteries and electronics.

Autobot

Exploratory tools like Autobot, a robotic system at the Molecular Foundry, are being used to investigate new materials for applications ranging from energy to quantum computing, making lab work faster and more flexible.

Captions rebrands as Mirage, expands beyond creator tools to AI video research

Captions, an AI-powered video creation and editing app for content creators that has secured over $100 million in venture capital to date at a valuation of $500 million, is rebranding to Mirage, the company announced on Thursday.

The new name reflects the company’s broader ambitions to become an AI research lab focused on multimodal foundational models specifically designed for short-form video content for platforms like TikTok, Reels, and Shorts. The company believes this approach will distinguish it from traditional AI models and competitors such as D-ID, Synthesia, and Hour One.

The rebranding will also unify the company’s offerings under one umbrella, bringing together the flagship creator-focused AI video platform, Captions, and the recently launched Mirage Studio, which caters to brands and ad production.

“The way we see it, the real race for AI video hasn’t begun. Our new identity, Mirage, reflects our expanded vision and commitment to redefining the video category, starting with short-form video, through frontier AI research and models,” CEO Gaurav Misra told TechCrunch.

The sales pitch behind Mirage Studio, which launched in June, focuses on enabling brands to create short advertisements without relying on human talent or large budgets. Users simply submit an audio file, and the AI generates video content from scratch, complete with an AI-generated background and custom AI avatars. They can also upload selfies to create an avatar using their likeness.

What sets the platform apart, according to the company, is its ability to produce AI avatars that have natural-looking speech, movements, and facial expressions. Additionally, Mirage says it doesn’t rely on existing stock footage, voice cloning, or lip-syncing.

Mirage Studio is available under the business plan, which costs $399 per month for 8,000 credits. New users receive 50% off the first month.

While these tools will likely benefit brands wanting to streamline video production and save some money, they also spark concerns around the potential impact on the creative workforce. The growing use of AI in advertisements has prompted backlash, as seen in a recent Guess ad in Vogue’s July print edition that featured an AI-generated model.

Additionally, as this technology advances, distinguishing between real and deepfake videos grows increasingly difficult. That is a hard pill to swallow for many people, especially given how quickly misinformation can spread these days.

Mirage recently addressed its role in deepfake technology in a blog post. The company acknowledged the genuine risks of misinformation while also expressing optimism about the positive potential of AI video. It mentioned that it has put moderation measures in place to limit misuse, such as preventing impersonation and requiring consent for likeness use.

However, the company emphasized that “design isn’t a catch-all” and that the real solution lies in fostering a “new kind of media literacy” where people approach video content with the same critical eye as they do news headlines.

Head of UK’s Turing AI Institute resigns after funding threat

Graham Fraser, Technology reporter

The chief executive of the UK’s national institute for artificial intelligence (AI) has resigned following staff unrest and a warning the charity was at risk of collapse.

Dr Jean Innes said she was stepping down from the Alan Turing Institute as it “completes the current transformation programme”.

Her position has come under pressure after the government demanded the centre change its focus to defence and threatened to pull its funding if it did not – leading to staff discontent and a whistleblowing complaint submitted to the Charity Commission.

Dr Innes, who was appointed chief executive in July 2023, said the time was right for “new leadership”.

The BBC has approached the government for comment.

The Turing Institute said its board was now looking to appoint a new CEO who will oversee “the next phase” to “step up its work on defence, national security and sovereign capabilities”.

Its work had once focused on AI and data science research in environmental sustainability, health and national security, but moved on to other areas such as responsible AI.

The government, however, wanted the Turing Institute to make defence its main priority, marking a significant pivot for the organisation.

“It has been a great honour to lead the UK’s national institute for data science and artificial intelligence, implementing a new strategy and overseeing significant organisational transformation,” Dr Innes said.

“With that work concluding, and a new chapter starting… now is the right time for new leadership and I am excited about what it will achieve.”

What happened at the Alan Turing Institute?

Founded in 2015 as the UK’s leading centre of AI research, the Turing Institute, which is headquartered at the British Library in London, has been rocked by internal discontent and criticism of its research activities.

A review last year by government funding body UK Research and Innovation found “a clear need for the governance and leadership structure of the Institute to evolve”.

At the end of 2024, 93 members of staff signed a letter expressing a lack of confidence in its leadership team.

In July, Technology Secretary Peter Kyle wrote to the Turing Institute to tell its bosses to focus on defence and security.

He said boosting the UK’s AI capabilities was “critical” to national security and should be at the core of the institute’s activities – and suggested it should overhaul its leadership team to reflect its “renewed purpose”.

He said further government investment would depend on the “delivery of the vision” he had outlined in the letter.

This followed Prime Minister Sir Keir Starmer’s commitment to increasing UK defence spending to 5% of national income by 2035, which would include investing more in military uses of AI.

A month after Kyle’s letter was sent, staff at the Turing Institute warned the charity was at risk of collapse following the threat to withdraw its funding.

Workers raised a series of “serious and escalating concerns” in a whistleblowing complaint submitted to the Charity Commission.

Bosses at the Turing Institute then acknowledged recent months had been “challenging” for staff.
