AI Research

How AI Is Reshaping Architecture – Texas A&M Stories

Imagine you could describe a five-story apartment building in simple words and instantly see a 3D model you can explore through mixed reality. No difficult software or coding. Just say what you want, and watch it come to life.

At Texas A&M’s College of Architecture, researchers are working to make this future real. With funding from the National Science Foundation (NSF), they are creating new tools that combine artificial intelligence (AI), augmented reality (AR) and spatial reasoning.

Dr. Wei Yan, a professor and researcher, leads these projects in the Department of Architecture. In July, he began his 20th year at Texas A&M and took on a new role as interim head of the department.

Yan’s research team includes doctoral students leading their own projects. Their work has caught the attention of experts across the country. In May, his team earned a Best Paper Award at the 2025 IEEE Conference on Artificial Intelligence.

Together, they are building new tools that are changing how architecture is taught and practiced.

Architecture doctoral student Guangxi Feng (left) and master’s in architecture student Travis Halverson (right) test Augmented Reality glasses.

Credit: Texas A&M University College of Architecture

Describe A Building, Then See It Appear

What if you could start designing a building just by typing a sentence?

That’s what Text-to-Visual Programming GPT (Text2VP) does. It’s a new generative AI tool made by doctoral student Guangxi Feng. Yan said that generative AI can already create text, images, videos and even 3D models from text prompts.

Using OpenAI’s GPT-4.1, Text2VP lets people create a 3D model that they can change right away by describing a building in simple words.

Users can change the shape, size and layout without writing any code, guided by their architectural knowledge. “This way, the human designers and AI collaborate on the project,” Yan said.

Normally, completing tasks in design software can take hours or days. Text2VP speeds up early design work, so designers can spend more time being creative instead of dealing with technical details.

“This lowers the barrier to entry,” Yan said. “It allows students to experiment and learn design logic more intuitively.” The tool will be tested in mixed reality, where users can walk through and change their 3D models. Yan said immersive spaces help people understand complex spatial concepts faster than using regular computer screens.

Even though it’s still being developed, Yan said it could change the way students and professional designers start their projects.

His team is also exploring AI’s role in Building Information Modeling (BIM). BIM is a process for creating digital models of buildings that include both the design and information about the building’s parts. The process is difficult to master, even for professionals, but Yan and doctoral students Jaechang Ko and John Ajibefun are testing how AI could make it easier and more accessible for architects.

A demonstration shows the AI chatbot in action. The chatbot analyzes a multi-story 3D architectural model and offers real-time feedback.

Credit: Provided photo

Talk To Your Model, Get Instant Feedback

Building on this progress, Yan’s lab is testing how talking to an AI chatbot can help with design. The chatbot lets users interact with their model through conversation and works right in a web browser.

Doctoral student Farshad Askari created a chatbot that lets users “talk” to their 3D building models. After uploading a design, users can ask questions about its structure, layout or how well it works. The chatbot answers with text advice and helpful pictures. It can even compare the models to industry standards or sustainability goals.

The chatbot pairs trusted information from a knowledge base with a live view of the uploaded building model, processed by GPT-4o Vision, to act as a real-time design assistant.

Soon, it could read detailed building data and work with standard file formats such as Industry Foundation Classes (IFC), allowing even deeper design checks.

“This kind of dialogue-driven design could one day power a whole new workflow,” Yan said. “It’s about creating feedback loops between the designer, the model and intelligent systems.”

Teaching AI To Understand Space Like People

Design isn’t just about shape and use. It also needs spatial intelligence: being able to picture, turn and move objects in 3D.

While people do this naturally, AI still has a hard time. “Spatial intelligence is a core skill in architecture and STEM fields,” Yan said.

To study this problem, doctoral candidate Monjoree Uttamasha led an NSF-funded project testing AI models like ChatGPT, Llama and Gemini. They used the Revised Purdue Spatial Visualization Test, a common test for spatial intelligence. Their study won Best Paper in the Computer Vision category at the 2025 IEEE Conference on AI.

The results were clear: without extra context, AI models often failed to notice how shapes rotated or changed in space. Human participants outperformed the AI by a wide margin.

However, when given simple visual guides and math notations, the AI got a lot better. These findings show that AI can learn spatial thinking, but it needs more training with background information.

With the right help, AI tools can start to think more like human designers. Yan’s team sees this project, along with others in their lab, as a step toward improving AI technology and how design is taught.

“This research points to ways we can enhance both AI tools and educational methods,” Yan said. The lab’s work builds on more than 20 years of research at Texas A&M, combining computational design methods, machine learning and architectural visualization.

Keeping AI-generated content authentic – LAS News

Where some see artificial intelligence (AI) flattening human creativity, Kaili Meyer (’17 journalism and mass communication) sees an opportunity to prove that authenticity and individuality are still the heart of communication.

As founder of the sales and web copywriting company Reveal Studio Co., Meyer built her business and later included AI as an extension of her work. She developed tools that train AI to preserve the integrity of an individual’s tone.

Wellness to print

Meyer began her undergraduate studies at Iowa State in kinesiology but realized she had a talent for writing.

“I thought about what I was really good at, and the answer was writing,” Meyer said.

She pivoted to a journalism and mass communication major, with a minor in psychology. Her passion for writing led her to work on student publications in the Greenlee School of Journalism and Communication, where she founded a health and wellness magazine.

That drive to build something from scratch set the tone for her entrepreneurial approach to her business.

Building an AI copywriting company

After graduation, Meyer joined Principal Financial in institutional investing, where she translated complex economic reports into accessible updates for stakeholders. She gained business skills – but her creative energy was missing.

By freelancing on the side for content, copy and magazines, she eventually replaced her salary, left corporate life, and began the process of launching her own company.

Reveal Studio Co. started out with direct client work, grew to include a template shop, and now includes AI tools.

In 2023, the AI chatbot ChatGPT had its one-year anniversary with over 1.7 billion users. As generative AI went mainstream and pushed into more areas, Meyer was skeptical of the rapidly growing adoption of AI in society. She began to flag AI-written content everywhere and set out to prove that it could never replicate the human voice.

“In doing so, I proved myself wrong,” Meyer said.

As Meyer researched AI, she realized it could be tailored to one’s own persona.

She developed The Complete AI Copy Buddy, a training manual that teaches an AI platform to mimic an individual’s style. By completing a template and submitting it to an AI source, users can acquire anything they need – from content ideas to full pieces such as social posts, emails, web copy and business collateral – all specifically tailored to their audience, brand, and voice.

The launch of the training manual earned $60,000 in two weeks, more than her first year’s corporate salary.

That success propelled Meyer into creating The Sales & Copy Bestie, a custom Generative Pre-trained Transformer (GPT) built from her knowledge in psychology and copywriting. Contractors support her work while she keeps the control and direction.

“If people are going to use AI – which they are – I might as well help them do it better,” she said.

Meyer prioritizes sales psychology, understanding how neuroscience drives decisions and taking that information to form effective and persuasive messages.

“Copy is messaging intended to get somebody to take action,” Meyer explained. “If I don’t understand what makes someone’s brain want to take action, then I can’t write really good copy.”

Meyer’s clients range from educators and creative service providers to lawyers, accountants, and business owners seeking sharper websites, sales pages, or email funnels.

Meyer’s vision of success

Meyer attributes her growth to persistence and a pure mindset.

“I don’t view anything as failure. Everything is just a step closer to where you want to be,” she said.

This year, Meyer plans to balance her entrepreneurial success with her creative side. She is finishing a poetry book, sketching artwork, and outlining her first novel.

“I’ve spent eight years building a really successful business,” Meyer said. “Now I want to build a life outside of work that fulfills me.”

Accelerating HPC and AI research in universities with Amazon SageMaker HyperPod

This post was written with Mohamed Hossam of Brightskies.

Research universities engaged in large-scale AI and high-performance computing (HPC) often face significant infrastructure challenges that impede innovation and delay research outcomes. Traditional on-premises HPC clusters come with long GPU procurement cycles, rigid scaling limits, and complex maintenance requirements. These obstacles restrict researchers’ ability to iterate quickly on AI workloads such as natural language processing (NLP), computer vision, and foundation model (FM) training. Amazon SageMaker HyperPod alleviates the undifferentiated heavy lifting involved in building AI models. It helps quickly scale model development tasks such as training, fine-tuning, or inference across a cluster of hundreds or thousands of AI accelerators (NVIDIA H100 and A100 GPUs, among others), integrated with preconfigured HPC tools and automated scaling.

In this post, we demonstrate how a research university implemented SageMaker HyperPod to accelerate AI research by using dynamic SLURM partitions, fine-grained GPU resource management, budget-aware compute cost tracking, and multi-login node load balancing—all integrated seamlessly into the SageMaker HyperPod environment.

Solution overview

Amazon SageMaker HyperPod is designed to support large-scale machine learning operations for researchers and ML scientists. The service is fully managed by AWS, removing operational overhead while maintaining enterprise-grade security and performance.

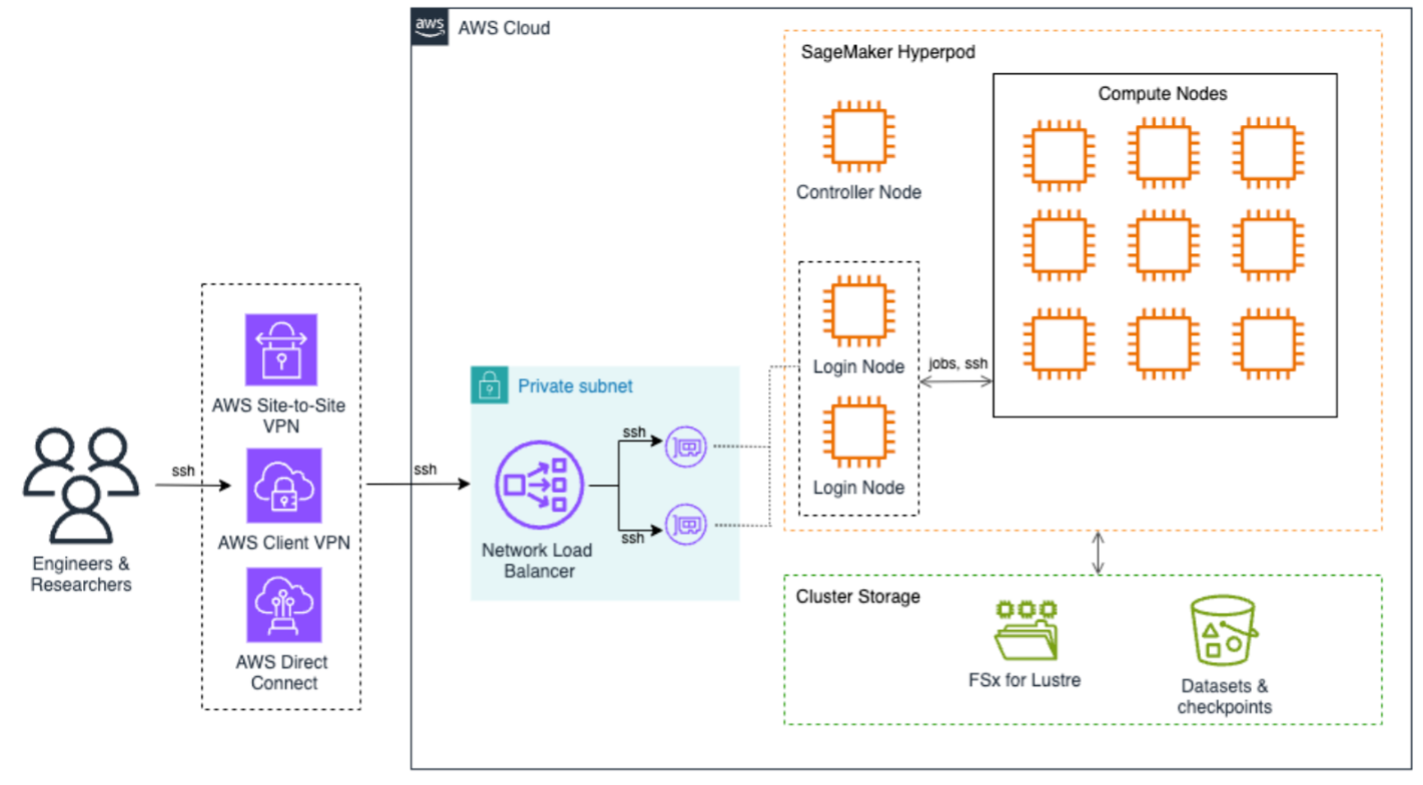

The architecture diagram illustrates how to access SageMaker HyperPod to submit jobs. End users can use AWS Site-to-Site VPN, AWS Client VPN, or AWS Direct Connect to securely access the SageMaker HyperPod cluster. These connections terminate on a Network Load Balancer that distributes SSH traffic to the login nodes, which are the primary entry points for job submission and cluster interaction. At the core of the architecture is SageMaker HyperPod compute: a controller node that orchestrates cluster operations and multiple compute nodes arranged in a grid configuration. This setup supports efficient distributed training workloads with high-speed interconnects between nodes, all contained within a private subnet for enhanced security.

The storage infrastructure is built around two main components: Amazon FSx for Lustre provides high-performance file system capabilities, and Amazon S3 provides dedicated storage for datasets and checkpoints. This dual-storage approach provides both fast data access for training workloads and secure persistence of valuable training artifacts.

The implementation consisted of several stages. In the following steps, we demonstrate how to deploy and configure the solution.

Prerequisites

Before deploying Amazon SageMaker HyperPod, make sure the following prerequisites are in place:

- AWS configuration:

- The AWS Command Line Interface (AWS CLI) configured with appropriate permissions

- Cluster configuration files prepared: cluster-config.json and provisioning-parameters.json

- Network setup:

- An AWS Identity and Access Management (IAM) role with permissions for the following:

Launch the CloudFormation stack

We launched an AWS CloudFormation stack to provision the necessary infrastructure components, including a VPC and subnet, FSx for Lustre file system, S3 bucket for lifecycle scripts and training data, and IAM roles with scoped permissions for cluster operation. Refer to the Amazon SageMaker HyperPod workshop for CloudFormation templates and automation scripts.

Customize SLURM cluster configuration

To align compute resources with departmental research needs, we created SLURM partitions that reflect the organizational structure, for example NLP, computer vision, and deep learning teams. We defined these custom partitions in slurm.conf. We enabled SLURM accounting by configuring slurmdbd and linking usage to departmental accounts and supervisors.
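As an illustration of department-aligned partitions, the following minimal Python sketch renders `PartitionName` lines in slurm.conf syntax. The partition names, node expressions, and time limits are hypothetical placeholders, not the university's actual values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """One SLURM partition. All values used below are hypothetical examples."""
    name: str
    nodes: str                     # SLURM hostlist expression, e.g. "gpu-node[1-4]"
    max_time: str = "1-00:00:00"   # days-hours:minutes:seconds
    default: bool = False


def render_partition(p: Partition) -> str:
    """Render a single PartitionName line for slurm.conf."""
    default = "YES" if p.default else "NO"
    return (
        f"PartitionName={p.name} Nodes={p.nodes} "
        f"Default={default} MaxTime={p.max_time} State=UP"
    )


def render_partitions(partitions: list[Partition]) -> str:
    """Render all partitions, rejecting more than one default partition."""
    if sum(p.default for p in partitions) > 1:
        raise ValueError("only one partition may be the default")
    return "\n".join(render_partition(p) for p in partitions)


if __name__ == "__main__":
    # Hypothetical departmental layout.
    print(render_partitions([
        Partition("nlp", "gpu-node[1-4]", default=True),
        Partition("cv", "gpu-node[5-8]"),
        Partition("dl", "gpu-node[9-12]", max_time="2-00:00:00"),
    ]))
```

Generating the lines from a small data structure keeps the partition layout reviewable in one place before it is written into slurm.conf.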

To support fractional GPU sharing and efficient utilization, we enabled Generic Resource (GRES) configuration. With GPU stripping, multiple users can access GPUs on the same node without contention. The GRES setup followed the guidelines from the Amazon SageMaker HyperPod workshop.
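A GRES setup generally declares each node's GPUs in gres.conf and advertises them on the node definition in slurm.conf. The Python sketch below renders both fragments. It only shows whole-GPU declaration, so that jobs can request a subset of a node's GPUs, and it is not the workshop's configuration. The node names, GPU type, and GPU count are assumptions.

```python
def gres_conf_line(nodes: str, gpu_type: str, gpus_per_node: int) -> str:
    """Render a gres.conf line that maps GPU device files to the 'gpu' GRES."""
    if gpus_per_node < 1:
        raise ValueError("gpus_per_node must be at least 1")
    if gpus_per_node == 1:
        devices = "/dev/nvidia0"
    else:
        devices = f"/dev/nvidia[0-{gpus_per_node - 1}]"
    return f"NodeName={nodes} Name=gpu Type={gpu_type} File={devices}"


def node_gres_fragment(gpu_type: str, gpus_per_node: int) -> str:
    """Render the Gres= fragment that belongs on the NodeName line in slurm.conf."""
    return f"Gres=gpu:{gpu_type}:{gpus_per_node}"


if __name__ == "__main__":
    # Hypothetical nodes with eight A100 GPUs each.
    print("GresTypes=gpu")                          # slurm.conf
    print(node_gres_fragment("a100", 8))            # slurm.conf, node definition
    print(gres_conf_line("gpu-node[1-4]", "a100", 8))  # gres.conf
```

With GPUs declared this way, a job can ask for part of a node, for example with `--gres=gpu:1`, and the scheduler places other jobs on the remaining devices.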

Provision and validate the cluster

We validated the cluster-config.json and provisioning-parameters.json files using the AWS CLI and a SageMaker HyperPod validation script:
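As a rough stand-in for that kind of check, the following Python sketch verifies that every instance group named in provisioning-parameters.json is defined in cluster-config.json. The key names (`InstanceGroups`, `InstanceGroupName`, `controller_group`, `login_group`, `worker_groups`, `instance_group_name`) are assumptions about the two files' layout, and this is not the HyperPod validation script itself.

```python
def check_configs(cluster_cfg: dict, prov_params: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issue found.

    The key names used here are assumed, not taken from the original post.
    """
    errors = []
    if not cluster_cfg.get("ClusterName"):
        errors.append("cluster-config.json: missing ClusterName")

    defined = {
        group.get("InstanceGroupName")
        for group in cluster_cfg.get("InstanceGroups", [])
    }

    # Collect every instance group the provisioning parameters refer to.
    referenced = [prov_params.get("controller_group")]
    if prov_params.get("login_group"):
        referenced.append(prov_params["login_group"])
    referenced += [
        worker.get("instance_group_name")
        for worker in prov_params.get("worker_groups", [])
    ]

    for name in referenced:
        if name not in defined:
            errors.append(
                f"provisioning-parameters.json: unknown instance group {name!r}"
            )
    return errors


if __name__ == "__main__":
    # Hypothetical, trimmed-down file contents.
    cluster = {
        "ClusterName": "research-cluster",
        "InstanceGroups": [
            {"InstanceGroupName": "controller"},
            {"InstanceGroupName": "gpu-workers"},
        ],
    }
    params = {
        "controller_group": "controller",
        "worker_groups": [
            {"instance_group_name": "gpu-workers", "partition_name": "nlp"}
        ],
    }
    print(check_configs(cluster, params) or "no problems found")
```

In practice the two dictionaries would come from `json.load` on the real files.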

Then we created the cluster:
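One way to create a cluster from a prepared configuration file is the AWS CLI `aws sagemaker create-cluster` command with `--cli-input-json`. The Python sketch below only assembles that command so it can be reviewed before it runs. The Region value is a placeholder, and the exact invocation used in this deployment is an assumption.

```python
import shlex
from typing import Optional


def create_cluster_command(
    config_path: str = "cluster-config.json",
    region: Optional[str] = None,
) -> list[str]:
    """Build the argv list for creating a HyperPod cluster with the AWS CLI."""
    cmd = [
        "aws", "sagemaker", "create-cluster",
        "--cli-input-json", f"file://{config_path}",
    ]
    if region:
        cmd += ["--region", region]
    return cmd


if __name__ == "__main__":
    # "us-east-1" is a placeholder Region, not the one used in the post.
    print(shlex.join(create_cluster_command(region="us-east-1")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would execute the command with the credentials configured for the AWS CLI.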

Implement cost tracking and budget enforcement

To monitor usage and control costs, each SageMaker HyperPod resource (for example, Amazon EC2, FSx for Lustre, and others) was tagged with a unique ClusterName tag. AWS Budgets and AWS Cost Explorer reports were configured to track monthly spending per cluster. Additionally, alerts were set up to notify researchers if they approached their quota or budget thresholds.

This integration helped facilitate efficient utilization and predictable research spending.
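The alerting logic can be pictured with a small Python sketch that compares month-to-date spend per `ClusterName` tag against a budget. The 80% warning threshold, the cluster names, and the dollar figures are illustrative assumptions. In the deployment described here, AWS Budgets performs this check.

```python
def budget_alerts(
    spend_by_cluster: dict[str, float],
    budget_by_cluster: dict[str, float],
    warn_at: float = 0.8,
) -> list[tuple[str, str, float]]:
    """Return (cluster, status, fraction_used) for clusters near or over budget."""
    alerts = []
    for cluster, budget in sorted(budget_by_cluster.items()):
        if budget <= 0:
            raise ValueError(f"budget for {cluster!r} must be positive")
        used = spend_by_cluster.get(cluster, 0.0) / budget
        if used >= 1.0:
            alerts.append((cluster, "over budget", used))
        elif used >= warn_at:
            alerts.append((cluster, "approaching budget", used))
    return alerts


if __name__ == "__main__":
    # Hypothetical month-to-date spend and budgets in USD, keyed by ClusterName tag.
    spend = {"nlp": 850.0, "cv": 1200.0, "dl": 100.0}
    budgets = {"nlp": 1000.0, "cv": 1000.0, "dl": 1000.0}
    for cluster, status, used in budget_alerts(spend, budgets):
        print(f"{cluster}: {status} ({used:.0%} of budget)")
```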

Enable load balancing for login nodes

As the number of concurrent users increased, the university adopted a multi-login node architecture. Two login nodes were deployed in EC2 Auto Scaling groups. A Network Load Balancer was configured with target groups to route SSH and Systems Manager traffic. Lastly, AWS Lambda functions enforced session limits per user using Run-As tags with Session Manager, a capability of Systems Manager.

For details about the full implementation, see Implementing login node load balancing in SageMaker HyperPod for enhanced multi-user experience.

Configure federated access and user mapping

To facilitate secure and seamless access for researchers, the institution integrated AWS IAM Identity Center with their on-premises Active Directory (AD) using AWS Directory Service. This allowed for unified control and administration of user identities and access privileges across SageMaker HyperPod accounts. The implementation consisted of the following key components:

- Federated user integration – We mapped AD users to POSIX user names using Session Manager run-as tags, allowing fine-grained control over compute node access

- Secure session management – We configured Systems Manager to make sure users access compute nodes using their own accounts, not the default ssm-user

- Identity-based tagging – Federated user names were automatically mapped to user directories, workloads, and budgets through resource tags

For full step-by-step guidance, refer to the Amazon SageMaker HyperPod workshop.

This approach streamlined user provisioning and access control while maintaining strong alignment with institutional policies and compliance requirements.

Post-deployment optimizations

To help prevent unnecessary consumption of compute resources by idle sessions, the university configured SLURM with Pluggable Authentication Modules (PAM). This setup enforces automatic logout for users after their SLURM jobs are complete or canceled, supporting prompt availability of compute nodes for queued jobs.

The configuration improved job scheduling throughput by freeing idle nodes immediately and reduced administrative overhead in managing inactive sessions.

Additionally, QoS policies were configured to control resource consumption, limit job durations, and enforce fair GPU access across users and departments. For example:

- MaxTRESPerUser – Makes sure GPU or CPU usage per user stays within defined limits

- MaxWallDurationPerJob – Helps prevent excessively long jobs from monopolizing nodes

- Priority weights – Aligns priority scheduling based on research group or project
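QoS limits such as these are typically defined with `sacctmgr`. The following Python sketch assembles one such command. The QoS name, GPU limit, wall-time limit, and priority are hypothetical values, and `MaxWall` is the short alias for `MaxWallDurationPerJob`.

```python
def qos_command(
    name: str,
    max_gpus_per_user: int,
    max_wall: str,
    priority: int,
) -> list[str]:
    """Build a sacctmgr argv list that creates a QoS with the given limits."""
    return [
        "sacctmgr", "--immediate", "add", "qos", name,
        f"MaxTRESPerUser=gres/gpu={max_gpus_per_user}",
        f"MaxWall={max_wall}",      # alias of MaxWallDurationPerJob
        f"Priority={priority}",
    ]


if __name__ == "__main__":
    # Hypothetical QoS for an NLP research group: at most 4 GPUs per user,
    # jobs capped at one day, and a priority weight of 100.
    print(" ".join(qos_command("nlp-standard", 4, "1-00:00:00", 100)))
```

A QoS created this way still has to be attached to the relevant accounts or partitions before the scheduler enforces it.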

These enhancements facilitated an optimized, balanced HPC environment that aligns with the shared infrastructure model of academic research institutions.

Clean up

To delete the resources and avoid incurring ongoing charges, complete the following steps:

- Delete the SageMaker HyperPod cluster:

- Delete the CloudFormation stack used for the SageMaker HyperPod infrastructure:

This will automatically remove associated resources, such as the VPC and subnets, FSx for Lustre file system, S3 bucket, and IAM roles. If you created these resources outside of CloudFormation, you must delete them manually.
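The two deletions map to the AWS CLI commands `aws sagemaker delete-cluster` and `aws cloudformation delete-stack`. The Python sketch below assembles them in order. The cluster and stack names are placeholders, and the exact commands used in this deployment are an assumption.

```python
def cleanup_commands(cluster_name: str, stack_name: str) -> list[list[str]]:
    """Return the AWS CLI argv lists for cleanup, cluster first and stack second."""
    return [
        ["aws", "sagemaker", "delete-cluster", "--cluster-name", cluster_name],
        ["aws", "cloudformation", "delete-stack", "--stack-name", stack_name],
    ]


if __name__ == "__main__":
    # Placeholder names; substitute the real cluster and stack names.
    for cmd in cleanup_commands("research-cluster", "hyperpod-infra"):
        print(" ".join(cmd))
```

Deleting the cluster before the stack avoids removing the VPC and file system while cluster nodes still depend on them.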

Conclusion

SageMaker HyperPod provides research universities with a powerful, fully managed HPC solution tailored for the unique demands of AI workloads. By automating infrastructure provisioning, scaling, and resource optimization, institutions can accelerate innovation while maintaining budget control and operational efficiency. Through customized SLURM configurations, GPU sharing using GRES, federated access, and robust login node balancing, this solution highlights the potential of SageMaker HyperPod to transform research computing, so researchers can focus on science, not infrastructure.

For more details on making the most of SageMaker HyperPod, check out the SageMaker HyperPod workshop and explore further blog posts about SageMaker HyperPod.

About the authors

Tasneem Fathima is Senior Solutions Architect at AWS. She supports Higher Education and Research customers in the United Arab Emirates to adopt cloud technologies, improve their time to science, and innovate on AWS.

Mohamed Hossam is a Senior HPC Cloud Solutions Architect at Brightskies, specializing in high-performance computing (HPC) and AI infrastructure on AWS. He supports universities and research institutions across the Gulf and Middle East in harnessing GPU clusters, accelerating AI adoption, and migrating HPC/AI/ML workloads to the AWS Cloud. In his free time, Mohamed enjoys playing video games.

Better Artificial Intelligence Stock: Nvidia vs. Intel

Can investors expect better results from Intel now that the federal government has taken a stake in it?

There are plenty of ways to play the artificial intelligence (AI) craze that’s dominating Wall Street these days. The tried-and-true stock is Nvidia (NVDA), the designer of the advanced chips that are the tech world’s most popular choices for running large language models, generative AI, and other cutting-edge functions. Nvidia has made a lot of investors richer over the last few years, and has now grown to become the largest publicly traded company in the world, with a market capitalization approaching $4.4 trillion.

But another possible pick for tech sector investors is Intel (INTC), which is more of a legacy computing company. Intel has lagged badly in the AI race, particularly with its foundry division, but it could benefit from the recent investment by the U.S. government, which has taken a roughly 10% stake in the company.

Intel stock is up by 20% so far in 2025. Could it be a better AI investment from here than Nvidia?

The market position for Nvidia

Nvidia’s graphics processing units (GPUs) are the industry standard for the computing power required to train AI models and deploy them in real-world applications. Its CUDA parallel computing platform lets developers write code and build applications on Nvidia GPUs. Every GPU is a parallel processor capable of performing thousands of operations at once. The CUDA platform helps developers take certain types of computationally heavy processes and divide them into small individual threads that such chips can handle separately and simultaneously. The results are faster processing times and a more efficient use of computing resources.

That’s particularly important because it keeps hyperscalers and other developers locked into the Nvidia platform when they take their projects live — because CUDA can only be run on Nvidia’s chips. Its Hopper GPUs were the gold standard for GPUs, but now it’s selling its new Blackwell architecture chips, which deliver faster performance with lower power consumption. Blackwell sales generated $11 billion for Nvidia in the first quarter they were available — its fiscal 2025 Q4, which ended Jan. 26 — and boomed to $27 billion in the first quarter of its fiscal 2026. Blackwell sales rose another 17% to roughly $31.6 billion in fiscal Q2, which ended July 27. That was about 76% of the company’s data center sales. CEO Jensen Huang described demand for the Blackwell GPUs as “extraordinary.”

The market position for Intel

Intel, meanwhile, is the market leader in the data center central processing unit (CPU) space, but it’s facing serious challenges from rivals Advanced Micro Devices and Arm Holdings. Analysts with Mercury Research and International Data Corporation (IDC) predict that Intel’s market share will slip to 55% this year as AMD’s rises to 36%. Further, they project that Intel’s market share will fall below 50% by 2027, with AMD getting about 40% and Arm getting between 10% and 12% of the market.

Intel has also been attempting to build up its third-party foundry business, but that unit has struggled to find its footing. While Taiwan Semiconductor Manufacturing is still getting the lion’s share of the world’s chip fabrication business, Intel has had trouble landing clients. Management has announced that it’s shelving its plans to build chip foundries in Germany and Poland, and will slow the pace of construction at its foundry project in Ohio.

The company is investing more than $100 billion in its domestic foundry business, with its next plant expected to open this year in Arizona.

“We are also taking the actions needed to build a more financially disciplined foundry,” CEO Lip-Bu Tan said in the fiscal Q2 earnings press release. “It’s going to take time, but we see clear opportunities to enhance our competitive position, improve our profitability and create long-term shareholder value.”

What’s moving Intel stock now

While Intel is in a weaker financial position than Nvidia, some investors are speculating that it could be hitting a bottom, especially now that the U.S. government has taken a stake in the business. The Trump administration announced in August that it would purchase 433.3 million shares of Intel stock, taking a 9.9% stake in the company. The U.S. also gets a five-year warrant, exercisable at $20 per share, for an additional 5% of shares should Intel cease to own a majority of its foundry business.

These moves are part of a push by Washington to encourage the development and manufacturing of high-end semiconductors in the U.S.

“As the only semiconductor company that does leading-edge logic R&D and manufacturing in the U.S., Intel is deeply committed to ensuring the world’s most advanced technologies are American made,” Tan said.

There’s still skepticism about Intel

Investors have already baked some high expectations into Intel’s stock price. Its forward price-to-earnings ratio, which a couple of years ago was roughly in line with Nvidia’s, has surged higher since then, and is now approaching 200, while Nvidia trades at a more reasonable 38.

NVDA PE Ratio (Forward) data by YCharts.

Intel’s stock hasn’t traded at levels like this in two decades. “The stock looks incredibly expensive here,” Wayne Kaufman, chief market analyst at Phoenix Financial Services, told Bloomberg. “That kind of multiple is a bet that the government will push Intel so hard on customers that it becomes a winner.”

Most analysts who revisited Intel following the Trump administration announcement reiterated their hold positions, but also are projecting significant downside for the stock. Bernstein’s Stacy Rasgon has a $21 12-month price target on Intel, which would amount to a roughly 12% downside, while TD Cowen’s Joshua Buchalter has a $20 price target.

Intel has had a net loss of $21 billion over its last four reported quarters, and I don’t see a path for the company to turn its finances around abruptly enough to justify its frothy forward P/E. While its still-downtrodden share price might represent a buying opportunity for investors, I think it’s a shaky bet at best considering that Intel is playing catch-up in AI.

Intel’s new government backing gives it a potential tailwind, but Nvidia’s leadership in GPUs, its CUDA platform, and its AI infrastructure make it a safer bet for long-term investors.

Patrick Sanders has positions in Nvidia. The Motley Fool has positions in and recommends Advanced Micro Devices, Intel, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends the following options: short August 2025 $24 calls on Intel and short November 2025 $21 puts on Intel. The Motley Fool has a disclosure policy.
