Events & Conferences

How a lifelong music student uses melody and lyrics in TTS research

Ariadna Sanchez grew up steeped in the world of music performance and orchestra, learning to play violin at age 5 and setting her sights on a life in music. Today she’s a text-to-speech (TTS) research scientist at Amazon — and those early musical interests contributed to her career path.

Sanchez works in polyglot TTS, which involves researching voice models that can speak any language with a native accent. Ultimately, TTS is a mixed discipline — not just engineering or purely tech — and Sanchez says her musical background leads her to find novel solutions or look at problems in a unique way.

Linking music with tech

Growing up with a music-intensive education in Barcelona, Spain, Sanchez was thinking ahead to university by the time she was 15, and she wanted to find a degree program with a connection to music. She found one in the telecommunications engineering department at Barcelona’s Polytechnic University of Catalonia, where one of the branches was speech, music, and video processing. She was also intrigued by the program’s AI and machine learning offerings.


At the time, she was focused on music and how it could be applied to machine learning. One of her professors was working on creating a voice that could be modulated in different ways to sound more human, combining language and technological elements.

“That got me into the path of realizing ‘Oh, I actually really like this TTS side of things,’” she says. An internship at an acoustics consultancy also helped her realize that she wanted to do work where she could look for breakthroughs and “discover new things,” she says.

In her senior thesis, she combined those interests to develop an audio-based game. Especially attracted to well-written, story-based games, Sanchez says she plays all types of video games, which are a hobby and a passion for her.

“I tried to understand how acoustics from different environments can affect the player’s perception and how the player can enjoy and navigate through an audio-only game,” she says.

The path to TTS research

An internship at Telefónica, where her work involved machine learning that focused on text-based natural language processing, helped determine the next steps on her journey. After finishing her undergraduate degree, she pursued a master’s in speech and language processing at the University of Edinburgh in Scotland.


There she studied natural language understanding, human-computer interaction, text-to-speech, and automatic speech recognition.

“I found TTS to be more engaging, overall,” she says. “Speech is not only about what you say, but also how you say it, how the person speaking sounds, et cetera.”

Sanchez took it upon herself to learn more about the nuances of languages including English, Scottish Gaelic, and Japanese. She links her fascination with that subject to her longtime interest in a wide variety of music, from punk to classical to mainstream pop and fusion styles. Her TTS research also piqued her interest in learning about languages and how they differ from each other.

“I have always really liked melodic music with lyrics, which made me intrigued about the nuances of language, how lyrics are composed and semantics of language,” she says. “It also made me really invested in learning languages to be able to understand the music I listen to.”

When Amazon recruiters visited the University of Edinburgh as Sanchez was finishing her degree, they were looking for a language engineer fluent in Spanish and hired her as a language engineer intern.

That internship resulted in a full-time role at Amazon.

“My background is mostly on the engineering side, so I not only built more skills on the linguistics front during my internship, but I also learned a lot about how a team cooperates, and how important prioritization is to make a project successful.”

Many accents, one voice

Now, almost four years into her job as a research scientist, Sanchez focuses on providing a more uniform voice experience. In the past, new languages and accents on Echo devices have had distinct voices, such as American Spanish and European Spanish, which sound like two different people. The goal of Sanchez’s research is to design models that pronounce words from various languages with the correct local accent, but in the same voice, for continuity.


“If you have a multilingual household like mine, it’s a bit weird to have different voices speaking different languages,” she noted. “But if you have the same person speaking all these different languages back to you, it sounds less jarring.” She and her team have already proven this can work by having the British English and American English masculine-sounding speakers now using the same voice.

Sanchez says that her work is also influenced by her reading about technology ethics, especially works by authors Cathy O’Neil and Caroline Criado Perez.

“Providing more voice options is important,” she says. “Having a bigger range of voices brings more variety and brings more validation to different communities.” To that end, her team works on developing polyglot voices that represent a wider range of voices and speaking styles.

In September of this year, Sanchez presented “Unify and conquer: How phonetic feature representation affects polyglot text-to-speech (TTS)” at Interspeech 2022. The paper — one of three accepted to Interspeech — explored the two primary approaches to representing linguistic features within a multilingual model.

In the paper, Sanchez and her coauthors note that: “The main contribution of this paper lies in the experimentation and evaluation aimed at understanding how unified representations and separate representations of input linguistic features affect the quality of Polyglot synthesis in terms of naturalness and accent of the voice. To the best of our knowledge, this is the first work conducting a systematic study and evaluation on this subject.”

“When we were looking at our design choices for multispeaker multilingual models, we did not find any literature that compared different types of linguistic features thoroughly,” she says. “We decided to explore and write about two very distinct approaches to representing input linguistic features — unifying them based on phonetic knowledge, or separating all tokens that represent phonemes from different languages/accents. With this, we found that using a unified representation led to more natural and stable speech, while also having cleaner accent.”

And while this was an important step, Sanchez emphasized there are several more steps to take: “To move forward in the field, we need to improve the control we have on speech parameters, like pitch, intonation, tone, and timbre, in isolation.”

She and her team continue to work toward even more natural-sounding speech that is closer to the way people actually speak.

“We’re at a very exciting point of text-to-speech, where we are moving away from the old TTS systems that sounded robotic towards a more approachable and friendly voice,” she says. “In the end, that is an important factor that allows our customers to have more engaging conversations with Alexa every day.”


Simplifying book discovery with ML-powered visual autocomplete suggestions

Every day, millions of customers search for books in various formats (audiobooks, e-books, and physical books) across Amazon and Audible. Traditional keyword autocomplete suggestions, while helpful, usually require several steps before customers find their desired content. Audible took on the challenge of making book discovery more intuitive and personalized while reducing the number of steps to purchase.

We developed an instant visual autocomplete system that enhances the search experience across Amazon and Audible. As the user begins typing a query, our solution provides visual previews with book covers, enabling direct navigation to relevant landing pages instead of the search result page. It also delivers real-time personalized format recommendations and incorporates multiple searchable entities, such as book pages, author pages, and series pages.

Our system needed to understand user intent from just a few keystrokes and determine the most relevant books to display, all while maintaining low latency for millions of queries. Using historical search data, we match keystrokes to products, transforming partial inputs into meaningful search suggestions. To ensure quality, we implemented confidence-based filtering mechanisms, which are particularly important for distinguishing between general queries like “mystery” and specific title searches. To reflect customers’ most recent interests, the system applies time-decay functions to long historical user interaction data.
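As a rough illustration of two ideas in the paragraph above, here is a minimal Python sketch of an exponential time-decay weight and a confidence filter. The half-life, the `min_share` threshold, and both function names are assumptions made for this example; the source does not specify the decay function or the filtering rule actually used.

```python
import math

def decayed_score(event_ages_days, half_life_days=30.0):
    """Sum interaction weights, where each weight halves every `half_life_days`."""
    decay_rate = math.log(2) / half_life_days  # assumed exponential decay
    return sum(math.exp(-decay_rate * age) for age in event_ages_days)

def confident_match(clicks_by_product, min_share=0.6):
    """Return the top product for a prefix only if it dominates past clicks."""
    total = sum(clicks_by_product.values())
    if total == 0:
        return None
    product, clicks = max(clicks_by_product.items(), key=lambda kv: kv[1])
    # A broad query spreads clicks over many products and fails this check.
    return product if clicks / total >= min_share else None

recent = decayed_score([0, 1, 2])        # three interactions this week
stale = decayed_score([300, 301, 302])   # three interactions last year
```

With these assumptions, the same number of interactions counts for far less when they are old, and a broad prefix whose clicks are spread evenly across many titles yields no visual suggestion.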

To meet the unique requirements of each use case, we developed two distinct technical approaches. On Audible, we deployed a deep pairwise-learning-to-rank (DeepPLTR) model. The DeepPLTR model considers pairs of books and learns to assign a higher score to the one that better matches the customer query.

The DeepPLTR model’s architecture consists of three specialized towers. The left tower factors in contextual features and recent search patterns using a long short-term memory (LSTM) model, which processes data sequentially and considers its prior decisions when issuing a new term in the sequence. The middle tower handles keyword and item engagement history. The right tower factors in customer taste preferences and product descriptions to enable personalization. The model learns from paired examples, but at runtime, it relies on books’ absolute scores to assemble a ranked list.
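The split between pairwise training and score-based serving can be sketched in a few lines of Python. The logistic (RankNet-style) loss below is an assumption chosen for illustration, since the source does not name the loss function, and the scores stand in for whatever the three towers would produce.

```python
import math

def pairwise_logistic_loss(score_preferred, score_other):
    """Training signal: small when the preferred book outscores the other one."""
    return math.log1p(math.exp(-(score_preferred - score_other)))

def rank(scores_by_book):
    """Serving: no pairs needed, just sort books by their absolute scores."""
    return sorted(scores_by_book, key=scores_by_book.get, reverse=True)

# Hypothetical model scores for three candidate books.
ranked = rank({"book_a": 0.1, "book_b": 0.9, "book_c": 0.5})
```

Because the loss depends only on the score difference within a pair, the model is free to learn any scale for its scores. At runtime a single forward pass per book is enough to order the candidates.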

For Amazon, we implemented a two-stage modeling approach involving a probabilistic information-retrieval model to determine the book title that best matches each keyword and a second model that personalizes the book format (audiobooks, e-books, and physical books). This dual-strategy approach maintains low latency while still enabling personalization.

In practice, a customer who types “dungeon craw” in the search bar now sees a visual recommendation for the book Dungeon Crawler Carl, complete with book cover, reducing friction by bypassing a search results page and sending the customer directly to the product detail page. On Audible, the system also personalizes autocomplete results and enriches the discovery experience with relevant connections. These include links to the author’s complete works (Matt Dinniman’s author page) and, for titles that belong to a series, links to the full collection (such as the Dungeon Crawler Carl series).

On Amazon, when the customer clicks on the title, the model personalizes the book-format recommendation (audiobook, e-book, or physical book) and directs the customer to the corresponding product detail page.

In both cases, after the customer has entered a certain number of keystrokes, the system employs a model to detect customer intent (e.g., book title intent for Amazon or author intent for Audible) and determine which visual widget should be displayed.

Audible and Amazon books’ visual autocomplete provides customers with more relevant content more rapidly than traditional autocomplete, and its direct navigation reduces the number of steps to find and access desired books — all while handling millions of queries at low latency.

This technology is not just about making book discovery easier; it is laying the foundation for future improvements in search personalization and visual discovery across Amazon’s ecosystem.

Acknowledgements: Jiun Kim, Sumit Khetan, Armen Stepanyan, Jack Xuan, Nathan Brothers, Eddie Chen, Vincent Lee, Soumy Ladha, Justine Luo, Yuchen Zeng, David Torres, Gali Deutsch, Chaitra Ramdas, Christopher Gomez, Sharmila Tamby, Melissa Ma, Cheng Luo, Jeffrey Jiang, Pavel Fedorov, Ronald Denaux, Aishwarya Vasanth, Azad Bajaj, Mary Heer, Adam Lowe, Jenny Wang, Cameron Cramer, Emmanuel Ankrah, Lydia Diaz, Suzette Islam, Fei Gu, Phil Weaver, Huan Xue, Kimmy Dai, Evangeline Yang, Chao Zhu, Anvy Tran, Jessica Wu, Xiaoxiong Huang, Jiushan Yang


Revolutionizing warehouse automation with scientific simulation

Modern warehouses rely on complex networks of sensors to enable safe and efficient operations. These sensors must detect everything from packages and containers to robots and vehicles, often in changing environments with varying lighting conditions. More important for Amazon, the sensors must also detect barcodes efficiently.

The Amazon Robotics ID (ARID) team focuses on solving this problem. When we first started working on it, we faced a significant bottleneck: optimizing sensor placement required weeks or months of physical prototyping and real-world testing, severely limiting our ability to explore innovative solutions.

To transform this process, we developed Sensor Workbench (SWB), a sensor simulation platform built on NVIDIA’s Isaac Sim that combines parallel processing, physics-based sensor modeling, and high-fidelity 3-D environments. By providing virtual testing environments that mirror real-world conditions with unprecedented accuracy, SWB allows our teams to explore hundreds of configurations in the same amount of time it previously took to test just a few physical setups.

Camera and target selection/positioning

Sensor Workbench users can select different cameras and targets and position them in 3-D space to receive real-time feedback on barcode decodability.

Three key innovations enabled SWB: a specialized parallel-computing architecture that performs simulation tasks across the GPU; a custom CAD-to-OpenUSD (Universal Scene Description) pipeline; and the use of OpenUSD as the ground truth throughout the simulation process.

Parallel-computing architecture

Our parallel-processing pipeline leverages NVIDIA’s Warp library with custom computation kernels to maximize GPU utilization. By maintaining 3-D objects persistently in GPU memory and updating transforms only when objects move, we eliminate redundant data transfers. We also perform computations only when needed — when, for instance, a sensor parameter changes, or something moves. By these means, we achieve real-time performance.
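The "compute only when needed" idea can be illustrated with a dirty-flag cache. This is a plain CPU-side Python sketch under our own assumptions, not the Warp kernels described above; the class name and the toy metric are hypothetical.

```python
class CachedSensorMetric:
    """Recomputes a metric only after its inputs have actually changed."""

    def __init__(self, compute):
        self._compute = compute   # hypothetical expensive function
        self._inputs = None
        self._value = None
        self._dirty = True
        self.evaluations = 0      # counts real recomputations

    def set_inputs(self, inputs):
        if inputs != self._inputs:   # mark dirty only on a real change
            self._inputs = inputs
            self._dirty = True

    def value(self):
        if self._dirty:
            self._value = self._compute(self._inputs)
            self.evaluations += 1
            self._dirty = False
        return self._value

metric = CachedSensorMetric(lambda pose: sum(pose))  # toy stand-in metric
metric.set_inputs((1, 2, 3))
first = metric.value()
metric.set_inputs((1, 2, 3))   # same pose: no recomputation
second = metric.value()
metric.set_inputs((1, 2, 4))   # sensor moved: recompute once
third = metric.value()
```

Repeated reads and no-op updates cost nothing here; only a genuine change to the inputs triggers new work.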

Visualization methods

Sensor Workbench users can pick sphere- or plane-based visualizations, to see how the positions and rotations of individual barcodes affect performance.

This architecture allows us to perform complex calculations for multiple sensors simultaneously, enabling instant feedback in the form of immersive 3-D visuals. As teams adjust sensor positions and parameters in the environment, those visuals represent the metrics that barcode-detection machine-learning models need in order to work.

CAD to USD

Our second innovation involved developing a custom CAD-to-OpenUSD pipeline that automatically converts detailed warehouse models into optimized 3-D assets. Our CAD-to-USD conversion pipeline replicates the structure and content of models created in the modeling program SolidWorks with a 1:1 mapping. We start by extracting essential data — including world transforms, mesh geometry, material properties, and joint information — from the CAD file. The full assembly-and-part hierarchy is preserved so that the resulting USD stage mirrors the CAD tree structure exactly.

To ensure modularity and maintainability, we organize the data into separate USD layers covering mesh, materials, joints, and transforms. This layered approach ensures that the converted USD file faithfully retains the asset structure, geometry, and visual fidelity of the original CAD model, enabling accurate and scalable integration for real-time visualization, simulation, and collaboration.

OpenUSD as ground truth

The third important factor was our novel approach to using OpenUSD as the ground truth throughout the entire simulation process. We developed custom schemas that extend beyond basic 3-D-asset information to include enriched environment descriptions and simulation parameters. Our system continuously records all scene activities — from sensor positions and orientations to object movements and parameter changes — directly into the USD stage in real time. We even maintain user interface elements and their states within USD, enabling us to restore not just the simulation configuration but the complete user interface state as well.

This architecture ensures that when USD initial configurations change, the simulation automatically adapts without requiring modifications to the core software. By maintaining this live synchronization between the simulation state and the USD representation, we create a reliable source of truth that captures the complete state of the simulation environment, allowing users to save and re-create simulation configurations exactly as needed. The interfaces simply reflect the state of the world, creating a flexible and maintainable system that can evolve with our needs.

Application

With SWB, our teams can now rapidly evaluate sensor mounting positions and verify overall concepts in a fraction of the time previously required. More importantly, SWB has become a powerful platform for cross-functional collaboration, allowing engineers, scientists, and operational teams to work together in real time, visualizing and adjusting sensor configurations while immediately seeing the impact of their changes and sharing their results with each other.

New perspectives

In projection mode, an explicit target is not needed. Instead, Sensor Workbench uses the whole environment as a target, projecting rays from the camera to identify locations for barcode placement. Users can also switch between a comprehensive three-quarters view and the perspectives of individual cameras.

Due to the initial success in simulating barcode-reading scenarios, we have expanded SWB’s capabilities to incorporate high-fidelity lighting simulations. This allows teams to iterate on new baffle and light designs, further optimizing the conditions for reliable barcode detection, while ensuring that lighting conditions are safe for human eyes, too. Teams can now explore various lighting conditions, target positions, and sensor configurations simultaneously, gleaning insights that would take months to accumulate through traditional testing methods.

Looking ahead, we are working on several exciting enhancements to the system. Our current focus is on integrating more-advanced sensor simulations that combine analytical models with real-world measurement feedback from the ARID team, further increasing the system’s accuracy and practical utility. We are also exploring the use of AI to suggest optimal sensor placements for new station designs, which could potentially identify novel configurations that users of the tool might not consider.

Additionally, we are looking to expand the system to serve as a comprehensive synthetic-data generation platform. This will go beyond just simulating barcode-detection scenarios, providing a full digital environment for testing sensors and algorithms. This capability will let teams validate and train their systems using diverse, automatically generated datasets that capture the full range of conditions they might encounter in real-world operations.

By combining advanced scientific computing with practical industrial applications, SWB represents a significant step forward in warehouse automation development. The platform demonstrates how sophisticated simulation tools can dramatically accelerate innovation in complex industrial systems. As we continue to enhance the system with new capabilities, we are excited about its potential to further transform and set new standards for warehouse automation.


Enabling Kotlin Incremental Compilation on Buck2

The Kotlin incremental compiler has been a true gem for developers chasing faster compilation since its introduction in build tools. Now, we’re excited to bring its benefits to Buck2 – Meta’s build system – to unlock even more speed and efficiency for Kotlin developers.

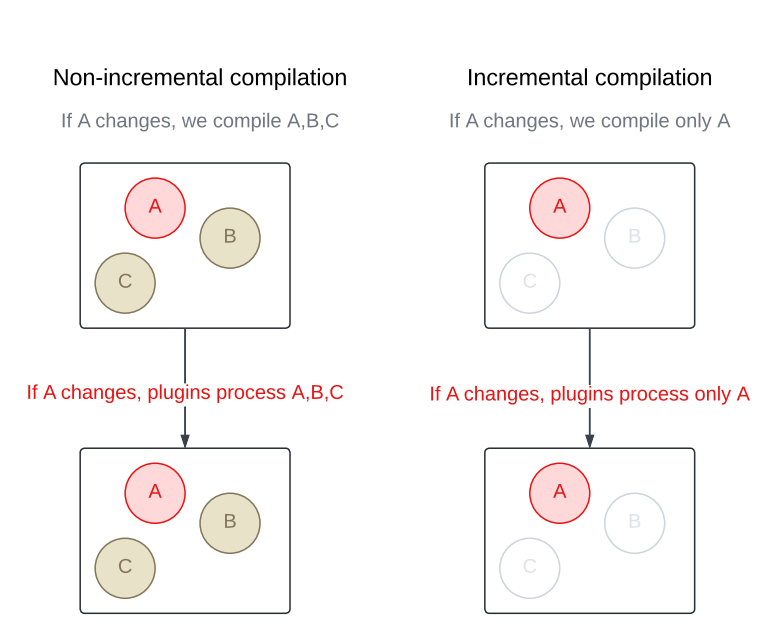

Unlike a traditional compiler that recompiles an entire module every time, an incremental compiler focuses only on what was changed. This cuts down compilation time in a big way, especially when modules contain a large number of source files.

Buck2 promotes small modules as a key strategy for achieving fast build times. Our codebase followed that principle closely, and for a long time, it worked well. With only a handful of files in each module, and Buck2’s support for fast incremental builds and parallel execution, incremental compilation didn’t seem like something we needed.

But, let’s be real: Codebases grow, teams change, and reality sometimes drifts away from the original plan. Over time, some modules started getting bigger – either from legacy or just organic growth. And while big modules were still the exception, they started having quite an impact on build times.

So we gave the Kotlin incremental compiler a closer look – and we’re glad we did. The results? Some critical modules now build up to 3x faster. That’s a big win for developer productivity and overall build happiness.

Curious about how we made it all work in Buck2? Keep reading. We’ll walk you through the steps we took to bring the Kotlin incremental compiler to life in our Android toolchain.

Step 1: Integrating Kotlin’s Build Tools API

As of Kotlin 2.2.0, the only guaranteed public contract to use the compiler is through the command-line interface (CLI). But since the CLI doesn’t support incremental compilation (at least for now), it didn’t meet our needs. Alternatively, we could integrate the Kotlin incremental compiler directly via the internal compiler’s components – APIs that are technically accessible but not intended for public use. However, relying on them would’ve made our toolchain fragile and likely to break with every Kotlin update since there’s no guarantee of backward compatibility. That didn’t seem like the right path either.

Then we came across the Build Tools API (KEEP), introduced in Kotlin 1.9.20 as the official integration point for the compiler – including support for incremental compilation. Although the API was still marked as experimental, we decided to give it a try. We knew it would eventually stabilize, and saw it as a great opportunity to get in early, provide feedback, and help shape its direction. Compared to using internal components, it offered a far more sustainable and future-proof approach to integration.

⚠️ Depending on kotlin-compiler? Watch out!

In the Java world, a shaded library is a modified version of the library where the class and package names are changed. This process – called shading – is a handy way to avoid classpath conflicts, prevent version clashes between libraries, and keep internal details from leaking out.

Here’s a quick example:

- Unshaded (original) class: com.intellij.util.io.DataExternalizer

- Shaded class: org.jetbrains.kotlin.com.intellij.util.io.DataExternalizer

The Build Tools API depends on the shaded version of the Kotlin compiler (kotlin-compiler-embeddable). But our Android toolchain was historically built with the unshaded one (kotlin-compiler). That mismatch led to java.lang.NoClassDefFoundError crashes when testing the integration because the shaded classes simply weren’t on the classpath.

Replacing the unshaded compiler across the entire Android toolchain would’ve been a big effort. So to keep moving forward, we went with a quick workaround: We unshaded the Build Tools API instead. 🙈 Using the jarjar library, we stripped the org.jetbrains.kotlin prefix from class names and rebuilt the library.
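To make the renaming concrete, here’s a tiny Python sketch of what shading and unshading do to a fully qualified class name. It only mimics the name mapping. It is not how jarjar is configured or invoked, and the list of relocated packages is an assumption based on the single example above.

```python
SHADE_PREFIX = "org.jetbrains.kotlin."
RELOCATED_ROOTS = ("com.intellij.",)  # assumption: packages moved by shading

def shade(class_name):
    """Relocate a third-party class under the Kotlin compiler's package."""
    if class_name.startswith(RELOCATED_ROOTS):
        return SHADE_PREFIX + class_name
    return class_name

def unshade(class_name):
    """Undo the relocation, leaving genuine Kotlin compiler classes untouched."""
    if class_name.startswith(SHADE_PREFIX):
        original = class_name[len(SHADE_PREFIX):]
        if original.startswith(RELOCATED_ROOTS):
            return original
    return class_name

shaded = shade("com.intellij.util.io.DataExternalizer")
restored = unshade(shaded)
```

Notice that `unshade` checks what follows the prefix before stripping it: a class that genuinely lives under `org.jetbrains.kotlin` has to keep its name, which is part of why this kind of rewrite is easy to get wrong.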

Don’t worry, once we had a working prototype and confirmed everything behaved as expected, we circled back and did it right – fully migrating our toolchain to use the shaded Kotlin compiler. That brought us back in line with the API’s expectations and gave us a more stable setup for the future.

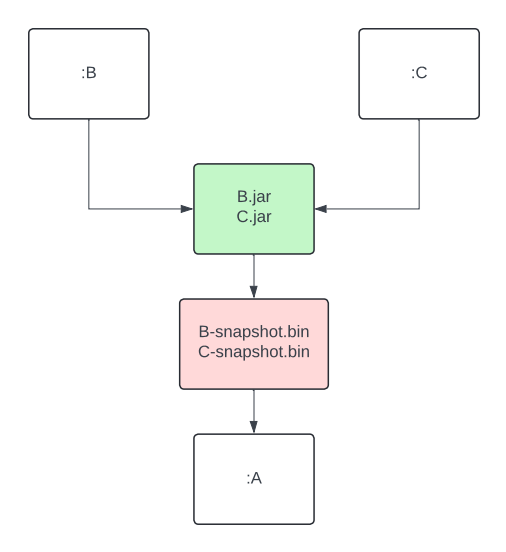

Step 2: Keeping previous output around for the incremental compiler

To compile incrementally, the Kotlin compiler needs access to the output from the previous build. Simple enough, but Buck2 deletes that output by default before rebuilding a module.

With incremental actions, you can configure Buck2 to skip the automatic cleanup of previous outputs. This gives your build actions access to everything from the last run. The tradeoff is that it’s now up to you to figure out what’s still useful and manually clean up the rest. It’s a bit more work, but it’s exactly what we needed to make incremental compilation possible.

Step 3: Making the incremental compiler cache relocatable

At first, this might not seem like a big deal. You’re not planning to move your codebase around, so why worry about making the cache relocatable, right?

Well… that’s until you realize you’re no longer in a tiny team, and you’re definitely not the only one building the project. Suddenly, it does matter.

Buck2 supports distributed builds, which means your builds don’t have to run only on your local machine. They can be executed elsewhere, with the results sent back to you. And if your compiler cache isn’t relocatable, this setup can quickly lead to trouble – from conflicting overloads to strange ambiguity errors caused by mismatched paths in cached data.

So we made sure to configure the root project directory and the build directory explicitly in the incremental compilation settings. This keeps the compiler cache stable and reliable, no matter who runs the build or where it happens.
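Why do explicit roots help? Here’s a minimal Python sketch of the general idea: if cached data refers to files relative to a known root, the same entry matches on any machine. The function name and paths are made up for illustration, and this is not the Kotlin compiler’s actual cache format.

```python
from pathlib import PurePosixPath

def relocatable_key(absolute_path, project_root):
    """Express a path relative to the project root so it is machine-independent."""
    return PurePosixPath(absolute_path).relative_to(project_root).as_posix()

# Hypothetical checkouts of the same repository on two machines.
local_key = relocatable_key("/home/alice/repo/app/Main.kt", "/home/alice/repo")
remote_key = relocatable_key("/data/ci/checkout/app/Main.kt", "/data/ci/checkout")
```

Both calls produce the same key, so a cache written by a remote worker still lines up with the files on your laptop.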

Step 4: Configuring the incremental compiler

In a nutshell, to decide what needs to be recompiled, the Kotlin incremental compiler looks for changes in two places:

- Files within the module being rebuilt.

- The module’s dependencies.

Once the changes are found, the compiler figures out which files in the module are affected – whether by direct edits or through updated dependencies – and recompiles only those.

To get this process rolling, the compiler needs just a little nudge to understand how much work it really has to do.

So let’s give it that nudge!

Tracking changes inside the module

When it comes to tracking changes, you’ve got two options: You can either let the compiler do its magic and detect changes automatically, or you can give it a hand by passing a list of modified files yourself. The first option is great if you don’t know which files have changed or if you just want to get something working quickly (like we did during prototyping). However, if you’re on a Kotlin version earlier than 2.1.20, you have to provide this information yourself. Automatic source change detection via the Build Tools API isn’t available prior to that. Even with newer versions, if the build tool already has the change list before compilation, it’s still worth using it to optimize the process.

This is where Buck2’s incremental actions come in handy again! Not only can we preserve the output from the previous run, but we also get hash digests for every action input. By comparing those hashes with the ones from the last build, we can generate a list of changed files. From there, we pass that list to the compiler to kick off incremental compilation right away – no need for the compiler to do any change detection on its own.
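The digest comparison itself is simple. Here’s a minimal Python sketch, assuming the build tool hands us a path-to-digest mapping for the previous and current runs; the function name and the data shape are assumptions for this example, not Buck2’s actual API.

```python
def changed_files(previous_digests, current_digests):
    """Compare two path-to-digest maps and return (modified_or_added, removed)."""
    modified = sorted(
        path
        for path, digest in current_digests.items()
        if previous_digests.get(path) != digest  # new file or new content
    )
    removed = sorted(
        path for path in previous_digests if path not in current_digests
    )
    return modified, removed

# Hypothetical digests from two consecutive builds.
previous = {"A.kt": "1f", "B.kt": "2a", "C.kt": "3c"}
current = {"A.kt": "1f", "B.kt": "9e", "D.kt": "4b"}
modified, removed = changed_files(previous, current)
```

Added and edited files end up in one list and deleted files in another, since a compiler typically needs to know about both.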

Tracking changes in dependencies

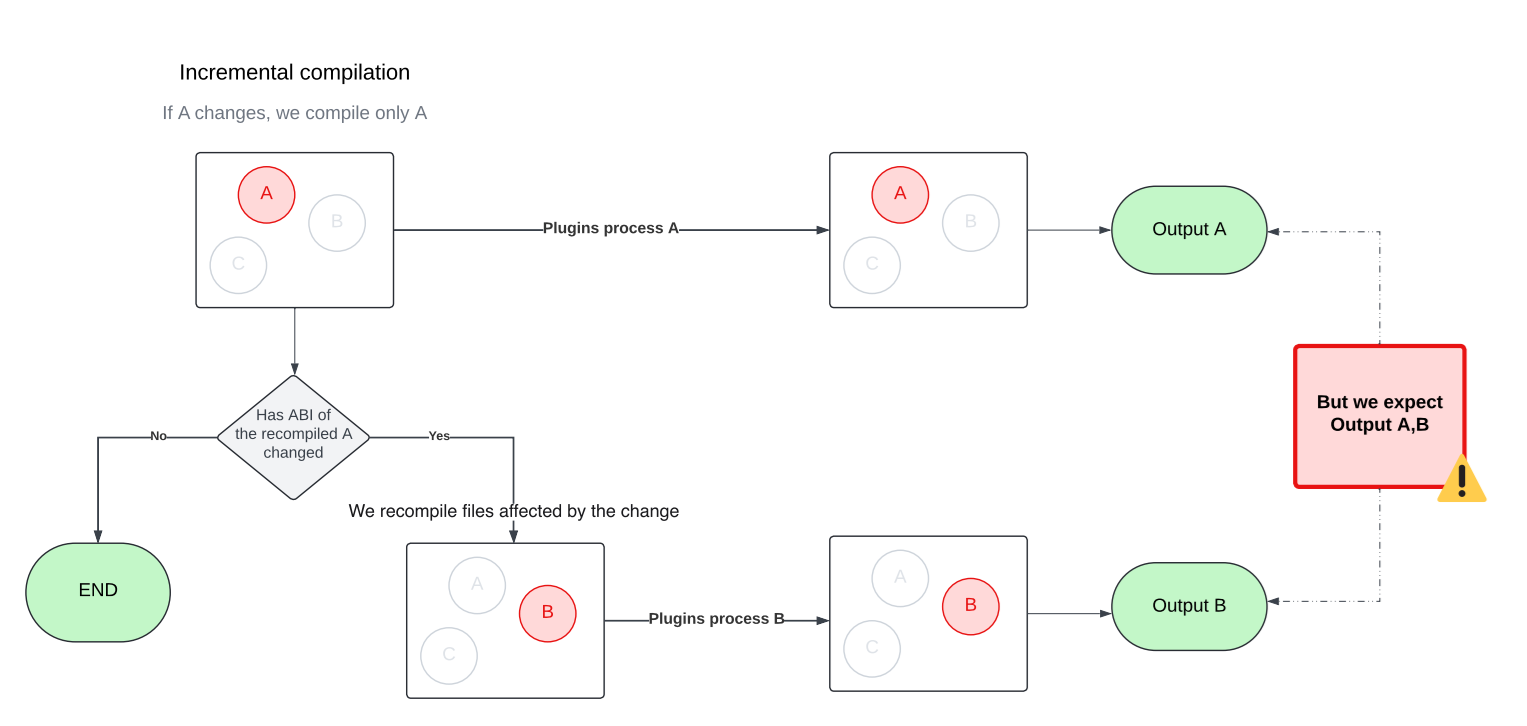

Sometimes it’s not the module itself that changes, it’s something the module depends on. In these cases, the compiler relies on classpath snapshots. These snapshots capture the Application Binary Interface (ABI) of a library. By comparing the current snapshots to the previous ones, the compiler can detect changes in dependencies and figure out which files in your module are affected. This adds an extra layer of filtering on top of standard compilation avoidance.
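The reason an ABI snapshot is more useful than a hash of the whole JAR can be shown with a toy fingerprint. In this Python sketch, a library is reduced to a list of public signature strings, which is a big simplification and not the real snapshot format.

```python
import hashlib

def abi_fingerprint(public_signatures):
    """Hash only public signatures, so body-only edits leave the result unchanged."""
    digest = hashlib.sha256()
    for signature in sorted(public_signatures):  # order-independent
        digest.update(signature.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()

# Hypothetical library API before and after two kinds of edits.
before = abi_fingerprint(["fun a(): Int", "fun b(): String"])
body_edit = abi_fingerprint(["fun b(): String", "fun a(): Int"])   # same API
api_edit = abi_fingerprint(["fun a(): Long", "fun b(): String"])   # return type changed
```

Only the API edit changes the fingerprint, so only that kind of change would force dependents to take another look.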

In Buck2, we added a dedicated action to generate classpath snapshots from library outputs. This artifact is then passed as an input to the consuming module, right alongside the library’s compiled output. The best part? Since it’s a separate action, it can be run remotely or be pulled from cache, so your machine doesn’t have to do the heavy lifting of extracting ABI at this step.

If only your module changes and its dependencies do not, the API also lets you skip the snapshot comparison entirely, provided your build tool handles the dependency analysis on its own. Since we already had the necessary data from Buck2’s incremental actions, adding this optimization was almost free.

Step 5: Making compiler plugins work with the incremental compiler

One of the biggest challenges we faced when integrating the incremental compiler was making it play nicely with our custom compiler plugins, many of which are important to our build optimization strategy. This step was necessary for unlocking the full performance benefits of incremental compilation, but it came with two major issues we needed to solve.

🚨 Problem 1: Incomplete results

As we already know, the input to the incremental compiler does not have to include all Kotlin source files. Our plugins weren’t designed for this and ended up producing incomplete results when run on just a subset of files. We had to make them incremental as well so they could handle partial inputs correctly.

🚨 Problem 2: Multiple rounds of compilation

The Kotlin incremental compiler doesn’t just recompile the files that changed in a module. It may also need to recompile other files in the same module that are affected by those changes. Figuring out the exact set of affected files is tricky, especially when circular dependencies come into play. To handle this, the incremental compiler approximates the affected set by compiling in multiple rounds within a single build.

💡Curious how that works under the hood? The Kotlin blog on fast compilation has a great deep dive that’s worth checking out.

This behavior comes with a side effect, though. Since the compiler may run in multiple rounds with different sets of files, compiler plugins can also be triggered multiple times, each time with a different input. That can be problematic, as later plugin runs may override outputs produced by earlier ones. To avoid this, we updated our plugins to accumulate their results across rounds rather than replacing them.
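Here’s a minimal Python sketch of the "accumulate, don’t replace" idea, assuming a plugin whose output can be keyed by source file. The class and its methods are hypothetical and only illustrate the pattern; our real plugins are more involved.

```python
class AccumulatingPluginOutput:
    """Collects plugin results across compilation rounds, keyed by source file."""

    def __init__(self):
        self._by_source = {}

    def record_round(self, results_by_source):
        # Merge instead of overwrite: files untouched in this round keep
        # their earlier results, re-processed files replace only their own.
        self._by_source.update(results_by_source)

    def final_output(self):
        return dict(sorted(self._by_source.items()))

output = AccumulatingPluginOutput()
output.record_round({"A.kt": ["bindingA"]})                        # round 1
output.record_round({"B.kt": ["bindingB"]})                        # round 2
output.record_round({"A.kt": ["bindingA2"]})                       # A.kt recompiled
result = output.final_output()
```

A plugin that wrote a fresh output file each round would end up with only the last round’s data. Keying by source file keeps everything and still lets a recompiled file refresh its own entry.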

Step 6: Verifying the functionality of annotation processors

Most of our annotation processors use Kotlin Symbol Processing (KSP2), which made this step pretty smooth. KSP2 is designed as a standalone tool that uses the Kotlin Analysis API to analyze source code. Unlike compiler plugins, it runs independently from the standard compilation flow. Thanks to this setup, we were able to continue using KSP2 without any changes.

💡 Bonus: KSP2 comes with its own built-in incremental processing support. It’s fully self-contained and doesn’t depend on the incremental compiler at all.

Before we adopted KSP2 (or when we were using an older version of the Kotlin Annotation Processing Tool (KAPT), which operates as a plugin), our annotation processors ran in a separate step dedicated solely to annotation processing. That step ran before the main compilation and was always non-incremental.

Step 7: Enabling compilation against ABI

To maximize cache hits, Buck2 builds Android modules against the class ABI instead of the full JAR. For Kotlin targets, we use the jvm-abi-gen compiler plugin to generate class ABI during compilation.

But once we turned on incremental compilation, a couple of new challenges popped up:

- The jvm-abi-gen plugin currently lacks direct support for incremental compilation, which ties back to the issues we mentioned earlier with compiler plugins.

- ABI extraction now happens twice – once during compilation via jvm-abi-gen, and again when the incremental compiler creates classpath snapshots.

In theory, both problems could be solved by switching to full JAR compilation and relying on classpath snapshots to maintain cache hits. But that would mean giving up some of the build optimizations we already have in place, a trade-off that needs careful evaluation before we make any changes.

For now, we’ve implemented a custom (yet suboptimal) solution that merges the newly generated ABI with the previous result. It gets the job done, but we’re still actively exploring better long-term alternatives.
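The merge can be pictured roughly as follows. This is a minimal sketch, not our implementation: `mergeAbi`, its parameters, and the representation of ABI entries as a map from class name to content are all assumptions made for illustration.

```kotlin
// Hypothetical sketch: names and data shapes are assumptions, not Buck2 code.
// previousAbi:    class name -> ABI content from the previous build
// regenerated:    ABI entries produced for the classes recompiled in this build
// deletedClasses: classes whose sources no longer exist
fun mergeAbi(
    previousAbi: Map<String, String>,
    regenerated: Map<String, String>,
    deletedClasses: Set<String>,
): Map<String, String> =
    // Drop stale entries first, then let freshly generated entries win.
    (previousAbi - deletedClasses) + regenerated
```

Classes that were not recompiled keep their previous ABI entries, recompiled classes take the new ones, and deleted classes disappear from the result.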

Ideally, we’d be able to reuse the information already collected for the classpath snapshots or, even better, have this kind of support built directly into the Kotlin compiler. There’s an open ticket for that: KT-62881. Fingers crossed!

Step 8: Testing

Measuring the impact of build changes is not an easy task. Benchmarking is great for getting a sense of a feature’s potential, but it doesn’t always reflect how things perform in “the real world.” Pre/post testing can help with that, but it’s tough to isolate the impact of a single change, especially when you’re not the only one pushing code.

We set up A/B testing to overcome these obstacles and measure the true impact of the Kotlin incremental compiler on Meta’s codebase with high confidence. It took a bit of extra work to keep the cache healthy across variants, but it gave us a clean, isolated view of how much difference the incremental compiler really made at scale.

We started with the largest modules – the ones we already knew were slowing builds the most. Given their size and known impact, we expected to see benefits quickly. And sure enough, we did.

The impact of incremental compilation

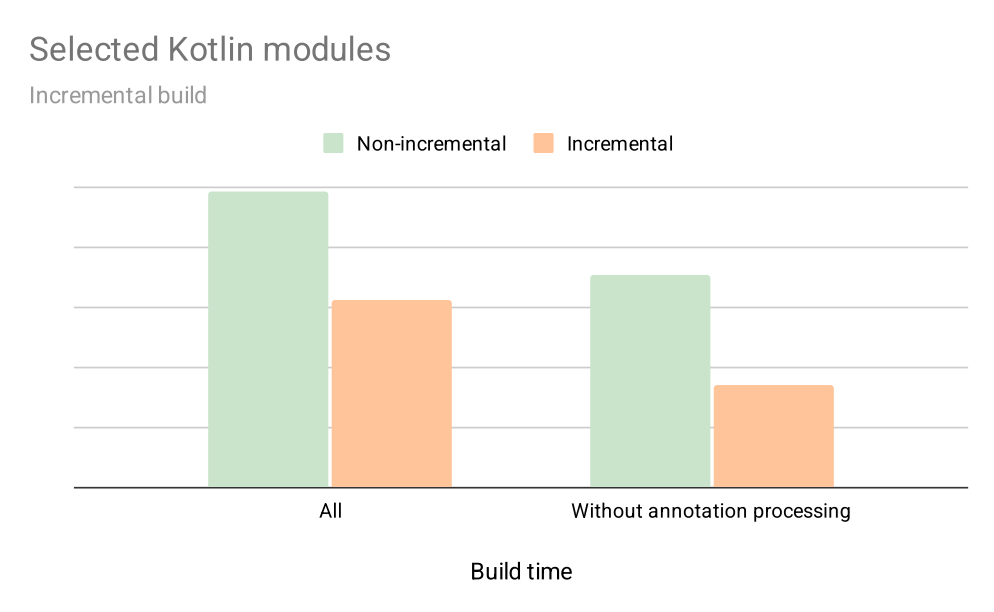

The graph above shows early results on how enabling incremental compilation for selected targets affects their local build times during incremental builds over a 4-week period. This includes not just compilation but also annotation processing and a few other optimizations we’ve added along the way.

With incremental compilation, we’ve seen about a 30% improvement for the average developer. And for modules without annotation processing, the speed nearly doubled. That was more than enough to convince us that the incremental compiler is here to stay.

What’s next

Kotlin incremental compilation is now supported in Buck2, and we’re actively rolling it out across our codebase! For now, it’s available for internal use only, but we’re working on bringing it to the recently introduced open source toolchain as well.

But that’s not all! We’re also exploring ways to expand incrementality across the entire Android toolchain, including tools like Kosabi (the Kotlin counterpart to Jasabi), to deliver even faster build times and even better developer experience.

To learn more about Meta Open Source, visit our open source site, subscribe to our YouTube channel, or follow us on Facebook, Threads, X and LinkedIn.
