Education

Glasgow Caledonian pauses recruitment to BCA-risk courses

- Scottish university writes to agents to inform them of a “pause” on course recruitment, along with the withdrawal of offers and refunds of deposits

- Only courses that don’t meet the new BCA metrics are impacted – but they have not been named yet

- The move comes as British universities audit the risk to their sponsor licences following a compliance crackdown outlined in Keir Starmer’s immigration white paper

Writing to its network of global recruitment partners, Glasgow Caledonian University said it had acted on its own internal analysis of visa refusals, enrolment and course completion rates by pausing recruitment to specific courses that could put its Basic Compliance Assessment (BCA) compliance at risk.

Communications seen by The PIE News quote the vice-chancellor, Stephen Decent, as saying: “In order to ensure that the [university] is able to achieve the more stringent requirements of the white paper, and ensure our ongoing and future compliance with our legal responsibilities as a sponsor of international students, we have taken the decision to implement a number of short-term and temporary changes to international student intake.”

“We have identified a number of courses at risk of non-compliance with the new UKVI metrics – and we have made the decision to pause recruitment to these courses for September 2025,” he continued.

The communication goes on to say that the university will withdraw offers on these courses for September 2025 and refund deposits in time for students to find alternative courses. However, the university has not yet said which courses are affected. It has reached out to all affected students and its overseas partners and stressed that “everyone directly impacted has been fully informed”.

“While Glasgow Caledonian remains highly attractive to students from around the world, and we both welcome and value our international students, we have taken the decision to temporarily pause international student recruitment to a number of postgraduate programmes for the September 2025 intake,” a spokesperson for the university confirmed to The PIE. “This is a proactive and strategic step in light of anticipated changes outlined in the UK government’s immigration white paper.”

While the new BCA metrics, which would tighten the minimum pass requirement for each metric by five percentage points, are not yet being formally enforced, compliance officials told delegates at last month’s UKCISA conference that they fully expected all the policies set out in the white paper to be enacted.

Policy changes such as BCA metric boundaries would not require legislative change and therefore could be introduced and enforced quickly. As a result, many universities have been conducting their own internal audits to see if they would be compliant or not.

“These new thresholds will present a greater challenge for many institutions, including our own, particularly if implemented without transition time or additional support mechanisms. Doing nothing is therefore not an option,” the Glasgow Caledonian University spokesperson said.

They added that the move represented only a “short-term pause”, giving the university the time it needs to “review and, where necessary, adjust” its entry processes for international students so that the institution is in “the strongest position possible” to meet the tightened thresholds.

Universities remain concerned about the proposed timing of the new metrics and their potential impact on smaller institutions. They also want more clarity on how non-completion is recorded, particularly whether a university could be penalised for students who legitimately decide to end their studies and return home.

The immigration white paper outlines more stringent metrics for Basic Compliance Assessment (BCA):

- Reduction of visa refusal rate from 10% to 5%

- Increase of course completion rate from 85% to 90%

- Increase of enrolment rate from 90% to 95%

While Glasgow Caledonian University has not yet revealed which courses will be subject to the recruitment pause, it is well documented that postgraduate programmes such as Master of Research (MRes) courses have been attracting applicants seeking to bring dependants.

The PIE has previously reported on other institutions curtailing MRes recruitment mid-cycle, in a bid to reduce compliance risk.

Speculation has grown that some institutions may be helping students switch to MSc programmes to reduce the numbers enrolled on MRes programmes in recent intakes, although this tactic would not help affected students who need to obtain dependant visas for their loved ones.

The university has explained that the recruitment pause will give it time to carry out the work needed to ensure that all courses comply with the new UKVI metrics.

“This will have a short-term impact, and we are aiming to reopen these courses as quickly as possible, primarily from Trimester B onwards and on the proviso that any reopened activities put us in as strong a position of compliance and success as possible,” the university stated in its letter to stakeholders.

Colleagues from across the sector continue to be concerned about the BCA reforms, calling them “arbitrary”.

Last month, The PIE reported that former Home Secretary Jack Straw had warned that unscrupulous recruitment practices at some mid-ranking UK universities were having real-world consequences for the sector.

Speaking at the Duolingo English Test’s inaugural DETcon London conference, he raised concerns that some universities had “expanded dramatically” in terms of their international intake, and that “the rest of the sector will pay the price” as the government clamps down on compliance.

A largely invisible role of international students: Fueling the innovation economy

PITTSBURGH — Saisri Akondi had already started a company in her native India when she came to Carnegie Mellon University to get a master’s degree in biomedical engineering, business and design.

Before she graduated, she had co-founded another: D.Sole, for which Akondi, who is 28, used the skills she’d learned to create a high-tech insole that can help detect foot complications from diabetes, which results in 6.8 million amputations a year.

D.Sole is among technology companies in Pittsburgh that collectively employ a quarter of the local workforce at wages much higher than those in the city’s traditional steel and other metals industries. That’s according to the business development nonprofit the Pittsburgh Technology Council, which says these companies pay out an annual $27.5 billion in salaries alone.

A “significant portion” of Pittsburgh’s transformation into a tech hub has been driven by international students like Akondi, said Sean Luther, head of InnovatePGH, a coalition of civic groups and government agencies promoting innovation businesses.

“Next Happens Here,” reads the sign above the entrance to the co-working space where Luther works and technology companies are incubated, in an area near Carnegie Mellon and the University of Pittsburgh dubbed the Pittsburgh Innovation District. The neighborhood is filled with people of various ethnicities speaking a variety of languages over lunch and coffee.

What might happen next to the international students and graduates who have helped fuel this tech economy has become an anxiety-inducing subject of those conversations, as the second presidential administration of Donald Trump brings visa crackdowns, funding cuts and other attacks on higher education — including here, in a state that voted for Trump.

Inside the bubble of the universities and the tech sector, “there’s so much support you get,” Akondi observed, in a gleaming conference room at Carnegie Mellon. “But there still is a part of the population that asks, ‘What are you doing here?’ ”

Much of the ongoing conversation about international students has focused on undergraduates and their importance to university revenues and enrollment. But many international students, especially those in graduate school, also fill a less visible role in the economy. They conduct research that can lead to commercial applications, have skills employers need and start a surprising number of their own companies in the United States.

“The high-tech engineering and computer science activities that are central to regional economic development today are hugely dependent on these students,” said Mark Muro, a senior fellow at the Brookings Institution who studies technology and innovation. “If you go into a lab, it will be full of non-American people doing the crucial research work that leads to intellectual property, technology partnerships and startups.”

Some 143 U.S. companies valued at $1 billion or more were started by people who came to the country as international students, according to the National Foundation for American Policy, a nonprofit that conducts research on immigration and trade. These companies have an average of 860 employees each and include SpaceX, founded by Elon Musk, who was born in South Africa and graduated from the University of Pennsylvania.

Whether or not they invent new products or found businesses of their own, international graduates are “a vital source” of workers for U.S.-based tech companies, the National Science Foundation reported last year in an annual survey on the state of American science and engineering.

It’s supply and demand, said Dave Mawhinney, a professor of entrepreneurship at Carnegie Mellon and founding executive director of its Swartz Center for Entrepreneurship, which helps many of that school’s students do research that can lead to products and startups. “And the demand for people with those skills exceeds the supply.”

States with the most international students

California: 140,858

New York: 135,813

Texas: 89,546

Massachusetts: 82,306

Illinois: 62,299

Pennsylvania: 50,514

Florida: 44,767

Source: NAFSA: Association of International Educators. Figures are from the 2023-24 academic year, the most recent available.

That’s in part because comparatively few Americans are going into fields including science, technology, engineering and math. Even before the pandemic disrupted their educations, only 20 percent of college-bound American high school students were prepared for college-level courses in these subjects. U.S. students scored lower in math than their counterparts in 21 of the 37 participating nations of the Organization for Economic Cooperation and Development on an international assessment test in 2022, the most recent year for which the outcomes are available.

One result is that international students make up more than a third of master’s and doctoral degree recipients in science and engineering at American universities. Two-thirds of U.S. university graduate students in AI and AI-related fields, and more than half of workers in those fields, are foreign born, according to Georgetown University’s Center for Security and Emerging Technology.

“A real point of strength, and a reason our robotics companies especially have been able to grow their head counts, is because of those non-native-born workers,” said Luther, in Pittsburgh. “Those companies are here specifically because of that talent.”

International students are more than just contributors to this city’s success in tech. “They have been drivers” of it, Mawhinney said, in his workspace overlooking the studio where the iconic children’s television program “Mister Rogers’ Neighborhood” was taped.

“Every year, 3,000 of the smartest people in the world come here, and a large proportion of those are international,” he said of Carnegie Mellon’s graduate students. “Some of them go into the research laboratories and work on new ideas, and some come having ideas already. You have fantastic students who are here to help you build your company or to be entrepreneurs themselves.”

Boosters of the city’s tech-driven turnaround say what’s been happening in Pittsburgh is largely unappreciated elsewhere. It followed the effective collapse of the steel industry in the 1980s, when unemployment hit 18 percent.

In 2006, Google opened a small office at Carnegie Mellon to take advantage of the faculty and student expertise in computer science and other fields there and at neighboring higher education institutions; the company later moved to a nearby former Nabisco factory and expanded its Pittsburgh workforce to 800 employees. Apple, software and AI giant SAP and other tech firms followed.

“It was the talent that brought them here, and so much of that talent is international,” said Audrey Russo, CEO of the Pittsburgh Technology Council.

Sixty-one percent of the master’s and doctoral students at Carnegie Mellon come from abroad, according to the university. So do 23 percent of those at Pitt, an analysis of federal data shows.

The city has become a world center for self-driving car technology. Uber opened an advanced research center here. The autonomous vehicle company Motional — a joint venture between Hyundai and the auto parts supplier Aptiv — moved in. So did the Ford- and Volkswagen-backed Argo AI, which eventually dissolved, but whose founders went on to create the Pittsburgh-based self-driving truck developer Stack AV. The Ford subsidiary Latitude AI and the autonomous flight company Near Earth Autonomy also are headquartered in Pittsburgh.

Among other tech firms with homes here: Duolingo, which has 830 employees and is worth an estimated $22 billion. It was co-founded by a professor at Carnegie Mellon and a graduate of the university who both came to the United States as international students, from Guatemala and Switzerland, respectively.

InnovatePGH tracks 654 startups that are smaller than those big conglomerates but together employ an estimated 25,000 workers. Unemployment in Pittsburgh (3.5 percent in April) is below the national average (3.9 percent). Now Pitt and others are developing Hazelwood Green, which includes a former steel mill that closed in 1999, into a new district housing life sciences, robotics and other technology companies.

In a series of webinars about starting businesses, offered jointly to students at Pitt and Carnegie Mellon, the most popular installment is about how to found a startup on a student visa, said Rhonda Schuldt, director of Pitt’s Big Idea Center, in a storefront on Forbes Avenue in the Innovation District.

Some international undergraduates continue into graduate school or take jobs with companies that sponsor them so they can keep working on their ideas, Schuldt said.

“They want to stay in Pittsburgh and build businesses here,” she said.

There are clear worries that this momentum could come to a halt if the supply of international students continues a slowdown that began even before the new Trump term, thanks to visa processing delays and competition from other countries.

The number of international graduate students dropped in the fall by 2 percent, before the presidential election, according to the Institute of International Education. Further declines are expected following the government’s pause on student visa interviews, publicity surrounding visa revocations and arrests and cuts to federal research funding.

It’s too early to know what will happen this fall. But D.Sole co-founder Saisri Akondi has heard from friends who planned to come to the United States that they can’t get visas. “Most of these students wanted to start companies,” she said.

“I would be lying if I said nothing has changed,” said Akondi, who has been accepted into a master’s degree program in business administration at the Stanford University Graduate School of Business under her existing student visa, though she said her company will stay in Pittsburgh. “The fear has increased.”

This could affect whether tech companies continue to come to Pittsburgh, said Russo, at least unless and until more Americans are better prepared for and recruited into tech-related graduate programs. That’s something universities have not yet begun to do, since the unanticipated threat to their international students erupted only in March, and that would likely take years.

“Who’s going to do the research? Who’s going to be in these teams?” asked Russo. “We’re hurting ourselves deeply.”

The impact could transcend the research and development ecosystem. “I think we’ll see almost immediate ramifications in Pittsburgh in terms of higher-skilled, higher-wage companies hiring here,” said Sean Luther, at InnovatePGH. “And that affects the grocery shops, the barbershops, the real estate.”

There are other, more nuanced impacts.

“Whether we like it or not, it’s a global world. It’s a global economy. The problems that these students want to solve are global problems,” Schuldt said. “And one of the things that is really important in solving the world’s problems is to have a robust mix of countries, of cultures — that opportunity to learn how others see the world. That is one of the most valuable things students tell us they get here.”

Pittsburgh is a prime example of a place whose economy is vulnerable to a decline in the number of international students, said Brookings’ Muro. But it’s not unique.

“These scholars become entrepreneurs. They’re adding to the U.S. economy new ideas and new companies,” he said. Without them, “the economy would be smaller. Research wouldn’t get done. Journal articles wouldn’t be written. Patents wouldn’t be filed. Fewer startups would occur.”

The United States, said Muro, “has cleaned up by being the absolute central place for this. The system has been incredibly beneficial to the United States. The hottest technologies are inordinately reliant on these excellent minds from around the world. And their being here is critical to American leadership.”

Contact writer Jon Marcus at 212-678-7556, jmarcus@hechingerreport.org or jpm.82 on Signal.

This story about international students was produced by The Hechinger Report, a nonprofit, independent news organization focused on inequality and innovation in education.

Education

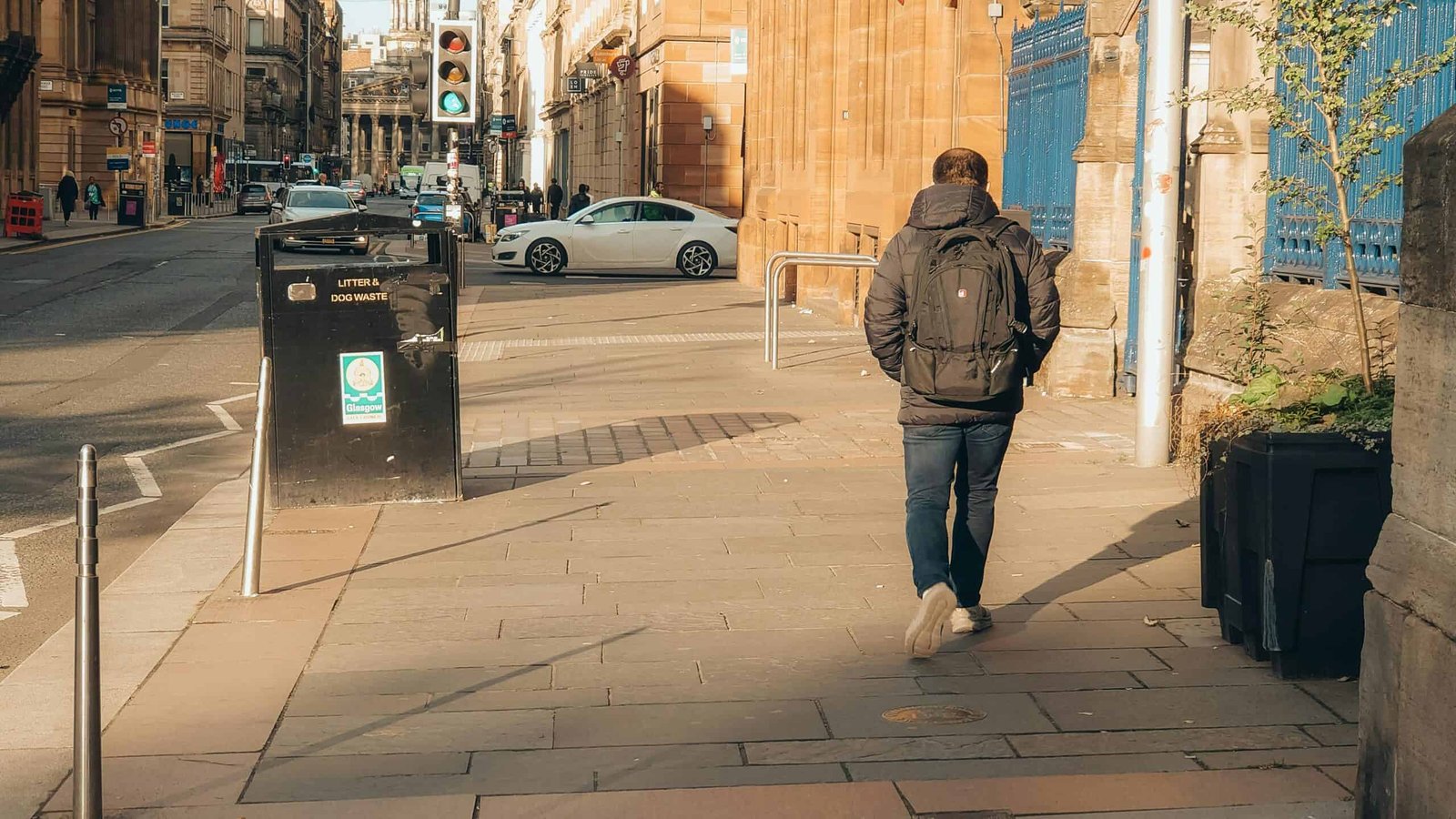

First week ‘critical’ to avoid children missing school later, parents told

Hazel Shearing, Education correspondent

Pupils in England who missed school during the first week back in September 2024 were more likely to miss large parts of the rest of the year, figures suggest.

More than half (57%) of pupils who were partially absent in week one became “persistently absent” – missing at least 10% of school, according to government data first seen by the BBC.

By contrast, of pupils who fully attended the first week, 14% became persistently absent.

Education Secretary Bridget Phillipson said schools and parents should “double down” to get children in at the start of the 2025 term, which is this week for most English schools.

A head teachers’ union said more support was needed “outside of the school gates” to boost attendance.

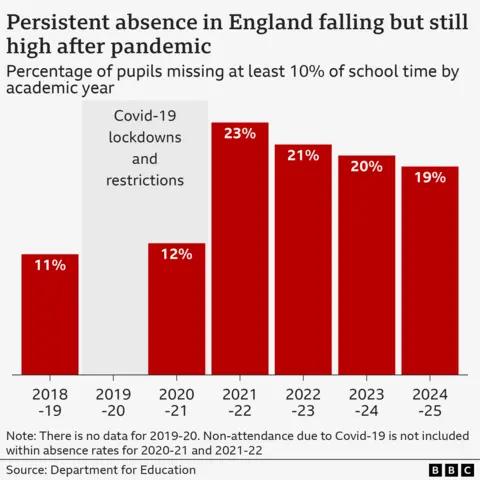

Schools have always grappled with attendance issues, but these became much worse after the pandemic in 2020, when schools closed to most pupils during national lockdowns.

Attendance has improved since, but it remains a bigger problem than before Covid.

Overall, about 18% of pupils were persistently absent in the 2024-25 school year, down from a peak of 23% in 2021-22 but higher than the pre-Covid levels of about 11%.

The Department for Education (DfE) said the data from the first week of the 2024-25 school year showed the start of term was “critical” for tackling persistent absence.

Karl Stewart, head teacher at Shaftesbury Junior School in Leicester, said his school’s attendance rates were higher than average but there was a “definite dip” in the two years after Covid.

“I get why. Some of that wasn’t necessarily parents not wanting to send them in. It was because either they had got Covid or other things, they were saying, ‘We’ll just keep them off now to be sure’,” he said.

The school has incentives like awards and class competitions to keep absence rates down, and Mr Stewart said attendance had more or less returned to pre-Covid levels.

“When we have the children in every day the results are just better,” he said.

“If you’re here, that gives you more time for your teacher to notice you, for us to see all that good behaviour [and] that really hard work – and that’s what we want.”

But, as at many schools, he said some parents still took their children on unauthorised term-time holidays to take advantage of cheaper prices.

Others, he said, have taken children for medical treatments overseas to avoid NHS waiting lists.

The education secretary said that while attendance improved last year, absence levels “remain critically high, putting at risk the life chances of a whole generation of young people”.

“Every day of school missed is a day stolen from a child’s future,” Phillipson said.

“As the new term kicks off, we need schools and parents to double down on the energy, the drive and the relentlessness that’s already boosted the life chances of millions of children, to do the same for millions more.”

The DfE said 800 schools were set to be supported by regional school improvement teams – through attendance and behaviour hubs.

These hubs are made up of 90 exemplary schools which will offer support to improve struggling schools through training sessions, events and open days.

It said it had appointed the first 21 schools that will lead the programme.

However, Pepe Di’Iasio, general secretary of the Association of School and College Leaders, said attendance hubs were not a “silver bullet” and a more “strategic approach” was needed.

“I think the government has worked really hard to improve attendance and it continues to be a priority for them, but there’s certainly more to do,” he told the BBC.

“So many of the challenges that [school leaders] are facing come from beyond the school gates – children suffering with high levels of anxiety, issues around mental health.”

He said school leaders wanted quicker access to support for those pupils and specialist staff in schools, but pupils also needed “great role models” in the community through youth clubs and volunteer groups.

The Conservatives said Labour’s Schools Bill had dismantled a “system that has driven up standards for decades”.

Shadow education secretary Laura Trott said: “Behaviour and attendance are two of the biggest challenges facing schools and it’s about time the government acted.”

She added: “There must be clear consequences for poor behaviour not just to protect the pupils trying to learn, but to recognise when mainstream education isn’t the right setting for those causing disruption.”

Additional reporting by Nathan Standley

AI in the classrooms: How Bangladeshi schools are adapting to a new digital era

The recent explosion of artificial intelligence (AI) has spread across numerous industries, turning AI from a futuristic concept into an everyday reality. Its impact on schooling, however, has been especially striking.

From helping students complete assignments to reshaping the way teachers think about homework and exams, AI is beginning to redefine education all over the world.

Bangladesh is no different.

Artificial Intelligence isn’t just coming to Bangladeshi classrooms—it’s already here. While its promise of convenience and quick solutions is quite alluring to students, the ever-growing presence of AI in schools has raised difficult questions: is learning actually taking place anymore or is it being replaced by answers generated not from thought, but from machines?

In schools across Bangladesh, AI tools like ChatGPT have quietly revolutionised how students complete their homework, how teachers prepare lessons, and how institutions rethink education altogether.

Is it a blessing or a bane?

Students have quickly adapted to using advanced AI chatbots like ChatGPT, making AI an unavoidable and integral part of academic life. From essays to homework, students are increasingly finding ways to rely on AI not just to work faster, but to sidestep studying altogether.

Many schools and educators have now been forced to accept that resisting AI is no longer an option. Schools must adapt to the new reality or risk becoming redundant.

Yafa Rahman, Vice Principal and Senior Business Studies Teacher of Adroit International School, told The Business Standard, “Talks about integrating AI in the school curriculum is a global concern, and my school has had meetings with Pearson Education on how to do that in the best possible manner as well as train teachers to use AI in a beneficial way while being able to spot unethical AI use. This is an ongoing discussion, and we will see many changes soon.”

Yafa explained that her school also employs AI tools to structure assignments and class content. Rather than banning AI altogether, she believes in channelling students’ fascination with technology into meaningful learning. “Students rely on technology so much that if we incorporate any technology into the learning process, students instantly become more interested,” she said.

Rethinking the curriculum

The convenience of AI comes with a heavy cost. Teachers are reporting a surge in AI-generated assignments. Entire essays, reports, and even personal reflections are being turned in with no human touch. And it’s getting harder to spot the difference.

Educators have responded by rethinking the very structure of education in the country. Oral assessments, in-class essays, and presentations have become increasingly common, as schools seek to test students’ independent thinking rather than their ability to reproduce AI-generated answers.

“For assignments meant to show knowledge and understanding, I’ve returned to using pencil and paper to prevent AI use. For reflective assignments, I encourage students to use AI but remind them to think critically. You do not always have to agree with what AI generated, and key facts and figures must be checked with reliable sources,” said Olivier Gautheron, a Science Teacher at International School Dhaka (ISD) who has earned the “AI Essentials for Educators” certification from Edtech Teachers in the US.

This hybrid approach reflects a wider consensus among educators that AI should not be ignored but incorporated responsibly, encouraging students to refine their critical faculties alongside their digital literacy.

It’s no longer just about stopping AI from being used. It’s about guiding how it’s used.

AI detection

Detecting AI-generated work isn’t straightforward. In universities, plagiarism software and AI detectors are standard. But in schools, teachers often rely on their personal knowledge of each student’s writing style and capability, using their instincts to identify when a student’s writing does not look like their own.

But Gautheron warns against over-reliance on intuition, preferring restraint over wrongful accusations.

“I believe it all comes down to knowing your students and their abilities,” he said. “There’s a high chance of mistakenly identifying student work as AI-generated when it’s not.”

He recalled an incident when he suspected a student of using AI, only to learn that the child had simply used software to improve grammar without altering the ideas. “This is perfectly acceptable, as the purpose of the assignment was for students to generate their own ideas,” he added.

He believes the solution lies not in advanced software but in dialogue. “Although software exists to detect AI, there are other softwares to make them undetectable. I believe that the best way to detect inappropriate use of AI is asking your students directly. If I feel that a student’s work quality is very different from previous tasks, simply asking them to clarify a few ideas of their work is enough.”

For resource-constrained schools, this approach is also pragmatic, since not every institution can afford detection software.

AI for teachers

Like students, teachers are increasingly turning to AI for lesson planning and content creation.

Emran Taher, Cambridge examiner and senior English instructor at Mastermind School, sees AI as a game-changer.

“It is not just the students who use AI. Teachers and schools are using it too. I can keep my syllabus up-to-date and incorporate more relevant topics and examples instead of just relying on textbooks. This helps grab students’ interest while reducing issues like bunking classes.”

He also uses AI for personalised instruction. By feeding student data—age, class level, strengths, and weaknesses—into AI tools, he receives tailored recommendations that help him address individual needs. “There are no bad students, only bad teachers,” he said.

Striking the right balance

AI’s presence in schools reveals a tension: the same tool that can personalise learning and spark creativity can also be used to bypass real thinking. This balancing act between embracing innovation and preserving the essence of education appears to be the defining challenge of AI use.

However, there is no turning back. AI is already embedded in how schools operate. What matters now is how educators choose to respond. As Bangladeshi schools navigate this shift, teacher training, investment in digital infrastructure, and the development of ethical guidelines will all be crucial.

Some see AI as a threat to academic honesty. Others see it as a catalyst for overdue change in the old, rigid education system. But everyone realises that the role of teachers must evolve to address the new digital landscape.
