AI Research

Genie 2: A large-scale foundation world model

Acknowledgements

Genie 2 was led by Jack Parker-Holder with technical leadership by Stephen Spencer, with key contributions from Philip Ball, Jake Bruce, Vibhavari Dasagi, Kristian Holsheimer, Christos Kaplanis, Alexandre Moufarek, Guy Scully, Jeremy Shar, Jimmy Shi and Jessica Yung, and contributions from Michael Dennis, Sultan Kenjeyev and Shangbang Long. Yusuf Aytar, Jeff Clune, Sander Dieleman, Doug Eck, Shlomi Fruchter, Raia Hadsell, Demis Hassabis, Georg Ostrovski, Pieter-Jan Kindermans, Nicolas Heess, Charles Blundell, Simon Osindero and Rushil Mistry gave advice. Past contributors include Ashley Edwards and Richie Steigerwald.

The Generalist Agents team was led by Vlad Mnih with key contributions from Harris Chan, Maxime Gazeau, Bonnie Li, Fabio Pardo, Luyu Wang and Lei Zhang.

The SIMA team, with particular support from Frederic Besse, Tim Harley, Anna Mitenkova and Jane Wang.

Tim Rocktäschel, Satinder Singh and Adrian Bolton coordinated, managed and advised the overall project.

We’d also like to thank Zoubin Ghahramani, Andy Brock, Ed Hirst, David Bridson, Zeb Mehring, Cassidy Hardin, Hyunjik Kim, Noah Fiedel, Jeff Stanway, Petko Yotov, Mihai Tiuca, Soheil Hassas Yeganeh, Nehal Mehta, Richard Tucker, Tim Brooks, Alex Cullum, Max Cant, Nik Hemmings, Richard Evans, Valeria Oliveira, Yanko Gitahy Oliveira, Bethanie Brownfield, Charles Gbadamosi, Giles Ruscoe, Guy Simmons, Jony Hudson, Marjorie Limont, Nathaniel Wong, Sarah Chakera and Nick Young.


Trends in patent filing for artificial intelligence-assisted medical technologies | Smart & Biggar

[co-authors: Jessica Lee, Noam Amitay and Sarah McLaughlin]

Medical technologies incorporating artificial intelligence (AI) are an emerging area of innovation with the potential to transform healthcare. Employing techniques such as machine learning, deep learning and natural language processing,[1] AI enables machine-based systems that can make predictions, recommendations or decisions influencing real or virtual environments for a given set of objectives.[2] For example, AI-based medical systems can collect and analyze medical data, assist in medical treatment, or provide informed recommendations or decisions.[3] According to the U.S. Food and Drug Administration (FDA), key areas in which AI is applied in medical devices include:[4]

- Image acquisition and processing

- Diagnosis, prognosis, and risk assessment

- Early disease detection

- Identification of new patterns in human physiology and disease progression

- Development of personalized diagnostics

- Therapeutic treatment response monitoring

Patent filing data related to these application areas can help us see emerging trends.

Table of contents

Analysis strategy

We identified nine subcategories of interest:

- Image acquisition and processing

  - Medical image acquisition

  - Pre-processing of medical imaging

  - Pattern recognition and classification for image-based diagnosis

- Diagnosis, prognosis and risk management

  - Early disease detection

  - Identification of new patterns in physiology and disease

  - Development of personalized diagnostics and medicine

- Therapeutic treatment response monitoring

- Clinical workflow management

- Surgical planning/implants

We searched patent filings in each subcategory from 2001 to 2023. In the results below, the number of patent filings is based on patent families, each patent family being a collection of patent documents that cover the same technology and share at least one priority document.[5]
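Counting families rather than individual documents keeps one invention filed in several patent offices from being counted several times. The sketch below is a simplification that assumes each document is represented only by its set of priority numbers (the function name and the document and priority IDs are hypothetical). It groups documents with a union-find structure: documents sharing a priority are linked, and links are followed transitively, roughly in the spirit of the INPADOC extended family in footnote 5. The actual INPADOC grouping rules are more detailed than this.

```python
def group_families(priorities: dict[str, set[str]]) -> list[set[str]]:
    """Group patent documents into families via shared priority numbers.

    Documents sharing at least one priority are merged, and merges are
    transitive (A-B share P1, B-C share P2, so A, B, C form one family).
    """
    parent = {doc: doc for doc in priorities}

    def find(doc: str) -> str:
        # Walk up to the root, halving the path along the way.
        while parent[doc] != doc:
            parent[doc] = parent[parent[doc]]
            doc = parent[doc]
        return doc

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Link every document to the first document seen with the same priority.
    first_with_priority: dict[str, str] = {}
    for doc, prios in priorities.items():
        for prio in prios:
            if prio in first_with_priority:
                union(first_with_priority[prio], doc)
            else:
                first_with_priority[prio] = doc

    families: dict[str, set[str]] = {}
    for doc in priorities:
        families.setdefault(find(doc), set()).add(doc)
    return list(families.values())


# Hypothetical documents: A and B share P1, B and C share P2, D stands alone.
docs = {"A": {"P1"}, "B": {"P1", "P2"}, "C": {"P2"}, "D": {"P3"}}
print(len(group_families(docs)))  # 2 families
```

Four documents collapse into two families here, which is why family counts are typically lower than raw document counts.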

What has been filed over the years?

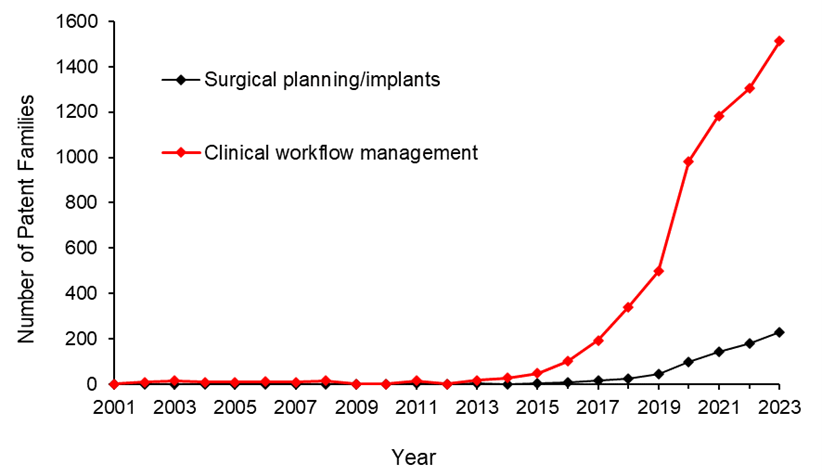

The number of patents filed in each subcategory of AI-assisted applications for medical technologies from 2001 to 2023 is shown below.

We see that patenting activity is concentrated in treatment response monitoring, identification of new patterns in physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis, and development of personalized diagnostics and medicine. This suggests that research and development efforts are focused on these areas.

What do the annual numbers tell us?

Let’s look at the annual number of patent filings for the categories and subcategories listed above. The following four graphs show the global patent filing trends over time for the categories of AI-assisted medical technologies related to: image acquisition and processing; diagnosis, prognosis and risk management; treatment response monitoring; and workflow management.

When looking at the patent filings on an annual basis, the numbers confirm the expected significant uptick in patenting activities in recent years for all categories searched. They also show that, within the four categories, the subcategories showing the fastest rate of growth were: pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management.
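The article does not state which growth measure it used to rank the subcategories. One common choice is the compound annual growth rate (CAGR) of annual family counts; the minimal sketch below assumes that measure, and the function names and example counts are hypothetical placeholders, not the article's data.

```python
def cagr(first: float, last: float, years: int) -> float:
    """Compound annual growth rate between two annual filing counts."""
    if first <= 0 or years <= 0:
        raise ValueError("need a positive starting count and a positive span of years")
    return (last / first) ** (1.0 / years) - 1.0


def rank_by_growth(counts: dict[str, dict[int, int]], start: int, end: int) -> list[tuple[str, float]]:
    """Rank subcategories by CAGR of annual patent-family counts, fastest first."""
    rates = {name: cagr(series[start], series[end], end - start)
             for name, series in counts.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Placeholder counts for illustration only; not the article's data.
example = {"subcategory_a": {2018: 10, 2022: 80}, "subcategory_b": {2018: 10, 2022: 20}}
print(rank_by_growth(example, 2018, 2022)[0][0])  # subcategory_a
```

CAGR compares only the endpoints, so a ranking built this way is sensitive to the start and end years chosen.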

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to image acquisition and processing.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to more accurate diagnosis, prognosis and risk management.

Above: Global patent filing trends over time for AI-assisted medical technologies related to treatment response monitoring.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to workflow management.

Where is R&D happening?

By looking at where the inventors are located, we can see where R&D activities are occurring. We found that the two most frequent inventor locations are the United States (50.3%) and China (26.2%). In the five subcategories with the highest patenting activity from 2001 to 2023, Canada and Australia are both among the ten most frequent inventor locations, ranking seventh and ninth, respectively.

Where are the destination markets?

The filing destinations provide a clue as to the intended markets or locations of commercial partnerships. The United States (30.6%) and China (29.4%) again lead. Canada is the seventh most frequent destination jurisdiction, with 3.2% of patent filings, and Australia is the eighth, with 3.1%.

Takeaways

Our analysis found that the leading subcategories of AI-assisted medical technology patent applications from 2001 to 2023 include treatment response monitoring, identification of new patterns in human physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis as well as development of personalized diagnostics and medicine.

In more recent years, we found the fastest growth in the areas of pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management, suggesting that R&D efforts are being concentrated in these areas.

We saw that patent filings in early disease detection and surgical planning/implants increased later than in the other subcategories, suggesting these may be emerging areas of growth.

As expected, the United States and China are consistently the leading jurisdictions for both inventor location and destination patent office, but Canada and Australia also frequently rank in the top ten.

Patent intelligence provides powerful tools for decision makers looking at what might be shaping our future. With recent geopolitical changes, policy updates in key primary markets and shifts in trade relationships, patent filings give us insight into how these factors affect innovation. For everyone, patent data also offers exciting clues as to which emerging technologies may shape our lives.

References

1. Alowais et al., Revolutionizing healthcare: the role of artificial intelligence in clinical practice (2023), BMC Medical Education, 23:689.

2. U.S. Food and Drug Administration (FDA), Artificial Intelligence and Machine Learning in Software as a Medical Device.

3. Bitkina et al., Application of artificial intelligence in medical technologies: a systematic review of main trends (2023), Digital Health, 9:1-15.

4. U.S. Food and Drug Administration (FDA), Artificial Intelligence Program: Research on AI/ML-Based Medical Devices.

5. INPADOC extended patent family.



Research Reveals Human-AI Learning Parallels

PROVIDENCE, R.I. [Brown University] – New research found similarities in how humans and artificial intelligence integrate two types of learning, offering new insights about how people learn as well as how to develop more intuitive AI tools.

Led by Jake Russin, a postdoctoral research associate in computer science at Brown University, the study trained an AI system and found that its flexible and incremental learning modes interact in a way similar to working memory and long-term memory in humans.

“These results help explain why a human looks like a rule-based learner in some circumstances and an incremental learner in others,” Russin said. “They also suggest something about what the newest AI systems have in common with the human brain.”

Russin holds a joint appointment in the laboratories of Michael Frank, director of the Center for Computational Brain Science at Brown’s Carney Institute for Brain Science, and Ellie Pavlick, an associate professor of computer science who leads the AI Research Institute on Interaction for AI Assistants at Brown. The study was published in the Proceedings of the National Academy of Sciences.

Depending on the task, humans acquire new information in one of two ways. For some tasks, such as learning the rules of tic-tac-toe, “in-context” learning allows people to figure out the rules quickly after a few examples. In other instances, incremental learning builds on information to improve understanding over time – such as the slow, sustained practice involved in learning to play a song on the piano.

While researchers knew that humans and AI integrate both forms of learning, it wasn’t clear how the two learning types work together. Over the course of the research team’s ongoing collaboration, Russin – whose work bridges machine learning and computational neuroscience – developed a theory that the dynamic might be similar to the interplay of human working memory and long-term memory.

To test this theory, Russin used “meta-learning,” a type of training that helps AI systems learn about the act of learning itself, to tease out key properties of the two learning types. The experiments revealed that the AI system’s ability to perform in-context learning emerged after it had meta-learned through multiple examples.

One experiment, adapted from an experiment in humans, tested for in-context learning by challenging the AI to recombine similar ideas to deal with new situations: if taught about a list of colors and a list of animals, could the AI correctly identify a combination of color and animal (e.g., a green giraffe) that it had not seen together before? After the AI meta-learned on 12,000 similar tasks, it gained the ability to successfully identify new combinations of colors and animals.
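The color-and-animal test relies on a compositional split: each color and each animal is seen during training, but certain pairings are saved for testing. The sketch below shows one plausible way to build many such tasks for meta-learning. The vocabularies, function names and held-out count are assumptions for illustration; the study's actual stimuli and training setup are not reproduced here, and no model is trained.

```python
import itertools
import random

# Hypothetical vocabularies; the study's actual stimuli are not reproduced here.
COLORS = ["red", "green", "blue", "yellow"]
ANIMALS = ["giraffe", "zebra", "lion", "panda"]


def compositional_split(colors, animals, n_held_out, seed=0, max_tries=1000):
    """Hold out some color-animal pairs for testing while every individual
    color and animal still appears somewhere in the training pairs."""
    pairs = list(itertools.product(colors, animals))
    if n_held_out > len(pairs) - max(len(colors), len(animals)):
        raise ValueError("too many held-out pairs to cover every word in training")
    rng = random.Random(seed)
    for _ in range(max_tries):
        rng.shuffle(pairs)
        test, train = pairs[:n_held_out], pairs[n_held_out:]
        # Accept only splits where no single color or animal is entirely unseen.
        if {c for c, _ in train} == set(colors) and {a for _, a in train} == set(animals):
            return train, test
    raise RuntimeError("no valid split found; try another seed")


def make_tasks(n_tasks, n_held_out=4):
    """Build many small tasks, each with its own split, as meta-learning episodes."""
    return [compositional_split(COLORS, ANIMALS, n_held_out, seed=i) for i in range(n_tasks)]


tasks = make_tasks(12_000)
```

Because every task holds out different pairings, a learner cannot memorize one fixed answer set and is pushed toward combining familiar parts, which is the skill the experiment probes.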

The results suggest that for both humans and AI, quicker, flexible in-context learning arises after a certain amount of incremental learning has taken place.

“At the first board game, it takes you a while to figure out how to play,” Pavlick said. “By the time you learn your hundredth board game, you can pick up the rules of play quickly, even if you’ve never seen that particular game before.”

The team also found trade-offs, including one between learning retention and flexibility: similar to humans, the harder it is for the AI to correctly complete a task, the more likely it is to remember how to perform it in the future. According to Frank, who has studied this paradox in humans, this is because errors cue the brain to update information stored in long-term memory, whereas error-free actions learned in context increase flexibility but don’t engage long-term memory in the same way.
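Frank's explanation parallels error-driven learning, a standard ingredient of neural-network training in which stored weights change in proportion to the prediction error. The minimal delta-rule sketch below assumes a simple linear predictor (the function and values are illustrative, not the study's model). It shows why an error-free trial leaves the weights, loosely analogous to long-term memory, untouched, while a mistake drives an update.

```python
def delta_rule_update(weights: list[float], inputs: list[float], target: float,
                      lr: float = 0.1) -> tuple[list[float], float]:
    """One error-driven (delta-rule) update for a linear predictor.

    Each weight moves by lr * error * input, so a correct prediction
    (error == 0) leaves the stored weights exactly as they were.
    """
    prediction = sum(w * x for w, x in zip(weights, inputs))
    error = target - prediction
    new_weights = [w + lr * error * x for w, x in zip(weights, inputs)]
    return new_weights, error


weights = [0.5, 0.5]
print(delta_rule_update(weights, [1.0, 1.0], target=1.0))  # correct: weights unchanged
print(delta_rule_update(weights, [1.0, 1.0], target=2.0))  # error of 1.0: weights grow
```

Harder tasks produce more errors and therefore larger weight changes, which is one way to see why difficulty and retention can go together.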

For Frank, who specializes in building biologically inspired computational models to understand human learning and decision-making, the team’s work showed how analyzing strengths and weaknesses of different learning strategies in an artificial neural network can offer new insights about the human brain.

“Our results hold reliably across multiple tasks and bring together disparate aspects of human learning that neuroscientists hadn’t grouped together until now,” Frank said.

The work also suggests important considerations for developing intuitive and trustworthy AI tools, particularly in sensitive domains such as mental health.

“To have helpful and trustworthy AI assistants, human and AI cognition need to be aware of how each works and the extent that they are different and the same,” Pavlick said. “These findings are a great first step.”

The research was supported by the Office of Naval Research and the National Institute of General Medical Sciences Centers of Biomedical Research Excellence.


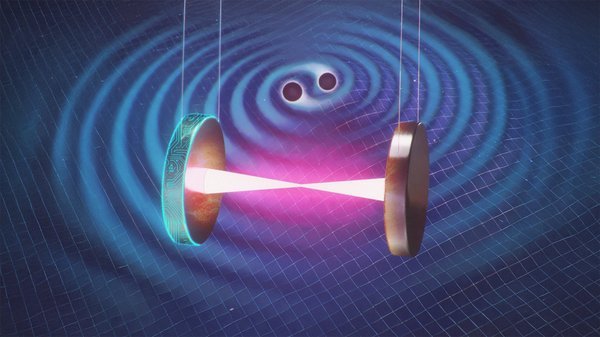

Artificial Intelligence Helps Boost LIGO

The US National Science Foundation LIGO (Laser Interferometer Gravitational-wave Observatory) has been called the most precise ruler in the world for its ability to measure motions smaller than 1/10,000 the width of a proton. By making these extremely precise measurements, LIGO, which consists of two facilities—one in Washington and one in Louisiana—can detect undulations in space-time called gravitational waves that roll outward from colliding cosmic bodies such as black holes.

LIGO ushered in the field of gravitational-wave astronomy beginning in 2015 when it made the first-ever direct detection of these ripples, a discovery that subsequently earned three of its founders the Nobel Prize in Physics in 2017. Improvements to LIGO’s interferometers mean that it now detects an average of about one black hole merger every three days during its current science run. Together with its partners, the Virgo gravitational-wave detector in Italy and KAGRA in Japan, the observatory has in total detected hundreds of black hole merger candidates, in addition to a handful involving at least one neutron star.

Researchers want to further enhance LIGO’s abilities, so that they can detect a larger variety of black-hole mergers, including more massive mergers that might belong to a hypothesized intermediate-mass class bridging the gap between stellar-mass black holes and much larger supermassive black holes residing at the centers of galaxies. They also want to make it easier for LIGO to find black holes with eccentric, or oblong, orbits, as well as catch mergers earlier in the coalescing process, when the dense bodies spiral in toward one another.

To do this, researchers at Caltech and Gran Sasso Science Institute in Italy teamed up with Google DeepMind to develop a new AI method, called Deep Loop Shaping, that can better hush unwanted noise in LIGO’s detectors. The term “noise” can refer to any number of pesky background disturbances that interfere with data collection. The noise can be literal noise, as in sound waves, but in the case of LIGO, the term often refers to a very tiny amount of jiggling in the giant mirrors at the heart of LIGO. Too much jiggling can mask gravitational-wave signals.

Now, reporting in Science, the researchers show that their new AI algorithm, though still a proof-of-concept, quieted motions of the LIGO mirrors by 30 to 100 times more than what is possible using traditional noise-reduction methods alone.

“We were already at the forefront of innovation, making the most precise measurements in the world, but with AI, we can boost LIGO’s performance to detect bigger black holes,” says co-author Rana Adhikari, professor of physics at Caltech. “This technology will help us not only improve LIGO but also build LIGO India and even bigger gravitational-wave detectors.”

The approach could also improve technologies that use control systems. “In the future, Deep Loop Shaping could also be applied to many other engineering problems involving vibration suppression, noise cancellation and highly dynamic or unstable systems important in aerospace, robotics, and structural engineering,” write study co-authors Brendan Tracey and Jonas Buchli, an engineer and scientist, respectively, at Google DeepMind, in a blog post about the study.

The Stillest Mirrors

Both the Louisiana and Washington LIGO facilities are shaped like enormous “L’s,” in which each arm of the L contains a vacuum tube that houses advanced laser technology. Within the 4-kilometer-long tubes, lasers bounce back and forth with the aid of giant 40-kilogram suspended mirrors at each end. As gravitational waves reach Earth from space, they distort space-time in such a way that the length of one arm changes relative to the other by infinitesimally small amounts. LIGO’s laser system detects these minute, subatomic-length changes to the arms, registering gravitational waves.
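For a sense of scale, here is a rough back-of-the-envelope estimate. It assumes a proton width of about 1.7 × 10⁻¹⁵ m (roughly twice the proton's charge radius, a value not given in this article) and combines it with the 1/10,000-of-a-proton motion and 4-kilometer arms mentioned above, giving a fractional change in arm length of order 10⁻²³:

$$
\frac{\Delta L}{L} \approx \frac{(1.7\times10^{-15}\,\mathrm{m})/10^{4}}{4\times10^{3}\,\mathrm{m}} = \frac{1.7\times10^{-19}\,\mathrm{m}}{4\times10^{3}\,\mathrm{m}} \approx 4\times10^{-23}
$$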

But to achieve this level of precision, engineers at LIGO must ensure that background noises are kept at bay. This study looked specifically at unwanted noises, or motions, in LIGO’s mirrors that occur when the mirrors shift in orientation from the desired position by very tiny amounts. Although both of the LIGO facilities are relatively far from the coast, one of the strongest sources of these mirror vibrations is ocean waves.

“It’s as if the LIGO detectors are sitting at the beach,” explains co-author Christopher Wipf, a gravitational-wave interferometer research scientist at Caltech. “Water is sloshing around on Earth, and the ocean waves create these very low-frequency, slow vibrations that both LIGO facilities are severely disturbed by.”

The solution to the problem works much like noise-canceling headphones, Wipf explains. “Imagine you are sitting on the beach with noise-canceling headphones. A microphone picks up the ocean sounds, and then a controller sends a signal to your speaker to counteract the wave noise,” he says. “This is similar to how we control ocean and other seismic ground-shaking noise at LIGO.”

However, as is the case with noise-canceling headphones, there is a price. “If you have ever listened to these headphones in a quiet area, you might hear a faint hiss. The microphone has its own intrinsic noise. This self-inflicted noise is what we want to get rid of in LIGO,” Wipf says.

LIGO already handles the problem extremely well using a traditional feedback control system. The controller senses the rumble in the mirrors caused by seismic noise and then counteracts these vibrations, but in a way that introduces a new higher-frequency quiver in the mirrors—like the hiss in the headphones. The controller senses the hiss too and constantly reacts to both types of disturbances to keep the mirrors as still as possible. This type of system is sometimes compared to a waterbed: Trying to quiet waves at one frequency leads to extra jiggling at another frequency. Controllers can automatically sense the disturbances and stabilize a system.
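The waterbed comparison has a precise counterpart in classical control theory. Writing the controller's effect as a loop gain L(s), the sensitivity S = 1/(1 + L) gives the fraction of a disturbance at each frequency that survives the feedback. For a stable loop gain that rolls off at least as fast as 1/s², Bode's sensitivity integral requires the integral of ln|S(jω)| over all frequencies to be zero, so suppression (|S| < 1) in one band must be paid for with amplification (|S| > 1) in another. The sketch below checks this numerically for an assumed toy loop gain L(s) = 10/(s + 1)²; it is not LIGO's controller, and it does not implement Deep Loop Shaping.

```python
import math


def loop_gain(s: complex, k: float = 10.0) -> complex:
    """Toy open-loop gain L(s) = k / (s + 1)^2 (stable, rolls off as 1/s^2)."""
    return k / (s + 1) ** 2


def sensitivity(omega: float, k: float = 10.0) -> float:
    """|S(j*omega)| with S = 1 / (1 + L); below 1 means the disturbance is suppressed."""
    return abs(1.0 / (1.0 + loop_gain(1j * omega, k)))


def bode_integral(k: float = 10.0, n: int = 200_000) -> float:
    """Integral of ln|S(j*omega)| over omega from 0 to infinity.

    Substitutes omega = tan(theta) to map [0, inf) onto [0, pi/2) and
    applies the midpoint rule.
    """
    h = (math.pi / 2) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        omega = math.tan(theta)
        d_omega = h / math.cos(theta) ** 2  # d(omega) = sec^2(theta) d(theta)
        total += math.log(sensitivity(omega, k)) * d_omega
    return total


print(sensitivity(0.0))  # about 0.09: slow rumble strongly suppressed
print(max(sensitivity(w / 100) for w in range(1, 2000)))  # above 1: amplified near crossover
print(bode_integral())  # about 0: suppression and amplification balance out
```

Pushing the low-frequency suppression harder (a larger k) deepens the dip in |S| but raises the bump elsewhere, which is the waterbed trade-off the controller has to manage.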

Researchers want to further improve the LIGO control system by reducing this controller-induced hiss, which interferes with gravitational-wave signals in the lower-frequency portion of the observatory’s range. LIGO detects gravitational waves with a frequency between 10 and 5,000 Hertz (humans hear sound waves with a frequency between 20 and 20,000 Hertz). The unwanted hiss lies in the range between 10 and 30 Hertz, and this is where more massive black hole mergers would be picked up, as well as where black holes would be caught near the beginning of their final death spirals (for instance, the famous “chirps” heard by LIGO start at lower frequencies and then rise to a higher pitch).

About four years ago, Jan Harms, a former Caltech research assistant professor who is now a professor at Gran Sasso Science Institute, reached out to experts at Google DeepMind to see if they could help develop an AI method to better control vibrations in LIGO’s mirrors. At that point, Adhikari got involved, and the researchers began working with Google DeepMind to try different AI methods. In the end, they used a technique called reinforcement learning, which essentially taught the AI algorithm how to better control the noise.

“This method requires a lot of training,” Adhikari says. “We supplied the training data, and Google DeepMind ran the simulations. Basically, they were running dozens of simulated LIGOs in parallel. You can think of the training as playing a game. You get points for reducing the noise and dinged for increasing it. The successful ‘players’ keep going to try to win the game of LIGO. The result is beautiful—the algorithm works to suppress mirror noise.”
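Adhikari's game analogy can be made concrete with a toy example. The sketch below is not the study's reinforcement-learning setup: it replaces it with random-search hill climbing, a much simpler method, tunes a single hypothetical controller gain instead of a full controller, and uses an assumed one-line "mirror" model (a slow sinusoidal disturbance plus Gaussian sensor noise). The reward is the negative mean-square residual motion, so the "player" earns points for quieter mirrors and is dinged for extra jiggling, averaged over several simulated detectors.

```python
import math
import random


def episode_reward(gain: float, seed: int, steps: int = 500, sensor_sigma: float = 0.5) -> float:
    """Score one simulated run: higher (less negative) means a stiller mirror."""
    rng = random.Random(seed)
    total = 0.0
    for t in range(steps):
        seismic = math.sin(2 * math.pi * 0.01 * t)  # slow, ocean-like disturbance
        measured = seismic + rng.gauss(0.0, sensor_sigma)  # the sensor adds its own noise
        residual = seismic - gain * measured  # controller cancels what it senses
        total += residual ** 2
    return -total / steps  # points for quiet, dinged for jiggling


def train(iterations: int = 150, n_envs: int = 8, step: float = 0.05, seed: int = 0) -> float:
    """Random-search hill climbing over a single controller gain."""
    rng = random.Random(seed)

    def score(gain: float) -> float:
        # Average over several simulated "detectors"; reusing the same seeds
        # compares candidate gains on identical noise.
        return sum(episode_reward(gain, seed=env) for env in range(n_envs)) / n_envs

    gain, best = 0.0, score(0.0)
    for _ in range(iterations):
        candidate = gain + rng.uniform(-step, step)
        candidate_score = score(candidate)
        if candidate_score > best:  # keep only changes that earn more points
            gain, best = candidate, candidate_score
    return gain


print(round(train(), 2))
```

Under these assumptions the best gain is roughly Var(seismic) / (Var(seismic) + sensor_sigma²) = 0.5 / 0.75, about 0.67: turning the gain up further cancels more of the slow rumble but feeds more sensor noise into the mirror, the same trade-off as the hiss in noise-canceling headphones.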

Richard Murray (BS ’85), the Thomas E. and Doris Everhart Professor of Control and Dynamical Systems and Bioengineering at Caltech, explains that without AI, scientists and engineers mathematically model a system they want to control in explicit detail. “But with AI, if you train it on a model of sufficient detail, it can exploit features in the system that you wouldn’t have considered using classical methods,” he says. An expert in control theory for complex systems, Murray (who is not an author on the current study) develops AI tools for certain control systems, such as those used in self-driving vehicles.

“We think this research will inspire more students to want to work at LIGO and be part of this remarkable innovation,” Adhikari says. “We are at the bleeding edge of what’s possible in measuring tiny, quantum distances.”

So far, the new AI method has been tested on LIGO for only an hour to demonstrate that it works. The team is looking forward to conducting longer duration tests and ultimately implementing the method on several LIGO systems. “This is a tool that changes how we think about what ground-based detectors are capable of,” Wipf says. “It makes an incredibly challenging problem less daunting.”

The Science paper titled “Improving cosmological reach of LIGO using Deep Loop Shaping” was supported in part by the National Science Foundation, which funds LIGO.
