AI Insights

FTC investigating AI ‘companion’ chatbots amid growing concern about harm to kids

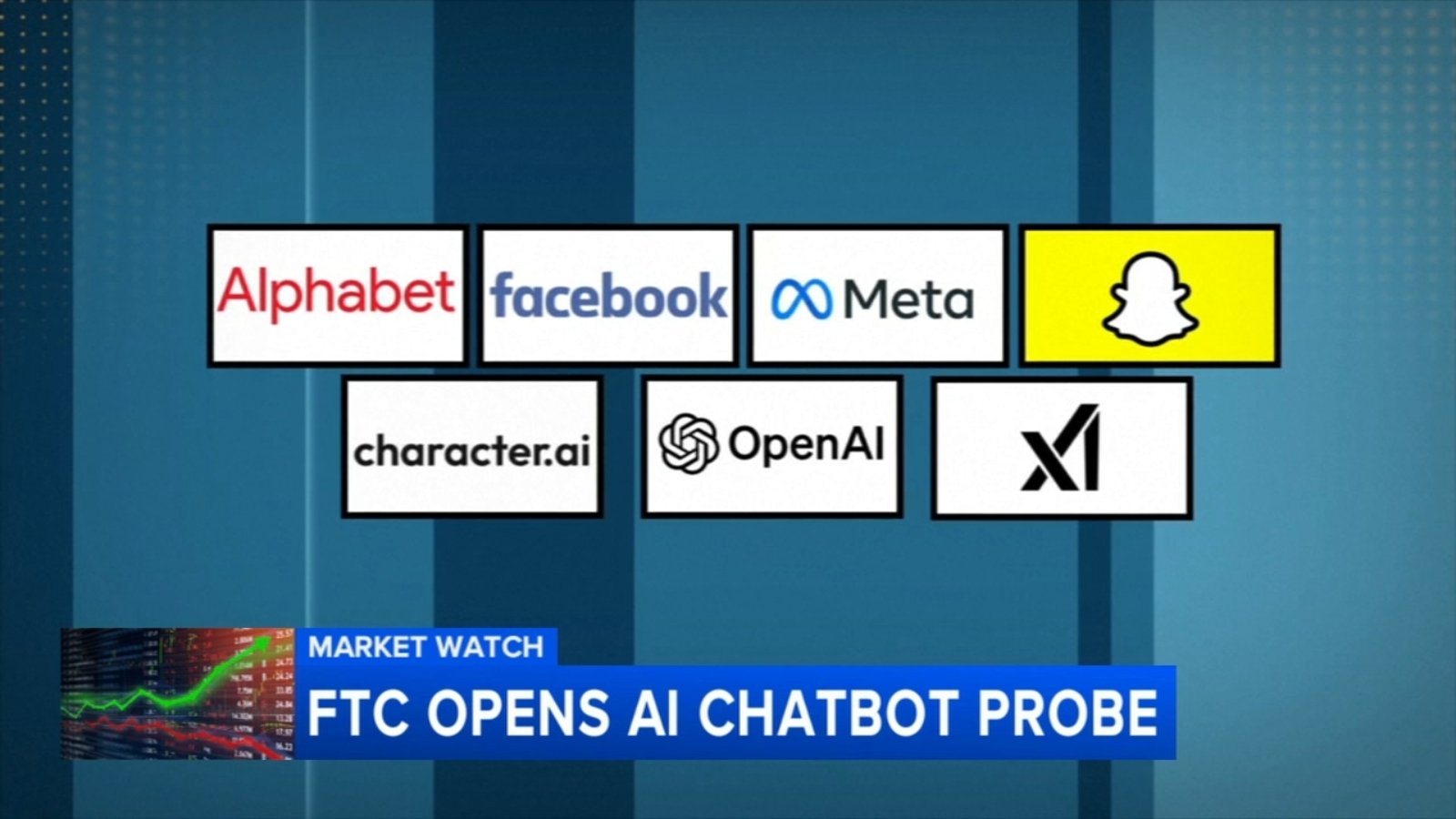

The Federal Trade Commission has launched an investigation into seven tech companies over the potential harms their artificial intelligence chatbots could cause to children and teenagers.

The inquiry focuses on AI chatbots that can serve as companions, which “effectively mimic human characteristics, emotions, and intentions, and generally are designed to communicate like a friend or confidant, which may prompt some users, especially children and teens, to trust and form relationships with chatbots,” the agency said in a statement Thursday.

The FTC sent order letters to Google parent company Alphabet; Character.AI; Instagram and its parent company, Meta; OpenAI; Snap; and Elon Musk’s xAI. The agency wants information about whether and how the firms measure the impact of their chatbots on young users and how they protect against and alert parents to potential risks.

The investigation comes amid rising concern about AI use by children and teens, following a string of lawsuits and reports accusing chatbots of being complicit in the suicide deaths and sexual exploitation of young people, among other harms. Those include one lawsuit against OpenAI and two against Character.AI, all of which remain ongoing even as the companies say they are continuing to build out additional features to protect users from harmful interactions with their bots.

Broader concerns have also surfaced that even adult users are building unhealthy emotional attachments to AI chatbots, in part because the tools are often designed to be agreeable and supportive.

At least one online safety advocacy group, Common Sense Media, has argued that AI “companion” apps pose unacceptable risks to children and should not be available to users under the age of 18. Two California state bills related to AI chatbot safety for minors, including one backed by Common Sense Media, are set to receive final votes this week and, if passed, will reach California Gov. Gavin Newsom’s desk. The US Senate Judiciary Committee is also set to hold a hearing next week entitled “Examining the Harm of AI Chatbots.”

“As AI technologies evolve, it is important to consider the effects chatbots can have on children, while also ensuring that the United States maintains its role as a global leader in this new and exciting industry,” FTC Chairman Andrew Ferguson said in the Thursday statement. “The study we’re launching today will help us better understand how AI firms are developing their products and the steps they are taking to protect children.”

In particular, the FTC’s orders seek information about how the companies monetize user engagement, generate outputs in response to user inquiries, develop and approve AI characters, use or share personal information gained through user conversations and mitigate negative impacts to children, among other details.

Google, Snap and xAI did not immediately respond to requests for comment.

“Our priority is making ChatGPT helpful and safe for everyone, and we know safety matters above all else when young people are involved. We recognize the FTC has open questions and concerns, and we’re committed to engaging constructively and responding to them directly,” OpenAI spokesperson Liz Bourgeois said in a statement. She added that OpenAI has safeguards such as notifications directing users to crisis helplines and plans to roll out parental controls for minor users.

After the parents of 16-year-old Adam Raine sued OpenAI last month alleging that ChatGPT encouraged their son’s death by suicide, the company acknowledged its safeguards may be “less reliable” when users engage in long conversations with the chatbot and said it was working with experts to improve them.

Meta declined to comment directly on the FTC inquiry. The company said it is currently limiting teens’ access to only a select group of its AI characters, such as those that help with homework. It is also training its AI chatbots not to respond to teens’ mentions of sensitive topics such as self-harm or inappropriate romantic conversations and to instead point to expert resources.

“We look forward to collaborating with the FTC on this inquiry and providing insight on the consumer AI industry and the space’s rapidly evolving technology,” Jerry Ruoti, Character.AI’s head of trust and safety, said in a statement. He added that the company has invested in trust and safety resources such as a new under-18 experience on the platform, a parental insights tool and disclaimers reminding users that they are chatting with AI.

If you are experiencing a suicidal, substance use or other mental health crisis, please call or text the new three-digit code, 988. You will reach a trained crisis counselor for free, 24 hours a day, seven days a week. You can also go to 988lifeline.org.

(The-CNN-Wire ™ & © 2025 Cable News Network, Inc., a Time Warner Company. All rights reserved.)

Google’s top AI scientist says ‘learning how to learn’ will be next generation’s most needed skill

ATHENS, Greece — A top Google scientist and 2024 Nobel laureate said Friday that the most important skill for the next generation will be “learning how to learn” to keep pace with change as Artificial Intelligence transforms education and the workplace.

Speaking at an ancient Roman theater at the foot of the Acropolis in Athens, Demis Hassabis, CEO of Google’s DeepMind, said rapid technological change demands a new approach to learning and skill development.

“It’s very hard to predict the future, like 10 years from now, in normal cases. It’s even harder today, given how fast AI is changing, even week by week,” Hassabis told the audience. “The only thing you can say for certain is that huge change is coming.”

The neuroscientist and former chess prodigy said artificial general intelligence — a futuristic vision of machines that are as broadly smart as humans or at least can do many things as well as people can — could arrive within a decade. This, he said, will bring dramatic advances and a possible future of “radical abundance” despite acknowledged risks.

Hassabis emphasized the need for “meta-skills,” such as understanding how to learn and optimizing one’s approach to new subjects, alongside traditional disciplines like math, science and humanities.

“One thing we’ll know for sure is you’re going to have to continually learn … throughout your career,” he said.

The DeepMind co-founder, who established the London-based research lab in 2010 before Google acquired it four years later, shared the 2024 Nobel Prize in chemistry for developing AI systems that accurately predict protein folding — a breakthrough for medicine and drug discovery.

Greece’s Prime Minister Kyriakos Mitsotakis, left, and Demis Hassabis, CEO of Google’s artificial intelligence research company DeepMind, discuss the future of AI, ethics and democracy during an event at the Odeon of Herodes Atticus in Athens, Greece, Friday, Sept. 12, 2025. Credit: AP/Thanassis Stavrakis

Greek Prime Minister Kyriakos Mitsotakis joined Hassabis at the Athens event after discussing ways to expand AI use in government services. Mitsotakis warned that the continued growth of huge tech companies could create great global financial inequality.

“Unless people actually see benefits, personal benefits, to this (AI) revolution, they will tend to become very skeptical,” he said. “And if they see … obscene wealth being created within very few companies, this is a recipe for significant social unrest.”

Mitsotakis thanked Hassabis, whose father is Greek Cypriot, for rescheduling the presentation to avoid conflicting with the European basketball championship semifinal between Greece and Turkey. Greece later lost the game 94-68.


