AI Research

FSU researchers receive $2.3 million National Science Foundation grant to strengthen wildfire management in hurricane-prone areas

Florida State University researchers have received a $2.3 million National Science Foundation (NSF) grant to develop artificial intelligence tools that will help manage wildfires fueled by hurricanes in the Florida Panhandle.

The four-year project will be led by Yushun Dong, an assistant professor of computer science, and is the largest research award ever for FSU’s Department of Computer Science. Dong and his interdisciplinary team will focus on wildfires in the wildland-urban interface, where forests such as the Apalachicola National Forest meet homes, roads and other infrastructure.

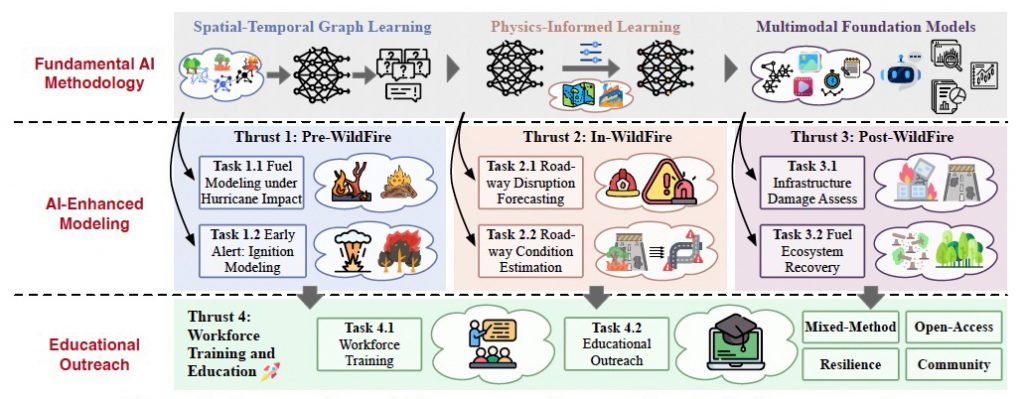

Dong’s project, “FIRE: An Integrated AI System Tackling the Full Lifecycle of Wildfires in Hurricane-Prone Regions,” will bring together computer scientists, fire researchers, engineers and educators to study how hurricanes change wildfire behavior and to build AI systems that can forecast ignition, predict roadway disruptions, and assess potential damage.

“The modern practice of prescribed burns began over 60 years ago, which was a huge leap in working with nature to help manage an ecosystem,” said Dong, who joined FSU’s faculty last year and established the Responsible AI Lab at FSU after earning his doctorate from the University of Virginia. “Now, we’re positioned to make another leap: we’re able to use powerful AI technology to transform wildfire risk management with tools such as ignition forecasting, roadway disruption prediction, condition estimations, damage assessments and more.”

The project is funded as part of an NSF program, Fire Science Innovations through Research and Education, or FIRE, which was established last year and funds research and education enabling large-scale, interdisciplinary breakthroughs that realign our relationship with wildland fire and its connected variables.

Two of the four projects funded so far by the competitive program are led by FSU researchers. Neda Yaghoobian, associate professor in the Department of Mechanical and Aerospace Engineering at the FAMU-FSU College of Engineering, also received funding for a project that will analyze unresolved canopy dynamics contributing to wildfires.

“This grant represents the department’s biggest research award to date and cements our leadership in applying cutting-edge AI to urgent, real-world problems in our region,” said Weikuan Yu, Department of Computer Science chair. “The funding enables the development of a holistic AI platform addressing Florida’s hurricane and wildfire challenges while advancing cutting-edge AI research. Additionally, the grant includes educational and workforce development initiatives in AI and disaster resilience, positioning the department as a leader in training the next generation of scientists working at the intersection of AI and wildfire research.”

WHY IT MATTERS

Fires, especially low-intensity natural wildfires and prescribed burns, can play a vital role in regulating certain forests, grasslands and other fire-adapted ecosystems. They decrease the risk and severity of large, destructive wildfires while supporting soil processes and, in many cases, limiting pest and disease outbreaks.

By clearing fallen leaves that act as hazardous fuel loads, fires lower forest density and recycle nutrients through the ecosystem. But when downed trees pile up, as has happened after recurrent hurricanes in the Florida Panhandle, these fires can exhibit complex dynamics that threaten built infrastructure, including homes and roadways, as well as natural landscapes. Understanding this hurricane-wildfire connection is critical for planning evacuations, protecting roads and safeguarding homes and lives.

“I became passionate about applying my research, which achieves responsible AI that directly contributes to critical AI infrastructures, to hurricane-related phenomena after experiencing my first hurricane living in Tallahassee,” Dong said. “I want to use AI techniques to help Florida Panhandle residents better understand and prepare for extreme events in this ecosystem with its unique hurricane-fire coupling dynamics.”

INTERDISCIPLINARY COLLABORATION

Eren Ozguven, associate professor in the FAMU-FSU College of Engineering Department of Civil and Environmental Engineering and Resilient Infrastructure and Disaster Response Center director, is the co-principal investigator on this project, and additional contributors include James Reynolds, co-director of STEM outreach for FSU’s Learning Systems Institute, and Jie Sun, a postdoctoral researcher in the Department of Earth, Ocean and Atmospheric Science.

“Yushun’s project stands out for its ambition, insight, and integrative approach,” Yu said. “It zeros in on the unique challenges of Florida’s landscape where hurricanes and wildfires intersect in the wildland-urban interface of the Panhandle. By focusing on hurricane-fire coupling dynamics and working closely with local stakeholders, his project ensures that scientific innovation translates into practical, community-centered solutions. His integrated approach brings the benefit of cutting-edge AI advances directly to major real-world applications, creating a wonderful research lifecycle that’s exceptionally rare in our field.”

To learn about research conducted in the Department of Computer Science, visit cs.fsu.edu.

EY-Parthenon practice unveils neurosymbolic AI capabilities to empower businesses to identify, predict and unlock revenue at scale

Jeff Schumacher, architect behind the groundbreaking AI solution, to steer EY Growth Platforms.

Ernst & Young LLP (EY) announced the launch of EY Growth Platforms (EYGP), a disruptive artificial intelligence (AI) solution powered by neurosymbolic AI. By combining machine learning with logical reasoning, EYGP empowers organizations to uncover transformative growth opportunities and revolutionize their commercial models for profitability. The neurosymbolic AI workflows that power EY Growth Platforms consistently uncover growth opportunities worth more than $100 million for global enterprises, with the potential to enhance revenue.

This represents a rapid development in enterprise technology—where generative AI and neurosymbolic AI combine to redefine how businesses create value. This convergence empowers enterprises to reimagine growth at impactful scale, producing outcomes that are traceable, trustworthy and statistically sound.

EYGP serves as a powerful accelerator for the innovative work at EY-Parthenon, helping clients pursue their most complex strategic opportunities, realize greater value and transform their businesses by reimagining them from the ground up, including building and scaling new corporate ventures or executing high-stakes transactions.

“In today’s uncertain economic climate, leading companies aren’t just adapting—they’re taking control,” says Mitch Berlin, EY Americas Vice Chair, EY-Parthenon. “EY Growth Platforms gives our clients the predictive power and actionable foresight they need to confidently steer their revenue trajectory. EY Growth Platforms is a game changer, poised to become the backbone of enterprise growth.”

How EY Growth Platforms work

Neurosymbolic AI merges the statistical power of neural networks with the structured logic of symbolic reasoning, driving powerful pattern recognition to deliver predictions and decisions that are practical, actionable and grounded in real-world outcomes. EYGP harnesses this powerful technology to simulate real-time market scenarios and their potential impact, uncovering the most effective business strategies tailored to each client. It expands beyond the limits of generative AI, becoming a growth operating system for companies to tackle complex go-to-market challenges and unlock scalable revenue.

At the core of EYGP is a unified data and reasoning engine that ingests structured and unstructured data from internal systems, external signals, and deep EY experience and data sets. Developed over three years, this robust solution is already powering proprietary AI applications and intelligent workflows for EY-Parthenon clients across the consumer products, industrials and financial services sectors, without the need for extensive data cleaning or digital transformation.

Use cases for EY Growth Platforms

With the ability to operate in complex, high-stakes scenarios, EYGP is driving measurable impact across industries such as:

- Financial services: In a tightly regulated industry, transparency and accountability are nonnegotiable. Neurosymbolic AI enhances underwriting, claims processing and compliance with transparency and rigor, validating that decisions are aligned with regulatory standards and optimized for customer outcomes.

- Consumer products: Whether powering real-time recommendations, adaptive interfaces or location-aware services, neurosymbolic AI drives hyperpersonalized experiences at a one-to-one level. By combining learned patterns with structured knowledge, it delivers precise, context-rich insights tailored to individual behavior, preferences and environments.

- Industrial products: Neurosymbolic AI helps industrial conglomerates optimize the entire value chain — from sourcing and production to distribution and service. By integrating structured domain knowledge with real-time operational data, it empowers leaders to make smarter decisions — from facility placement and supply routing to workforce allocation tailored to specific geographies and market-specific conditions.

The platform launch follows the appointment of Jeff Schumacher as the EYGP Leader for EY-Parthenon. Schumacher brings over 25 years of experience in business strategy, innovation and digital disruption, having helped establish over 100 early growth companies. He is the founder of Growth Protocol, the neurosymbolic AI company whose technology EY licenses under an exclusive agreement.

“Neurosymbolic AI is not another analytics tool, it’s a growth engine,” says Jeff Schumacher, EY Growth Platforms Leader, EY-Parthenon. “With EY Growth Platforms, we’re putting a dynamic, AI-powered operating system in the hands of leaders, giving them the ability to rewire how their companies make money. This isn’t incremental improvement; it’s a complete reset of the commercial model.”

EYGP is currently offered in North America, Europe, and Australia. For more information, visit ey.com/NeurosymbolicAI/

– ends –

About EY

EY is building a better working world by creating new value for clients, people, society and the planet, while building trust in capital markets.

Enabled by data, AI and advanced technology, EY teams help clients shape the future with confidence and develop answers for the most pressing issues of today and tomorrow.

EY teams work across a full spectrum of services in assurance, consulting, tax, strategy and transactions. Fueled by sector insights, a globally connected, multi-disciplinary network and diverse ecosystem partners, EY teams can provide services in more than 150 countries and territories.

All in to shape the future with confidence.

EY refers to the global organization, and may refer to one or more of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients. Information about how EY collects and uses personal data and a description of the rights individuals have under data protection legislation are available via ey.com/privacy. EY member firms do not practice law where prohibited by local laws. For more information about our organization, please visit ey.com.

This news release has been issued by EYGM Limited, a member of the global EY organization that also does not provide any services to clients.

Qodo Unveils Top Deep Research Agent for Coding, Outperforming Leading AI Labs on Multi-Repository Benchmark

Qodo Aware Deep Research achieves 80% accuracy on new coding benchmark, surpassing OpenAI’s Codex at 74%, Anthropic’s Claude Code at 64%, and Google’s Gemini CLI at 45%

Qodo, the agentic code quality platform, announced Qodo Aware, a new flagship product in its enterprise platform that brings agentic understanding and context engineering to large codebases. It features the industry’s first deep research agent designed specifically for navigating enterprise-scale codebases. In benchmark testing, Qodo Aware’s deep research agent demonstrated superior accuracy and speed compared to leading AI coding agents when answering questions that require context from multiple repositories.

AI has made generating code easy, but ensuring quality at scale is now even harder. Modern software systems span hundreds or thousands of interconnected code repositories, making it nearly impossible for developers to maintain a comprehensive understanding of their organization’s entire codebase. While current AI coding tools excel at single-repository tasks, they cannot traverse the complex web of dependencies and relationships: the 2025 State of AI Code Quality report found that more than 60% of developers say AI coding tools miss relevant context. Qodo Aware addresses this limitation with a context engine that powers deep research agents that can automatically navigate across repository boundaries.

“Developers don’t typically work in isolation, they need to understand how changes in one service affect systems across their entire organization and how those systems evolved to their current state,” said Itamar Friedman, co-founder and CEO of Qodo. “Our deep research agent can analyze impact, dependencies and historical context across thousands of files and hundreds of repositories in seconds, something that could take a principal engineer hours or days to trace manually. This eliminates the traditional speed-quality tradeoff that enterprises face when adopting AI for development, while adding the crucial dimension of understanding not just what the code does, but why it was built that way.”

Qodo Aware features three distinct modes, each powered by specialized agents for different use cases. The Deep Research agent performs comprehensive multi-step analysis across repositories, making it ideal for complex architectural questions and system-wide tasks. For quicker code Q&As, the Ask agent provides rapid responses through agentic context retrieval, and the Issue Finder agent searches across repos for bugs, code duplication, security risks, and other hidden issues. These agents can be used to get direct answers, or integrated into existing coding agents, like Cursor and Claude Code, as a powerful context retrieval layer, enhancing their ability to understand large-scale codebases.

Qodo Aware uses a sophisticated indexing and context retrieval approach that combines Language Server Protocol (LSP) analysis, knowledge graphs, and vector embeddings to create deep semantic understanding of code relationships. For enterprises, this means developers can safely modify complex systems without fear of breaking unknown dependencies, reducing deployment risks and accelerating release cycles. Teams report cutting investigation time for complex issues from days to minutes, even when working across massive, interconnected codebases with more than 100M lines of code.
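The release does not say how Qodo's knowledge graph is built or queried. As a rough illustration of why a graph helps answer cross-repository questions such as "what could break if I change this?", the sketch below runs a breadth-first search over a small dependency graph. The repository names and edges are hypothetical, invented for this example, and this is not Qodo's implementation.

```python
from collections import deque

# Hypothetical edges: each key repository depends on the repositories listed.
DEPENDS_ON: dict[str, list[str]] = {
    "web-frontend": ["api-gateway"],
    "api-gateway": ["auth-service", "billing-service"],
    "billing-service": ["shared-models"],
    "auth-service": ["shared-models"],
    "reporting-job": ["billing-service"],
}


def dependents_graph(depends_on: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert 'A depends on B' edges into 'B is used by A' edges."""
    used_by: dict[str, list[str]] = {}
    for repo, deps in depends_on.items():
        for dep in deps:
            used_by.setdefault(dep, []).append(repo)
    return used_by


def impacted_by(changed: str, depends_on: dict[str, list[str]]) -> list[str]:
    """Breadth-first search for every repository that transitively depends on `changed`."""
    used_by = dependents_graph(depends_on)
    seen = {changed}
    order: list[str] = []
    queue = deque([changed])
    while queue:
        repo = queue.popleft()
        for user in used_by.get(repo, []):
            if user not in seen:  # visit each repository once, even with diamond dependencies
                seen.add(user)
                order.append(user)
                queue.append(user)
    return order


if __name__ == "__main__":
    # A change to the shared data models ripples out through every service above it.
    print(impacted_by("shared-models", DEPENDS_ON))
```

Breadth-first order lists direct dependents before indirect ones, which is a natural ranking for an impact report; a real system would also need per-symbol edges (the kind of information LSP analysis provides) rather than whole-repository edges.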

Along with these capabilities, Qodo is releasing a new multi-repository dataset for evaluating coding deep research agents. The dataset includes real-world questions that require information that spans multiple open source code repositories to correctly answer. On the new DeepCodeBench benchmark, Qodo Aware achieved 80% accuracy, while OpenAI Codex scored 74%, Claude Code reached 64%, and Gemini CLI correctly solved 45%. Importantly, Qodo Aware Deep Research took less than half the time of Codex to answer, enabling faster iteration cycles for developers.

Qodo Aware has been integrated directly into existing Qodo development tools, including the Qodo Gen IDE agent, the Qodo Command CLI agent and the Qodo Merge code review agent, bringing context to workflows across the entire software development lifecycle. It is also available as a standalone product accessible via Model Context Protocol (MCP) and API, enabling integration with any AI assistant or coding agent. Qodo Aware can be deployed within enterprise single-tenant environments, ensuring code never leaves organizational boundaries, while maintaining the governance and compliance standards enterprises require. It supports GitHub, GitLab, and Bitbucket, with all indexing and processing occurring within customer-controlled infrastructure.

Self-Assembly Gets Automated in Reverse of ‘Game of Life’

Alexander Mordvintsev showed me two clumps of pixels on his screen. They pulsed, grew and blossomed into monarch butterflies. As the two butterflies grew, they smashed into each other, and one got the worst of it; its wing withered away. But just as it seemed like a goner, the mutilated butterfly did a kind of backflip and grew a new wing like a salamander regrowing a lost leg.

Mordvintsev, a research scientist at Google Research in Zurich, had not deliberately bred his virtual butterflies to regenerate lost body parts; it happened spontaneously. That was his first inkling, he said, that he was onto something. His project built on a decades-old tradition of creating cellular automata: miniature, chessboard-like computational worlds governed by bare-bones rules. The most famous, the Game of Life, first popularized in 1970, has captivated generations of computer scientists, biologists and physicists, who see it as a metaphor for how a few basic laws of physics can give rise to the vast diversity of the natural world.
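To make "bare-bones rules" concrete, here is a minimal sketch of one generation of the Game of Life, using Conway's standard rules: a dead cell with exactly three live neighbors is born, and a live cell with two or three live neighbors survives. Storing only the live cells in a set is one implementation choice among many; it lets the grid grow without bounds.

```python
from collections import Counter

Cell = tuple[int, int]  # (row, column)


def life_step(live: set[Cell]) -> set[Cell]:
    """Advance Conway's Game of Life by one generation on an unbounded grid."""
    # For every cell next to at least one live cell, count its live neighbors.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbors; survival on 2 or 3. Every other cell is dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


if __name__ == "__main__":
    blinker = {(1, 0), (1, 1), (1, 2)}  # a horizontal bar of three cells
    print(sorted(life_step(blinker)))   # flips to a vertical bar: [(0, 1), (1, 1), (2, 1)]
```

The "blinker" oscillates between horizontal and vertical forever, a small example of structure emerging from nothing but local neighbor counts.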

In 2020, Mordvintsev brought this into the era of deep learning by creating neural cellular automata, or NCAs. Instead of starting with rules and applying them to see what happened, his approach started with a desired pattern and figured out what simple rules would produce it. “I wanted to reverse this process: to say that here is my objective,” he said. With this inversion, he has made it possible to do “complexity engineering,” as the physicist and cellular-automata researcher Stephen Wolfram proposed in 1986 — namely, to program the building blocks of a system so that they will self-assemble into whatever form you want. “Imagine you want to build a cathedral, but you don’t design a cathedral,” Mordvintsev said. “You design a brick. What shape should your brick be that, if you take a lot of them and shake them long enough, they build a cathedral for you?”
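Mordvintsev's NCAs learn their update rule by training a neural network with gradient descent. A much cruder toy shows the same inversion of the usual process: fix the pattern you want first, then search for a rule that produces it. The sketch below swaps the neural network for exhaustive search over the 256 one-dimensional "elementary" cellular automata in Wolfram's rule numbering; the grid width, seed, step count and target pattern are arbitrary choices for illustration.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """One update of an elementary CA (Wolfram rule number 0-255), wrapping at the edges."""
    n = len(cells)
    out = []
    for i in range(n):
        # Encode the (left, center, right) neighborhood as a 3-bit index 0..7;
        # cells[i - 1] wraps to the last cell when i == 0.
        idx = (cells[i - 1] << 2) | (cells[i] << 1) | cells[(i + 1) % n]
        out.append((rule >> idx) & 1)  # bit idx of the rule number is the new state
    return out


def run(seed: list[int], rule: int, steps: int) -> list[int]:
    """Apply the same local rule to every cell, `steps` times."""
    cells = list(seed)
    for _ in range(steps):
        cells = step(cells, rule)
    return cells


def find_rules(seed: list[int], target: list[int], steps: int) -> list[int]:
    """The inverse problem: every rule whose evolution turns `seed` into `target`."""
    return [rule for rule in range(256) if run(seed, rule, steps) == list(target)]


if __name__ == "__main__":
    seed = [0] * 11
    seed[5] = 1  # a single live cell in the middle
    target = [0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0]  # the pattern we want after 4 steps
    print(find_rules(seed, target, steps=4))  # rule 90 (left XOR right) is among the matches
```

Brute force works here only because there are just 256 candidate rules; an NCA's rule is a neural network with thousands of parameters, which is why Mordvintsev's approach relies on differentiable training instead of search.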

Such a brick sounds almost magical, but biology is replete with examples of basically that. A starling murmuration or ant colony acts as a coherent whole, and scientists have postulated simple rules that, if each bird or ant follows them, explain the collective behavior. Similarly, the cells of your body play off one another to shape themselves into a single organism. NCAs are a model for that process, except that they start with the collective behavior and automatically arrive at the rules.

Alexander Mordvintsev created complex cell-based digital systems that use only neighbor-to-neighbor communication.

Courtesy of Alexander Mordvintsev

The possibilities this presents are potentially boundless. If biologists can figure out how Mordvintsev’s butterfly can so ingeniously regenerate a wing, maybe doctors can coax our bodies to regrow a lost limb. For engineers, who often find inspiration in biology, these NCAs are a potential new model for creating fully distributed computers that perform a task without central coordination. In some ways, NCAs may be innately better at problem-solving than neural networks.

Life’s Dreams

Mordvintsev was born in 1985 and grew up in the Russian city of Miass, on the eastern flanks of the Ural Mountains. He taught himself to code on a Soviet-era IBM PC clone by writing simulations of planetary dynamics, gas diffusion and ant colonies. “The idea that you can create a tiny universe inside your computer and then let it run, and have this simulated reality where you have full control, always fascinated me,” he said.

He landed a job at Google’s lab in Zurich in 2014, just as a new image-recognition technology based on multilayer, or “deep,” neural networks was sweeping the tech industry. For all their power, these systems were (and arguably still are) troublingly inscrutable. “I realized that, OK, I need to figure out how it works,” he said.

He came up with “deep dreaming,” a process that takes whatever patterns a neural network discerns in an image, then exaggerates them for effect. For a while, the phantasmagoria that resulted — ordinary photos turned into a psychedelic trip of dog snouts, fish scales and parrot feathers — filled the internet. Mordvintsev became an instant software celebrity.

Among the many scientists who reached out to him was Michael Levin of Tufts University, a leading developmental biologist. If neural networks are inscrutable, so are biological organisms, and Levin was curious whether something like deep dreaming might help to make sense of them, too. Levin’s email reawakened Mordvintsev’s fascination with simulating nature, especially with cellular automata.
