AI Insights

Fewer Hallucinations Could Mean Faster AI Adoption by Business | American Enterprise Institute

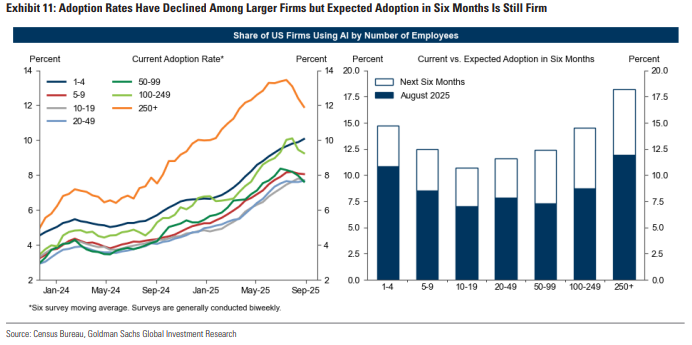

Here’s a problem if you’re hoping a technology-driven productivity upturn will soon supercharge the American economy: Artificial intelligence continues to attract capital more quickly than actual users. Goldman Sachs’s latest adoption tracker shows corporate use of generative AI crept up to 9.7 percent of US firms in the third quarter, only a modest rise from 9.2 percent three months earlier. Financial and real-estate companies are adding AI fastest, while education has pulled back. “Broadcasting and publishing firms reported the largest expected increase in AI adoption over the next six months,” the bank notes.

Investment tells a different story, however. Since ChatGPT’s debut in late 2022, analysts have boosted their end-2025 revenue outlook for chipmakers by $203 billion, roughly 0.7 percent of US GDP, and for other hardware suppliers by an additional $123 billion, or 0.4 percent of GDP. Moreover, shipments of AI-related components have climbed in the United States, Japan, and Canada, with part of the recent surge tied to tariff front-loading.

(I would add that investment bank UBS reckons companies will splurge $375 billion on AI infrastructure this year, rising to $500 billion in 2026. Software and computing gear accounted for a quarter of America’s economic growth in the second quarter, according to The New York Times. In other words, the largest technology investment cycle since the 1990s internet boom continues apace.)

The result of these two trends is a now-familiar mismatch. Companies are buying the components of AI infrastructure but remain hesitant to weave the technology into daily operations. As Goldman notes, “Recent industry surveys suggest that concerns around data security, quality, and availability remain the top barriers to adoption.”

Perhaps that insight helps explain why larger firms (those with 250 or more employees) and medium-sized firms (those with 100 to 249 workers) actually saw adoption rates tick down, even though they expected future adoption to remain strong. Also hanging over this phenomenon: A recent MIT study found that 95 percent of organizations saw no return on their AI investments.

My speculation for that result: Bigger companies especially might be wary of large language models generating hallucinations. If so, a new paper from OpenAI, “Why Language Models Hallucinate,” offers a diagnosis and possible treatment for these confident falsehoods. Like students guessing on multiple-choice exams, LLMs are rewarded for bluffing when unsure. Most evaluations give an “I don’t know” the same zero score as a wrong answer, so a plausible guess, which might turn out to be right, is never the worse bet. That misaligned incentive sustains overconfidence and undermines corporate trust. “This creates an ‘epidemic’ of penalizing uncertainty and abstention, which we argue that a small fraction of hallucination evaluations won’t suffice,” the authors explain.

So what’s the fix? The research team suggests the path forward is less about inventing new tests than about changing the rules of existing ones. These “tests” are the benchmarks and leaderboards—like multiple-choice accuracy exams—that developers use to measure whether a model is improving. Right now, they mostly reward a confident answer and give zero credit for an “I don’t know.”
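As a rough illustration of that incentive, the Python sketch below computes the expected score of guessing versus abstaining on a single question. It assumes a plain accuracy benchmark that awards 1 point for a correct answer and 0 points for both a wrong answer and an “I don’t know”; the function name and the probabilities are hypothetical, chosen only for illustration.

```python
def expected_accuracy_score(p_correct: float, abstain: bool) -> float:
    """Expected points on one question under an assumed accuracy-only rule.

    Hypothetical rule: correct answer = 1 point, wrong answer = 0 points,
    "I don't know" = 0 points. p_correct is the chance a guess is right.
    """
    if abstain:
        # Abstaining earns nothing, regardless of how unsure the model is.
        return 0.0
    # Guessing: 1 point with probability p_correct, 0 points otherwise.
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


# Compare guessing with abstaining for a few illustrative confidence levels.
for p in (0.05, 0.25, 0.5):
    guess = expected_accuracy_score(p, abstain=False)
    idk = expected_accuracy_score(p, abstain=True)
    print(f"p={p:.2f}  guess={guess:.2f}  I don't know={idk:.2f}")
```

Under this assumed rule, guessing never scores below abstaining, and it scores strictly higher whenever the chance of being right is above zero.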

As the paper puts it, “Simple modifications of mainstream evaluations can realign incentives, rewarding appropriate expressions of uncertainty rather than penalizing them.” If models were credited for saying “I don’t know” when unsure, rather than punished for it, they would have far less incentive to bluff. That shift could help turn AI into a more reliable colleague—and give firms more confidence to deploy the tools they are already buying.
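One way such a “simple modification” could work, sketched here in Python as an assumption rather than as the paper’s exact proposal, is to make wrong answers cost points. Under the hypothetical rule below, a correct answer earns 1 point, an “I don’t know” earns 0, and a wrong answer costs t/(1 - t) points for a chosen confidence threshold t. Solving p - (1 - p) * t/(1 - t) > 0 gives p > t, so answering pays off in expectation only when the model’s chance of being right exceeds t. The function names and the 75 percent threshold are illustrative.

```python
def wrong_answer_penalty(threshold: float) -> float:
    """Hypothetical penalty chosen so that answering breaks even at p == threshold."""
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    return threshold / (1.0 - threshold)


def expected_penalized_score(p_correct: float, threshold: float) -> float:
    """Expected points for answering: +1 if right, minus the penalty if wrong."""
    penalty = wrong_answer_penalty(threshold)
    return p_correct - (1.0 - p_correct) * penalty


def should_answer(p_correct: float, threshold: float) -> bool:
    """Answer only if the expected score beats the 0 points an "I don't know" earns."""
    return expected_penalized_score(p_correct, threshold) > 0.0


# With an assumed 75% threshold, a wrong answer costs 3 points.
for p in (0.5, 0.7, 0.9):
    print(f"p={p:.2f}  answer? {should_answer(p, threshold=0.75)}")
```

Setting t = 0 removes the penalty and recovers plain accuracy grading, under which any guess with a nonzero chance of being right beats abstaining; raising t makes bluffing progressively more expensive.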

Until then, corporate caution looks rational. Firms can hardly be blamed for waiting until the technology behaves a bit more like a trusted coworker than a smooth-talking guesser. Yet as a believer in the notion that today’s AI is the worst it will ever be, I think that C-suite caution will give way to a more aggressive embrace.

University of North Carolina hiring Chief Artificial Intelligence Officer

The University of North Carolina (UNC) System Office has announced it is hiring a Chief Artificial Intelligence Officer (CAIO) to provide strategic vision, executive leadership, and operational oversight for AI integration across the 17-campus system.

Reporting directly to the Chief Operating Officer, the CAIO will be responsible for identifying, planning, and implementing system-wide AI initiatives. The role is designed to enhance administrative efficiency, reduce operational costs, improve educational outcomes, and support institutional missions across the UNC system.

The position will also act as a convenor of campus-level AI leads, data officers, and academic innovators, with a brief to ensure coherent strategies, shared best practices, and scalable implementations. According to the job description, the role requires coordination and diplomacy across diverse institutions to embed consistent policies and approaches to AI.

The UNC System Office includes the offices of the President and other senior administrators of the multi-campus system. Nearly 250,000 students are enrolled across 16 universities and the NC School of Science and Mathematics.

System Office staff are tasked with executing the policies of the UNC Board of Governors and providing university-wide leadership in academic affairs, financial management, planning, student affairs, and government relations. The office also has oversight of affiliates including PBS North Carolina, the North Carolina Arboretum, the NC State Education Assistance Authority, and the University of North Carolina Press.

The new CAIO will work under a hybrid arrangement, with at least three days per week onsite at the Dillon Building in downtown Raleigh.

UNC’s move to appoint a CAIO reflects a growing trend of U.S. universities formalizing AI integration strategies at the leadership level. Last month, Rice University launched a search for an Assistant Director for AI and Education, tasked with leading faculty-focused innovation pilots and embedding responsible AI into classroom practice.

Pre-law student survey unmasks fears of artificial intelligence taking over legal roles

“We’re no longer talking about AI just writing contracts or breaking down legalese. It is reshaping the fundamental structure of legal work. Our future lawyers are smart enough to see that coming. We want to provide them this data so they can start thinking about how to adapt their skills for a profession that will look very different by the time they enter it,” said Arush Chandna, Juris Education founder, in a statement.

Juris Education noted that law schools are already integrating legal tech, ethics, and prompt engineering into curricula. The American Bar Association’s 2024 AI and Legal Education Survey revealed that 55 percent of US law schools were teaching AI-specific classes and 83 percent enabled students to learn effective AI tool use through clinics.

Juris Education’s director of advising, Victoria Inoyo, argued that AI cannot replicate human communication skills.

“While AI is reshaping the legal industry, the rise of AI is less about replacement and more about evolution. It won’t replace the empathy, judgment, and personal connection that law students and lawyers bring to complex issues,” she said. “Future law students should focus on building strong communication and interpersonal skills that set them apart in a tech-enhanced legal landscape. These are qualities AI cannot replace.”

Juris Education’s survey drew responses from 220 pre-law students. Maintaining work-life balance was cited by 21.8 percent of respondents as their primary career concern. Rising student debt set against low job security was the third most common concern, named by 17.3 percent of respondents as their biggest career fear.

Trust in Businesses’ Use of AI Improves Slightly

WASHINGTON, D.C. – About a third (31%) of Americans say they trust businesses a lot (3%) or some (28%) to use artificial intelligence responsibly. Americans’ trust in the responsible use of AI has improved since Gallup began measuring this topic in 2023, when just 21% of Americans said they trusted businesses on AI. Still, 41% say they do not trust businesses much when it comes to using AI responsibly, and 28% say they do not trust them at all, meaning more than two-thirds (69%) express little or no trust.

These findings from the latest Bentley University-Gallup Business in Society survey are based on a web survey of 3,007 U.S. adults conducted May 5-12, 2025, using the probability-based Gallup Panel.

Most Americans Neutral on Impact of AI

When asked about the net impact of AI — whether it does more harm than good — Americans are increasingly neutral about its impact, with 57% now saying it does equal amounts of harm and good. This figure is up from 50% when Gallup first asked this question in 2023. Meanwhile, 31% currently say they believe AI does more harm than good, down from 40% in 2023, while a steady 12% believe it does more good than harm.

The decline from 2023 to 2025 in the percentage of Americans who believe AI will do more harm than good is driven by improvements in attitudes among older Americans. Generally speaking, older Americans are less concerned than younger Americans when it comes to AI’s total impact on society. While skepticism about AI and its impact exists across all age groups, it tends to be higher among younger Americans.

Majority of Americans Are Concerned About AI Impact on Jobs

Those who believe AI will do more harm than good may be thinking at least partially about the technology’s impact on the job market. Nearly three-quarters (73%) of Americans believe AI will reduce the total number of jobs in the United States over the next 10 years, a share that has held steady across the three years Gallup has asked this question.

Younger Americans aged 18 to 29 are slightly more optimistic about the potential of AI to create more jobs. Fourteen percent of those aged 18 to 29 say AI will lead to an increase in the total number of jobs, compared with 9% of those aged 30 to 44, 7% of those aged 45 to 59 and 6% of those aged 60 and over.

Bottom Line

As AI becomes more common in personal and professional settings, Americans report increased confidence that businesses will use it responsibly and are more comfortable with its overall impact.

Even so, worries about AI’s effect on jobs persist, with nearly three-quarters of Americans believing the technology will reduce employment opportunities in the next decade. Younger adults are somewhat more optimistic about the potential for job creation, but they, too, remain cautious. Still, concerns about ethics, accountability and the potential unintended consequences of AI are top of mind for many Americans.

These results underscore the challenge businesses face as they deploy AI: They must not only demonstrate the technology’s benefits but also show, through transparent practices, that it will not come at the expense of workers or broader public trust. How businesses address these concerns will play a central role in shaping whether AI is ultimately embraced or resisted in the years ahead.
