AI Research

Experts discuss AI strategy, implementation and risks

The basics:

- Panelists emphasize AI risk assessment and governance

- Upskilling and training are crucial for successful AI adoption

- Transparency and data security remain top concerns

- AI strategies must stay flexible to adapt to rapid changes

Artificial intelligence is a topic that is everywhere, and NJBIZ recently hosted a panel discussion about this critical, rapidly evolving technology and the myriad ways it is affecting society.

The 90-minute discussion, moderated by NJBIZ Editor Jeffrey Kanige, featured a panel of experts comprising:

- Joshua Levy, director, Business & Commercial Litigation Group, firm general counsel and chair of the AI & Emerging Technologies Committee, Gibbons PC

- Carl Mazzanti, co-founder and president, eMazzanti Technologies

- Hrishikesh Pippadipally, CIO, Wiss

- Mike Stubna, director of software engineering and data science, Hackensack Meridian Health

- Oya Tukel, dean of the Martin Tuchman School of Management, New Jersey Institute of Technology

The discussion opened with the panelists describing how their organizations are deploying AI, both internally and for stakeholders.

‘Where do I start?’

“I actually wanted to pick up on the question of what an AI strategy looks like,” said Kanige. “The question we get constantly – and we’re getting it now – is ‘I get it – I hear what you’re all saying. We need to use AI. Where do I start?’

“I’d like to hear about what you think are the most important components of your own AI strategy – and how that might translate into other businesses – in different lines of work, if that’s possible?”

Stubna led off, calling it a good question.

“There’s a few components of our overall strategy. As I mentioned, one of the big components is a comprehensive risk assessment,” said Stubna. Before adopting any new AI-powered tool, he said, it is important to try to understand and quantify the risks associated with it.

“For example, maybe you’re using a service to summarize pages and pages of information. If you’re sending this service proprietary information, and they have the ability to save that and use it for their own purposes, that’s a risk, right? Because that might be leaked out by them. So, understanding the risk is a really big part of the strategy for every solution.”

He said that the other component is the value that the solution provides.

“So, in many cases with, especially, workflow optimization or administrative efficiencies, the value could be quantified in terms of hours saved,” Stubna explained. “Of course, vendors will tell you that their solution will save so much time – but it’s really important to do your own due diligence. We make extensive use of pilots of tools. So trying them out in a small, controlled setting, with a few users or a subset of users, to really try to quantify the value that’s coming out of a particular solution.”

AI guardrails

Pippadipally went next. He said that when thinking of an AI strategy, business leaders need to establish responsible AI governance.

“What’s your company policy? What software can we use?” the Wiss CIO asked. He noted that his firm has strict guardrails and approvals governing what can and cannot be used. “So, that’s more from the risk perspective. But moving on from there, in terms of adoption – and how do we structure this within our firm. I would probably phrase this in terms of people, process, technology.

“Technology is only as good as those using it. If you don’t know how to use it, there’s no use to it. So, we did a big round of AI upskilling and training six months ago. It’s kind of embedded constant learning. We’re already thinking what’s new from the last six months – try to incorporate it and have a well-rounded training.”

He stressed that the training process is important because it entails actually going through real use cases from the pilots that the company conducted.

“How the co-workers are using it in their day-to-day lives – and that’s the most impactful way to do it.”

Levy said, “I certainly agree that the evaluation of the risk is paramount. And I think I mentioned before, for us, confidentiality of our data and our clients’ data is front of mind – and then also scrutinizing the value. And so maybe to approach it from an angle that hasn’t been discussed: expense.

“At Gibbons, we are about a 150- to 160-attorney law firm. And we have the overhead we have. But there are firms larger than us that can afford maybe multiple highly overlapping platforms. And I’m sure there are firms smaller that have to make even more careful decisions about which technologies to invest in. It’s an ever-changing landscape, and we try and approach it with humility and understanding that – what we may be investing in now might not necessarily be what we need in the future.

“And we try to remain flexible to the changing environment.”

Securing data

Mazzanti said that there is so much “tool creep” taking place because everyone is offering something, and each vendor says it is the best. He noted that his firm is seeing client organizations where large numbers of users are already working with a particular tool.

“Maybe we should consider embracing that to the organization because your staff already voted,” Mazzanti said about what he will tell these clients. “They’ve already said that this is the one they like the most – and you already have a bunch of heavy users. Maybe we could do that.”

Turning to risk assessment and governance, Mazzanti suggested digging a moat around data.

“So, robotic process automation has been around for a long time. We’ve been feeding it data to have it go do these individual tasks. That was before the intelligent or generative concept came along. Well, when you were feeding the data, it was typically your own set in your own servers – in your own environment being run. And now that it’s generally available and you can rent, not a lot of the tools are offering to put security around your data,” he explained.

“I’m very surprised at customers who deploy without any sort of plan whatsoever,” he said, pointing to incidents that can occur with data being leaked or breached because the proper controls were not set up. “Privacy tags – super important. Start doing that around your HR, your offer letters, your salaries, things like that.”

Staying vigilant

“Oya, you’ve heard a couple of things – you’ve heard about upskilling and training. You’ve also heard about what these kids nowadays are doing. What do you think when you hear that?” Kanige asked. “How much do you upskill? Obviously, everyone coming out of NJIT will be immediately upskilled and trained – to step right into all of these jobs that we’re talking about.

“But if a company is in the market for that kind of thing? How hard is it for folks to grasp these concepts – and how hard is it to teach them how to do these kinds of things?”

Tukel noted that the next generation is very tech-savvy.

“I have to say, maybe it’s more encouraging for faculty to be in the forefront together with the students using the technology. Because sometimes we are skeptical about what a technology can do,” Tukel explained. “So, we always go back to the fundamentals of what we need to learn. I agree with Carl that there are a lot of loose ends with the new technology – up until it solidifies.

“We are at the stage where there are a lot of mistakes and a lot of problems happening with AI. And many of those, as everybody has been mentioning, is the safety and security of the property we own. The most expensive property we own is data these days. Companies are very worried about accessibility of data around the world coming back and hacking your systems tomorrow.

“And it can really make the company disappear tomorrow.”

Because of that reality, Tukel said, it is critical that workers understand the AI strategy.

“Like, why do we use this AI? Where will we use it and where will we not use it? And I think we have to be a little bit patient until the companies put bells and whistles around the AI tools that make more of us comfortable using them.”

Be transparent

From there, the conversation snaked through a number of AI topics, such as what the technology can and cannot do, and should and should not do; the risks and potential pitfalls of the technology; cybersecurity; workforce impacts; regulatory issues; inclusivity; and more, before Kanige went around to the panelists one final time.

Tukel stressed the need for transparency about how AI is used.

“I think it is OK to not look at this tool as a shortcut that covers our areas of deficiencies. It’s a tool that helps us,” said Tukel. She noted, however, that the technology still has its problems in terms of bias in the data and algorithm. “But declaring that this was prepared by me – but using AI tools – in your documentation for public-facing writings and news you are putting out, can definitely put you in a better position.”

“[J]ust know that if you come to Hackensack Meridian Health, that we’re on the forefront of using AI,” said Stubna. “And you can have a lot of confidence that it’s something that we take extremely seriously – ensuring that this is used in a responsible way that really focuses on patient care first and foremost.”

“At Wiss, we have heavy transparency,” said Pippadipally. “We try to use AI to enrich the product of client outcomes as much as we can. Anything, any financial issues that you guys have, feel free to reach out.”

“If we had to leave someone with parting words here – it would be, choose a good partner that’s done this – binds your vision and values and know you support to walk hand-in-hand with your suppliers,” said Mazzanti, stressing service delivery. “My organization is incredibly partner friendly. We work with your team to evolve with your best interest at heart. You’ve heard from some great panelists here today.

“We welcome the dialogue. And if we can help what you’re already doing go from good to great or great to awesome, we welcome the opportunity to do that.”

Stay flexible

Levy closed it out.

“I suppose I’ll piggyback on to Carl, what you said – find the right partners. My own approach to this is, simply, keeping my eyes open and trying to stay humble in this ever-changing environment. Even if I’m here, even if all of us are here, because in some ways we’re experts in this arena – I don’t know that I think anyone truly has a perfect understanding of where any of our industries are going to be in five years, 10 years, 20 years.

“And we just have to be flexible and keep that in mind – and work with the right folks to navigate the future.”

Clanker! This slur against robots is all over the internet – but is it offensive?

Name: Clanker.

Age: 20 years old.

Appearance: Everywhere, but mostly on social media.

It sounds a bit insulting. It is, in fact, a slur.

What kind of slur? A slur against robots.

Because they’re metal? While it’s sometimes used to denigrate actual robots – including delivery bots and self-driving cars – it’s increasingly used to insult AI chatbots and platforms such as ChatGPT.

I’m new to this – why would I want to insult AI? For making up information, peddling outright falsehoods, generating “slop” (lame or obviously fake content) or simply not being human enough.

Does the AI care that you’re insulting it? That’s a complex and hotly debated philosophical question, to which the answer is “no”.

Then why bother? People are taking out their frustrations on a technology that is becoming pervasive, intrusive and may well threaten their future employment.

Clankers, coming over here, taking our jobs! That’s the idea.

Where did this slur originate? First used to refer pejoratively to battle androids in a Star Wars game in 2005, clanker was later popularised in the Clone Wars TV series. From there, it progressed to Reddit, memes and TikTok.

And is it really the best we can do, insult-wise? Popular culture has spawned other anti-robot slurs – there’s “toaster” from Battlestar Galactica, and “skin-job” from Blade Runner – but “clanker” seems to have won out for now.

It seems like a stupid waste of time, but I guess it’s harmless enough. You say that, but many suggest using “clanker” could help to normalise actual bigotry.

Oh, come on now. Popular memes and spoof videos tend to treat “clanker” as being directly analogous to a racial slur – suggesting a future where we all harass robots as if they were an oppressed minority.

So what? They’re just clankers. “Naturally, when we trend in that direction, it does play into those tropes of how people have treated marginalised communities before,” says linguist Adam Aleksic.

I’m not anti-robot; I just wouldn’t want my daughter to marry one. Can you hear how that sounds?

I have a feeling we’re going to be very embarrassed about all this in 10 years. Probably. Some people argue that, by insulting AI, we’re crediting it with a level of humanity it doesn’t warrant.

That would certainly be my assessment. However, the “Roko’s basilisk” thought experiment posits that a future artificial superintelligence might punish all those who failed to help it flourish in the first place.

I guess calling it a clanker would count. We may end up apologising to our robot overlords for past hate crimes.

Or perhaps they’ll see the funny side of all this? Assuming the clankers develop a sense of humour some day.

Do say: “The impulse to coin this slur says more about our anxieties than it does about the technology itself.”

Don’t say: “Some of my best friends are clankers.”

Most ninth-graders use AI: survey

By Rachel Lin and Lery Hiciano / Staff reporter, with staff writer

About 69 percent of ninth-graders use artificial intelligence (AI), most commonly for homework, creating images or videos, and chatting, according to a survey released yesterday.

The poll was conducted by the National Academy of Educational Research (NAER) and Academia Sinica as part of the Taiwan Assessment of Student Achievement Longitudinal Study.

Asked about AI, 94.2 percent of the students knew what generative AI was, although 31 percent said that they had never used it, the survey showed.

By frequency of use, 6.8 percent of students used generative AI daily, 3.9 percent five to six times per week, 12.2 percent three to four times per week and 46 percent once or twice a week, the survey found.
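The frequency breakdown can be cross-checked against the headline figure with simple arithmetic. A minimal worked sum, assuming all four shares are measured against the same base of surveyed ninth-graders:

$$6.8 + 3.9 + 12.2 + 46.0 = 68.9 \approx 69 = 100 - 31$$

The total lines up with both the roughly 69 percent of students who use AI and the 31 percent who said they had never used it, which suggests the frequency shares are percentages of all respondents rather than of users alone.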

About 53.2 percent of ninth-graders said that teachers had taught them how to use generative AI, while 46.8 percent said their teachers had not, suggesting that adoption of the technology has room to grow.

Students said they used AI for homework, translation, research and content creation, indicating that the technology has already become a part of their studies and daily habits across academic and creative interests.

This could reflect how younger, digitally native groups are more amenable to new technologies, and points to a growing trend of using AI in a balanced way, the NAER said.

The gap between those who are aware of AI and those who use it suggests that most are not becoming advanced or heavy users, it said.

The finding that about half of students had been taught how to use AI suggests that teachers recognize how important the technology is becoming, the NAER said, adding that the wide array of ways in which students use AI tools also shows the technology’s wide-ranging capability and potential.

AI Research

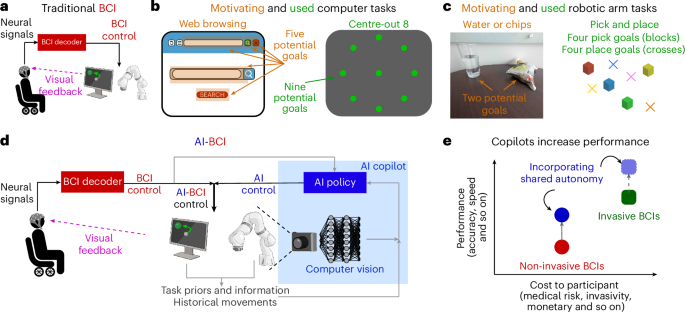

Brain–computer interface control with artificial intelligence copilots

