AI Research

Does AI really boost productivity at work? Research shows gains don’t come cheap or easy

Artificial intelligence (AI) is being touted as a way to boost lagging productivity growth.

The AI productivity push has some powerful multinational backers: the tech companies that make AI products and the consulting firms that sell AI-related services. Governments are interested too.

Next week, the federal government will hold a roundtable on economic reform, where AI will be a key part of the agenda.

However, the evidence that AI actually enhances productivity is far from clear.

To learn more about how AI is working and being procured in real organizations, we are interviewing senior bureaucrats in the Victorian Public Service. Our research is ongoing, but results from the first 12 participants are showing some shared key concerns.

Our interviewees are bureaucrats who buy, use and administer AI services. They told us increasing productivity through AI requires difficult, complex, and expensive organizational groundwork. The results are hard to measure, and AI use may create new risks and problems for workers.

Introducing AI can be slow and expensive

Public service workers told us introducing AI tools to existing workflows can be slow and expensive. Finding time and resources to research products and retrain staff presents a real challenge.

Not all organizations approach AI the same way. We found well-funded entities can afford to test different AI uses for “proofs of concept.” Smaller ones with fewer resources struggle with the costs of implementing and maintaining AI tools.

In the words of one participant: “It’s like driving a Ferrari on a smaller budget […] Sometimes those solutions aren’t fit for purpose for those smaller operations, but they’re bloody expensive to run, they’re hard to support.”

‘Data is the hard work’

Making an AI system useful may also involve a lot of groundwork.

Off-the-shelf AI tools such as Copilot and ChatGPT can make some relatively straightforward tasks easier and faster. Extracting information from large sets of documents or images is one example, and transcribing and summarizing meetings is another. (Though our findings suggest staff may feel uncomfortable with AI transcription, particularly in internal and confidential situations.)

But more complex use cases, such as call center chatbots or internal information retrieval tools, involve running an AI model over internal data describing business details and policies. Good results will depend on high-quality, well-structured data, and organizations may be liable for mistakes.

However, few organizations have invested enough in the quality of their data to make commercial AI products work as promised.

Without this foundational work, AI tools won’t perform as advertised. As one person told us, “data is the hard work.”

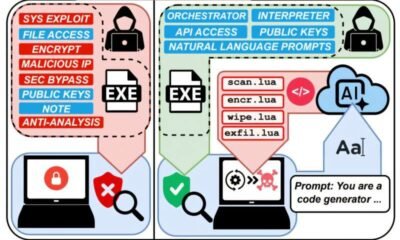

Privacy and cybersecurity risks are real

Using AI creates complex data flows between an organization and servers controlled by giant multinational tech companies. Large AI providers promise these data flows comply with laws about, for instance, keeping organizational and personal data in Australia and not using it to train their systems.

However, we found users were cautious about the reliability of these promises. There was also considerable concern about how products could introduce new AI functions without organizations knowing. Using those AI capabilities may create new data flows without the necessary risk assessments or compliance checking.

If organizations handle sensitive information or data that could create safety risks if leaked, vendors and products must be monitored to ensure they comply with existing rules. There are also risks if workers use publicly available AI tools such as ChatGPT, which don’t guarantee confidentiality for users.

How AI is really used

We found AI has increased productivity on “low-skill” tasks such as taking meeting notes and customer service, or work done by junior workers. Here AI can help smooth the outputs of workers who may have poor language skills or are learning new tasks.

But maintaining quality and accountability typically requires human oversight of AI outputs. The workers with less skill and experience, who would benefit most from AI tools, are also the least able to oversee and double-check AI output.

In areas where the stakes and risks are higher, the amount of human oversight necessary may undermine whatever productivity gains are made.

What’s more, we found when jobs become primarily about overseeing an AI system, workers may feel alienated and less satisfied with their experience of work.

We found AI is often used for questionable purposes, too. Workers may use AI to take shortcuts, without understanding the nuances of compliance within organizational guidelines.

Not only are there data security and privacy concerns, but using AI to review and extract information can introduce other ethical risks, such as magnifying existing human bias.

In our research, we saw how those risks prompted organizations to use still more AI, for enhanced workplace surveillance and other forms of workplace control. A recent Victorian government inquiry recognized that these methods may be harmful to workers.

Productivity is tricky to measure

There’s no easy way for an organization to measure changes in productivity due to AI. We found organizations often rely on feedback from a few skilled workers who are good at using AI, or on claims from vendors.

One interviewee told us:

“I’m going to use the word ‘research’ very loosely here, but Microsoft did its own research about the productivity gains organizations can achieve by using Copilot, and I was a little surprised by how high those numbers came back.”

Organizations may want AI to facilitate staff cuts or increase throughput.

But these measures don’t consider changes in the quality of products or services delivered to customers. They also don’t capture how the workplace experience changes for remaining workers, or the considerable costs that primarily go to multinational consultancies and tech firms.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Citation:

Does AI really boost productivity at work? Research shows gains don’t come cheap or easy (2025, August 15) retrieved 15 August 2025 from https://techxplore.com/news/2025-08-ai-boost-productivity-gains-dont.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Who is Shawn Shen? The Cambridge alumnus and ex-Meta scientist offering $2M to poach AI researchers

Shawn Shen, co-founder and Chief Executive Officer of the artificial intelligence (AI) startup Memories.ai, has made headlines for offering compensation packages worth up to $2 million to attract researchers from top technology companies. In a recent interview with Business Insider, Shen explained that many scientists are leaving Meta, the parent company of Facebook, due to constant reorganisations and shifting priorities.

“Meta is constantly doing reorganizations. Your manager and your goals can change every few months. For some researchers, it can be really frustrating and feel like a waste of time,” Shen told Business Insider, adding that this is a key reason why researchers are seeking roles at startups. He also cited Meta Chief Executive Officer Mark Zuckerberg’s philosophy that “the biggest risk is not taking any risks” as a motivation for his own move into entrepreneurship.

With Memories.ai, a company developing AI capable of understanding and remembering visual data, Shen is aiming to build a niche team of elite researchers. His company has already recruited Chi-Hao Wu, a former Meta research scientist, as Chief AI Officer, and is in talks with other researchers from Meta’s Superintelligence Lab as well as Google DeepMind.

From full scholarships to Cambridge classrooms

Shen’s academic journey is rooted in engineering, supported consistently by merit-based scholarships. He studied at Dulwich College from 2013 to 2016 on a full scholarship, completing his A-Level qualifications.

He then pursued higher education at the University of Cambridge, where he was awarded full scholarships throughout. Shen earned a Bachelor of Arts (BA) in Engineering (2016–2019), followed by a Master of Engineering (MEng) at Trinity College (2019–2020). He later continued at Cambridge as a Meta PhD Fellow, completing his Doctor of Philosophy (PhD) in Engineering between 2020 and 2023.

Early career: Internships in finance and research

Alongside his academic pursuits, Shen gained early experience through internships and analyst roles in finance. He worked as a Quantitative Research Summer Analyst at Killik & Co in London (2017) and as an Investment Banking Summer Analyst at Morgan Stanley in Shanghai (2018).

Shen also interned as a Research Scientist at the Computational and Biological Learning Lab at the University of Cambridge (2019), building the foundations for his transition into advanced AI research.

From Meta’s Reality Labs to academia

In the final stretch of his PhD, Shen joined Meta (Reality Labs Research) in Redmond, Washington, as a Research Scientist (2022–2024). His time at Meta exposed him to cutting-edge work in generative AI, but also to the frustrations of frequent corporate restructuring. This experience eventually drove him toward building his own company.

In April 2024, Shen began his academic career as an Assistant Professor at the University of Bristol, before launching Memories.ai in October 2024.

Betting on talent with $2M offers

Explaining his company’s aggressive hiring packages, Shen told Business Insider: “It’s because of the talent war that was started by Mark Zuckerberg. I used to work at Meta, and I speak with my former colleagues often about this. When I heard about their compensation packages, I was shocked — it’s really in the tens of millions range. But it shows that in this age, AI researchers who make the best models and stand at the frontier of technology are really worth this amount of money.”

Shen noted that Memories.ai is looking to recruit three to five researchers in the next six months, followed by up to ten more within a year. The company is prioritising individuals willing to take a mix of equity and cash, with Shen emphasising that these recruits would be treated as founding members rather than employees.

By betting heavily on talent, Shen believes Memories.ai will be in a strong position to secure additional funding and establish itself in the competitive AI landscape.

His bold $2 million offers may raise eyebrows, but they also underline a larger truth: in today’s technology race, the fiercest competition is not for customers or capital but for talent.

JUPITER: Europe’s First Exascale Supercomputer Powers AI and Climate Research

The Jupiter supercomputer at the Jülich Research Centre, Germany, September 5, 2025.

Getty Images/INA FASSBENDER/AFP

As reported by the European Commission’s press service

On September 5, the Jülich Research Centre in Germany held the official inauguration of JUPITER, the first supercomputer in Europe to surpass the exaflop performance threshold. The system can perform more than one quintillion operations per second.

According to the EU, JUPITER runs entirely on renewable energy sources and features advanced cooling and heat disposal systems. It also topped the Green500 global energy-efficiency ranking.

The supercomputer is located on a site covering more than 2,300 square meters and comprises about 50 modular containers. It is currently the fourth-fastest supercomputer in the world.

JUPITER can run climate and weather models at kilometer-scale resolution, allowing more accurate forecasts of extreme events, from heat waves to floods.

Role in the European AI ecosystem and industrial developments

The system will also form the backbone of the future European AI factory JAIF (JUPITER AI Factory), which will train large language models and other generative technologies.

JUPITER, a joint project of the EU and Germany under the EuroHPC programme, represents an investment of about 500 million euros. It is part of a broader strategy to build a network of AI gigafactories that will give industry and science the capacity to develop new models and technologies.

It is expected that the deployment of JUPITER will strengthen European research-industrial initiatives and enhance the EU’s competitiveness on the global stage in the field of artificial intelligence and scientific developments.

PH kicks off 2025 Development Policy Research Month on AI in governance

The Philippines cannot rely on new technology alone to thrive in the age of artificial intelligence. Strong governance policies must come first. This was the central call of the 2025 Development Policy Research Month (DPRM), which opened on Sept. 1 with a push for AI rules that reflect national realities.

“Policy research provides the guardrails that help governments adopt technology responsibly,” said Dr. Philip Arnold Tuano, president of the Philippine Institute for Development Studies (PIDS). Without such guardrails, he warned, the benefits of AI may never outweigh the risks.

CONFERENCE HIGHLIGHT: The 2025 Development Policy Research Month kicked off with a push for AI rules that reflect the country’s realities. (Photo from PIDS)

Established under Proclamation 247 (2002), DPRM highlights the role of policy research in shaping evidence-based strategies. This year’s theme, “Reimagining Governance in the Age of AI,” underscores that while AI offers tools for efficiency and transparency, policies must come first to address risks such as digital exclusion, bias, cybersecurity threats, and workforce displacement.

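PIDS, as lead coordinator, works with an interagency steering committee that includes the Bangko Sentral ng Pilipinas (BSP), the Civil Service Commission (CSC), the Department of Budget and Management (DBM), the Department of the Interior and Local Government (DILG), legislative policy offices, the Philippine Information Agency (PIA), the Presidential Management Staff (PMS), and now the Department of Science and Technology, which joins for the first time given its role in AI research and governance.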

The highlight is the 11th Annual Public Policy Conference on Sept. 18 at New World Hotel Makati, featuring global experts. Activities nationwide will amplify the campaign, supported by the hashtag #AIforGoodGovernance.

Learn more at https://dprm.pids.gov.ph.
